Table of Contents

Full outline: https://blog.youkuaiyun.com/Engineer_LU/article/details/135149485

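Preface

- When trying to understand a real-time operating system (RTOS), reading its source code is the most direct and thorough route. From task creation to scheduler start-up, scheduling, and inter-task communication, the goal is not merely to "read code" but to build a dynamic, runnable mental model of FreeRTOS. With that model you can use FreeRTOS more confidently and efficiently, and apply the same precise resource management and understanding of concurrency to any complex embedded design. Having worked through the whole article, you should be able to write a small RTOS by hand.

- The article ties each part back to the kernel core and moves from simple to detailed. There is a lot of detail, because the aim is to explain why each piece exists. Source excerpts follow the main code path (without breaking the links between details). Code guarded by the core configuration macros is assumed to be enabled; debug-related and static-allocation macros are not covered.

- Topics are covered in this order: concepts, task creation, task scheduling, memory management, data structures, and task communication. Each walkthrough follows the order of the source code.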

一 : FreeRTOS 概念

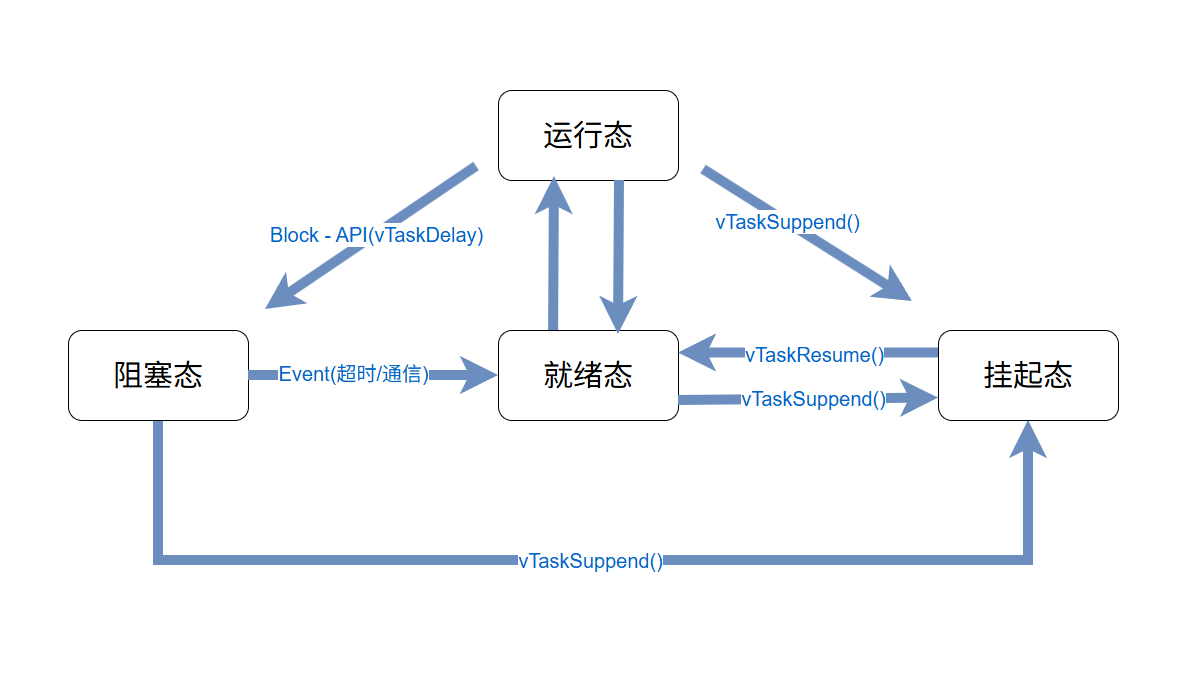

- FreeRTOS有四种状态,分别为运行态,就绪态,阻塞态,挂起态;

- FreeRTOS有五种链表:就绪链表,阻塞链表,挂起链表,缓存链表,回收链表;其中前三个对应状态的运行流程,后两者为缓存操作和删除任务时所用

- FreeRTOS为抢占式实时系统,所以核心调度逻辑高优先级任务优先执行,拥有抢占低优先级任务的权限;因此高优先级任务需要释放掉CPU执行权后,低优先级任务才可以执行,若是优先级相等,则是通过优先级就绪链表中的index遍历执行,就是传统意义上的时间片轮流执行

- FreeRTOS通常通过vTaskDelay,任务通信等主动切换任务,通过Tick中断被动切换任务(阻塞态的任务通过SysTick中断查询来唤醒)

- FreeRTOS的最终任务切换逻辑在PendSV中断里实现

- FreeRTOS写了两套API,分别用于任务与中断,例如队列通信有任务版本,中断版本(不阻塞)

- FreeRTOS的TCB任务控制块主要依靠xState和xEvent链表项放在各链表中进行调度,而链表项中的pvOwner指向当前TCB,pxContainer指向具体的链表,通过这两个在链表中可以关联到每个任务,这样通过管理链表来管理任务调度

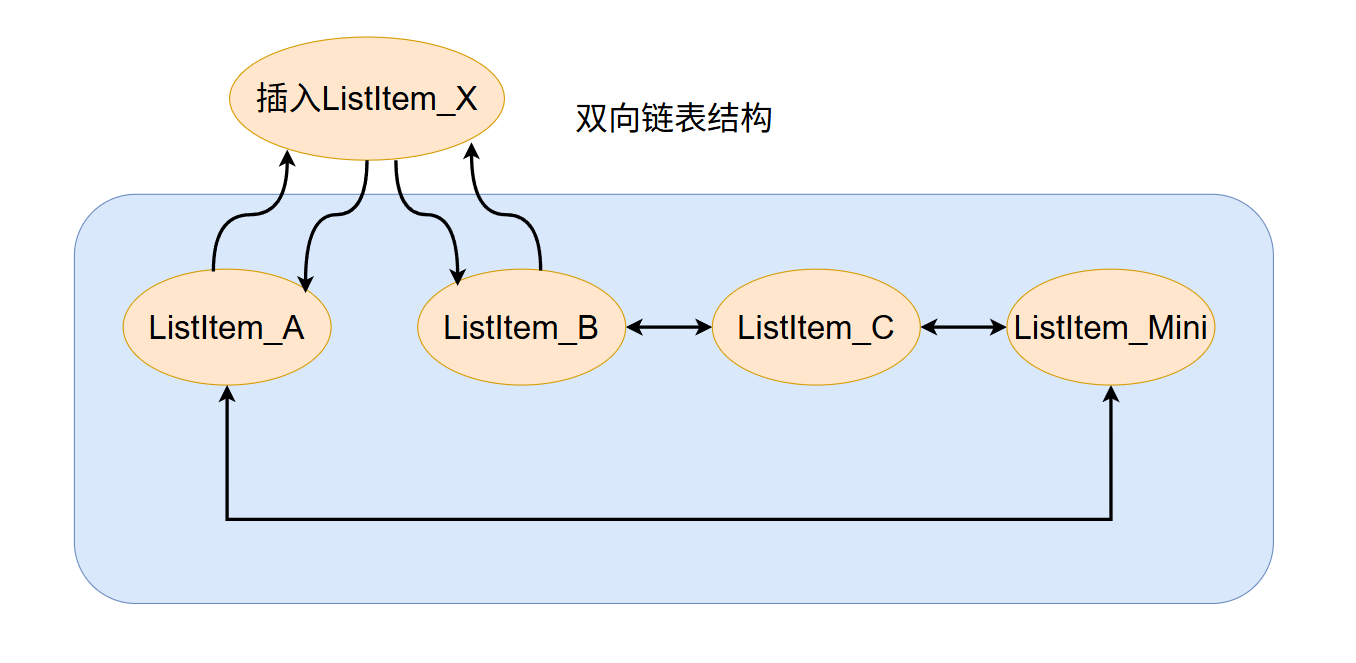

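- FreeRTOS lists are doubly linked. The end node (xListEnd) normally acts as a sentinel, and inserted list items sit before the end node.

- FreeRTOS ships five heap implementations, heap_1 to heap_5 (described in the memory management chapter).

- Task communication normally uses queues, event groups, task notifications, and stream buffers. Completing a communication operation may also trigger a task switch. Queues additionally have the concept of queue sets. The overall purpose is to give each task its own independent space and to build multithreaded logic through thread-safe communication APIs.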

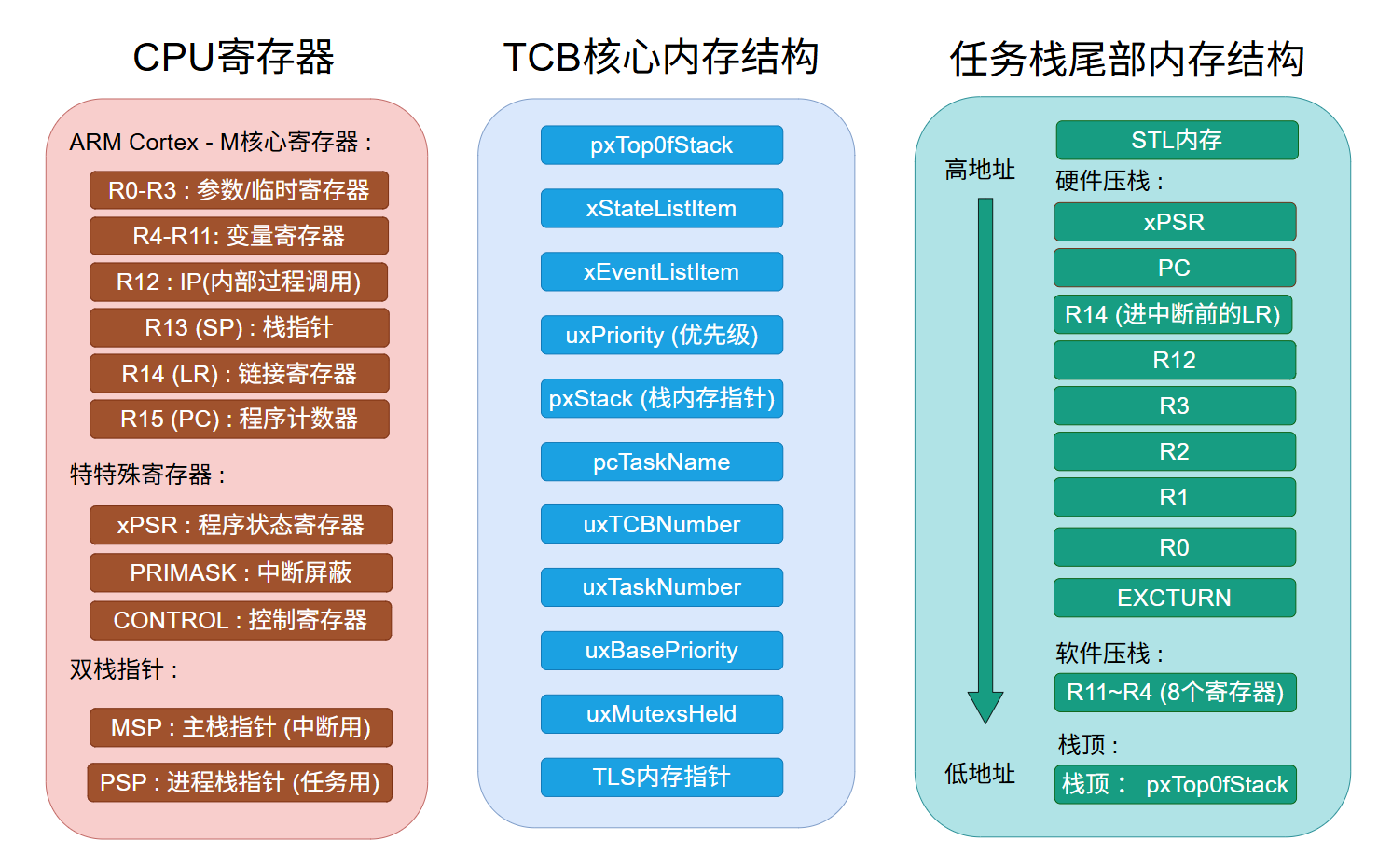

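- Core registers and the task stack:

In the memory layout at the tail of a task's stack there is one reserved word (4 bytes) after EXC_RETURN, which is a characteristic of Arm Cortex-M cores when built with MDK. The special-purpose core registers CONTROL (control register), PRIMASK (interrupt mask), BASEPRI (priority mask), and FAULTMASK (fault mask) are described here.

- The CONTROL register is 32 bits wide, but at most 4 bits are implemented; the rest are reserved (read as zero, writes ignored). It configures the privilege level of Thread mode and selects the stack pointer, which is the basis for task isolation and mode switching in an operating system such as FreeRTOS.

- PRIMASK is the priority mask register that Arm Cortex-M cores (including the one in the STM32H750) use to switch interrupts off and on quickly. It can be thought of as a master interrupt switch with one refinement: setting it raises the current execution priority to 0 (the highest configurable priority), so every interrupt with a configurable priority is blocked while NMI and HardFault can still run. It protects short, critical code sections that must not be interrupted by ordinary interrupts.

- BASEPRI is a programmable priority mask register. It is more flexible than PRIMASK: you set a priority threshold, and only interrupts whose priority is equal to or lower than the threshold are masked, while higher-priority interrupts are still serviced. This is the key to nestable critical sections that preserve real-time behavior.

- FAULTMASK is the fault mask register and masks the most. Setting it to 1 masks all interrupts and exceptions (including HardFault) except NMI, by raising the current execution priority to -1. It does not change the privilege level, and it can only be written from privileged code. It is mainly used inside fault handlers so that recovery or shutdown can run safely after a serious error.

- Be careful when operating on the core registers directly. If you must write one yourself for a special reason (for example, in heavily optimized start-up code), keep the reserved bits at zero, and after modifying CONTROL immediately execute DSB and ISB barrier instructions.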

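| CONTROL | Name | Description |
|---|---|---|
| bit0 | nPRIV | Thread-mode privilege level. 0: Thread mode is privileged and can access all processor resources; the FreeRTOS kernel normally runs this way. 1: Thread mode is unprivileged (user mode) and access to some system registers and memory regions is restricted; FreeRTOS user tasks can be configured to run this way. |
| bit1 | SPSEL | Stack pointer selection. 0: Thread mode uses the main stack pointer (MSP). 1: Thread mode uses the process stack pointer (PSP); this is the typical multitasking configuration, with each task owning its own stack. |
| bit2 | FPCA | Floating-point context active (cores with an FPU, such as Cortex-M4/M7). 0: the current context has not used the FPU. 1: the current context has used the FPU. On a task switch this bit determines whether the floating-point registers must be saved and restored, which avoids unnecessary work. |
| bit3 | SFPA | Secure floating-point context active (only on TrustZone-capable cores with an FPU, such as Cortex-M33/M55). On the STM32H750 (Cortex-M7) this bit is reserved and has no effect. |
| [31:4] | - | Reserved. Must be written as 0. |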

    /* Using the CONTROL register */

    // Thread mode privileged, using MSP
    __set_CONTROL(0x00);

    // Thread mode unprivileged, using PSP (nPRIV=1, SPSEL=1);
    // unprivileged tasks in the FreeRTOS MPU ports run this way
    __set_CONTROL(0x03);
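To make the bit layout in the CONTROL table concrete, here is a small host-side sketch that decodes a CONTROL value into its fields. The `control_fields_t` type and the `decode_control` function are hypothetical names introduced for illustration; they are not part of CMSIS or FreeRTOS, and the code works on a plain integer rather than the real register.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper type: one flag per CONTROL bit described in the table. */
typedef struct {
    bool npriv; /* bit0: 1 = Thread mode is unprivileged */
    bool spsel; /* bit1: 1 = Thread mode uses PSP */
    bool fpca;  /* bit2: 1 = floating-point context active */
    bool sfpa;  /* bit3: secure FP context (reserved on Cortex-M7) */
} control_fields_t;

/* Decode a raw CONTROL value; bits [31:4] are reserved and ignored here. */
static control_fields_t decode_control(uint32_t control)
{
    control_fields_t f;
    f.npriv = (control & (UINT32_C(1) << 0)) != 0U;
    f.spsel = (control & (UINT32_C(1) << 1)) != 0U;
    f.fpca  = (control & (UINT32_C(1) << 2)) != 0U;
    f.sfpa  = (control & (UINT32_C(1) << 3)) != 0U;
    return f;
}
```

For example, `decode_control(0x03)` reports both `npriv` and `spsel` set, matching the second `__set_CONTROL` call above.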

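| PRIMASK | Name | Description |
|---|---|---|
| bit0 | PM | Priority mask bit. 0: no masking (default); all interrupts are serviced according to their priority. 1: masks every interrupt with a configurable priority; only NMI and HardFault can still run. |
| [31:1] | - | Reserved. Must be written as 0. |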

    /* PRIMASK register */

    // Operating on the PRIMASK register
    __disable_irq(); // equivalent to __set_PRIMASK(1);
    __enable_irq();  // equivalent to __set_PRIMASK(0);

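| BASEPRI | Name | Description |
|---|---|---|
| [7:0] | PRI_MASK | Priority mask field (on Cortex-M7 parts such as the STM32H750, typically only the upper 4 of the 8 bits, [7:4], are implemented). 0: no masking (same effect as PRIMASK=0; this is the default). Non-zero value N: masks all interrupts whose priority value is greater than or equal to N. Note the direction: on Arm, a smaller value means a higher priority. BASEPRI=0x10 therefore masks interrupts with priority 0x10, 0x20, 0x30, and so on (everything equal to or lower in priority than 0x10), while an interrupt with priority 0x00 (higher) still gets through. |
| [31:8] | - | Reserved. Must be written as 0. |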

    /* Using the BASEPRI register */

    // FreeRTOS writes configMAX_SYSCALL_INTERRUPT_PRIORITY into BASEPRI.
    // Illustration only: assume the maximum syscall priority level is 5, which
    // becomes 0x50 once shifted into the implemented upper bits (__NVIC_PRIO_BITS = 4).
    #define portDISABLE_INTERRUPTS() __set_BASEPRI( ( 5UL << (8 - __NVIC_PRIO_BITS) ) )
    #define portENABLE_INTERRUPTS() __set_BASEPRI( 0 )

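| FAULTMASK | Name | Description |
|---|---|---|
| bit0 | FM | Fault mask bit. 0: faults are not masked (default). 1: masks all interrupts and all exceptions except NMI (including HardFault, MemManage, and so on). The processor then runs at an effective priority of -1. |
| [31:1] | - | Reserved. Must be written as 0. |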

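    /* FAULTMASK register */
    void HardFault_Handler(void) {
        __asm volatile ("cpsid f"); // mask everything; run at the highest priority

        // Critical work: save registers, stack contents, etc. to a safe location

        __asm volatile ("cpsie f"); // restore, so other high-priority faults (if any) can be handled

        // Possibly enter an error-handling loop or attempt recovery
        while(1);
    }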

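Additional notes:

cpsie i / cpsid i: clear/set PRIMASK, which enables/disables all maskable interrupts except NMI and HardFault.

cpsie f / cpsid f: clear/set FAULTMASK, which enables/disables all interrupts and exceptions (including most faults); NMI still cannot be masked.

cpsie i is equivalent to __enable_irq()

cpsid i is equivalent to __disable_irq()

cpsie f is equivalent to __enable_fault_irq()

cpsid f is equivalent to __disable_fault_irq()

Interrupt priority value < BASEPRI value: the interrupt's actual priority is higher, so it is allowed through. This is the VIP lane for urgent events that must be handled under all circumstances.

Interrupt priority value >= BASEPRI value: the interrupt's actual priority is lower (or equal), so it is masked. This is the ordinary lane, which the system may hold off temporarily to protect critical code.

Assume the system uses 4 interrupt priority bits (a common STM32 configuration), so priorities range from 0 (highest) to 15 (lowest).

The threshold is set with __set_BASEPRI(0x50); (0x50 is the 8-bit form; its upper 4 bits hold priority value 5).

The resulting state is:

Interrupt A, priority value 2 (0x20): 2 < 5, higher priority, runs normally and is not masked.

Interrupt B, priority value 5 (0x50): 5 == 5, equal to the threshold, masked and cannot be serviced.

Interrupt C, priority value 8 (0x80): 8 > 5, lower priority, masked and cannot be serviced.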
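The masking rule in the example above can be checked with a few lines of host-side C. This is only a sketch of the comparison the hardware performs, assuming 4 implemented priority bits; `PRIO_BITS`, `encode_priority`, and `is_masked_by_basepri` are names made up for this illustration and do not exist in CMSIS or FreeRTOS.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption for this sketch: 4 implemented priority bits, as in the example. */
#define PRIO_BITS 4U

/* Convert a priority level (0..15) to its 8-bit register encoding (upper bits used). */
static uint8_t encode_priority(uint8_t level)
{
    return (uint8_t)(level << (8U - PRIO_BITS));
}

/* True when an interrupt with the given encoded priority is masked by basepri.
 * BASEPRI == 0 disables masking; otherwise priority values >= BASEPRI are masked. */
static bool is_masked_by_basepri(uint8_t basepri, uint8_t irq_priority)
{
    if (basepri == 0U) {
        return false;
    }
    return irq_priority >= basepri;
}
```

With `basepri = 0x50`, interrupt A (level 2) is not masked, while B (level 5) and C (level 8) are, which matches the three cases listed above.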

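II: Source Code: Task Creation

Task creation API: xTaskCreate(myTask, "myTask", 512, NULL, 1, &myTaskHandler);

Task creation Step 1: on success, the new task is added to the ready list

Task creation Step 2: dynamically allocate memory for the TCB and the task stack

Task creation Step 3: initialize the TCB members and the top of stack

Task creation Step 4: add the new task to the ready list for its priority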


1. A successfully created task is added to the ready list

Task creation Step 1: on success, the task is added to the ready list. The source of xTaskCreate below does two things:

1.1: It first allocates memory for the task control block (TCB) by calling prvCreateTask; the address of the allocated TCB is returned into pxNewTCB.

1.2: If the allocation succeeded, the list item of the pxNewTCB task control block is inserted into the ready list to wait for scheduling (analyzed in Step 4).

    BaseType_t xTaskCreate( TaskFunction_t pxTaskCode,
                            const char * const pcName,
                            const configSTACK_DEPTH_TYPE uxStackDepth,
                            void * const pvParameters,
                            UBaseType_t uxPriority,
                            TaskHandle_t * const pxCreatedTask )
    {
        TCB_t * pxNewTCB;
        BaseType_t xReturn;

        traceENTER_xTaskCreate( pxTaskCode, pcName, uxStackDepth, pvParameters, uxPriority, pxCreatedTask );

        pxNewTCB = prvCreateTask( pxTaskCode, pcName, uxStackDepth, pvParameters, uxPriority, pxCreatedTask );

        if( pxNewTCB != NULL )
        {
            #if ( ( configNUMBER_OF_CORES > 1 ) && ( configUSE_CORE_AFFINITY == 1 ) )
            {
                /* Set the task's affinity before scheduling it. */
                pxNewTCB->uxCoreAffinityMask = configTASK_DEFAULT_CORE_AFFINITY;
            }
            #endif

            prvAddNewTaskToReadyList( pxNewTCB );
            xReturn = pdPASS;
        }
        else
        {
            xReturn = errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY;
        }

        traceRETURN_xTaskCreate( xReturn );

        return xReturn;
    }

[A] The six parameters of task creation:

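- pxTaskCode // task function pointer: entry point of the task body;

- pcName // task name string: used for identification when debugging;

- uxStackDepth // stack depth, in words rather than bytes: the stack holds the context saved on a task switch, the task's local variables, and the core registers pushed during function calls;

- pvParameters // task parameter: passed to the task function in R0;

- uxPriority // task priority: a larger value means a higher priority;

- pxCreatedTask // task handle: receives a pointer to the TCB;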

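2. Memory allocation

Task creation Step 2: dynamically allocate memory for the TCB and the task stack. prvCreateTask does three things (the excerpt shows one of its two allocation orders; on ports whose stack grows downward, such as Cortex-M, the stack is allocated before the TCB).

2.1: Allocate memory for the TCB;

    pxNewTCB = ( TCB_t * ) pvPortMalloc( sizeof( TCB_t ) );

2.2: Allocate memory for the task stack (the TCB's pxStack member points to this memory);

    pxNewTCB->pxStack = ( StackType_t * ) pvPortMallocStack( ( ( ( size_t ) uxStackDepth ) * sizeof( StackType_t ) ) );

2.3: Call prvInitialiseNewTask to initialize the task; the next step analyzes how the initialization works;

    prvInitialiseNewTask( pxTaskCode,    /* task entry function */
                          pcName,        /* task name */
                          uxStackDepth,  /* stack depth, in words */
                          pvParameters,  /* task parameter */
                          uxPriority,    /* task priority */
                          pxCreatedTask, /* task handle (pointer to pointer) */
                          pxNewTCB,      /* the TCB allocated above (single pointer) */
                          NULL           /* memory regions (MPU settings), normally NULL */
                          );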

3. Initialize the TCB members and the top of stack

Task creation Step 3: initialize the TCB members and the top of stack; a walkthrough of prvInitialiseNewTask


3.1: Fill the allocated task stack with tskSTACK_FILL_BYTE (0xA5). If the stack overflows, inspecting this memory shows how far the fill pattern has been overwritten;

    ( void ) memset( pxNewTCB->pxStack,
                     ( int ) tskSTACK_FILL_BYTE, /* 0xA5 */
                     ( size_t ) uxStackDepth * sizeof( StackType_t ) );

3.2: Point pxTopOfStack at the initial top of stack. The stack is full-descending (it grows toward lower addresses), and the address is aligned to 8 bytes;

    pxTopOfStack = &( pxNewTCB->pxStack[ uxStackDepth - ( configSTACK_DEPTH_TYPE ) 1 ] );
    pxTopOfStack = ( StackType_t * ) ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack ) & ( ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) ) );

    #if ( configRECORD_STACK_HIGH_ADDRESS == 1 )
    {
        /* Record the highest address as the end of the stack; once the registers
         * have been pushed, the top of stack and the end of stack differ. */
        pxNewTCB->pxEndOfStack = pxTopOfStack;
    }
    #endif /* configRECORD_STACK_HIGH_ADDRESS */
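The alignment step in 3.2 can be tried on a host machine. The sketch below assumes 8-byte alignment (mask 0x7, mirroring what portBYTE_ALIGNMENT_MASK is on Cortex-M) and uses the hypothetical names `ALIGNMENT_MASK` and `initial_top_of_stack`; it is an illustration of the masking arithmetic, not the kernel code.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumption: 8-byte alignment, so the mask is 0x7. */
#define ALIGNMENT_MASK ((uintptr_t)0x7)

/* Return the highest 8-byte-aligned word address inside a stack of
 * depth_words words: start at the last word, then round the address down. */
static uint32_t *initial_top_of_stack(uint32_t *stack, size_t depth_words)
{
    uintptr_t top = (uintptr_t)&stack[depth_words - 1U];
    return (uint32_t *)(top & ~ALIGNMENT_MASK);
}
```

Because the stack array is only guaranteed to be 4-byte aligned, rounding down moves the pointer by at most one word, so the result is either the last word of the array or the one before it.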

3.3: Copy the task name into the TCB;

    if( pcName != NULL )
    {
        for( x = ( UBaseType_t ) 0; x < ( UBaseType_t ) configMAX_TASK_NAME_LEN; x++ )
        {
            pxNewTCB->pcTaskName[ x ] = pcName[ x ];

            /* Don't copy all configMAX_TASK_NAME_LEN if the string is shorter than
             * configMAX_TASK_NAME_LEN characters just in case the memory after the
             * string is not accessible (extremely unlikely). */
            if( pcName[ x ] == ( char ) 0x00 )
            {
                break;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }

        /* Ensure the name string is terminated in the case that the string length
         * was greater or equal to configMAX_TASK_NAME_LEN. */
        pxNewTCB->pcTaskName[ configMAX_TASK_NAME_LEN - 1U ] = '\0';
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }

3.4: Clamp the priority, then initialize the priority and the base priority (the base priority is used by mutex priority inheritance);

    if( uxPriority >= ( UBaseType_t ) configMAX_PRIORITIES )
    {
        uxPriority = ( UBaseType_t ) configMAX_PRIORITIES - ( UBaseType_t ) 1U;
    }

    pxNewTCB->uxPriority = uxPriority;
    pxNewTCB->uxBasePriority = uxPriority;

3.5: Initialize the task's xStateListItem and xEventListItem list items. At this point the initialization only sets pxContainer to NULL; later pxContainer points to whichever list holds the item (a ready list, a delayed list, and so on);

    vListInitialiseItem( &( pxNewTCB->xStateListItem ) );
    vListInitialiseItem( &( pxNewTCB->xEventListItem ) );

3.6: Set pvOwner of both list items to the task being created. Through pxContainer and pvOwner you can therefore tell which list an item is in and which task it belongs to. The event list item's value stores the priority inverted (configMAX_PRIORITIES - uxPriority), so a smaller value means a higher priority, the opposite of task priorities;

    listSET_LIST_ITEM_OWNER( &( pxNewTCB->xStateListItem ), pxNewTCB );
    listSET_LIST_ITEM_VALUE( &( pxNewTCB->xEventListItem ), ( TickType_t ) configMAX_PRIORITIES - ( TickType_t ) uxPriority );
    listSET_LIST_ITEM_OWNER( &( pxNewTCB->xEventListItem ), pxNewTCB );

3.7: Initialize the thread-local storage (TLS) block. This memory sits at the top of the allocated stack space; its size, given by _tls_size(), depends on the platform;

    configINIT_TLS_BLOCK( pxNewTCB->xTLSBlock, pxTopOfStack );

3.8: Push the initial register frame. Starting from the current top of stack and moving downward, the code writes xPSR, PC (the task entry address passed in by the application), and LR (set to the address of prvTaskExitError), skips R12, R3, R2, and R1, writes R0 (the task parameter pointer) and EXC_RETURN, and then moves the pointer down another 8 words for R11 to R4. In total 17 words are set up by hand, so the task's top of stack ends up 17 words below the TLS block and points at the R4 slot. The address after this push is stored as pxNewTCB->pxTopOfStack. Once pxNewTCB is initialized, the handle argument comes into play: the caller passed the address of its handle, which is a pointer to a pointer, so the function can make the caller's handle point to the new TCB.

    pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxTaskCode, pvParameters );

    StackType_t * pxPortInitialiseStack( StackType_t * pxTopOfStack,
                                         TaskFunction_t pxCode,
                                         void * pvParameters )
    {
        pxTopOfStack--;
        *pxTopOfStack = portINITIAL_XPSR;                                    /* xPSR */
        pxTopOfStack--;
        *pxTopOfStack = ( ( StackType_t ) pxCode ) & portSTART_ADDRESS_MASK; /* PC */
        pxTopOfStack--;
        *pxTopOfStack = ( StackType_t ) prvTaskExitError;                    /* LR */

        /* Save code space by skipping register initialisation. */
        pxTopOfStack -= 5;                                                   /* R12, R3, R2 and R1. */
        *pxTopOfStack = ( StackType_t ) pvParameters;                        /* R0 */

        /* A save method is being used that requires each task to maintain its
         * own exec return value. */
        pxTopOfStack--;
        *pxTopOfStack = portINITIAL_EXC_RETURN;

        pxTopOfStack -= 8; /* R11, R10, R9, R8, R7, R6, R5 and R4. */

        return pxTopOfStack;
    }
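The 17-word layout described in 3.8 can be reproduced on a host machine to see where each register lands. The sketch below mirrors the pointer arithmetic of pxPortInitialiseStack on a plain array. `init_stack_frame` is a hypothetical name, addresses are passed as 32-bit integers because the target is a 32-bit MCU, and the two constants are the values the Cortex-M4F port uses for portINITIAL_XPSR and portINITIAL_EXC_RETURN (treat them as assumptions for other ports).

```c
#include <stdint.h>

/* Assumed values, taken from the Cortex-M4F port. */
#define INITIAL_XPSR       UINT32_C(0x01000000) /* Thumb bit set */
#define INITIAL_EXC_RETURN UINT32_C(0xFFFFFFFD) /* Thread mode, PSP, no FPU frame */

/* Build the 17-word initial frame below `top` and return the new top of
 * stack, which points at the R4 slot. */
static uint32_t *init_stack_frame(uint32_t *top, uint32_t entry,
                                  uint32_t exit_handler, uint32_t param)
{
    top--;    *top = INITIAL_XPSR;                  /* xPSR */
    top--;    *top = entry & UINT32_C(0xFFFFFFFE);  /* PC, bit 0 cleared */
    top--;    *top = exit_handler;                  /* LR */
    top -= 5; *top = param;                         /* skip R12, R3, R2, R1; write R0 */
    top--;    *top = INITIAL_EXC_RETURN;            /* per-task EXC_RETURN */
    top -= 8;                                       /* R11..R4, left uninitialized */
    return top;
}
```

Counting up from the returned pointer, words 0 to 7 are R4 to R11, word 8 is EXC_RETURN, word 9 is R0, and words 14, 15, and 16 are LR, PC, and xPSR.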

4. Add the new task to the ready list for its priority

Task creation Step 4: add the new task to the ready list for its priority, prvAddNewTaskToReadyList( pxNewTCB );

4.1: Enter a critical section so that the task lists cannot be touched by an interrupt or a task switch while they are being updated;

    taskENTER_CRITICAL(); /* mask interrupts so that no task switch can occur */

4.2: uxCurrentNumberOfTasks counts the tasks that have been created;

    uxCurrentNumberOfTasks = ( UBaseType_t ) ( uxCurrentNumberOfTasks + 1U );

4.3: Decide what pxCurrentTCB points to. If this is the first task ever created, initialize the task lists (the ready lists, the two delayed lists, the pending-ready list, the waiting-termination list, and the suspended list) and reset the delayed-list pointers. If the scheduler is not yet running and the new task's priority is equal to or higher than the current task's, the new task becomes the current task;

    if( pxCurrentTCB == NULL )
    {
        /* There are no other tasks, or all the other tasks are in
         * the suspended state - make this the current task. */
        pxCurrentTCB = pxNewTCB;

        if( uxCurrentNumberOfTasks == ( UBaseType_t ) 1 )
        {
            /* This is the first task to be created so do the preliminary
             * initialisation required. We will not recover if this call
             * fails, but we will report the failure. */
            prvInitialiseTaskLists();
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    else
    {
        /* If the scheduler is not already running, make this task the
         * current task if it is the highest priority task to be created
         * so far. */
        if( xSchedulerRunning == pdFALSE )
        {
            if( pxCurrentTCB->uxPriority <= pxNewTCB->uxPriority )
            {
                pxCurrentTCB = pxNewTCB;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }

4.4: uxCurrentNumberOfTasks versus uxTaskNumber: the former is the global count of existing tasks; the latter is a running counter whose value is stored in each TCB (uxTCBNumber) as that task's number;

    uxTaskNumber++;
    pxNewTCB->uxTCBNumber = uxTaskNumber;

4.5: Add the task to the ready list. uxTopReadyPriority records which priorities currently have ready tasks: the new task's priority is recorded with ( uxReadyPriorities ) |= ( 1UL << ( uxPriority ) ), which is how FreeRTOS finds the highest-priority ready task for preemptive scheduling;

    prvAddTaskToReadyList( pxNewTCB );

    #define prvAddTaskToReadyList( pxTCB ) \
        do { \
            traceMOVED_TASK_TO_READY_STATE( pxTCB ); \
            taskRECORD_READY_PRIORITY( ( pxTCB )->uxPriority ); \
            listINSERT_END( &( pxReadyTasksLists[ ( pxTCB )->uxPriority ] ), &( ( pxTCB )->xStateListItem ) ); \
            tracePOST_MOVED_TASK_TO_READY_STATE( pxTCB ); \
        } while( 0 )

4.6: Because FreeRTOS is a multi-priority preemptive kernel, the ready lists form an array with one list per priority. The list for the task's priority is passed in, and the task's xStateListItem is inserted immediately before that list's current index item (pxIndex). Since the list is doubly linked, the new item's pxNext and pxPrevious are set first, then the item that used to precede pxIndex and pxIndex itself are relinked so the list includes the new item, and finally the list's item count is incremented;

    #define listINSERT_END( pxList, pxNewListItem ) \
        do { \
            ListItem_t * const pxIndex = ( pxList )->pxIndex; \
            \
            ( pxNewListItem )->pxNext = pxIndex; \
            ( pxNewListItem )->pxPrevious = pxIndex->pxPrevious; \
            \
            pxIndex->pxPrevious->pxNext = ( pxNewListItem ); \
            pxIndex->pxPrevious = ( pxNewListItem ); \
            \
            /* Remember which list the item is in. */ \
            ( pxNewListItem )->pxContainer = ( pxList ); \
            \
            ( ( pxList )->uxNumberOfItems ) = ( UBaseType_t ) ( ( ( pxList )->uxNumberOfItems ) + 1U ); \
        } while( 0 )
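The pointer updates in listINSERT_END are easier to follow on a stripped-down list. The sketch below is a simplified stand-in for the FreeRTOS list, not the kernel's own types: `node_t`, `list_t`, `list_init`, `list_insert_end`, and `list_next_owner` are hypothetical names, with `owner`, `container`, `index`, and `end` playing the roles of pvOwner, pxContainer, pxIndex, and xListEnd.

```c
#include <stddef.h>

struct list;

typedef struct node {
    struct node *next;
    struct node *prev;
    void *owner;            /* role of pvOwner */
    struct list *container; /* role of pxContainer */
} node_t;

typedef struct list {
    size_t count;
    node_t *index; /* round-robin cursor, role of pxIndex */
    node_t end;    /* sentinel, role of xListEnd */
} list_t;

static void list_init(list_t *l)
{
    l->count = 0;
    l->end.next = &l->end;
    l->end.prev = &l->end;
    l->end.owner = NULL;
    l->end.container = l;
    l->index = &l->end;
}

/* Insert n immediately before the cursor, as listINSERT_END does. */
static void list_insert_end(list_t *l, node_t *n)
{
    node_t *idx = l->index;
    n->next = idx;
    n->prev = idx->prev;
    idx->prev->next = n;
    idx->prev = n;
    n->container = l;
    l->count++;
}

/* Advance the cursor, skip the sentinel, and return the owner
 * (the round-robin walk the scheduler uses; the list must not be empty). */
static void *list_next_owner(list_t *l)
{
    l->index = l->index->next;
    if (l->index == &l->end) {
        l->index = l->end.next;
    }
    return l->index->owner;
}
```

Inserting three nodes and calling `list_next_owner` four times visits the owners in insertion order and then wraps around to the first one, which is the behavior equal-priority tasks see under time slicing.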

4.7: After the task has been added to the ready list, taskYIELD_ANY_CORE_IF_USING_PREEMPTION( pxNewTCB ) is called. If the newly readied task has a higher priority than the current task, a task switch is requested immediately through portYIELD_WITHIN_API(); the switch code is shown below. This completes the task creation flow.

    taskYIELD_ANY_CORE_IF_USING_PREEMPTION( pxNewTCB );

    #define taskYIELD_ANY_CORE_IF_USING_PREEMPTION( pxTCB ) \
        do { \
            if( pxCurrentTCB->uxPriority < ( pxTCB )->uxPriority ) \
            { \
                portYIELD_WITHIN_API(); \
            } \
            else \
            { \
                mtCOVERAGE_TEST_MARKER(); \
            } \
        } while( 0 )

    #define portYIELD_WITHIN_API portYIELD

    #define portYIELD() \
        { \
            /* Set a PendSV to request a context switch. */ \
            portNVIC_INT_CTRL_REG = portNVIC_PENDSVSET_BIT; \
            \
            /* Barriers are normally not required but do ensure the code is completely \
             * within the specified behaviour for the architecture. */ \
            __dsb( portSY_FULL_READ_WRITE ); \
            __isb( portSY_FULL_READ_WRITE ); \
        }

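III: Source Code: Scheduler Start-up and Task Switching

Scheduler API: vTaskStartScheduler();

Scheduling Step 1: create the idle task

Scheduling Step 2: create the timer task

Scheduling Step 3: configure the scheduler

Scheduling Step 4: SVC exception: start the first task

Scheduling Step 5: PendSV exception: task switch

Scheduling Step 6: SysTick interrupt: system tick

Scheduling Step 7: vTaskDelay: task blocking (voluntary switch)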


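1. Create the idle task

Scheduling Step 1: create the idle task

1.1: The idle task's name defaults to "IDLE";

1.2: The function pointer is set to the static idle task function; pxIdleTaskFunction = prvIdleTask;

1.3: configMINIMAL_STACK_SIZE is set in FreeRTOSConfig.h (130 words in the configuration used here) and portPRIVILEGE_BIT defaults to 0, so the idle task has the lowest priority. xIdleTaskHandles is a static global array of task handles (TCB pointers). The task is created with:

    xReturn = xTaskCreate( pxIdleTaskFunction,
                           cIdleName,
                           configMINIMAL_STACK_SIZE,
                           ( void * ) NULL,
                           portPRIVILEGE_BIT, /* In effect ( tskIDLE_PRIORITY | portPRIVILEGE_BIT ), but tskIDLE_PRIORITY is zero. */
                           &xIdleTaskHandles[ xCoreID ] );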

2. Create the timer task

Scheduling Step 2: create the timer task


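2.1: Enter the critical section

    taskENTER_CRITICAL();

2.2: Initialize the timer lists (like the delayed lists, there is a current timer list and an overflow timer list)

    vListInitialise( &xActiveTimerList1 );
    vListInitialise( &xActiveTimerList2 );
    pxCurrentTimerList = &xActiveTimerList1;
    pxOverflowTimerList = &xActiveTimerList2;

2.3: Create the queue. The timer task is essentially a queue-servicing task dedicated to receiving timer commands, and its blocking receive with a timeout is what implements the timing logic

    xTimerQueue = xQueueCreate( ( UBaseType_t ) configTIMER_QUEUE_LENGTH, ( UBaseType_t ) sizeof( DaemonTaskMessage_t ) );

2.4: Register the queue in the queue registry to aid debugging. A kernel-aware debugger can then show the queue by its debug name (for example "0x20001000: TmrQ"), which is easier to read

    vQueueAddToRegistry( xTimerQueue, "TmrQ" );

2.5: Create the task. The timer task's name defaults to "Tmr Svc"; its stack depth is configTIMER_TASK_STACK_DEPTH (configMINIMAL_STACK_SIZE * 2 in the configuration used here); its priority is configTIMER_TASK_PRIORITY, defined here as the highest priority, configMAX_PRIORITIES - 1

    xReturn = xTaskCreate( prvTimerTask,
                           configTIMER_SERVICE_TASK_NAME,
                           configTIMER_TASK_STACK_DEPTH,
                           NULL,
                           ( ( UBaseType_t ) configTIMER_TASK_PRIORITY ) | portPRIVILEGE_BIT,
                           &xTimerTaskHandle );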

3. Configure the scheduler

Scheduling Step 3: configure the scheduler


3.1: Disable interrupts

    portDISABLE_INTERRUPTS();

3.2: Set up the TLS block of the current task (each thread has its own TLS data)

    configSET_TLS_BLOCK( pxCurrentTCB->xTLSBlock );

3.3: Set the next-unblock time to the maximum value (the tick handler checks this value first before scanning the blocked tasks), set the scheduler-running flag, and initialize xTickCount to its starting value (0 by default)

    xNextTaskUnblockTime = portMAX_DELAY;
    xSchedulerRunning = pdTRUE;
    xTickCount = ( TickType_t ) configINITIAL_TICK_COUNT;

3.4: Port-level start-up: set the PendSV and SysTick priorities, configure the SysTick rate, reset the critical-section nesting count, enable the FPU, enable lazy FPU stacking, and enter the start-up assembly routine

    BaseType_t xPortStartScheduler( void )
    {
        /* Make PendSV and SysTick the lowest priority interrupts. */
        portNVIC_SHPR3_REG |= portNVIC_PENDSV_PRI;  /* PendSV at the lowest priority */
        portNVIC_SHPR3_REG |= portNVIC_SYSTICK_PRI; /* SysTick at the lowest priority */

        /* Start the timer that generates the tick ISR. Interrupts are disabled
         * here already. */
        vPortSetupTimerInterrupt(); /* SysTick rate, clock source (core clock or core clock / 8), interrupt enable, counter enable */

        /* Initialise the critical nesting count ready for the first task. */
        uxCriticalNesting = 0; /* nesting depth of critical sections; 0 means no nesting */

        /* Ensure the VFP is enabled - it should be anyway.
         * Enables the floating-point unit (FPU), mainly by setting the CPACR
         * register. This must happen before the first floating-point instruction. */
        prvEnableVFP();

        /* Lazy save always.
         * Sets the ASPEN and LSPEN bits in FPCCR to enable lazy stacking globally,
         * so the hardware only handles floating-point state for a context that has
         * actually used the FPU and defers the save until it is needed. This reduces
         * the switch overhead for tasks that never use the FPU. The setting is
         * global and applies to all tasks. */
        *( portFPCCR ) |= portASPEN_AND_LSPEN_BITS;

        /* Start the first task. */
        prvStartFirstTask();

        /* Should not get here! */
        return 0;
    }

3.5: Load the first entry of the vector table into MSP to reset the main stack pointer to its initial top, set the CONTROL register to 0 (see the register section above), enable IRQs with cpsie i (clears PRIMASK), enable faults with cpsie f (clears FAULTMASK), execute the data and instruction barriers, and trigger the SVC exception with svc 0 (in svc x, x ranges from 0 to 255 and identifies the requested service)

    __asm void prvStartFirstTask( void )
    {
    /* *INDENT-OFF* */
        PRESERVE8

        /* Use the NVIC offset register to locate the stack. */
        ldr r0, =0xE000ED08
        ldr r0, [ r0 ]
        ldr r0, [ r0 ]
        /* Set the msp back to the start of the stack. */
        msr msp, r0

        /* Clear the bit that indicates the FPU is in use in case the FPU was used
         * before the scheduler was started - which would otherwise result in the
         * unnecessary leaving of space in the SVC stack for lazy saving of FPU
         * registers. */
        mov r0, #0
        msr control, r0
        /* Globally enable interrupts. */
        cpsie i
        cpsie f
        dsb
        isb
        /* Call SVC to start the first task. */
        svc 0
        nop
        nop
    /* *INDENT-ON* */
    }

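4. SVC exception: start the first task

Scheduling Step 4: SVC exception: start the first task

4.1: Fetch the top of stack from pxCurrentTCB and, using R0 as the pointer, restore R4 to R11 and R14 of the first task from it; PSP is then set to R0. The pair mov r0, #0 and msr basepri, r0 clears BASEPRI so that no interrupt is masked. bx r14 starts the exception return sequence: the EXC_RETURN value in R14 selects between Handler and Thread mode and between MSP and PSP, and here it selects Thread mode with PSP. When the SVC handler exits, the hardware unstacks the rest of the frame starting at the R0 slot that PSP points to. After unstacking, PC holds the address saved in the new task's frame, so execution continues at that address.

    __asm void vPortSVCHandler( void )
    {
    /* *INDENT-OFF* */
        PRESERVE8

        /* Get the location of the current TCB. */
        ldr r3, =pxCurrentTCB
        ldr r1, [ r3 ]
        ldr r0, [ r1 ]
        /* Pop the core registers. */
        ldmia r0!, { r4-r11, r14 }
        msr psp, r0
        isb
        mov r0, #0
        msr basepri, r0
        bx r14
    /* *INDENT-ON* */
    }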

5. PendSV exception: task switch

Scheduling Step 5: PendSV exception: task switch

5.1: On exception entry the hardware automatically stacks xPSR, PC, LR, R12, and R3 to R0 onto the task's stack and loads EXC_RETURN into R14. R0 is loaded from PSP and the TCB that pxCurrentTCB points to is fetched. Depending on bit 4 of EXC_RETURN, the FPU registers s16 to s31 are pushed by software, followed by R4 to R11 and R14 (EXC_RETURN is saved because each task keeps its own value), and the resulting top of stack is stored in the TCB. Interrupts are then masked through BASEPRI and vTaskSwitchContext is called to select the next TCB. The new TCB's pxTopOfStack is loaded into R0, R4 to R11 and R14 are popped from it, and depending on R14 (EXC_RETURN) the FPU registers s16 to s31 are restored. PSP is set to R0, which now points at the hardware-stacked part of the frame, and bx r14 starts the exception return sequence. On exit the hardware automatically unstacks the remaining registers of the new task from PSP.

    __asm void xPortPendSVHandler( void )
    {
        extern uxCriticalNesting;
        extern pxCurrentTCB;
        extern vTaskSwitchContext;

    /* *INDENT-OFF* */
        PRESERVE8

        mrs r0, psp
        isb
        /* Get the location of the current TCB. */
        ldr r3, =pxCurrentTCB
        ldr r2, [ r3 ]

        /* Is the task using the FPU context? If so, push high vfp registers. */
        tst r14, #0x10
        it eq
        vstmdbeq r0!, {s16-s31}

        /* Save the core registers. */
        stmdb r0!, {r4-r11, r14 }

        /* Save the new top of stack into the first member of the TCB. */
        str r0, [ r2 ]

        /* Push r0 and r3, mask interrupts, synchronize, then switch the TCB. */
        stmdb sp!, { r0, r3 }
        mov r0, #configMAX_SYSCALL_INTERRUPT_PRIORITY
        cpsid i
        msr basepri, r0
        dsb
        isb
        cpsie i
        bl vTaskSwitchContext
        mov r0, #0
        msr basepri, r0
        ldmia sp!, { r0, r3 }

        /* r3 holds the address of pxCurrentTCB; [r3] dereferences it to get
         * the address of the TCB it currently points to. */
        ldr r1, [ r3 ]
        /* The first member of the TCB is the top of stack; load it into r0 so
         * the task state can be restored from it. */
        ldr r0, [ r1 ]

        /* Pop the core registers. */
        ldmia r0!, { r4-r11, r14 }

        /* Is the task using the FPU context? If so, pop the high vfp registers
         * too. */
        tst r14, #0x10
        it eq
        vldmiaeq r0!, { s16-s31 }

        msr psp, r0
        isb
        #ifdef WORKAROUND_PMU_CM001 /* XMC4000 specific errata */
            #if WORKAROUND_PMU_CM001 == 1
                push { r14 }
                pop { pc }
                nop
            #endif
        #endif

        bx r14
    /* *INDENT-ON* */
    }

5.2: The core of vTaskSwitchContext is the code below. It uses a count-leading-zeros instruction (31 minus the CLZ of the ready-priority bitmap) to obtain the highest priority that currently has a ready task, then follows pvOwner in that priority's ready list to reach the TCB. pxCurrentTCB is thereby switched to the next TCB in that ready list, after which PendSV continues and restores the task state from that TCB's top of stack.

    #define portGET_HIGHEST_PRIORITY( uxTopPriority, uxReadyPriorities ) \
        uxTopPriority = ( 31UL - ( uint32_t ) __clz( ( uxReadyPriorities ) ) )

    #define taskSELECT_HIGHEST_PRIORITY_TASK() \
        do { \
            UBaseType_t uxTopPriority; \
            \
            /* Find the highest priority list that contains ready tasks. */ \
            portGET_HIGHEST_PRIORITY( uxTopPriority, uxTopReadyPriority ); \
            configASSERT( listCURRENT_LIST_LENGTH( &( pxReadyTasksLists[ uxTopPriority ] ) ) > 0 ); \
            listGET_OWNER_OF_NEXT_ENTRY( pxCurrentTCB, &( pxReadyTasksLists[ uxTopPriority ] ) ); \
        } while( 0 )

    #define listGET_OWNER_OF_NEXT_ENTRY( pxTCB, pxList ) \
        do { \
            List_t * const pxConstList = ( pxList ); \
            /* Increment the index to the next item and return the item, ensuring */ \
            /* we don't return the marker used at the end of the list. */ \
            ( pxConstList )->pxIndex = ( pxConstList )->pxIndex->pxNext; \
            if( ( void * ) ( pxConstList )->pxIndex == ( void * ) &( ( pxConstList )->xListEnd ) ) \
            { \
                ( pxConstList )->pxIndex = ( pxConstList )->xListEnd.pxNext; \
            } \
            ( pxTCB ) = ( pxConstList )->pxIndex->pvOwner; \
        } while( 0 )
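The ready-priority bitmap and the leading-zero lookup can be exercised without the `__clz` intrinsic. The sketch below uses a portable loop in its place; `clz32`, `record_ready_priority`, `reset_ready_priority`, and `highest_ready_priority` are hypothetical names that only mirror the roles of the port macros, and the bitmap is assumed to be non-zero when queried (in FreeRTOS the idle task guarantees that).

```c
#include <stdint.h>

/* Portable count-leading-zeros, standing in for the __clz intrinsic.
 * Returns 32 for an input of zero. */
static uint32_t clz32(uint32_t x)
{
    uint32_t n = 0U;
    if (x == 0U) {
        return 32U;
    }
    while ((x & UINT32_C(0x80000000)) == 0U) {
        x <<= 1;
        n++;
    }
    return n;
}

/* Set the bit for a priority that now has at least one ready task. */
static void record_ready_priority(uint32_t *bitmap, uint32_t priority)
{
    *bitmap |= (UINT32_C(1) << priority);
}

/* Clear the bit when the last ready task of that priority leaves. */
static void reset_ready_priority(uint32_t *bitmap, uint32_t priority)
{
    *bitmap &= ~(UINT32_C(1) << priority);
}

/* Highest set bit = highest ready priority; bitmap must be non-zero. */
static uint32_t highest_ready_priority(uint32_t bitmap)
{
    return 31U - clz32(bitmap);
}
```

With priorities 0, 3, and 7 recorded, the lookup yields 7; clearing bit 7 makes it yield 3. On Cortex-M the same lookup is a single CLZ instruction, which is why the port prefers the bitmap over scanning the ready-list array.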

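6. SysTick interrupt: system tick

Scheduling Step 6: SysTick interrupt: system tick

6.1: Below is the core of xPortSysTickHandler, the SysTick interrupt service routine that provides the FreeRTOS system tick. On entry it calls vPortRaiseBASEPRI() to raise the interrupt mask level quickly and protect the critical section. It then calls xTaskIncrementTick() to advance the tick count and check whether a delayed task has expired or time slicing requires a task switch. If a switch is needed, it sets the PendSV pending bit by writing portNVIC_INT_CTRL_REG, which defers the comparatively expensive context switch to the low-priority PendSV exception so that the SysTick handler itself runs and exits quickly. Finally it restores the interrupt mask with vPortClearBASEPRIFromISR(). This mechanism underlies time-sliced scheduling, blocking timeouts, and the system time base in FreeRTOS.

    void xPortSysTickHandler( void )
    {
        /* The SysTick runs at the lowest interrupt priority, so when this interrupt
         * executes all interrupts must be unmasked. There is therefore no need to
         * save and then restore the interrupt mask value as its value is already
         * known - therefore the slightly faster vPortRaiseBASEPRI() function is used
         * in place of portSET_INTERRUPT_MASK_FROM_ISR(). */
        vPortRaiseBASEPRI();
        traceISR_ENTER();
        {
            /* Increment the RTOS tick. */
            if( xTaskIncrementTick() != pdFALSE )
            {
                traceISR_EXIT_TO_SCHEDULER();

                /* A context switch is required. Context switching is performed in
                 * the PendSV interrupt. Pend the PendSV interrupt. */
                portNVIC_INT_CTRL_REG = portNVIC_PENDSVSET_BIT;
            }
            else
            {
                traceISR_EXIT();
            }
        }

        vPortClearBASEPRIFromISR();
    }

6.2: The code below is xTaskIncrementTick, the core function that advances the system tick and checks whether scheduling is needed. When the scheduler is not suspended, it increments the tick counter xTickCount and switches the delayed lists if the counter wraps to 0. It then checks whether the tick has reached or passed xNextTaskUnblockTime, the time at which the next task should unblock. If so, it walks the delayed task list, moves every expired task out of the Blocked state and into its ready list, and decides whether a task switch is needed: with preemption, by comparing the woken task's priority with the current task's; with time slicing, by checking whether the current priority's ready list holds more than one task. If the scheduler is suspended, it increments the pended tick count instead. The function returns a boolean that tells the SysTick handler whether to pend PendSV for the actual context switch.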

BaseType_t xTaskIncrementTick( void ) { TCB_t * pxTCB; TickType_t xItemValue; BaseType_t xSwitchRequired = pdFALSE; #if ( configUSE_PREEMPTION == 1 ) && ( configNUMBER_OF_CORES > 1 ) BaseType_t xYieldRequiredForCore[ configNUMBER_OF_CORES ] = { pdFALSE }; #endif /* #if ( configUSE_PREEMPTION == 1 ) && ( configNUMBER_OF_CORES > 1 ) */ traceENTER_xTaskIncrementTick(); /* Called by the portable layer each time a tick interrupt occurs. * Increments the tick then checks to see if the new tick value will cause any * tasks to be unblocked. */ traceTASK_INCREMENT_TICK( xTickCount ); /* Tick increment should occur on every kernel timer event. Core 0 has the * responsibility to increment the tick, or increment the pended ticks if the * scheduler is suspended. If pended ticks is greater than zero, the core that * calls xTaskResumeAll has the responsibility to increment the tick. */ if( uxSchedulerSuspended == ( UBaseType_t ) 0U ) { /* Minor optimisation. The tick count cannot change in this * block. */ const TickType_t xConstTickCount = xTickCount + ( TickType_t ) 1; /* Increment the RTOS tick, switching the delayed and overflowed * delayed lists if it wraps to 0. */ xTickCount = xConstTickCount; if( xConstTickCount == ( TickType_t ) 0U ) { taskSWITCH_DELAYED_LISTS(); } else { mtCOVERAGE_TEST_MARKER(); } /* See if this tick has made a timeout expire. Tasks are stored in * the queue in the order of their wake time - meaning once one task * has been found whose block time has not expired there is no need to * look any further down the list. */ if( xConstTickCount >= xNextTaskUnblockTime ) { for( ; ; ) { if( listLIST_IS_EMPTY( pxDelayedTaskList ) != pdFALSE ) { /* The delayed list is empty. Set xNextTaskUnblockTime * to the maximum possible value so it is extremely * unlikely that the * if( xTickCount >= xNextTaskUnblockTime ) test will pass * next time through. 
*/ xNextTaskUnblockTime = portMAX_DELAY; break; } else { /* The delayed list is not empty, get the value of the * item at the head of the delayed list. This is the time * at which the task at the head of the delayed list must * be removed from the Blocked state. */ /* MISRA Ref 11.5.3 [Void pointer assignment] */ /* More details at: https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/MISRA.md#rule-115 */ /* coverity[misra_c_2012_rule_11_5_violation] */ pxTCB = listGET_OWNER_OF_HEAD_ENTRY( pxDelayedTaskList ); xItemValue = listGET_LIST_ITEM_VALUE( &( pxTCB->xStateListItem ) ); if( xConstTickCount < xItemValue ) { /* It is not time to unblock this item yet, but the * item value is the time at which the task at the head * of the blocked list must be removed from the Blocked * state - so record the item value in * xNextTaskUnblockTime. */ xNextTaskUnblockTime = xItemValue; break; } else { mtCOVERAGE_TEST_MARKER(); } /* It is time to remove the item from the Blocked state. */ listREMOVE_ITEM( &( pxTCB->xStateListItem ) ); /* Is the task waiting on an event also? If so remove * it from the event list. */ if( listLIST_ITEM_CONTAINER( &( pxTCB->xEventListItem ) ) != NULL ) { listREMOVE_ITEM( &( pxTCB->xEventListItem ) ); } else { mtCOVERAGE_TEST_MARKER(); } /* Place the unblocked task into the appropriate ready * list. */ prvAddTaskToReadyList( pxTCB ); /* A task being unblocked cannot cause an immediate * context switch if preemption is turned off. */ #if ( configUSE_PREEMPTION == 1 ) { #if ( configNUMBER_OF_CORES == 1 ) { /* Preemption is on, but a context switch should * only be performed if the unblocked task's * priority is higher than the currently executing * task. 
* The case of equal priority tasks sharing * processing time (which happens when both * preemption and time slicing are on) is * handled below.*/ if( pxTCB->uxPriority > pxCurrentTCB->uxPriority ) { xSwitchRequired = pdTRUE; } else { mtCOVERAGE_TEST_MARKER(); } } #else /* #if( configNUMBER_OF_CORES == 1 ) */ { prvYieldForTask( pxTCB ); } #endif /* #if( configNUMBER_OF_CORES == 1 ) */ } #endif /* #if ( configUSE_PREEMPTION == 1 ) */ } } } /* Tasks of equal priority to the currently running task will share * processing time (time slice) if preemption is on, and the application * writer has not explicitly turned time slicing off. */ #if ( ( configUSE_PREEMPTION == 1 ) && ( configUSE_TIME_SLICING == 1 ) ) { #if ( configNUMBER_OF_CORES == 1 ) { if( listCURRENT_LIST_LENGTH( &( pxReadyTasksLists[ pxCurrentTCB->uxPriority ] ) ) > 1U ) { xSwitchRequired = pdTRUE; } else { mtCOVERAGE_TEST_MARKER(); } } #else /* #if ( configNUMBER_OF_CORES == 1 ) */ { BaseType_t xCoreID; for( xCoreID = 0; xCoreID < ( ( BaseType_t ) configNUMBER_OF_CORES ); xCoreID++ ) { if( listCURRENT_LIST_LENGTH( &( pxReadyTasksLists[ pxCurrentTCBs[ xCoreID ]->uxPriority ] ) ) > 1U ) { xYieldRequiredForCore[ xCoreID ] = pdTRUE; } else { mtCOVERAGE_TEST_MARKER(); } } } #endif /* #if ( configNUMBER_OF_CORES == 1 ) */ } #endif /* #if ( ( configUSE_PREEMPTION == 1 ) && ( configUSE_TIME_SLICING == 1 ) ) */ #if ( configUSE_TICK_HOOK == 1 ) { /* Guard against the tick hook being called when the pended tick * count is being unwound (when the scheduler is being unlocked). */ if( xPendedTicks == ( TickType_t ) 0 ) { vApplicationTickHook(); } else { mtCOVERAGE_TEST_MARKER(); } } #endif /* configUSE_TICK_HOOK */ #if ( configUSE_PREEMPTION == 1 ) { #if ( configNUMBER_OF_CORES == 1 ) { /* For single core the core ID is always 0. 
*/ if( xYieldPendings[ 0 ] != pdFALSE ) { xSwitchRequired = pdTRUE; } else { mtCOVERAGE_TEST_MARKER(); } } #else /* #if ( configNUMBER_OF_CORES == 1 ) */ { BaseType_t xCoreID, xCurrentCoreID; xCurrentCoreID = ( BaseType_t ) portGET_CORE_ID(); for( xCoreID = 0; xCoreID < ( BaseType_t ) configNUMBER_OF_CORES; xCoreID++ ) { #if ( configUSE_TASK_PREEMPTION_DISABLE == 1 ) if( pxCurrentTCBs[ xCoreID ]->xPreemptionDisable == pdFALSE ) #endif { if( ( xYieldRequiredForCore[ xCoreID ] != pdFALSE ) || ( xYieldPendings[ xCoreID ] != pdFALSE ) ) { if( xCoreID == xCurrentCoreID ) { xSwitchRequired = pdTRUE; } else { prvYieldCore( xCoreID ); } } else { mtCOVERAGE_TEST_MARKER(); } } } } #endif /* #if ( configNUMBER_OF_CORES == 1 ) */ } #endif /* #if ( configUSE_PREEMPTION == 1 ) */ } else { xPendedTicks += 1U; /* The tick hook gets called at regular intervals, even if the * scheduler is locked. */ #if ( configUSE_TICK_HOOK == 1 ) { vApplicationTickHook(); } #endif } traceRETURN_xTaskIncrementTick( xSwitchRequired ); return xSwitchRequired; }

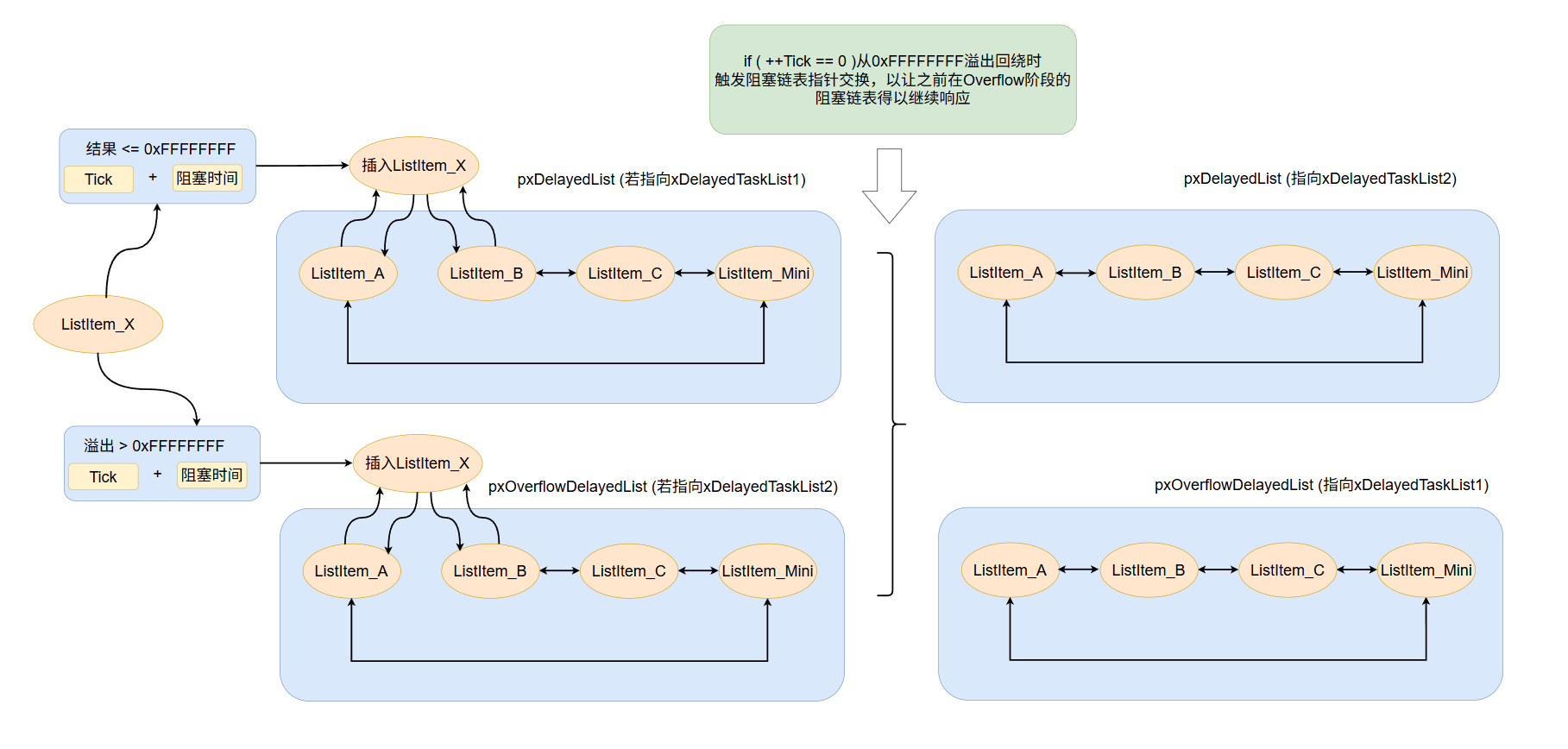

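6.3: The code below is the FreeRTOS macro that swaps the delayed task lists when the tick counter overflows. When xTickCount wraps around, the macro swaps the roles of the regular delayed list pointer pxDelayedTaskList and the overflow delayed list pointer pxOverflowDelayedTaskList, increments the overflow counter, and recomputes the next task unblock time.

The macro works together with the overflow handling in prvAddCurrentTaskToDelayedList. A task whose wake time overflowed when it was computed (that is, xTimeToWake < xConstTickCount) is placed in the overflow delayed list. When the tick counter itself later overflows, swapping the two list pointers means those "overflowed" tasks are no longer overflowed and can be woken in the normal way.

    #define taskSWITCH_DELAYED_LISTS() \
        do { \
            List_t * pxTemp; \
            \
            /* The delayed tasks list should be empty when the lists are switched. */ \
            configASSERT( ( listLIST_IS_EMPTY( pxDelayedTaskList ) ) ); \
            \
            pxTemp = pxDelayedTaskList; \
            pxDelayedTaskList = pxOverflowDelayedTaskList; \
            pxOverflowDelayedTaskList = pxTemp; \
            xNumOfOverflows = ( BaseType_t ) ( xNumOfOverflows + 1 ); \
            prvResetNextTaskUnblockTime(); \
        } while( 0 )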
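The overflow test that decides between the two delayed lists is just unsigned wrap-around arithmetic. The sketch below shrinks the tick type to 8 bits so the wrap is easy to see; `tick_t` and `wake_time_overflows` are hypothetical names for this illustration, and the real kernel uses TickType_t (commonly 32 bits) with the same comparison.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption: an 8-bit tick counter, so it wraps after 255. */
typedef uint8_t tick_t;

/* Compute the wake time and report whether it wrapped past zero.
 * A wrapped wake time is numerically smaller than the current tick,
 * which is the condition that selects the overflow delayed list. */
static bool wake_time_overflows(tick_t now, tick_t ticks_to_wait, tick_t *wake_time)
{
    *wake_time = (tick_t)(now + ticks_to_wait);
    return *wake_time < now;
}
```

At tick 10 a delay of 20 gives wake time 30 with no overflow, so the task goes to the regular list. At tick 250 a delay of 10 gives wake time 4, which is below 250, so the task goes to the overflow list and becomes due only after the counter has wrapped and the lists have been swapped.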

7 . vTaskDelay / xTaskDelayUntil (任务阻塞)

-

任务调度Step7 : vTaskDelay : 任务阻塞(主动调度)

7.1 :以下这段代码是 FreeRTOS 中实现任务主动延时功能的核心函数 vTaskDelay 的实现,其主要逻辑是:若传入的延时时间大于零,函数会首先挂起任务调度器,通过调用 prvAddCurrentTaskToDelayedList 将当前正在执行的任务从就绪列表中移除,并根据延时周期将其置入相应的延迟任务列表,使其进入阻塞态;随后恢复调度器。若延时时间为零,该函数则纯粹作为一个强制任务切换的请求。无论是以上哪种情况,函数最终都会通过检查 xAlreadyYielded 标志或主动调用 taskYIELD_WITHIN_API() 来确保调度器有机会进行一次重新调度,从而使其他就绪的、特别是高优先级的任务得以运行。此机制是任务主动放弃 CPU、实现周期性执行或简单协作调度的基础;

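    void vTaskDelay( const TickType_t xTicksToDelay )
    {
        BaseType_t xAlreadyYielded = pdFALSE;

        traceENTER_vTaskDelay( xTicksToDelay );

        /* A delay time of zero just forces a reschedule. */
        if( xTicksToDelay > ( TickType_t ) 0U )
        {
            vTaskSuspendAll();
            {
                configASSERT( uxSchedulerSuspended == 1U );

                traceTASK_DELAY();

                /* A task that is removed from the event list while the
                 * scheduler is suspended will not get placed in the ready
                 * list or removed from the blocked list until the scheduler
                 * is resumed.
                 *
                 * This task cannot be in an event list as it is the currently
                 * executing task. */
                prvAddCurrentTaskToDelayedList( xTicksToDelay, pdFALSE );
            }
            xAlreadyYielded = xTaskResumeAll();
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        /* Force a reschedule if xTaskResumeAll has not already done so, we may
         * have put ourselves to sleep. */
        if( xAlreadyYielded == pdFALSE )
        {
            taskYIELD_WITHIN_API();
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        traceRETURN_vTaskDelay();
    }

7.2: The code below is prvAddCurrentTaskToDelayedList, the core function that adds the current task to a delayed list. It removes the current task from the ready list, computes the wake-up tick from the delay, and inserts the task into the appropriate list. If the delay is portMAX_DELAY and indefinite blocking is allowed (with INCLUDE_vTaskSuspend set to 1), the task goes into the suspended list instead, so no timing event can wake it. Otherwise the destination depends on whether the wake time overflowed because the tick counter wraps (xTimeToWake < xConstTickCount): an overflowed wake time goes into the overflow delayed list, any other into the regular delayed list. When the new wake time is earlier than xNextTaskUnblockTime, that is, the task lands at the head of the regular delayed list, xNextTaskUnblockTime is updated as well, so the tick handler knows the next wake-up time without walking the list.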

static void prvAddCurrentTaskToDelayedList( TickType_t xTicksToWait, const BaseType_t xCanBlockIndefinitely ) { TickType_t xTimeToWake; const TickType_t xConstTickCount = xTickCount; List_t * const pxDelayedList = pxDelayedTaskList; List_t * const pxOverflowDelayedList = pxOverflowDelayedTaskList; #if ( INCLUDE_xTaskAbortDelay == 1 ) { /* About to enter a delayed list, so ensure the ucDelayAborted flag is * reset to pdFALSE so it can be detected as having been set to pdTRUE * when the task leaves the Blocked state. */ pxCurrentTCB->ucDelayAborted = ( uint8_t ) pdFALSE; } #endif /* Remove the task from the ready list before adding it to the blocked list * as the same list item is used for both lists. */ if( uxListRemove( &( pxCurrentTCB->xStateListItem ) ) == ( UBaseType_t ) 0 ) { /* The current task must be in a ready list, so there is no need to * check, and the port reset macro can be called directly. */ portRESET_READY_PRIORITY( pxCurrentTCB->uxPriority, uxTopReadyPriority ); } else { mtCOVERAGE_TEST_MARKER(); } #if ( INCLUDE_vTaskSuspend == 1 ) { if( ( xTicksToWait == portMAX_DELAY ) && ( xCanBlockIndefinitely != pdFALSE ) ) { /* Add the task to the suspended task list instead of a delayed task * list to ensure it is not woken by a timing event. It will block * indefinitely. */ listINSERT_END( &xSuspendedTaskList, &( pxCurrentTCB->xStateListItem ) ); } else { /* Calculate the time at which the task should be woken if the event * does not occur. This may overflow but this doesn't matter, the * kernel will manage it correctly. */ xTimeToWake = xConstTickCount + xTicksToWait; /* The list item will be inserted in wake time order. */ listSET_LIST_ITEM_VALUE( &( pxCurrentTCB->xStateListItem ), xTimeToWake ); if( xTimeToWake < xConstTickCount ) { /* Wake time has overflowed. Place this item in the overflow * list. 
*/ traceMOVED_TASK_TO_OVERFLOW_DELAYED_LIST(); vListInsert( pxOverflowDelayedList, &( pxCurrentTCB->xStateListItem ) ); } else { /* The wake time has not overflowed, so the current block list * is used. */ traceMOVED_TASK_TO_DELAYED_LIST(); vListInsert( pxDelayedList, &( pxCurrentTCB->xStateListItem ) ); /* If the task entering the blocked state was placed at the * head of the list of blocked tasks then xNextTaskUnblockTime * needs to be updated too. */ if( xTimeToWake < xNextTaskUnblockTime ) { xNextTaskUnblockTime = xTimeToWake; } else { mtCOVERAGE_TEST_MARKER(); } } } } #else /* INCLUDE_vTaskSuspend */ { /* Calculate the time at which the task should be woken if the event * does not occur. This may overflow but this doesn't matter, the kernel * will manage it correctly. */ xTimeToWake = xConstTickCount + xTicksToWait; /* The list item will be inserted in wake time order. */ listSET_LIST_ITEM_VALUE( &( pxCurrentTCB->xStateListItem ), xTimeToWake ); if( xTimeToWake < xConstTickCount ) { traceMOVED_TASK_TO_OVERFLOW_DELAYED_LIST(); /* Wake time has overflowed. Place this item in the overflow list. */ vListInsert( pxOverflowDelayedList, &( pxCurrentTCB->xStateListItem ) ); } else { traceMOVED_TASK_TO_DELAYED_LIST(); /* The wake time has not overflowed, so the current block list is used. */ vListInsert( pxDelayedList, &( pxCurrentTCB->xStateListItem ) ); /* If the task entering the blocked state was placed at the head of the * list of blocked tasks then xNextTaskUnblockTime needs to be updated * too. */ if( xTimeToWake < xNextTaskUnblockTime ) { xNextTaskUnblockTime = xTimeToWake; } else { mtCOVERAGE_TEST_MARKER(); } } /* Avoid compiler warning when INCLUDE_vTaskSuspend is not 1. */ ( void ) xCanBlockIndefinitely; } #endif /* INCLUDE_vTaskSuspend */ }
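The list selection above comes down to one unsigned addition and one comparison. The sketch below isolates that arithmetic; it assumes an 8-bit tick type so the wrap is easy to follow, and `DemoTick`, `DemoTarget`, `demo_pick_list` and `nextUnblockTime` are hypothetical names, not kernel symbols.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint8_t DemoTick; /* deliberately narrow so wrap-around is visible */

typedef enum { DEMO_DELAYED_LIST, DEMO_OVERFLOW_LIST } DemoTarget;

/* Plays the role of xNextTaskUnblockTime; starts at the maximum value. */
static DemoTick nextUnblockTime = 255u;

/* Compute the wake time and decide which list the task would go into. */
static DemoTarget demo_pick_list(DemoTick now, DemoTick ticksToWait, DemoTick *wakeOut)
{
    assert(wakeOut != NULL);

    /* Unsigned addition wraps modulo 256, like TickType_t wraps at its width. */
    DemoTick wake = (DemoTick)(now + ticksToWait);
    *wakeOut = wake;

    if (wake < now) {
        /* The sum wrapped: the wake time belongs to the next tick epoch. */
        return DEMO_OVERFLOW_LIST;
    }

    /* Same epoch: remember the earliest wake time seen so far. */
    if (wake < nextUnblockTime) {
        nextUnblockTime = wake;
    }
    return DEMO_DELAYED_LIST;
}
```

With `now = 250` and a delay of 10 ticks the sum wraps to 4, which is smaller than `now`, so the task is parked on the overflow list and the cached unblock time is left alone.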

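7.3: The code below is xTaskDelayUntil, which implements an exact periodic delay. With the scheduler suspended so the calculation is atomic, it computes the next theoretical wake time xTimeToWake from the task's previous wake time *pxPreviousWakeTime and the fixed period xTimeIncrement, handling the cases where the tick counter may have overflowed. It then checks whether the current tick has already reached or passed that wake time. If not, the task is blocked for exactly the remaining ticks and added to a delayed list. If it has (the task ran too long and missed its period), the function returns immediately without blocking. The computed wake time is stored back into *pxPreviousWakeTime as the starting point of the next period, and a task switch is forced if needed. Because each wake time is derived from the previous wake time rather than from the moment of the call, the period does not accumulate drift. For example, with a 10 ms period, if one iteration takes 8 ms, xTaskDelayUntil blocks for only the remaining 2 ms; if an iteration takes 10 ms or more, the function does not block and returns at once so the task continues.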

BaseType_t xTaskDelayUntil( TickType_t * const pxPreviousWakeTime, const TickType_t xTimeIncrement ) { TickType_t xTimeToWake; BaseType_t xAlreadyYielded, xShouldDelay = pdFALSE; traceENTER_xTaskDelayUntil( pxPreviousWakeTime, xTimeIncrement ); configASSERT( pxPreviousWakeTime ); configASSERT( ( xTimeIncrement > 0U ) ); vTaskSuspendAll(); { /* Minor optimisation. The tick count cannot change in this * block. */ const TickType_t xConstTickCount = xTickCount; configASSERT( uxSchedulerSuspended == 1U ); /* Generate the tick time at which the task wants to wake. */ xTimeToWake = *pxPreviousWakeTime + xTimeIncrement; if( xConstTickCount < *pxPreviousWakeTime ) { /* The tick count has overflowed since this function was * lasted called. In this case the only time we should ever * actually delay is if the wake time has also overflowed, * and the wake time is greater than the tick time. When this * is the case it is as if neither time had overflowed. */ if( ( xTimeToWake < *pxPreviousWakeTime ) && ( xTimeToWake > xConstTickCount ) ) { xShouldDelay = pdTRUE; } else { mtCOVERAGE_TEST_MARKER(); } } else { /* The tick time has not overflowed. In this case we will * delay if either the wake time has overflowed, and/or the * tick time is less than the wake time. */ if( ( xTimeToWake < *pxPreviousWakeTime ) || ( xTimeToWake > xConstTickCount ) ) { xShouldDelay = pdTRUE; } else { mtCOVERAGE_TEST_MARKER(); } } /* Update the wake time ready for the next call. */ *pxPreviousWakeTime = xTimeToWake; if( xShouldDelay != pdFALSE ) { traceTASK_DELAY_UNTIL( xTimeToWake ); /* prvAddCurrentTaskToDelayedList() needs the block time, not * the time to wake, so subtract the current tick count. */ prvAddCurrentTaskToDelayedList( xTimeToWake - xConstTickCount, pdFALSE ); } else { mtCOVERAGE_TEST_MARKER(); } } xAlreadyYielded = xTaskResumeAll(); /* Force a reschedule if xTaskResumeAll has not already done so, we may * have put ourselves to sleep. 
*/ if( xAlreadyYielded == pdFALSE ) { taskYIELD_WITHIN_API(); } else { mtCOVERAGE_TEST_MARKER(); } traceRETURN_xTaskDelayUntil( xShouldDelay ); return xShouldDelay; }
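The decision logic of xTaskDelayUntil can be exercised on its own. The sketch below copies the two overflow cases into a plain function; it assumes an 8-bit tick for readability, and `DemoTick` and `demo_delay_until` are hypothetical names. Blocking itself is not modelled, only the "should delay" decision and the remaining tick count.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint8_t DemoTick;

/* Returns true if the caller should block; always advances *previousWake. */
static bool demo_delay_until(DemoTick now, DemoTick *previousWake,
                             DemoTick period, DemoTick *blockTicks)
{
    assert(previousWake != NULL && blockTicks != NULL && period > 0u);

    DemoTick wake = (DemoTick)(*previousWake + period);
    bool shouldDelay;

    if (now < *previousWake) {
        /* Tick count wrapped since the last call: delay only if the wake
         * time wrapped too and is still ahead of the current tick. */
        shouldDelay = (wake < *previousWake) && (wake > now);
    } else {
        /* Tick count did not wrap: delay if the wake time wrapped,
         * or if it is simply still in the future. */
        shouldDelay = (wake < *previousWake) || (wake > now);
    }

    *previousWake = wake;                 /* reference point for the next period */
    *blockTicks = 0u;
    if (shouldDelay) {
        *blockTicks = (DemoTick)(wake - now); /* modular subtraction handles the wrap */
    }
    return shouldDelay;
}
```

Note that `*previousWake` advances by exactly one period even when the deadline was missed, which is why the schedule does not drift.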

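IV. Source Code: Memory Management

Preface: the author has added comments to the source of all five memory management schemes below. Two points are worth stressing up front:

- The heap source pays close attention to byte alignment: both the memory pool itself and the start address of every allocated block are aligned to portBYTE_ALIGNMENT.

- In the schemes that can free memory (heap_2, heap_4, heap_5), each free block is managed through a header (8 bytes on the 32-bit target assumed here), and the whole pool is managed as a linked list of these blocks; heap_1 keeps only a running offset.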

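| Scheme | Capabilities |
|---|---|
| heap_1 | Allocate only; memory is never freed |
| heap_2 | Allocate, free, split blocks |
| heap_3 | Thin wrapper around the platform's malloc/free |
| heap_4 | Allocate, free, split blocks, merge adjacent free blocks |
| heap_5 | Allocate, free, split, merge, and manage multiple separate memory regions |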

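1. heap_1.c Memory Management

Memory management API: pvPortMalloc(); vPortFree();

Memory management heap_1: the heap_1.c source with the full core code, annotated in detail according to the author's own understanding.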


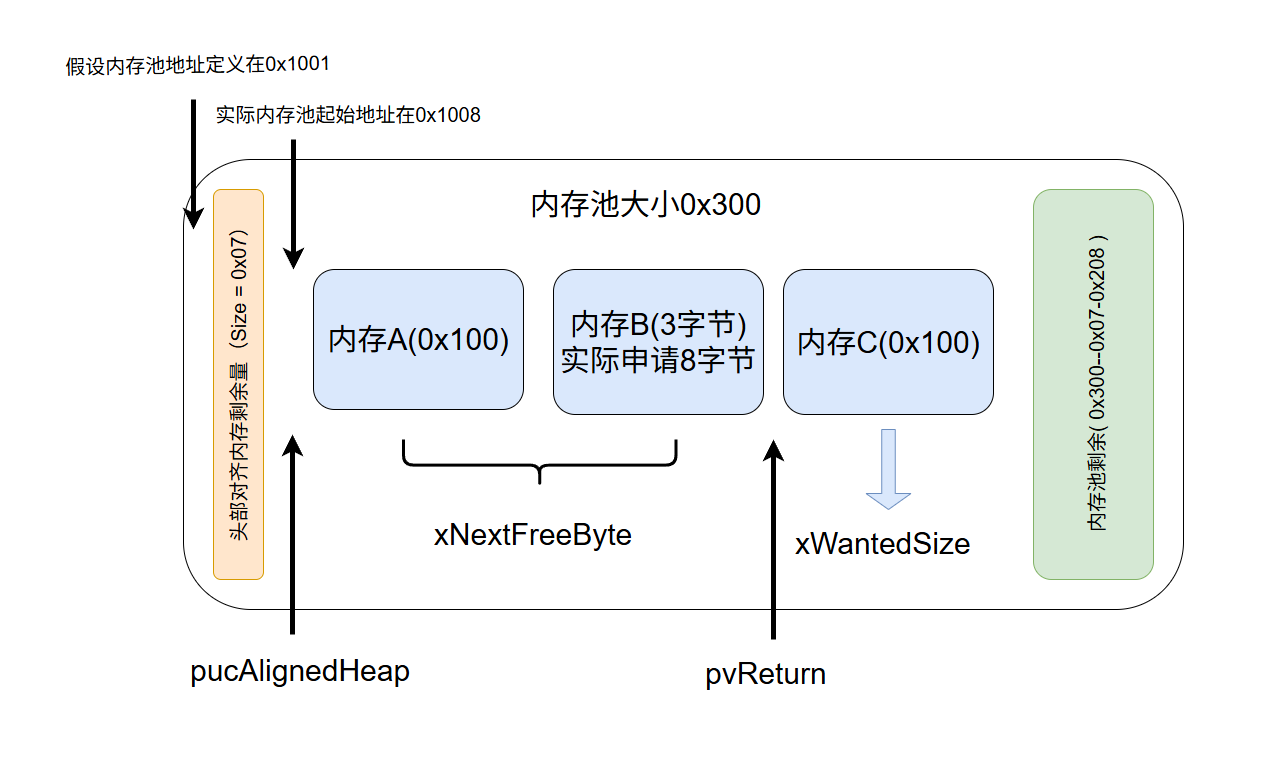


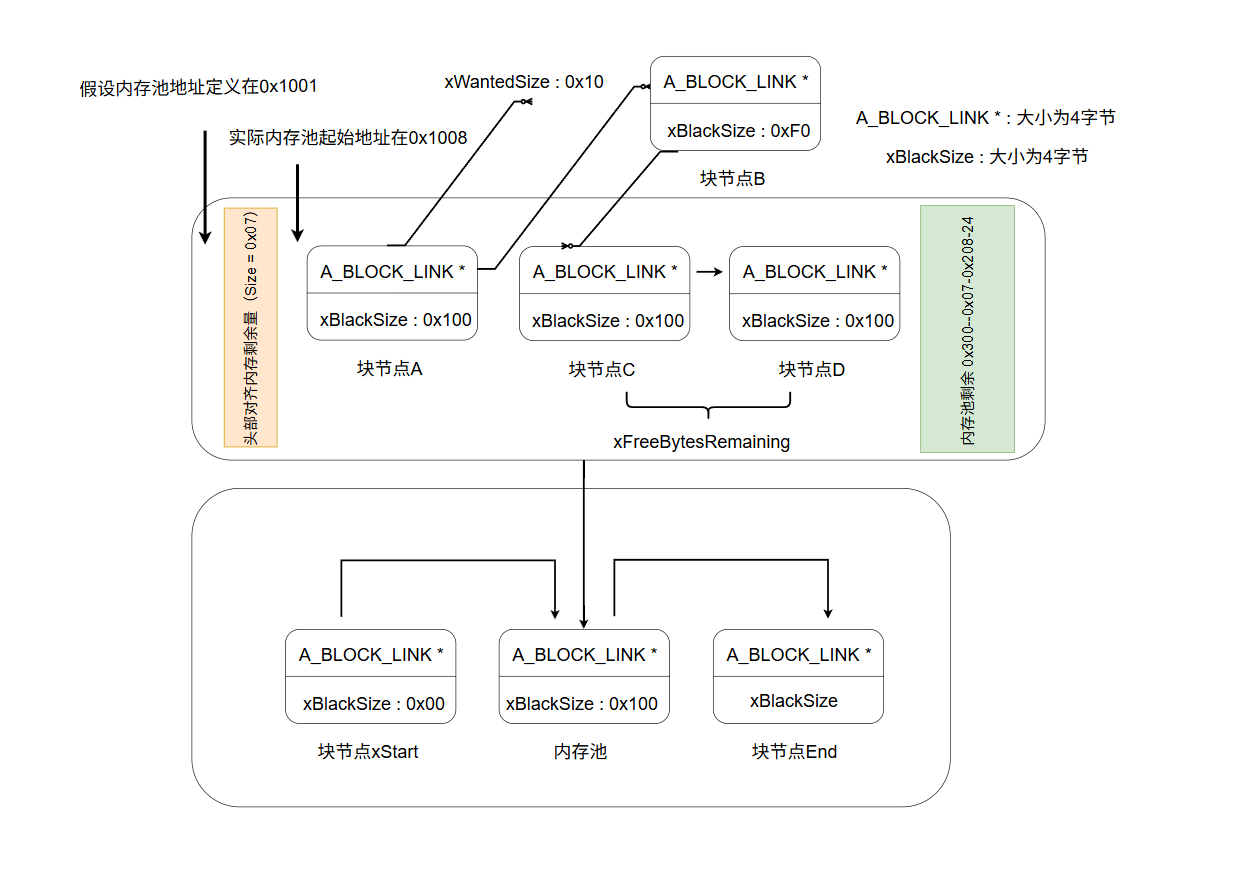

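1.1: heap_1 in a diagram. Two separate alignments are involved. The first aligns the pool's own start address: portBYTE_ALIGNMENT (8) bytes are set aside so the start can be moved to an 8-byte boundary inside the array. For example, if ucHeap starts at 0x1001, the code takes the address of ucHeap[7] (0x1008) and clears the low three bits, which gives 0x1008, the first multiple of 8 at or after 0x1001. The second aligns each request size: a request for 3 bytes is rounded up to the next multiple of 8, so 8 bytes are actually consumed.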
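Both alignments are short bit-mask calculations. The sketch below reproduces them outside the kernel, assuming 8-byte alignment; `DEMO_ALIGNMENT`, `demo_align_pool_start` and `demo_align_request` are hypothetical names chosen for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define DEMO_ALIGNMENT      ((size_t) 8)
#define DEMO_ALIGNMENT_MASK (DEMO_ALIGNMENT - 1)

/* First aligned address inside the pool: add ALIGNMENT-1 (the address of
 * byte [7]) and clear the low bits. */
static uintptr_t demo_align_pool_start(uintptr_t poolAddress)
{
    return (poolAddress + DEMO_ALIGNMENT_MASK) & ~(uintptr_t) DEMO_ALIGNMENT_MASK;
}

/* Round a request up to a multiple of the alignment.
 * Returns 0 if the padded size would overflow size_t. */
static size_t demo_align_request(size_t wanted)
{
    size_t remainder = wanted & DEMO_ALIGNMENT_MASK;

    if (remainder != 0) {
        size_t padded = wanted + (DEMO_ALIGNMENT - remainder);
        wanted = (padded > wanted) ? padded : 0;
    }
    return wanted;
}
```

A pool at 0x1001 therefore starts handing out memory at 0x1008, and up to 7 bytes at the front of the array go unused, which is why the usable size is reported as the total size minus the alignment.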

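1.2: Full heap_1.c walkthrough. This code is the core of the FreeRTOS heap_1 scheme, a simple and deterministic allocation strategy. A static array ucHeap serves as the global memory pool. On the first allocation, an address-mask operation makes sure the returned start address meets the byte alignment requirement (for example 8 bytes). Memory is then handed out sequentially by advancing a global offset, xNextFreeByte, with the scheduler suspended during the update for thread safety. The scheme only allocates and never frees, so every allocation stays occupied for the lifetime of the application. xPortGetFreeHeapSize reports the remaining space, and an optional hook function allows custom handling of allocation failures. The design keeps memory operations reliable and predictable in resource-constrained embedded systems, particularly those that create all objects at start-up.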

    /* Usable memory is the total pool size minus the alignment bytes (8). */
    #define configADJUSTED_HEAP_SIZE    ( configTOTAL_HEAP_SIZE - portBYTE_ALIGNMENT )

    /* Define the memory pool. */
    #if ( configAPPLICATION_ALLOCATED_HEAP == 1 )
        extern uint8_t ucHeap[ configTOTAL_HEAP_SIZE ];
    #else
        static uint8_t ucHeap[ configTOTAL_HEAP_SIZE ];
    #endif /* configAPPLICATION_ALLOCATED_HEAP */

    /* Number of bytes already allocated (offset of the next free byte). */
    static size_t xNextFreeByte = ( size_t ) 0U;

    void * pvPortMalloc( size_t xWantedSize )
    {
        void * pvReturn = NULL;
        static uint8_t * pucAlignedHeap = NULL;

        /* If the requested size is not aligned, round it up. For example, a
         * request for 3 bytes with 8-byte alignment becomes 3 + ( 8 - ( 3 & 7 ) ) = 8.
         * If the rounded size overflows, the request is set to 0 and fails. */
        #if ( portBYTE_ALIGNMENT != 1 )
        {
            if( xWantedSize & portBYTE_ALIGNMENT_MASK )
            {
                if( ( xWantedSize + ( portBYTE_ALIGNMENT - ( xWantedSize & portBYTE_ALIGNMENT_MASK ) ) ) > xWantedSize )
                {
                    xWantedSize += ( portBYTE_ALIGNMENT - ( xWantedSize & portBYTE_ALIGNMENT_MASK ) );
                }
                else
                {
                    xWantedSize = 0;
                }
            }
        }
        #endif

        /* Suspend the scheduler while the pool is being modified. */
        vTaskSuspendAll();
        {
            if( pucAlignedHeap == NULL )
            {
                /* Take the address of ucHeap[ 7 ] (the eighth byte) and AND it
                 * with ~0x7 to get an 8-byte-aligned address. For example, if
                 * ucHeap starts at 0x1001, &ucHeap[ 7 ] is 0x1008 and
                 * 0x1008 & ~0x7 = 0x1008, so the usable pool starts at the
                 * first 8-byte boundary inside the array. */
                pucAlignedHeap = ( uint8_t * ) ( ( ( portPOINTER_SIZE_TYPE ) & ucHeap[ portBYTE_ALIGNMENT - 1 ] ) & ( ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) ) );
            }

            /* The request succeeds only if it is non-zero, stays inside the
             * usable pool, and the addition does not overflow. */
            if( ( xWantedSize > 0 ) &&
                ( ( xNextFreeByte + xWantedSize ) < configADJUSTED_HEAP_SIZE ) &&
                ( ( xNextFreeByte + xWantedSize ) > xNextFreeByte ) )
            {
                /* The returned address is the aligned pool start plus the end of
                 * the previous allocation, i.e. the head of the new allocation. */
                pvReturn = pucAlignedHeap + xNextFreeByte;

                /* Allocation succeeded: advance the offset. */
                xNextFreeByte += xWantedSize;
            }

            traceMALLOC( pvReturn, xWantedSize );
        }
        /* Pool update finished: resume the scheduler. */
        ( void ) xTaskResumeAll();

        #if ( configUSE_MALLOC_FAILED_HOOK == 1 )
        {
            if( pvReturn == NULL )
            {
                /* Hook called when the allocation fails. */
                vApplicationMallocFailedHook();
            }
        }
        #endif

        return pvReturn;
    }

    void vPortFree( void * pv )
    {
        /* heap_1 only allocates; memory is never freed. */
        ( void ) pv;

        configASSERT( pv == NULL );
    }

    void vPortInitialiseBlocks( void )
    {
        /* Reset the allocated byte count to 0. */
        xNextFreeByte = ( size_t ) 0;
    }

    size_t xPortGetFreeHeapSize( void )
    {
        /* Remaining memory is the usable size minus the allocated bytes. */
        return( configADJUSTED_HEAP_SIZE - xNextFreeByte );
    }

    void vPortHeapResetState( void )
    {
        /* Reset the allocated byte count to 0. */
        xNextFreeByte = ( size_t ) 0U;
    }

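2. heap_2.c Memory Management

Memory management API: pvPortMalloc(); vPortFree();

Memory management heap_2: the heap_2.c source with the full core code, annotated in detail according to the author's own understanding.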


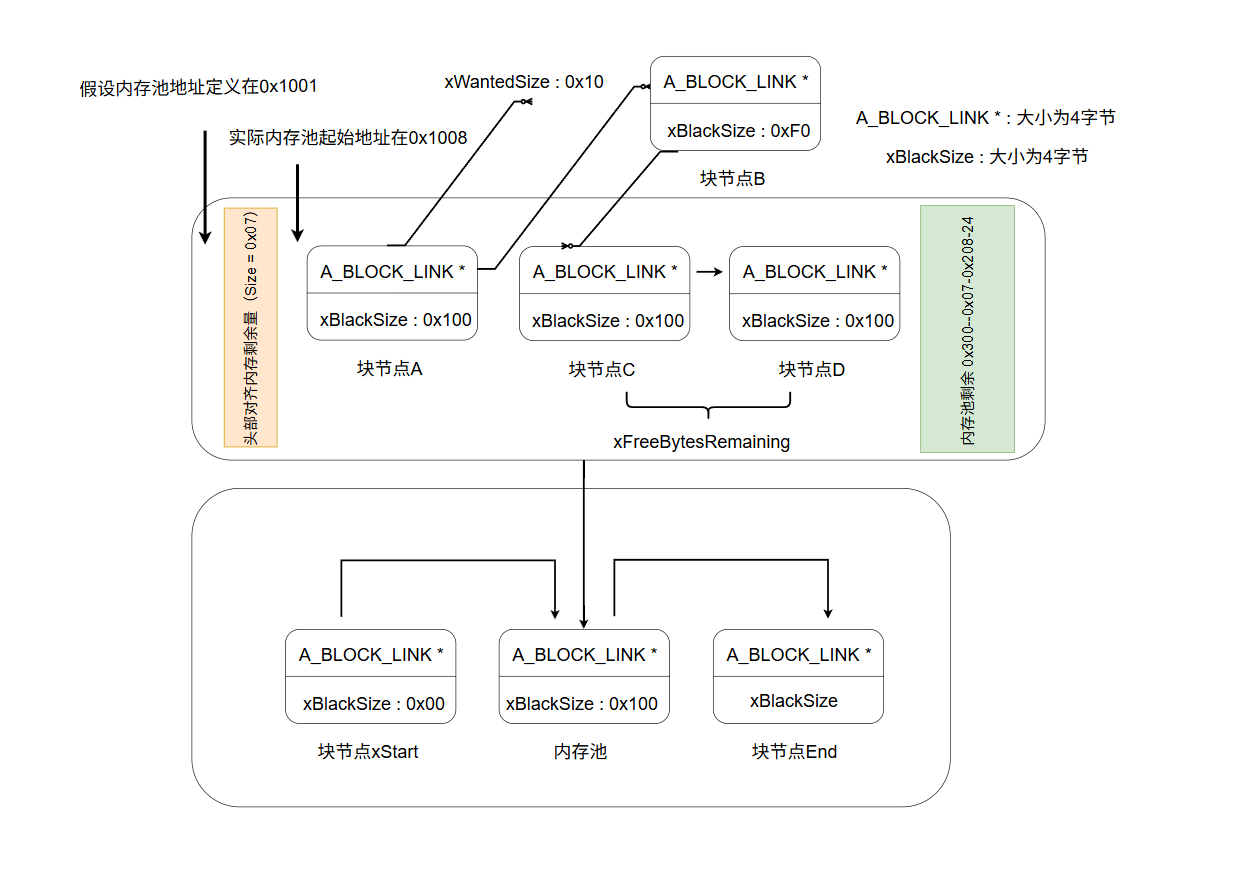

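2.1: heap_2 in a diagram. Compared with heap_1 it adds linked-list management, block splitting, and the ability to free memory.

2.2: Full heap_2.c walkthrough. This code is the core of the FreeRTOS heap_2 scheme, a dynamic allocate-and-free strategy based on best fit. It maintains a singly linked free list sorted by block size; each free block is described by a BlockLink_t header holding the block size and a pointer to the next free block. On allocation, the list is walked from the head until the first block large enough for the request is found. Because the list is sorted by size, that first match is also the smallest sufficient block, which is what makes this best fit. If the leftover space in that block is large enough, the block is split and the remainder is inserted back into the list as a new free block. The top bit of the size field of the allocated block is then set to mark it as allocated. On free, the pointer is stepped back to the block header, the allocated bit is cleared, and the whole block is inserted back into the free list in size order. List updates are made with the scheduler suspended for thread safety, and the running total xFreeBytesRemaining gives a quick view of overall pool usage. The scheme supports reuse of memory and suits applications that allocate and free blocks of varying sizes, but because it never merges adjacent free blocks, fragmentation can build up over time.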

/* 释放的内存清零 */ #define configHEAP_CLEAR_MEMORY_ON_FREE 0 /* 实际可用内存为总内存池减去对齐字节(8) */ #define configADJUSTED_HEAP_SIZE ( configTOTAL_HEAP_SIZE - portBYTE_ALIGNMENT ) /* 头部预留 8个字节 */ #define heapBITS_PER_BYTE ( ( size_t ) 8 ) /* 常量最大值 */ #define heapSIZE_MAX ( ~( ( size_t ) 0 ) ) /*检查乘法结果溢出*/ #define heapMULTIPLY_WILL_OVERFLOW( a, b ) ( ( ( a ) > 0 ) && ( ( b ) > ( heapSIZE_MAX / ( a ) ) ) ) /* 检查加法结果溢出 */ #define heapADD_WILL_OVERFLOW( a, b ) ( ( a ) > ( heapSIZE_MAX - ( b ) ) ) /* xBlockSize最高位定义为是否已分配 */ #define heapBLOCK_ALLOCATED_BITMASK ( ( ( size_t ) 1 ) << ( ( sizeof( size_t ) * heapBITS_PER_BYTE ) - 1 ) ) /* 判断该块是否未分配标记 */ #define heapBLOCK_SIZE_IS_VALID( xBlockSize ) ( ( ( xBlockSize ) & heapBLOCK_ALLOCATED_BITMASK ) == 0 ) /* 判断该块是否已分配标记 */ #define heapBLOCK_IS_ALLOCATED( pxBlock ) ( ( ( pxBlock->xBlockSize ) & heapBLOCK_ALLOCATED_BITMASK ) != 0 ) /* 块分配标记 */ #define heapALLOCATE_BLOCK( pxBlock ) ( ( pxBlock->xBlockSize ) |= heapBLOCK_ALLOCATED_BITMASK ) /* 块取消分配标记 */ #define heapFREE_BLOCK( pxBlock ) ( ( pxBlock->xBlockSize ) &= ~heapBLOCK_ALLOCATED_BITMASK ) /*-----------------------------------------------------------*/ /* 定义内存池 */ #if ( configAPPLICATION_ALLOCATED_HEAP == 1 ) extern uint8_t ucHeap[ configTOTAL_HEAP_SIZE ]; #else PRIVILEGED_DATA static uint8_t ucHeap[ configTOTAL_HEAP_SIZE ]; #endif /* 块结构, 头部信息 */ typedef struct A_BLOCK_LINK { struct A_BLOCK_LINK * pxNextFreeBlock; /*<< The next free block in the list. */ size_t xBlockSize; /*<< The size of the free block. 
*/ } BlockLink_t; /* 头部信息大小 */ static const size_t xHeapStructSize = ( ( sizeof( BlockLink_t ) + ( size_t ) ( portBYTE_ALIGNMENT - 1 ) ) & ~( ( size_t ) portBYTE_ALIGNMENT_MASK ) ); /* 为了进行拆分,因此需要两倍头部大小进行分配 */ #define heapMINIMUM_BLOCK_SIZE ( ( size_t ) ( xHeapStructSize * 2 ) ) /* 定义内存池内存起始与内存尾部 */ PRIVILEGED_DATA static BlockLink_t xStart, xEnd; /* 内存池剩余空间 */ PRIVILEGED_DATA static size_t xFreeBytesRemaining = configADJUSTED_HEAP_SIZE; /* 内存池初始化标志位 */ PRIVILEGED_DATA static BaseType_t xHeapHasBeenInitialised = pdFALSE; /*-----------------------------------------------------------*/ static void prvHeapInit( void ) PRIVILEGED_FUNCTION; /* 定义个迭代器,从内存起始遍历到可用内存大小满足则新块大小,则插入到此节点 */ #define prvInsertBlockIntoFreeList( pxBlockToInsert ) { /* 定义迭代器 */ BlockLink_t * pxIterator; size_t xBlockSize; /* 获取插入块的空间大小 */ xBlockSize = pxBlockToInsert->xBlockSize; /* 迭代器的下一个块节点遍历到空闲块空间大于插入块空间的块节点,这样相当于块空间大小排序,从内存维度映射到链表空间维度排序 */ for( pxIterator = &xStart; pxIterator->pxNextFreeBlock->xBlockSize < xBlockSize; pxIterator = pxIterator->pxNextFreeBlock ) \ { /* There is nothing to do here - just iterate to the correct position. 
*/ } /* 插入块的下一个块节点指向刚刚遍历到的块节点的下一个节点 */ pxBlockToInsert->pxNextFreeBlock = pxIterator->pxNextFreeBlock; /* 基于块节点将链表索引的下一个节点指向为新块 */ pxIterator->pxNextFreeBlock = pxBlockToInsert; } /*-----------------------------------------------------------*/ void * pvPortMalloc( size_t xWantedSize ) { BlockLink_t * pxBlock; BlockLink_t * pxPreviousBlock; BlockLink_t * pxNewBlockLink; void * pvReturn = NULL; size_t xAdditionalRequiredSize; if( xWantedSize > 0 ) { /* 检测申请内存叠加头部信息是否溢出 */ if( heapADD_WILL_OVERFLOW( xWantedSize, xHeapStructSize ) == 0 ) { /*申请的内存叠加头部信息大小*/ xWantedSize += xHeapStructSize; /* 这里与heap1一样,内存向上8字节倍数对齐 */ if( ( xWantedSize & portBYTE_ALIGNMENT_MASK ) != 0x00 ) { xAdditionalRequiredSize = portBYTE_ALIGNMENT - ( xWantedSize & portBYTE_ALIGNMENT_MASK ); if( heapADD_WILL_OVERFLOW( xWantedSize, xAdditionalRequiredSize ) == 0 ) { xWantedSize += xAdditionalRequiredSize; } else { xWantedSize = 0; } } else { mtCOVERAGE_TEST_MARKER(); } } else { xWantedSize = 0; } } else { mtCOVERAGE_TEST_MARKER(); } /* 挂起调度器 */ vTaskSuspendAll(); { if( xHeapHasBeenInitialised == pdFALSE ) { /* 首次申请内存,则初始化内存池 */ prvHeapInit(); xHeapHasBeenInitialised = pdTRUE; } /* 检查申请的内存块是否已分配 */ if( heapBLOCK_SIZE_IS_VALID( xWantedSize ) != 0 ) { /* 若容量足够,则可继续申请 */ if( ( xWantedSize > 0 ) && ( xWantedSize <= xFreeBytesRemaining ) ) { /* 设定块节点指向 */ pxPreviousBlock = &xStart; pxBlock = xStart.pxNextFreeBlock; /* 从start节点的下一块节点开始遍历链表,寻找空间足够的块,End节点为NULL */ while( ( pxBlock->xBlockSize < xWantedSize ) && ( pxBlock->pxNextFreeBlock != NULL ) ) { pxPreviousBlock = pxBlock; pxBlock = pxBlock->pxNextFreeBlock; } /* 遍历到尾部节点则认为无空间申请 */ if( pxBlock != &xEnd ) { /* 申请内存的节点指向刚刚遍历到的块节点+头部信息大小后的地址 */ pvReturn = ( void * ) ( ( ( uint8_t * ) pxPreviousBlock->pxNextFreeBlock ) + xHeapStructSize ); /* 此时该块当作已分配,因此链表中基于此块索引改变链表链接到下一个节点 */ pxPreviousBlock->pxNextFreeBlock = pxBlock->pxNextFreeBlock; /* 申请的块空间减去所需若有两倍头部信息以上,则把该块进行拆分 */ if( ( pxBlock->xBlockSize - xWantedSize ) > heapMINIMUM_BLOCK_SIZE ) { /* 
分出一个新块指向新分配块之后 */ pxNewBlockLink = ( void * ) ( ( ( uint8_t * ) pxBlock ) + xWantedSize ); /* 设定新块的空间为之前可分配块的剩余空间 */ pxNewBlockLink->xBlockSize = pxBlock->xBlockSize - xWantedSize; /* 申请块的空间大小为分配的空间大小 */ pxBlock->xBlockSize = xWantedSize; /* 将新快插入到内存池链表中 */ prvInsertBlockIntoFreeList( ( pxNewBlockLink ) ); } /* 更新内存池剩余空间 */ xFreeBytesRemaining -= pxBlock->xBlockSize; heapALLOCATE_BLOCK( pxBlock ); /* 若该块已分配,则该块断开链表联系,下一个块节点设为NULL */ pxBlock->pxNextFreeBlock = NULL; } } } traceMALLOC( pvReturn, xWantedSize ); } /* 恢复调度器 */ ( void ) xTaskResumeAll(); #if ( configUSE_MALLOC_FAILED_HOOK == 1 ) { if( pvReturn == NULL ) { vApplicationMallocFailedHook(); } } #endif return pvReturn; } /*-----------------------------------------------------------*/ void vPortFree( void * pv ) { uint8_t * puc = ( uint8_t * ) pv; BlockLink_t * pxLink; if( pv != NULL ) { /* 基于待释放空间索引到头部 */ puc -= xHeapStructSize; /* 指向待释放空间的头部 */ pxLink = ( void * ) puc; configASSERT( heapBLOCK_IS_ALLOCATED( pxLink ) != 0 ); configASSERT( pxLink->pxNextFreeBlock == NULL ); /* 若是该块有已分配标记则进如释放流程 */ if( heapBLOCK_IS_ALLOCATED( pxLink ) != 0 ) { /* 分配过的块指向的下一个节点为NULL,符合已分配逻辑,继续进行释放 */ if( pxLink->pxNextFreeBlock == NULL ) { /* 将头部信息的xBlockSize的最高位清除,标记为待分配状态 */ heapFREE_BLOCK( pxLink ); #if ( configHEAP_CLEAR_MEMORY_ON_FREE == 1 ) { /* 将释放块的空间全部初始为0x00 */ ( void ) memset( puc + xHeapStructSize, 0, pxLink->xBlockSize - xHeapStructSize ); } #endif /* 挂起调度器 */ vTaskSuspendAll(); { /* 将释放的块插入回链表中 */ prvInsertBlockIntoFreeList( ( ( BlockLink_t * ) pxLink ) ); /* 更新剩余空间 */ xFreeBytesRemaining += pxLink->xBlockSize; traceFREE( pv, pxLink->xBlockSize ); } /* 恢复调度器 */ ( void ) xTaskResumeAll(); } } } } /*-----------------------------------------------------------*/ size_t xPortGetFreeHeapSize( void ) { return xFreeBytesRemaining; } /*-----------------------------------------------------------*/ void vPortInitialiseBlocks( void ) { /* This just exists to keep the linker quiet. 
*/ } /*-----------------------------------------------------------*/ /* Calloc申请空间,并数据初始为0x00 */ void * pvPortCalloc( size_t xNum, size_t xSize ) { void * pv = NULL; /* 检查乘法是否溢出 */ if( heapMULTIPLY_WILL_OVERFLOW( xNum, xSize ) == 0 ) { pv = pvPortMalloc( xNum * xSize ); if( pv != NULL ) { ( void ) memset( pv, 0, xNum * xSize ); } } return pv; } static void prvHeapInit( void ) /* PRIVILEGED_FUNCTION */ { /*定义块节点*/ BlockLink_t * pxFirstFreeBlock; uint8_t * pucAlignedHeap; /* 指向内存池(本身索引就是第8个字节的下标),然后向下对齐8字节的地址 */ pucAlignedHeap = ( uint8_t * ) ( ( ( portPOINTER_SIZE_TYPE ) & ucHeap[ portBYTE_ALIGNMENT - 1 ] ) & ( ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) ) ); /* 初始内存池start的下一个节点指向内存对齐后的内存池,xStart与End为全局变量节点 */ xStart.pxNextFreeBlock = ( void * ) pucAlignedHeap; /* xStart块节点空间大小为0x00 */ xStart.xBlockSize = ( size_t ) 0; /* 尾部块节点的初始大小为最大对齐上限大小 */ xEnd.xBlockSize = configADJUSTED_HEAP_SIZE; /* 尾部块节点不存在下一个节点 */ xEnd.pxNextFreeBlock = NULL; /* 指向内存池对齐地址 */ pxFirstFreeBlock = ( BlockLink_t * ) pucAlignedHeap; /* 基于索引调整大小为最大对齐上限大小,初始后为一整块内存大小 */ pxFirstFreeBlock->xBlockSize = configADJUSTED_HEAP_SIZE; /* 链接尾部块节点,形成xStart块节点→内存池→End块节点 */ pxFirstFreeBlock->pxNextFreeBlock = &xEnd; }
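To see why a size-sorted list turns "first block that fits" into best fit, the two list operations can be pulled out into a small standalone sketch. It is a simplification under stated assumptions: `DemoBlock`, `demo_init`, `demo_insert_by_size` and `demo_take_best_fit` are hypothetical names, blocks are plain structs rather than headers inside a pool, and splitting is left out.

```c
#include <assert.h>
#include <stddef.h>

typedef struct DemoBlock {
    struct DemoBlock *next; /* next free block, or NULL for the end marker */
    size_t size;            /* block size in bytes */
} DemoBlock;

static DemoBlock start; /* list head, size 0 */
static DemoBlock end;   /* end marker, at least as large as any real block */

static void demo_init(size_t poolSize)
{
    end.next = NULL;
    end.size = poolSize;
    start.next = &end;
    start.size = 0;
}

/* Insert so the list stays sorted by ascending size. */
static void demo_insert_by_size(DemoBlock *block)
{
    DemoBlock *it = &start;

    while (it->next->size < block->size) {
        it = it->next;
    }
    block->next = it->next;
    it->next = block;
}

/* Unlink and return the smallest block that can hold `wanted` bytes. */
static DemoBlock *demo_take_best_fit(size_t wanted)
{
    DemoBlock *prev = &start;
    DemoBlock *blk = start.next;

    while ((blk->size < wanted) && (blk->next != NULL)) {
        prev = blk;
        blk = blk->next;
    }
    if (blk == &end) {
        return NULL; /* reached the end marker: nothing fits */
    }
    prev->next = blk->next;
    blk->next = NULL; /* an allocated block is detached from the list */
    return blk;
}
```

The end marker carries the full pool size so the insertion loop always stops there. Nothing in this list knows which blocks are neighbours in memory, which is the reason heap_2 cannot merge them.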

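3. heap_3.c Memory Management

Memory management API: pvPortMalloc(); vPortFree();

Memory management heap_3: the heap_3.c source with the full core code, annotated in detail according to the author's own understanding.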


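3.1: heap_3 overview: it indirectly calls the platform's malloc and free.

3.2: Full heap_3.c walkthrough. This code is the core of the FreeRTOS heap_3 scheme, which is essentially a thin thread-safe wrapper around the standard C library malloc() and free(). It implements no allocation algorithm of its own. Around each call to malloc/free it suspends and resumes the scheduler with vTaskSuspendAll() and xTaskResumeAll(), so that no other task can enter the library's allocator at the same time. It depends entirely on the memory management provided by the host operating system or the embedded platform's C runtime, so its characteristics (fragmentation behaviour, allocation speed) and behaviour (handling of failed allocations) are determined by the underlying library. The scheme suits systems that already have complete and reliable library-level heap support, such as FreeRTOS ports running on Linux or Windows, or embedded platforms that ship their own dynamic memory manager. FreeRTOS can then use system-level memory with minimal overhead, at the cost of determinism and of portability to bare-metal or library-free environments.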

    void * pvPortMalloc( size_t xWantedSize )
    {
        void * pvReturn;

        /* Suspend the scheduler. */
        vTaskSuspendAll();
        {
            /* Call the platform's malloc. */
            pvReturn = malloc( xWantedSize );
            traceMALLOC( pvReturn, xWantedSize );
        }
        /* Resume the scheduler. */
        ( void ) xTaskResumeAll();

        #if ( configUSE_MALLOC_FAILED_HOOK == 1 )
        {
            if( pvReturn == NULL )
            {
                vApplicationMallocFailedHook();
            }
        }
        #endif

        return pvReturn;
    }

    void vPortFree( void * pv )
    {
        if( pv != NULL )
        {
            /* Suspend the scheduler. */
            vTaskSuspendAll();
            {
                /* Call the platform's free. */
                free( pv );
                traceFREE( pv, 0 );
            }
            /* Resume the scheduler. */
            ( void ) xTaskResumeAll();
        }
    }

    void vPortHeapResetState( void )
    {
    }
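The wrapper pattern can be tried on a desktop compiler by replacing the scheduler calls with stand-ins. In the sketch below, `demo_suspend_all` and `demo_resume_all` are hypothetical substitutes for vTaskSuspendAll() and xTaskResumeAll() that only track nesting depth; they provide no real mutual exclusion and exist purely to show that every path leaves the lock balanced.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-ins for vTaskSuspendAll() / xTaskResumeAll(). */
static int demoSuspendDepth = 0;

static void demo_suspend_all(void)
{
    demoSuspendDepth++;
}

static void demo_resume_all(void)
{
    assert(demoSuspendDepth > 0);
    demoSuspendDepth--;
}

/* Same shape as the heap_3 pvPortMalloc(): lock, delegate, unlock. */
static void *demo_safe_malloc(size_t size)
{
    void *p;

    demo_suspend_all();
    p = malloc(size);
    demo_resume_all();
    return p;
}

/* Same shape as the heap_3 vPortFree(): NULL is ignored without locking. */
static void demo_safe_free(void *p)
{
    if (p != NULL) {
        demo_suspend_all();
        free(p);
        demo_resume_all();
    }
}
```

Since the lock in heap_3 is a scheduler suspension, it guards against other tasks only; the wrapper functions must not be called from an interrupt handler.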

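4. heap_4.c Memory Management

Memory management API: pvPortMalloc(); vPortFree();

Memory management heap_4: the heap_4.c source with the full core code, annotated in detail according to the author's own understanding.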


4.1: heap_4 overview: heap_4 builds on heap_2 and adds merging of adjacent free blocks on free; the overall flow is otherwise the same.

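4.2: Full heap_4.c walkthrough. This code is the core of the FreeRTOS heap_4 scheme. It keeps the overall structure of heap_2 and adds one key improvement, the merging of adjacent free blocks, which greatly reduces fragmentation (it cannot remove it entirely). The workflow is similar to heap_2: with the scheduler suspended, it maintains a singly linked free list and uses the top bit of the block header's size field as the allocated flag. The list, however, is sorted by address rather than by size as in heap_2, so the allocation search is first fit, not best fit. The main change is on the free path: when vPortFree is called, prvInsertBlockIntoFreeList inserts the freed block back into the list in address order and immediately checks whether it is physically adjacent to the free block before it or after it, merging them into a single larger free block. The scheme also keeps heap statistics (historical minimum free bytes, number of successful allocations and frees) and, when configENABLE_HEAP_PROTECTOR is set to 1, uses heapPROTECT_BLOCK_POINTER to XOR the list pointers with a canary value so that list corruption caused by out-of-bounds writes is more likely to be detected. heap_4 is the general-purpose choice among the FreeRTOS heap schemes and fits long-running embedded applications with frequent, unpredictable allocation and free patterns.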
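The pointer protection mentioned in 4.2 relies on the identity A ^ C ^ C = A. The sketch below shows the round trip and why a pointer written without knowledge of the canary tends to decode to an address outside the heap. It is an illustration only: `demoCanary` is a fixed value here (the real canary is supplied by the application at run time), and `demo_protect` and `demo_in_heap` are hypothetical helpers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fixed canary for the demo; assume a random value in a real system. */
static uintptr_t demoCanary = (uintptr_t) 0x5A5AA5A5u;

/* XOR is its own inverse, so one function both encodes and decodes. */
static uintptr_t demo_protect(uintptr_t pointerValue)
{
    return pointerValue ^ demoCanary;
}

/* Range check in the spirit of heapVALIDATE_BLOCK_POINTER. */
static int demo_in_heap(uintptr_t address, uintptr_t heapStart, size_t heapSize)
{
    return (address >= heapStart) && ((address - heapStart) < heapSize);
}
```

A stored link is decoded before every use. If an overflow overwrites the stored value with a raw address, decoding scrambles it, and the range check is then likely to fail instead of the allocator silently following a forged link.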

/* 释放的内存清零 */ #define configHEAP_CLEAR_MEMORY_ON_FREE 0 /* 为了进行拆分,因此需要两倍头部大小进行分配 */ #define heapMINIMUM_BLOCK_SIZE ( ( size_t ) ( xHeapStructSize << 1 ) ) /* 头部预留 8个字节 */ #define heapBITS_PER_BYTE ( ( size_t ) 8 ) /* 常量最大值 */ #define heapSIZE_MAX ( ~( ( size_t ) 0 ) ) /*检查乘法结果溢出*/ #define heapMULTIPLY_WILL_OVERFLOW( a, b ) ( ( ( a ) > 0 ) && ( ( b ) > ( heapSIZE_MAX / ( a ) ) ) ) /* 检查加法结果溢出 */ #define heapADD_WILL_OVERFLOW( a, b ) ( ( a ) > ( heapSIZE_MAX - ( b ) ) ) /* 获取比较结果 */ #define heapSUBTRACT_WILL_UNDERFLOW( a, b ) ( ( a ) < ( b ) ) /* xBlockSize最高位定义为是否已分配 */ #define heapBLOCK_ALLOCATED_BITMASK ( ( ( size_t ) 1 ) << ( ( sizeof( size_t ) * heapBITS_PER_BYTE ) - 1 ) ) /* 判断该块是否未分配标记 */ #define heapBLOCK_SIZE_IS_VALID( xBlockSize ) ( ( ( xBlockSize ) & heapBLOCK_ALLOCATED_BITMASK ) == 0 ) /* 判断该块是否已分配标记 */ #define heapBLOCK_IS_ALLOCATED( pxBlock ) ( ( ( pxBlock->xBlockSize ) & heapBLOCK_ALLOCATED_BITMASK ) != 0 ) /* 块分配标记 */ #define heapALLOCATE_BLOCK( pxBlock ) ( ( pxBlock->xBlockSize ) |= heapBLOCK_ALLOCATED_BITMASK ) /* 块取消分配标记 */ #define heapFREE_BLOCK( pxBlock ) ( ( pxBlock->xBlockSize ) &= ~heapBLOCK_ALLOCATED_BITMASK ) /* 定义内存池 */ #if ( configAPPLICATION_ALLOCATED_HEAP == 1 ) extern uint8_t ucHeap[ configTOTAL_HEAP_SIZE ]; #else PRIVILEGED_DATA static uint8_t ucHeap[ configTOTAL_HEAP_SIZE ]; #endif /* 块结构, 头部信息 */ typedef struct A_BLOCK_LINK { struct A_BLOCK_LINK * pxNextFreeBlock; /**< The next free block in the list. */ size_t xBlockSize; /**< The size of the free block. 
*/ } BlockLink_t; /* Canary金丝雀随机值,通过A ^ B = C; A ^ C = B, 对内存池进行溢出攻击保护 */ #if ( configENABLE_HEAP_PROTECTOR == 1 ) extern void vApplicationGetRandomHeapCanary( portPOINTER_SIZE_TYPE * pxHeapCanary ); PRIVILEGED_DATA static portPOINTER_SIZE_TYPE xHeapCanary; #define heapPROTECT_BLOCK_POINTER( pxBlock ) ( ( BlockLink_t * ) ( ( ( portPOINTER_SIZE_TYPE ) ( pxBlock ) ) ^ xHeapCanary ) ) #else #define heapPROTECT_BLOCK_POINTER( pxBlock ) ( pxBlock ) #endif /* 调试地址 */ #define heapVALIDATE_BLOCK_POINTER( pxBlock ) \ configASSERT( ( ( uint8_t * ) ( pxBlock ) >= &( ucHeap[ 0 ] ) ) && \ ( ( uint8_t * ) ( pxBlock ) <= &( ucHeap[ configTOTAL_HEAP_SIZE - 1 ] ) ) ) static void prvInsertBlockIntoFreeList( BlockLink_t * pxBlockToInsert ) PRIVILEGED_FUNCTION; static void prvHeapInit( void ) PRIVILEGED_FUNCTION; /*-----------------------------------------------------------*/ /* 头部信息大小 */ static const size_t xHeapStructSize = ( sizeof( BlockLink_t ) + ( ( size_t ) ( portBYTE_ALIGNMENT - 1 ) ) ) & ~( ( size_t ) portBYTE_ALIGNMENT_MASK ); /* 定义内存池内存起始与内存尾部,尾部为指针 */ PRIVILEGED_DATA static BlockLink_t xStart; PRIVILEGED_DATA static BlockLink_t * pxEnd = NULL; /* 内存池剩余空间大小 */ PRIVILEGED_DATA static size_t xFreeBytesRemaining = ( size_t ) 0U; /* 历史最小空间信息 */ PRIVILEGED_DATA static size_t xMinimumEverFreeBytesRemaining = ( size_t ) 0U; /* 成功分配内存次数 */ PRIVILEGED_DATA static size_t xNumberOfSuccessfulAllocations = ( size_t ) 0U; /* 成功释放内存次数 */ PRIVILEGED_DATA static size_t xNumberOfSuccessfulFrees = ( size_t ) 0U; /*-----------------------------------------------------------*/ void * pvPortMalloc( size_t xWantedSize ) { BlockLink_t * pxBlock; BlockLink_t * pxPreviousBlock; BlockLink_t * pxNewBlockLink; void * pvReturn = NULL; size_t xAdditionalRequiredSize; if( xWantedSize > 0 ) { /* 检测申请内存叠加头部信息是否溢出 */ if( heapADD_WILL_OVERFLOW( xWantedSize, xHeapStructSize ) == 0 ) { /*申请的内存叠加头部信息大小*/ xWantedSize += xHeapStructSize; /* 这里与heap1一样,内存向上8字节倍数对齐,细节可以回看heap1 */ if( ( xWantedSize & 
portBYTE_ALIGNMENT_MASK ) != 0x00 ) { xAdditionalRequiredSize = portBYTE_ALIGNMENT - ( xWantedSize & portBYTE_ALIGNMENT_MASK ); if( heapADD_WILL_OVERFLOW( xWantedSize, xAdditionalRequiredSize ) == 0 ) { xWantedSize += xAdditionalRequiredSize; } else { xWantedSize = 0; } } else { mtCOVERAGE_TEST_MARKER(); } } else { xWantedSize = 0; } } else { mtCOVERAGE_TEST_MARKER(); } /* 挂起调度器 */ vTaskSuspendAll(); { if( pxEnd == NULL ) { /* pxEnd未指向,则进行内存池初始化 */ prvHeapInit(); } else { mtCOVERAGE_TEST_MARKER(); } /* 检查申请的内存块是否已分配 */ if( heapBLOCK_SIZE_IS_VALID( xWantedSize ) != 0 ) { /* 若容量足够,则可继续申请 */ if( ( xWantedSize > 0 ) && ( xWantedSize <= xFreeBytesRemaining ) ) { /* 设定块节点指向 */ pxPreviousBlock = &xStart; pxBlock = heapPROTECT_BLOCK_POINTER( xStart.pxNextFreeBlock ); heapVALIDATE_BLOCK_POINTER( pxBlock ); /* 从start节点的下一块节点开始遍历链表,寻找空间足够的块,End节点为NULL */ while( ( pxBlock->xBlockSize < xWantedSize ) && ( pxBlock->pxNextFreeBlock != heapPROTECT_BLOCK_POINTER( NULL ) ) ) { pxPreviousBlock = pxBlock; pxBlock = heapPROTECT_BLOCK_POINTER( pxBlock->pxNextFreeBlock ); heapVALIDATE_BLOCK_POINTER( pxBlock ); } /* 遍历到尾部节点则认为无空间申请 */ if( pxBlock != pxEnd ) { /* 申请内存的节点指向刚刚遍历到的块节点+头部信息大小后的地址 */ pvReturn = ( void * ) ( ( ( uint8_t * ) heapPROTECT_BLOCK_POINTER( pxPreviousBlock->pxNextFreeBlock ) ) + xHeapStructSize ); heapVALIDATE_BLOCK_POINTER( pvReturn ); /* 此时该块当作已分配,因此链表中基于此块索引改变链表链接到下一个节点 */ pxPreviousBlock->pxNextFreeBlock = pxBlock->pxNextFreeBlock; configASSERT( heapSUBTRACT_WILL_UNDERFLOW( pxBlock->xBlockSize, xWantedSize ) == 0 ); /* 申请的块空间减去所需若有两倍头部信息以上,则把该块进行拆分,充足说明至少分配一个头部信息且带8字节块大小剩余空间 */ if( ( pxBlock->xBlockSize - xWantedSize ) > heapMINIMUM_BLOCK_SIZE ) { /* 分出一个新块指向新分配块之后 */ pxNewBlockLink = ( void * ) ( ( ( uint8_t * ) pxBlock ) + xWantedSize ); configASSERT( ( ( ( size_t ) pxNewBlockLink ) & portBYTE_ALIGNMENT_MASK ) == 0 ); /* 设定新块的空间为之前可分配块的剩余空间 */ pxNewBlockLink->xBlockSize = pxBlock->xBlockSize - xWantedSize; /* 申请块的空间大小为分配的空间大小 */ pxBlock->xBlockSize = xWantedSize; 
/* 将新快插入到内存池链表中,此处与heap2有区别,heap2会进行一次剩余空间大小排序, 而因为heap4带合并功能,因此不需要剩余空间插入排序 */ pxNewBlockLink->pxNextFreeBlock = pxPreviousBlock->pxNextFreeBlock; pxPreviousBlock->pxNextFreeBlock = heapPROTECT_BLOCK_POINTER( pxNewBlockLink ); } else { mtCOVERAGE_TEST_MARKER(); } /* 更新内存池剩余空间 */ xFreeBytesRemaining -= pxBlock->xBlockSize; /* 记录内存池剩余空间历史最小值,即使剩余1K,也只是各节点加起来1K,不代表可以分配连续1K内存 */ if( xFreeBytesRemaining < xMinimumEverFreeBytesRemaining ) { xMinimumEverFreeBytesRemaining = xFreeBytesRemaining; } else { mtCOVERAGE_TEST_MARKER(); } heapALLOCATE_BLOCK( pxBlock ); /* 若该块已分配,则该块断开链表联系,下一个块节点设为NULL */ pxBlock->pxNextFreeBlock = NULL; /* 记录成功分配次数 */ xNumberOfSuccessfulAllocations++; } else { mtCOVERAGE_TEST_MARKER(); } } else { mtCOVERAGE_TEST_MARKER(); } } else { mtCOVERAGE_TEST_MARKER(); } traceMALLOC( pvReturn, xWantedSize ); } /* 恢复调度器 */ ( void ) xTaskResumeAll(); #if ( configUSE_MALLOC_FAILED_HOOK == 1 ) { if( pvReturn == NULL ) { vApplicationMallocFailedHook(); } else { mtCOVERAGE_TEST_MARKER(); } } #endif /* if ( configUSE_MALLOC_FAILED_HOOK == 1 ) */ configASSERT( ( ( ( size_t ) pvReturn ) & ( size_t ) portBYTE_ALIGNMENT_MASK ) == 0 ); return pvReturn; } /*-----------------------------------------------------------*/ void vPortFree( void * pv ) { uint8_t * puc = ( uint8_t * ) pv; BlockLink_t * pxLink; if( pv != NULL ) { /* 基于待释放空间索引到头部 */ puc -= xHeapStructSize; /* 指向待释放空间的头部 */ pxLink = ( void * ) puc; heapVALIDATE_BLOCK_POINTER( pxLink ); configASSERT( heapBLOCK_IS_ALLOCATED( pxLink ) != 0 ); configASSERT( pxLink->pxNextFreeBlock == NULL ); /* 若是该块有已分配标记则进如释放流程 */ if( heapBLOCK_IS_ALLOCATED( pxLink ) != 0 ) { /* 分配过的块指向的下一个节点为NULL,符合已分配逻辑,继续进行释放 */ if( pxLink->pxNextFreeBlock == NULL ) { /* 将头部信息的xBlockSize的最高位清除,标记为待分配状态 */ heapFREE_BLOCK( pxLink ); #if ( configHEAP_CLEAR_MEMORY_ON_FREE == 1 ) { if( heapSUBTRACT_WILL_UNDERFLOW( pxLink->xBlockSize, xHeapStructSize ) == 0 ) { /* 将释放块的空间全部初始为0x00 */ ( void ) memset( puc + xHeapStructSize, 0, pxLink->xBlockSize - 
xHeapStructSize );
                    }
                }
                #endif

                /* Suspend the scheduler */
                vTaskSuspendAll();
                {
                    /* Add the freed block's size back to the remaining free space */
                    xFreeBytesRemaining += pxLink->xBlockSize;
                    traceFREE( pv, pxLink->xBlockSize );
                    /* Insert the freed block back into the free list. Unlike heap_2,
                     * the heap_4 version of this helper also merges adjacent free blocks */
                    prvInsertBlockIntoFreeList( ( ( BlockLink_t * ) pxLink ) );
                    xNumberOfSuccessfulFrees++;
                }
                /* Resume the scheduler */
                ( void ) xTaskResumeAll();
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
}
/*-----------------------------------------------------------*/

size_t xPortGetFreeHeapSize( void )
{
    /* Return the free space remaining in the memory pool */
    return xFreeBytesRemaining;
}
/*-----------------------------------------------------------*/

size_t xPortGetMinimumEverFreeHeapSize( void )
{
    /* Return the lowest amount of free space the pool has ever had */
    return xMinimumEverFreeBytesRemaining;
}
/*-----------------------------------------------------------*/

void vPortInitialiseBlocks( void )
{
}
/*-----------------------------------------------------------*/

/* Calloc: allocate xNum * xSize bytes and initialise them to 0x00 */
void * pvPortCalloc( size_t xNum,
                     size_t xSize )
{
    void * pv = NULL;

    /* Only allocate when xNum * xSize does not overflow size_t */
    if( heapMULTIPLY_WILL_OVERFLOW( xNum, xSize ) == 0 )
    {
        pv = pvPortMalloc( xNum * xSize );

        if( pv != NULL )
        {
            ( void ) memset( pv, 0, xNum * xSize );
        }
    }

    return pv;
}
/*-----------------------------------------------------------*/

static void prvHeapInit( void ) /* PRIVILEGED_FUNCTION */
{
    /* Pointer to the first free block */
    BlockLink_t * pxFirstFreeBlock;
    portPOINTER_SIZE_TYPE uxStartAddress, uxEndAddress;
    size_t xTotalHeapSize = configTOTAL_HEAP_SIZE;

    /* Start from the first address of the memory pool */
    uxStartAddress = ( portPOINTER_SIZE_TYPE ) ucHeap;

    /* If the pool start is not aligned, round it up to the next
     * portBYTE_ALIGNMENT boundary and shrink the usable size by the bytes skipped */
    if( ( uxStartAddress & portBYTE_ALIGNMENT_MASK ) != 0 )
    {
        uxStartAddress += ( portBYTE_ALIGNMENT - 1 );
        uxStartAddress &= ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK );
        xTotalHeapSize -= ( size_t ) ( uxStartAddress - ( portPOINTER_SIZE_TYPE ) ucHeap );
    }

    #if ( configENABLE_HEAP_PROTECTOR == 1 )
    {
        /* Obtain an application-supplied random canary used to protect block pointers */
        vApplicationGetRandomHeapCanary( &( xHeapCanary ) );
    }
    #endif

    /* xStart is a global list-head node; its next pointer refers to the aligned start of the pool */
    xStart.pxNextFreeBlock = ( void * ) heapPROTECT_BLOCK_POINTER( uxStartAddress );
    /* The xStart node itself holds no space */
    xStart.xBlockSize = ( size_t ) 0;

    /* The end marker sits at the top of the pool: take the aligned start plus the
     * total size, step back by one block header, then align the result downwards */
    uxEndAddress = uxStartAddress + ( portPOINTER_SIZE_TYPE ) xTotalHeapSize;
    uxEndAddress -= ( portPOINTER_SIZE_TYPE ) xHeapStructSize;
    uxEndAddress &= ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK );
    /* pxEnd points at the end-marker address just computed */
    pxEnd = ( BlockLink_t * ) uxEndAddress;
    /* The end marker holds no space */
    pxEnd->xBlockSize = 0;
    /* The end marker has no next node */
    pxEnd->pxNextFreeBlock = heapPROTECT_BLOCK_POINTER( NULL );

    /* The first free block starts at the aligned pool address */
    pxFirstFreeBlock = ( BlockLink_t * ) uxStartAddress;
    /* It spans everything from the aligned start up to the end marker,
     * so after initialisation the heap is one large free block */
    pxFirstFreeBlock->xBlockSize = ( size_t ) ( uxEndAddress - ( portPOINTER_SIZE_TYPE ) pxFirstFreeBlock );
    /* Link to the end marker, giving xStart -> memory pool -> pxEnd */
    pxFirstFreeBlock->pxNextFreeBlock = heapPROTECT_BLOCK_POINTER( pxEnd );

    /* Initialise the historical minimum of free space */
    xMinimumEverFreeBytesRemaining = pxFirstFreeBlock->xBlockSize;
    /* Initialise the free space remaining in the pool */
    xFreeBytesRemaining = pxFirstFreeBlock->xBlockSize;
}
/*-----------------------------------------------------------*/

static void prvInsertBlockIntoFreeList( BlockLink_t * pxBlockToInsert ) /* PRIVILEGED_FUNCTION */
{
    /* Iterator over the free list */
    BlockLink_t * pxIterator;
    uint8_t * puc;

    /* Walk the address-ordered list until the iterator is the last free block
     * located below the block being inserted */
    for( pxIterator = &xStart; heapPROTECT_BLOCK_POINTER( pxIterator->pxNextFreeBlock ) < pxBlockToInsert; pxIterator = heapPROTECT_BLOCK_POINTER( pxIterator->pxNextFreeBlock ) )
    {
    }

    /* xStart lives outside the pool, so only validate the iterator when it is a real heap block */
    if( pxIterator != &xStart )
    {
        heapVALIDATE_BLOCK_POINTER( pxIterator );
    }

    /* Address of the iterator block */
    puc = ( uint8_t * ) pxIterator;

    /* If the preceding free block ends exactly where the inserted block begins,
     * merge the two into one contiguous block. The list links stay unchanged in this case */
    if( ( puc + pxIterator->xBlockSize ) == ( uint8_t * ) pxBlockToInsert )
    {
        pxIterator->xBlockSize += pxBlockToInsert->xBlockSize;
        pxBlockToInsert = pxIterator;
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }

    /* Address of the (possibly merged) inserted block */
    puc = ( uint8_t * ) pxBlockToInsert;

    /* If the inserted block ends exactly where the following free block begins,
     * merge the two into one contiguous block. The list is singly linked, so
     * only the next pointer has to be relinked */
    if( ( puc + pxBlockToInsert->xBlockSize ) == ( uint8_t * ) heapPROTECT_BLOCK_POINTER( pxIterator->pxNextFreeBlock ) )
    {
        /* Merging with the following block is only allowed when it is not the end marker */
        if( heapPROTECT_BLOCK_POINTER( pxIterator->pxNextFreeBlock ) != pxEnd )
        {
            /* Form one big block from the two blocks. */
            pxBlockToInsert->xBlockSize += heapPROTECT_BLOCK_POINTER( pxIterator->pxNextFreeBlock )->xBlockSize;
            pxBlockToInsert->pxNextFreeBlock = heapPROTECT_BLOCK_POINTER( pxIterator->pxNextFreeBlock )->pxNextFreeBlock;
        }
        else
        {
            /* Otherwise the inserted block points at the end marker */
            pxBlockToInsert->pxNextFreeBlock = heapPROTECT_BLOCK_POINTER( pxEnd );
        }
    }
    else
    {
        /* No merge: the inserted block points at the block that followed the iterator */
        pxBlockToInsert->pxNextFreeBlock = pxIterator->pxNextFreeBlock;
    }

    /* If the block was not merged into the preceding block (a plain insert, or a
     * merge with the following block only), the preceding block must now point at the inserted block */
    if( pxIterator != pxBlockToInsert )
    {
        pxIterator->pxNextFreeBlock = heapPROTECT_BLOCK_POINTER( pxBlockToInsert );
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }
}
/*-----------------------------------------------------------*/

void vPortGetHeapStats( HeapStats_t * pxHeapStats )
{
    BlockLink_t * pxBlock;
    size_t xBlocks = 0, xMaxSize = 0, xMinSize = portMAX_DELAY;

    /* Suspend the scheduler */
    vTaskSuspendAll();
    {
        /* Get the first free block in the pool */
        pxBlock = heapPROTECT_BLOCK_POINTER( xStart.pxNextFreeBlock );

        /* Walk the free list to find the largest and smallest free blocks */
        if( pxBlock != NULL )
        {
            while( pxBlock != pxEnd )
            {
                /* Count the free blocks */
                xBlocks++;

                if( pxBlock->xBlockSize > xMaxSize )
                {
                    xMaxSize = pxBlock->xBlockSize;
                }

                if( pxBlock->xBlockSize < xMinSize )
                {
                    xMinSize = pxBlock->xBlockSize;
                }

                pxBlock = heapPROTECT_BLOCK_POINTER( pxBlock->pxNextFreeBlock );
            }
        }
    }
    /* Resume the scheduler */
    ( void ) xTaskResumeAll();

    /* Write the results into the caller's structure */
    pxHeapStats->xSizeOfLargestFreeBlockInBytes = xMaxSize;
    pxHeapStats->xSizeOfSmallestFreeBlockInBytes = xMinSize;
    pxHeapStats->xNumberOfFreeBlocks = xBlocks;

    /* Enter the critical section */
    taskENTER_CRITICAL();
    {
        /* Free space remaining in the pool */
        pxHeapStats->xAvailableHeapSpaceInBytes = xFreeBytesRemaining;
        /* Number of successful allocations */
        pxHeapStats->xNumberOfSuccessfulAllocations = xNumberOfSuccessfulAllocations;
        /* Number of successful frees */
        pxHeapStats->xNumberOfSuccessfulFrees = xNumberOfSuccessfulFrees;
        /* Historical minimum of free space in the pool */
        pxHeapStats->xMinimumEverFreeBytesRemaining = xMinimumEverFreeBytesRemaining;
    }
    /* Exit the critical section */
    taskEXIT_CRITICAL();
}
/*-----------------------------------------------------------*/

void vPortHeapResetState( void )
{
    /* Reset the end marker */
    pxEnd = NULL;

    /* Reset the bookkeeping counters */
    xFreeBytesRemaining = ( size_t ) 0U;
    xMinimumEverFreeBytesRemaining = ( size_t ) 0U;
    xNumberOfSuccessfulAllocations = ( size_t ) 0U;
    xNumberOfSuccessfulFrees = ( size_t ) 0U;
}
/*-----------------------------------------------------------*/
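The merge behaviour of prvInsertBlockIntoFreeList is easier to follow when it runs on a PC. The following is only a minimal host-side sketch, not FreeRTOS code: DemoBlock_t, xDemoPool, demoBlockAt, demoInit, demoInsert and demoCountFreeBlocks are hypothetical names made up for illustration, the pool is an array of header-sized slots, and the heap protector macros are left out. It keeps the same three steps: find the insertion point by address, merge with the preceding block, then merge with the following block.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for BlockLink_t (not the FreeRTOS type). */
typedef struct DemoBlock
{
    struct DemoBlock * pxNext; /* next free block, ordered by address */
    size_t xSize;              /* size of this free block in bytes */
} DemoBlock_t;

#define demoPOOL_SLOTS    16U

/* The pool is an array of header-sized slots, so every block is aligned. */
static DemoBlock_t xDemoPool[ demoPOOL_SLOTS ];
static DemoBlock_t xDemoStart;   /* list head, lives outside the pool */
static DemoBlock_t * pxDemoEnd;  /* end marker, lives in the last slot */

/* Build a block header at slot uxSlot that covers uxSpan slots. */
static DemoBlock_t * demoBlockAt( size_t uxSlot, size_t uxSpan )
{
    assert( ( uxSlot + uxSpan ) <= demoPOOL_SLOTS );
    xDemoPool[ uxSlot ].pxNext = NULL;
    xDemoPool[ uxSlot ].xSize = uxSpan * sizeof( DemoBlock_t );
    return &xDemoPool[ uxSlot ];
}

/* Start with an empty free list: xDemoStart -> end marker. */
static void demoInit( void )
{
    pxDemoEnd = demoBlockAt( demoPOOL_SLOTS - 1U, 0U );
    xDemoStart.pxNext = pxDemoEnd;
    xDemoStart.xSize = 0U;
}

/* Address-ordered insert with merging, mirroring the heap_4 logic. */
static void demoInsert( DemoBlock_t * pxInsert )
{
    DemoBlock_t * pxIt;

    /* Stop at the last free block located below pxInsert. */
    for( pxIt = &xDemoStart; pxIt->pxNext < pxInsert; pxIt = pxIt->pxNext )
    {
    }

    /* Preceding block ends where pxInsert starts: grow the preceding block. */
    if( ( ( uint8_t * ) pxIt + pxIt->xSize ) == ( uint8_t * ) pxInsert )
    {
        pxIt->xSize += pxInsert->xSize;
        pxInsert = pxIt;
    }

    /* pxInsert ends where the following block starts: absorb it,
     * unless the following block is the end marker. */
    if( ( ( uint8_t * ) pxInsert + pxInsert->xSize ) == ( uint8_t * ) pxIt->pxNext )
    {
        if( pxIt->pxNext != pxDemoEnd )
        {
            pxInsert->xSize += pxIt->pxNext->xSize;
            pxInsert->pxNext = pxIt->pxNext->pxNext;
        }
        else
        {
            pxInsert->pxNext = pxDemoEnd;
        }
    }
    else
    {
        pxInsert->pxNext = pxIt->pxNext;
    }

    /* Relink the preceding block unless it already is the merged block. */
    if( pxIt != pxInsert )
    {
        pxIt->pxNext = pxInsert;
    }
}

/* Count the free blocks between the list head and the end marker. */
static size_t demoCountFreeBlocks( void )
{
    size_t xCount = 0U;
    const DemoBlock_t * pxBlock;

    for( pxBlock = xDemoStart.pxNext; pxBlock != pxDemoEnd; pxBlock = pxBlock->pxNext )
    {
        xCount++;
    }

    return xCount;
}
```

A possible way to exercise it: after demoInit(), insert a 4-slot block at slot 0 and another at slot 8, which leaves two separate free blocks. Inserting a third 4-slot block at slot 4 fills the gap, so both merge branches run and the list collapses to a single 12-slot block.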

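5. heap_5.c Memory Management

Memory management API: pvPortMalloc(); vPortFree();

heap_5 memory management: the heap_5.c source, with the core code excerpted in full and every detail annotated according to the author's own understanding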

- heap_5.c memory management

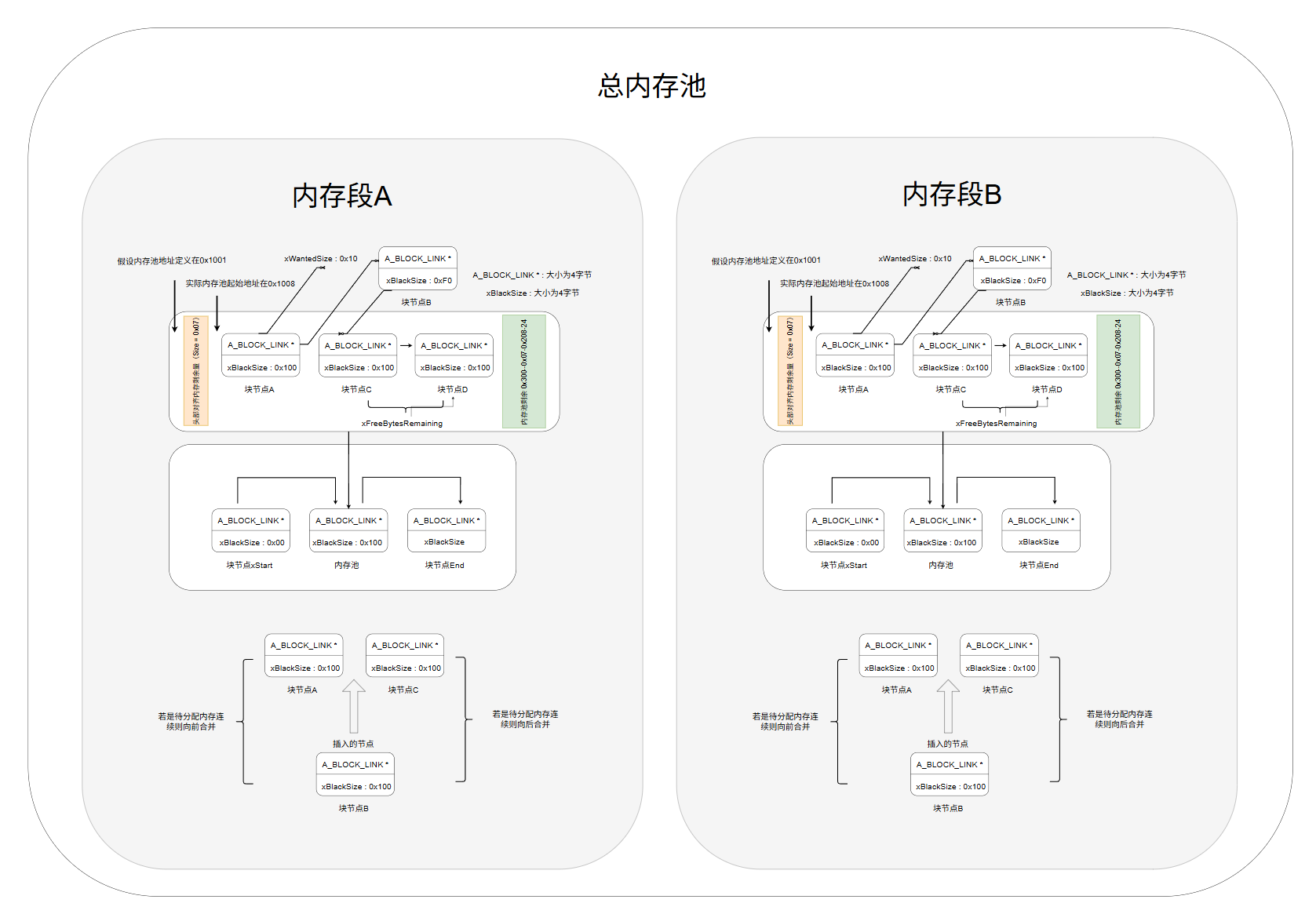

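5.1: Diagram of heap_5 memory management. The mechanism is the same as heap_4, with added support for multiple memory regions.

5.2: Full walkthrough of the heap_5.c source. This code is the core implementation of the FreeRTOS heap_5 scheme. It extends heap_4's first-fit allocator with merging of adjacent free blocks so that it can manage several physically non-contiguous memory regions. The key mechanism is a user-supplied array of HeapRegion_t structures passed to vPortDefineHeapRegions(). That call must happen before the first pvPortMalloc(), which in practice means before any kernel object is created. The array lists the regions from lowest to highest start address and ends with an entry whose address is NULL and whose size is 0. vPortDefineHeapRegions() initialises the separate physical blocks (for example on-chip SRAM and external SDRAM) and links them into one logical, address-ordered, singly linked free list. After that, allocation, freeing, merging and statistics work the same way as in heap_4: the free list is modified with the scheduler suspended, and adjacent free blocks are merged to limit fragmentation. heap_5 is therefore the most flexible of the five heap schemes. It keeps heap_4's resistance to fragmentation and its statistics, and adds the ability to manage a non-contiguous physical memory layout, which suits systems that use external memory or have several RAM banks.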
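The region array handed to vPortDefineHeapRegions() has two rules that are easy to get wrong: entries must be listed from lowest to highest start address, and the array must end with a NULL / 0 entry. The following is a minimal host-side sketch of those rules, assuming nothing beyond standard C. DemoHeapRegion_t is a local mirror of HeapRegion_t so the example builds without FreeRTOS headers, and demoCheckRegions, ucDemoBacking, xDemoRegions and xDemoReversed are hypothetical names for illustration only.

```c
#include <stddef.h>
#include <stdint.h>

/* Local mirror of FreeRTOS's HeapRegion_t, so this builds on a host. */
typedef struct DemoHeapRegion
{
    uint8_t * pucStartAddress; /* first byte of the region */
    size_t xSizeInBytes;       /* region length; 0 terminates the array */
} DemoHeapRegion_t;

/* One backing buffer stands in for two separate RAM banks. */
static uint8_t ucDemoBacking[ 1024 ];

/* Valid layout: ascending addresses, then the terminator entry. */
static const DemoHeapRegion_t xDemoRegions[] =
{
    { &ucDemoBacking[ 0 ],   256 },
    { &ucDemoBacking[ 512 ], 256 },
    { NULL,                  0   }
};

/* Invalid layout: the higher region is listed first. */
static const DemoHeapRegion_t xDemoReversed[] =
{
    { &ucDemoBacking[ 512 ], 256 },
    { &ucDemoBacking[ 0 ],   256 },
    { NULL,                  0   }
};

/* Walk the array the way a region-based heap would: stop at the entry
 * with size 0 and reject any region that starts below the end of the
 * previous one. Returns the total bytes, or 0 for a bad layout. */
static size_t demoCheckRegions( const DemoHeapRegion_t * pxRegions )
{
    size_t xTotal = 0;
    uintptr_t uxPreviousEnd = 0;

    for( ; pxRegions->xSizeInBytes > 0; pxRegions++ )
    {
        uintptr_t uxStart = ( uintptr_t ) pxRegions->pucStartAddress;

        if( uxStart < uxPreviousEnd )
        {
            return 0; /* out of order or overlapping */
        }

        uxPreviousEnd = uxStart + pxRegions->xSizeInBytes;
        xTotal += pxRegions->xSizeInBytes;
    }

    return xTotal;
}
```

On a target, an array with the same shape but declared with the real HeapRegion_t type would be passed once to vPortDefineHeapRegions() before any allocation. A pre-flight check like demoCheckRegions is only a convenience for catching ordering mistakes early.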