// Include the required OpenCV headers

#include <opencv2/core.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include <opencv2/features2d.hpp>

// Include the vector and map containers

#include <vector>

#include <map>

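// Include the I/O stream library, <sstream> for the ostringstream used in Legende, and <cstdlib> for rand()

#include <iostream>

#include <sstream>

#include <cstdlib>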

// Use the std and cv namespaces so their types and functions can be used without repeatedly typing std:: and cv::

using namespace std;

using namespace cv;

// Helper that prints the program's usage instructions

static void help(char** argv)

{

cout << "\n This program demonstrates how to use BLOB detection to detect and filter regions \n"

<< "Usage: \n"

<< argv[0]

<< " <image1(detect_blob.png as default)>\n"

<< "Press a key when image window is active to change descriptor\n";

}

// Legende builds a text caption describing which blob filters are enabled in the SimpleBlobDetector parameters pAct

static String Legende(SimpleBlobDetector::Params &pAct)

{

// Start with an empty string s that will hold the generated caption

String s = "";

// If area filtering (filterByArea) is enabled

if (pAct.filterByArea)

{

// Convert minArea and maxArea to strings and start the caption with the area range

String inf = static_cast<const ostringstream&>(ostringstream() << pAct.minArea).str();

String sup = static_cast<const ostringstream&>(ostringstream() << pAct.maxArea).str();

s = " Area range [" + inf + " to " + sup + "]";

}

// If circularity filtering (filterByCircularity) is enabled

if (pAct.filterByCircularity)

{

// Convert minCircularity and maxCircularity to strings

String inf = static_cast<const ostringstream&>(ostringstream() << pAct.minCircularity).str();

String sup = static_cast<const ostringstream&>(ostringstream() << pAct.maxCircularity).str();

// If s is still empty, assign the text directly; otherwise append it with "AND" as the separator

if (s.length() == 0)

s = " Circularity range [" + inf + " to " + sup + "]";

else

s += " AND Circularity range [" + inf + " to " + sup + "]";

}

// If color filtering (filterByColor) is enabled

if (pAct.filterByColor)

{

// Cast blobColor (a uchar) to int so it prints as a number rather than a character, then convert it to a string

String inf = static_cast<const ostringstream&>(ostringstream() << (int)pAct.blobColor).str();

// If s is still empty, assign the text directly; otherwise append it with "AND" as the separator

if (s.length() == 0)

s = " Blob color " + inf;

else

s += " AND Blob color " + inf;

}

// If convexity filtering (filterByConvexity) is enabled

if (pAct.filterByConvexity)

{

// Convert minConvexity and maxConvexity to strings

String inf = static_cast<const ostringstream&>(ostringstream() << pAct.minConvexity).str();

String sup = static_cast<const ostringstream&>(ostringstream() << pAct.maxConvexity).str();

// If s is still empty, assign the text directly; otherwise append it with "AND" as the separator

if (s.length() == 0)

s = " Convexity range [" + inf + " to " + sup + "]";

else

s += " AND Convexity range [" + inf + " to " + sup + "]";

}

// If inertia-ratio filtering (filterByInertia) is enabled

if (pAct.filterByInertia)

{

// Convert minInertiaRatio and maxInertiaRatio to strings

String inf = static_cast<const ostringstream&>(ostringstream() << pAct.minInertiaRatio).str();

String sup = static_cast<const ostringstream&>(ostringstream() << pAct.maxInertiaRatio).str();

// If s is still empty, assign the text directly; otherwise append it with "AND" as the separator

if (s.length() == 0)

s = " Inertia ratio range [" + inf + " to " + sup + "]";

else

s += " AND Inertia ratio range [" + inf + " to " + sup + "]";

}

// Return the generated caption describing the blob filters

return s;

}

// Main function

int main(int argc, char *argv[])

{

// Name of the image file to load

String fileName;

// Command-line parser: an optional positional input image (default detect_blob.png) and a -h/--help flag

cv::CommandLineParser parser(argc, argv, "{@input |detect_blob.png| }{h help | | }");

// With -h or --help, print the usage and exit

if (parser.has("h"))

{

help(argv);

return 0;

}

// Read the input file name; "detect_blob.png" is used when none is given

fileName = parser.get<string>("@input");

// Locate the file with samples::findFile and load it as a 3-channel color image

Mat img = imread(samples::findFile(fileName), IMREAD_COLOR);

// If loading failed (missing or unreadable file), print an error and exit

if (img.empty())

{

cout << "Image " << fileName << " is empty or cannot be found\n";

return 1;

}

// Default SimpleBlobDetector parameters; every detector below starts from a copy of these

SimpleBlobDetector::Params pDefaultBLOB;

// Parameters of the default blob detector

// Step between successive binarization thresholds

pDefaultBLOB.thresholdStep = 10;

// Lowest binarization threshold

pDefaultBLOB.minThreshold = 10;

// Upper bound of the binarization thresholds

pDefaultBLOB.maxThreshold = 220;

// Minimum number of binary images (threshold levels) in which a blob must be found

pDefaultBLOB.minRepeatability = 2;

// Minimum distance between blob centers

pDefaultBLOB.minDistBetweenBlobs = 10;

pDefaultBLOB.filterByColor = false; // no color filtering

pDefaultBLOB.blobColor = 0; // blob color to match when color filtering is on (0 = dark)

pDefaultBLOB.filterByArea = false; // no area filtering

pDefaultBLOB.minArea = 25; // minimum area in pixels

pDefaultBLOB.maxArea = 5000; // maximum area in pixels

pDefaultBLOB.filterByCircularity = false; // no circularity filtering

pDefaultBLOB.minCircularity = 0.9f; // minimum circularity

pDefaultBLOB.maxCircularity = (float)1e37; // a huge value, i.e. effectively no upper bound

pDefaultBLOB.filterByInertia = false; // no inertia-ratio filtering

pDefaultBLOB.minInertiaRatio = 0.1f; // minimum inertia ratio

pDefaultBLOB.maxInertiaRatio = (float)1e37; // effectively no upper bound

pDefaultBLOB.filterByConvexity = false; // no convexity filtering

pDefaultBLOB.minConvexity = 0.95f; // minimum convexity

pDefaultBLOB.maxConvexity = (float)1e37; // effectively no upper bound

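// Detector type names, one per detector configuration

vector<String> typeDesc;

// Parameter sets, one per detector

vector<SimpleBlobDetector::Params> pBLOB;

// Iterator over the parameter sets

vector<SimpleBlobDetector::Params>::iterator itBLOB;

// Color palette used to draw the detected blobs in different colors

vector< Vec3b > palette;

// Fill the palette with random colors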

for (int i = 0; i<65536; i++)

{

uchar c1 = (uchar)rand();

uchar c2 = (uchar)rand();

uchar c3 = (uchar)rand();

palette.push_back(Vec3b(c1, c2, c3));

}

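// Print the usage information

help(argv);

// The code below builds blob detectors with different parameters and shows their results

// Six detector configurations are set up

// The first detector, for example, should keep every blob

// Each configuration starts from the default parameters and then enables one filter

// Push the "BLOB" type name for this detector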

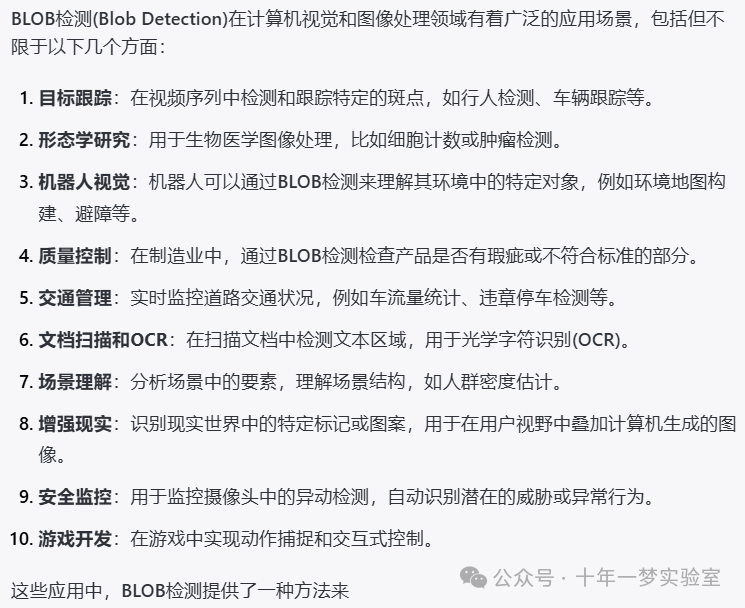

typeDesc.push_back("BLOB"); // see the SimpleBlobDetector class in the OpenCV documentation

pBLOB.push_back(pDefaultBLOB); // start from the default parameters

pBLOB.back().filterByArea = true; // enable area filtering

pBLOB.back().minArea = 1; // minimum area

pBLOB.back().maxArea = float(img.rows * img.cols); // maximum area: the whole image, so effectively every blob passes

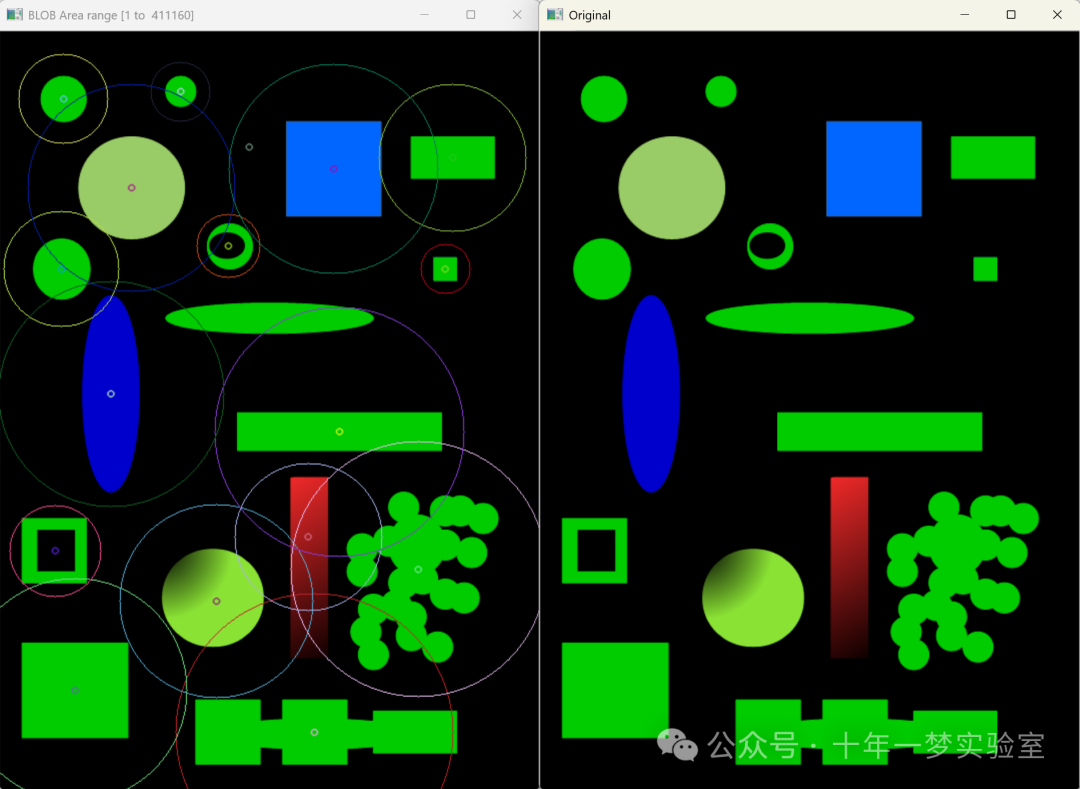

// Second detector: keep blobs whose area is between 500 and 2900 pixels

typeDesc.push_back("BLOB"); // add the "BLOB" type name

pBLOB.push_back(pDefaultBLOB); // start from the default parameters

pBLOB.back().filterByArea = true; // enable area filtering

pBLOB.back().minArea = 500; // minimum area: 500 pixels

pBLOB.back().maxArea = 2900; // maximum area: 2900 pixels

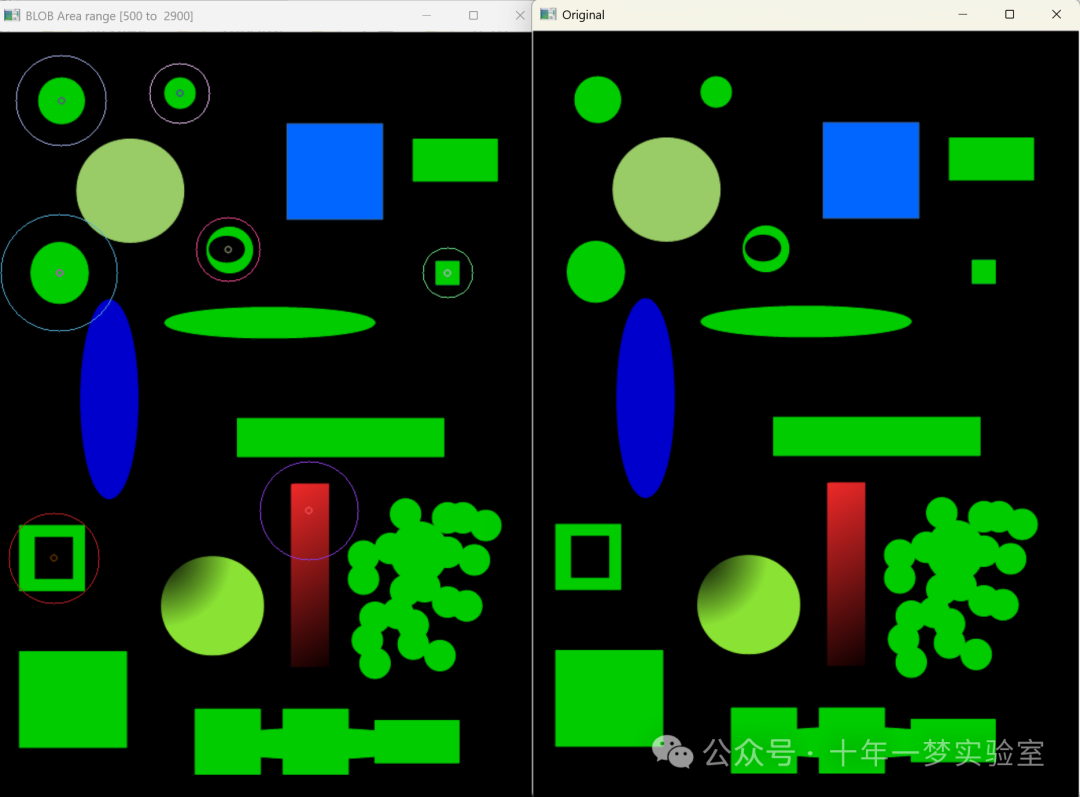

// Third detector: keep only round blobs

typeDesc.push_back("BLOB"); // add the "BLOB" type name

pBLOB.push_back(pDefaultBLOB); // start from the default parameters

pBLOB.back().filterByCircularity = true; // enable circularity filtering (default minCircularity 0.9)

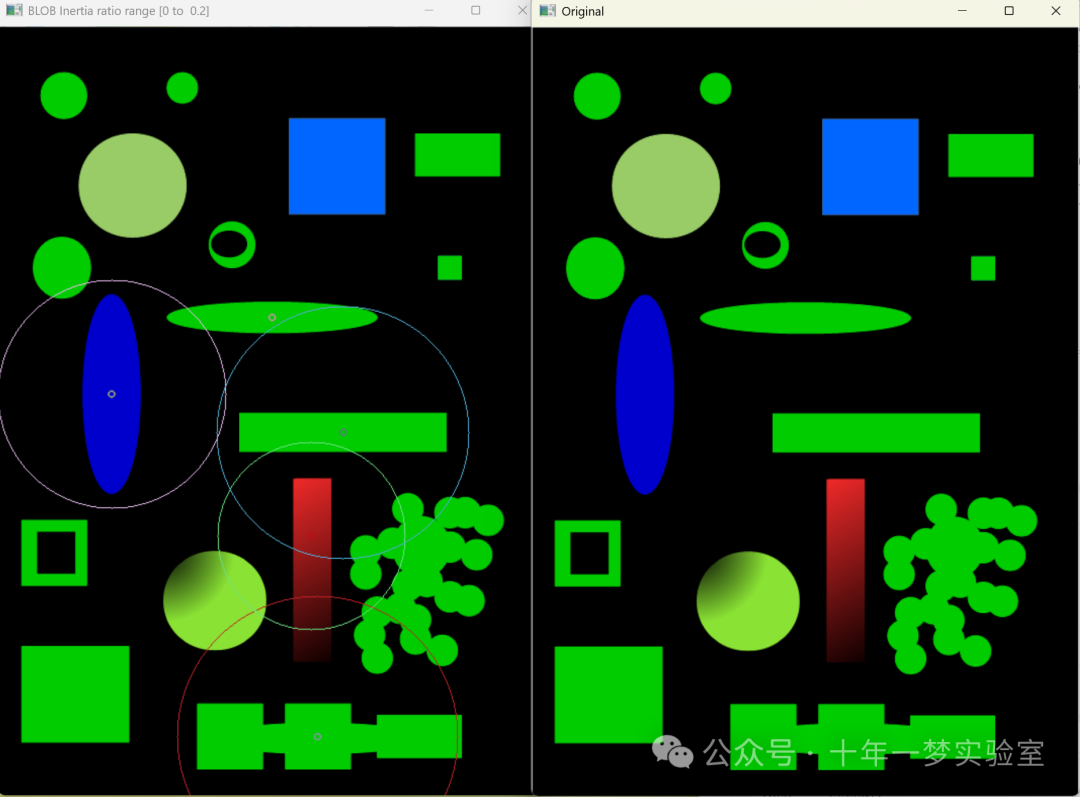

// Fourth detector: filter by inertia ratio, keeping elongated blobs

typeDesc.push_back("BLOB"); // add the "BLOB" type name

pBLOB.push_back(pDefaultBLOB); // start from the default parameters

pBLOB.back().filterByInertia = true; // enable inertia-ratio filtering

pBLOB.back().minInertiaRatio = 0; // minimum inertia ratio: 0

pBLOB.back().maxInertiaRatio = (float)0.2; // maximum inertia ratio: 0.2

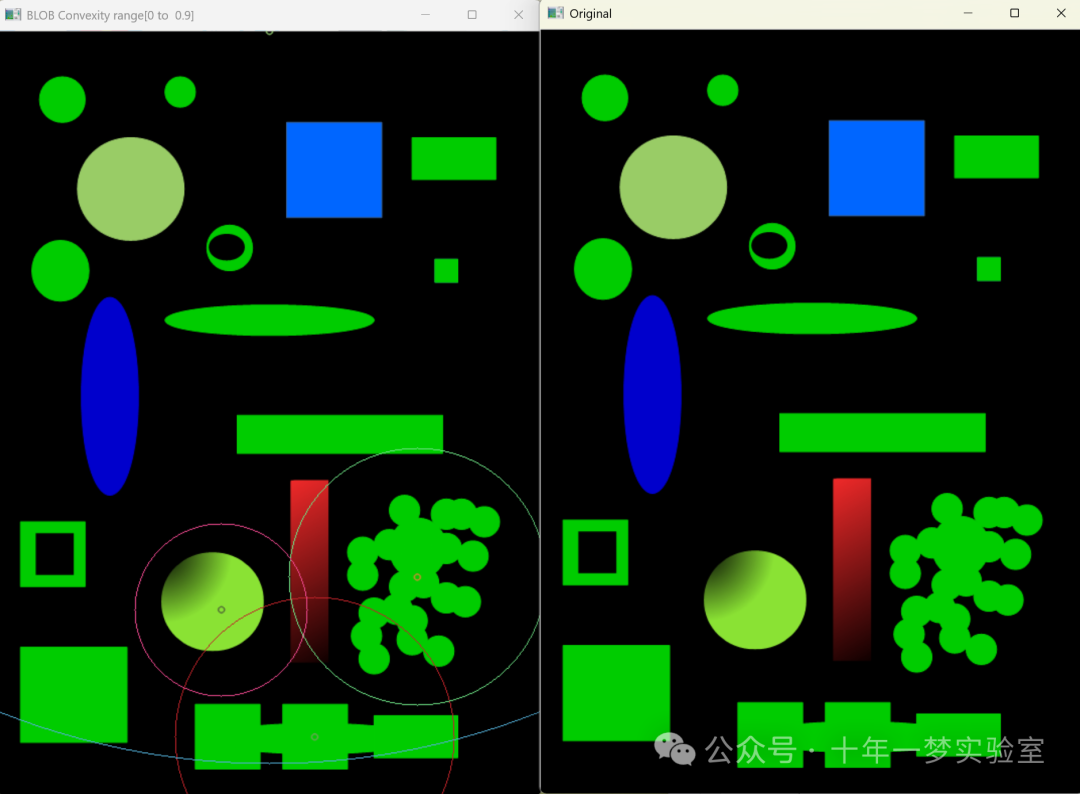

// Fifth detector: filter by convexity, keeping clearly non-convex blobs

typeDesc.push_back("BLOB"); // add the "BLOB" type name

pBLOB.push_back(pDefaultBLOB); // start from the default parameters

pBLOB.back().filterByConvexity = true; // enable convexity filtering

pBLOB.back().minConvexity = 0.; // minimum convexity: 0

pBLOB.back().maxConvexity = (float)0.9; // maximum convexity: 0.9

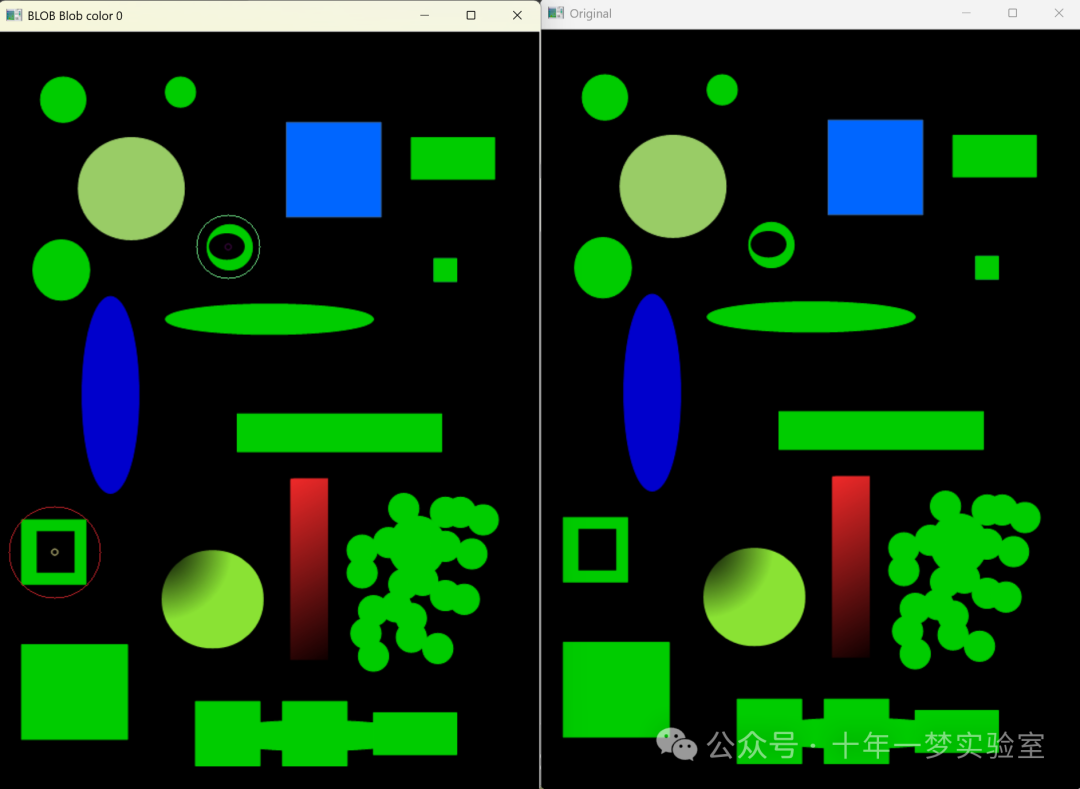

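// Sixth detector: keep blobs whose center is 0 (dark) in the binarized image

typeDesc.push_back("BLOB"); // add the "BLOB" type name

pBLOB.push_back(pDefaultBLOB); // start from the default parameters

pBLOB.back().filterByColor = true; // enable color filtering

pBLOB.back().blobColor = 0; // keep blobs of color 0 (dark)

// Point the iterator at the first parameter set

itBLOB = pBLOB.begin();

// Vector for comparison results (unused in this sample)

vector<double> desMethCmp;

// Smart pointer to the current detector, held through the Feature2D base class

Ptr<Feature2D> b;

// Caption text for the result window

String label;

// Loop over all detector type names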

vector<String>::iterator itDesc;

for (itDesc = typeDesc.begin(); itDesc != typeDesc.end(); ++itDesc)

{

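// Keypoint vector (unused; the detection below stores its results in keyImg)

vector<KeyPoint> keyImg1;

// For a "BLOB" type name

if (*itDesc == "BLOB")

{

b = SimpleBlobDetector::create(*itBLOB); // create the blob detector

label = Legende(*itBLOB); // build the caption describing its filters

++itBLOB; // move on to the next parameter set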

}

// Catch OpenCV errors raised during detection or display

try

{

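// Detected keypoints, plus containers that this sample does not use

vector<KeyPoint> keyImg;

vector<Rect> zone;

vector<vector <Point> > region;

// Descriptor matrix (unused) and the image that displays the result

Mat desc, result(img.rows, img.cols, CV_8UC3);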

// If the detector is a SimpleBlobDetector

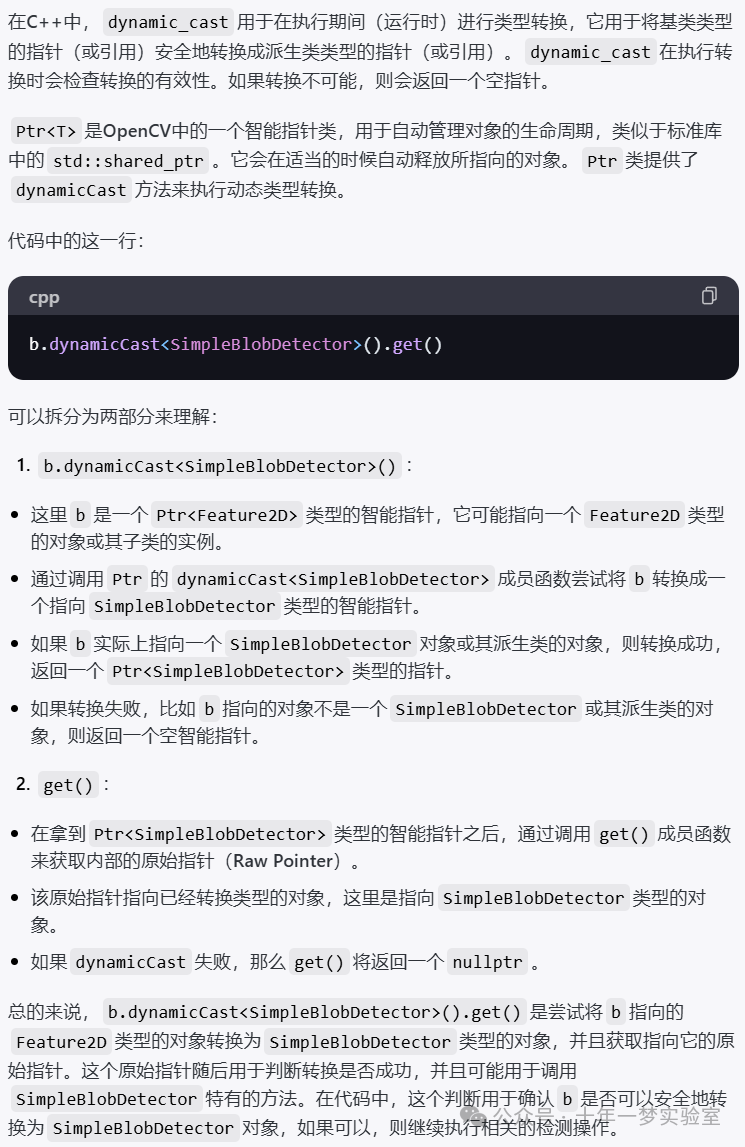

if (b.dynamicCast<SimpleBlobDetector>().get())

{

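// Cast it to SimpleBlobDetector

Ptr<SimpleBlobDetector> sbd = b.dynamicCast<SimpleBlobDetector>();

// Detect blobs as keypoints (empty mask: search the whole image)

sbd->detect(img, keyImg, Mat());

// Draw the detected keypoints on a copy of the image, stored in result

drawKeypoints(img, keyImg, result);

// Draw a circle around each keypoint in its own palette color; KeyPoint::size is the blob diameter, so this radius gives a circle twice the blob's size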

int i = 0;

for (vector<KeyPoint>::iterator k = keyImg.begin(); k != keyImg.end(); ++k, ++i)

circle(result, k->pt, (int)k->size, palette[i % 65536]);

}

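// Create a window titled with the type name plus the filter caption, and show the result

namedWindow(*itDesc + label, WINDOW_AUTOSIZE);

imshow(*itDesc + label, result);

// Show the original image

imshow("Original", img);

// Wait for a key press before moving on to the next detector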

waitKey();

}

catch (const Exception& e)

{

// On error, print the detector type and the error message

cout << "Feature : " << *itDesc << "\n";

cout << e.msg << endl;

}

}

// Normal program exit

return 0;

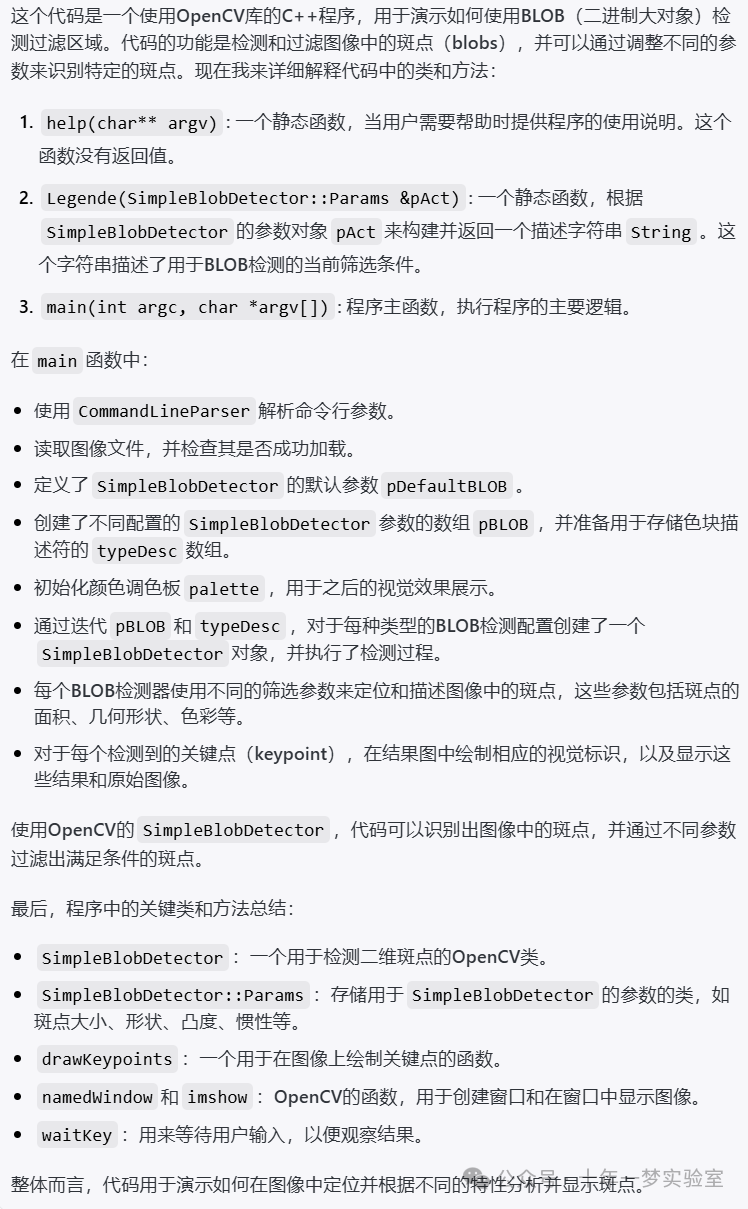

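}

This is C++ source code written with the OpenCV library. It demonstrates how to use the SimpleBlobDetector class to detect BLOBs (Binary Large Objects) in an image, and how to filter and display the detected regions under different parameter settings. A blob is a connected region of the image that stands out from its surroundings. SimpleBlobDetector finds such regions and can filter them by area, color, circularity, inertia ratio, and convexity. The code covers several steps:

- configuring the blob detection parameters;
- generating a random color palette;
- detecting keypoints;
- building a text caption for the active filters;
- displaying the images;
- handling exceptions.

By changing the SimpleBlobDetector parameters, users can select the image regions that meet specific criteria, such as objects of a particular size, shape, or color.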

b.dynamicCast<SimpleBlobDetector>().get()
