- 普通docker的使用

- 注:此方法实用于linux系统

docker gpu

-

尝试运行

docker run --gpus=all -it --net=host --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 nvcr.io/nvidia/pytorch:22.05-py3 bash -

如果没有正确配置会报错:could not select device driver “” with capabilities: [[gpu]].

-

以下是配置的过程

-

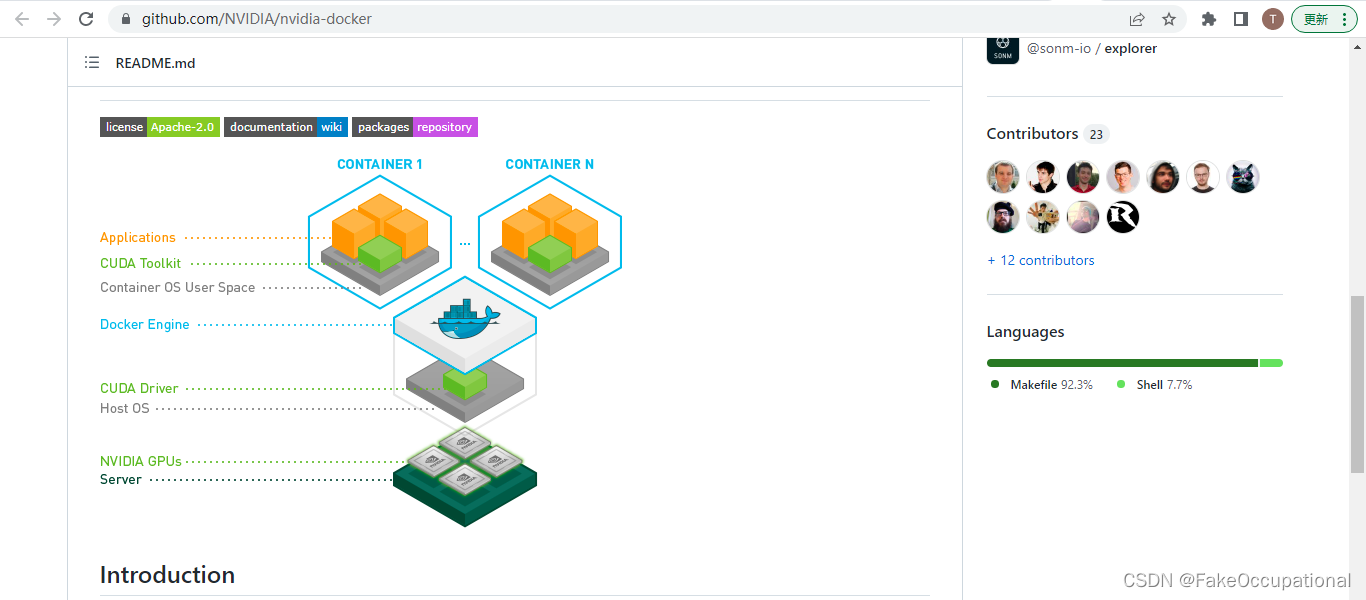

apt-get install nvidia-container-runtime:nvidia-container-runtime 是 NVIDIA 提供的一个运行时环境,允许 Docker 容器访问 NVIDIA GPU。 -

distribution=$(. /etc/os-release;echo $ID$VERSION_ID):读取当前操作系统的版本信息,并存储到 distribution 变量中 -

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add - -

Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

OK -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list -

deb https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/KaTeX parse error: Expected 'EOF', got '#' at position 10: (ARCH) / #̲deb https://nvi…(ARCH) /

deb https://nvidia.github.io/nvidia-container-runtime/stable/ubuntu18.04/KaTeX parse error: Expected 'EOF', got '#' at position 10: (ARCH) / #̲deb https://nvi…(ARCH) /

deb https://nvidia.github.io/nvidia-docker/ubuntu18.04/$(ARCH) / -

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

正在设置 libnvidia-container1:amd64 (1.12.0-1) ...

正在设置 libnvidia-container-tools (1.12.0-1) ...

正在设置 nvidia-container-toolkit (1.12.0-1) ...

正在处理用于 libc-bin (2.35-0ubuntu3.1) 的触发器 ...

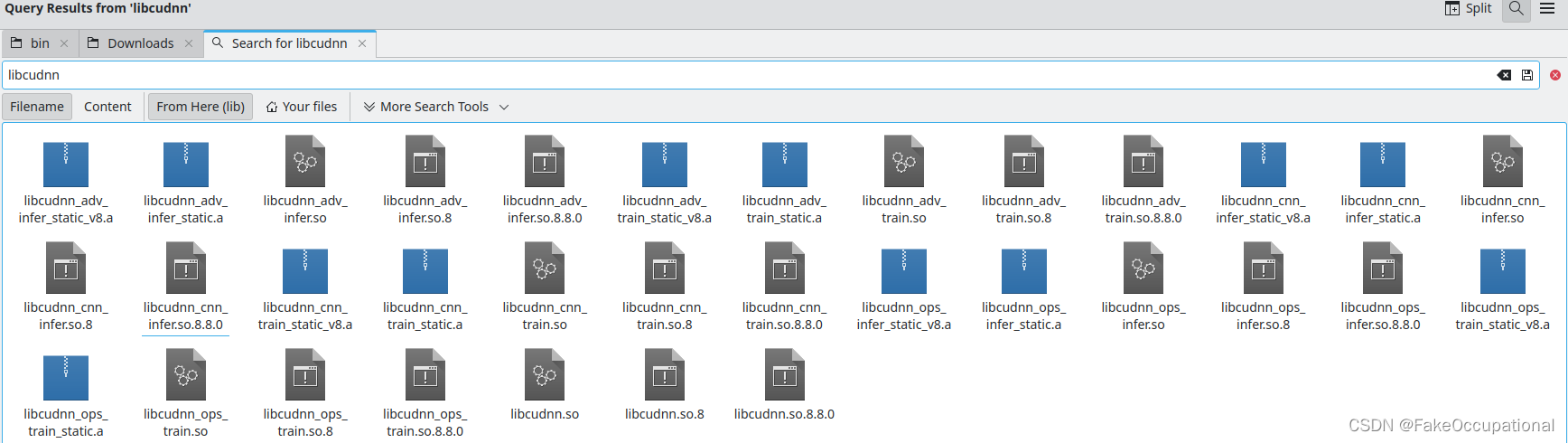

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8 is not a symbolic link

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_adv_train.so.8 is not a symbolic link

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8 is not a symbolic link

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8 is not a symbolic link

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn.so.8 is not a symbolic link

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_ops_train.so.8 is not a symbolic link

/sbin/ldconfig.real: /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8 is not a symbolic link

- 可能原因:/usr/local/cuda-11.4/lib64/ 和 /usr/local/cuda-11.4/targets/x86_64-linux/lib是链接关系,再使用copy方法安装cudnn时,部分软连接文件没有拷贝到cuda-11.4/lib64/文件目录

# 解决方案

$ sudo ln -sf /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_adv_train.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8

$ sudo ln -sf /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8

$ sudo ln -sf /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn.so.8

$ sudo ln -sf /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_ops_train.so.8

$ sudo ln -sf /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.8.0 /usr/local/cuda-11.4/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8

sudo systemctl restart docker

(base) pdd@pdd-Dell-G15-5511:~$ sudo docker run --gpus=all --rm -it --net=host --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 nvcr.io/nvidia/pytorch:22.05-py3 bash

=============

== PyTorch ==

=============

NVIDIA Release 22.05 (build 37432893)

PyTorch Version 1.12.0a0+8a1a93a

Container image Copyright (c) 2022, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

Copyright (c) 2014-2022 Facebook Inc.

Copyright (c) 2011-2014 Idiap Research Institute (Ronan Collobert)

Copyright (c) 2012-2014 Deepmind Technologies (Koray Kavukcuoglu)

Copyright (c) 2011-2012 NEC Laboratories America (Koray Kavukcuoglu)

Copyright (c) 2011-2013 NYU (Clement Farabet)

Copyright (c) 2006-2010 NEC Laboratories America (Ronan Collobert, Leon Bottou, Iain Melvin, Jason Weston)

Copyright (c) 2006 Idiap Research Institute (Samy Bengio)

Copyright (c) 2001-2004 Idiap Research Institute (Ronan Collobert, Samy Bengio, Johnny Mariethoz)

Copyright (c) 2015 Google Inc.

Copyright (c) 2015 Yangqing Jia

Copyright (c) 2013-2016 The Caffe contributors

All rights reserved.

Various files include modifications (c) NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.

By pulling and using the container, you accept the terms and conditions of this license:

https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

WARNING: CUDA Minor Version Compatibility mode ENABLED.

Using driver version 470.161.03 which has support for CUDA 11.4. This container

was built with CUDA 11.7 and will be run in Minor Version Compatibility mode.

CUDA Forward Compatibility is preferred over Minor Version Compatibility for use

with this container but was unavailable:

[[Forward compatibility was attempted on non supported HW (CUDA_ERROR_COMPAT_NOT_SUPPORTED_ON_DEVICE) cuInit()=804]]

See https://docs.nvidia.com/deploy/cuda-compatibility/ for details.

root@pdd-Dell-G15-5511:/workspace# nvidia-smi

Sat Mar 11 05:45:06 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.161.03 Driver Version: 470.161.03 CUDA Version: 11.4 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

CG

- https://stackoverflow.com/questions/25185405/using-gpu-from-a-docker-container

1013

1013

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?