今日任务:

- 通道注意力模块复习

- 空间注意力模块

- CBAM 的定义

作业:尝试对今天的模型检查参数数目,并用tensorboard查看训练过程

学习资料:【深度学习注意力机制系列】—— CBAM注意力机制(附pytorch实现)

文章:CBAM: Convolutional Block Attention Module

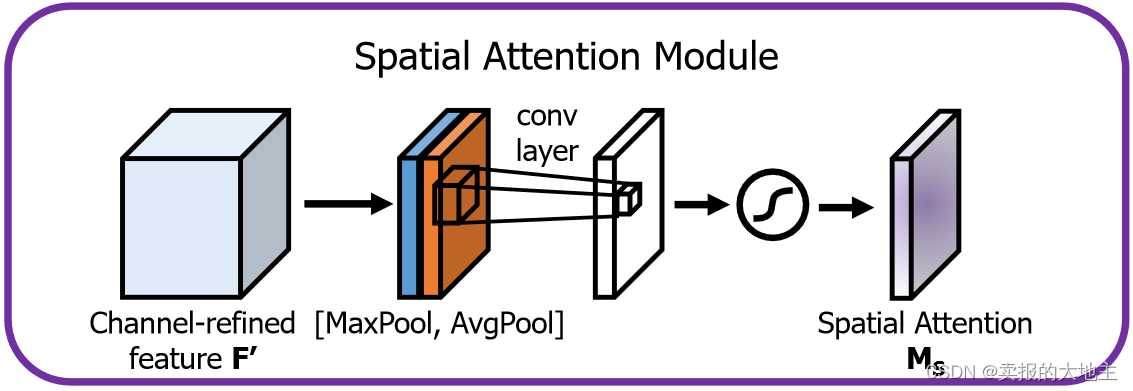

CBAM 注意力

与只关注通道维度的SE模块不同,CBAM(Convolutional Block Attention Module,卷积块注意力模块)同时关注了通道维度和空间维度,并将通道注意力和空间注意按顺序组合在一起,共同作用于输入的特征图。

- 通道注意力:分析“哪些通道的特征更关键”(如图像中的颜色、纹理通道等)——关注“什么”

- 空间注意力:定位“关键特征在图像中的具体位置”(如物体所在的区域)——关注“哪里”

CBAM 注意力模块的优势:

- 轻量级:仅增加少量计算量(全局池化 + 简单卷积),适合嵌入各种 CNN 架构

- 即插即用:无需修改原有模型主体结构,直接作为模块插入卷积层之间

- 双重优化:同时提升通道和空间维度的特征质量,适合复杂场景(小目标检测、语义分割)。

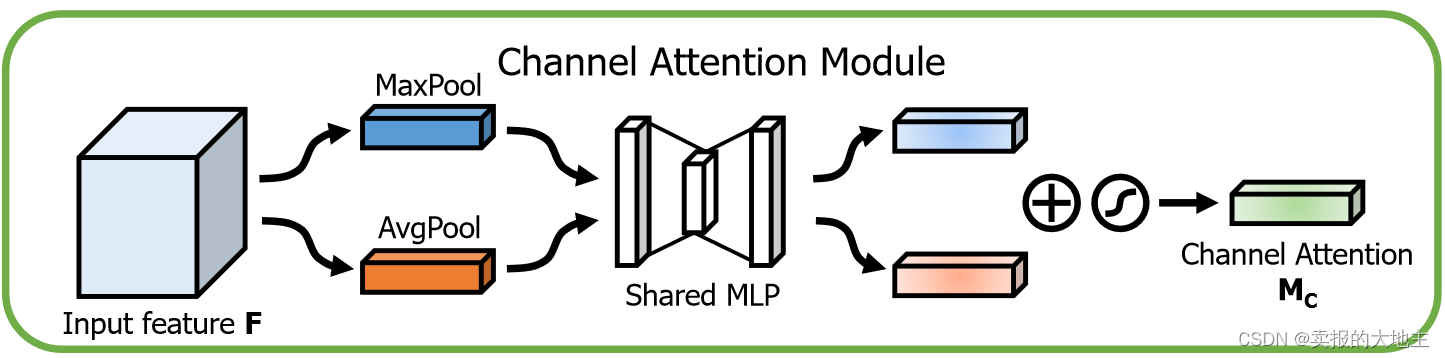

(1)通道注意力模块

CBAM 模块中的通道注意力整体结构与SE模块中的相似,但在池化步骤中加入了全局最大池化:

- 全局平均池化:捕捉图像的整体统计信息,感受野是全局的

- 全局最大池化:捕捉图像中最具辨别力的特征,例如物体的边缘、纹理等

具体步骤:并行池化 → 共享参数的MLP → 两个输出相加并使用sigmoid激活 → Reweight

# 通道注意力

import torch.nn as nn

import torch

class ChannelAttention(nn.Module):

def __init__(self,in_channels,reduction_ratio=16):

super(ChannelAttention,self).__init__()

# 1-全局池化(两重)

self.avg_pool = nn.AdaptiveAvgPool2d(1) # 全局平均池化

self.max_pool = nn.AdaptiveMaxPool2d(1) # 全局最大池化

# 2-全连接层

self.fc = nn.Sequential(

nn.Linear(in_channels,in_channels//reduction_ratio,bias=False),

nn.ReLU(inplace=True),

nn.Linear(in_channels//reduction_ratio,in_channels,bias=False)

)

self.sigmoid = nn.Sigmoid() # 两个池化,sigmoid函数单独写

def forward(self,x):

b,c,h,w = x.shape

avg_out = self.fc(self.avg_pool(x).view(b,c)) # 注意全连接层对维度的要求

max_out = self.fc(self.max_pool(x).view(b,c)) # 注意维度

add_out = avg_out + max_out # 逐元素相加

attention_weight = self.sigmoid(add_out).view(b,c,1,1) # 注意维度

return x * attention_weight # 注意力权重与原始特征相乘,广播机制(2)空间注意力模块

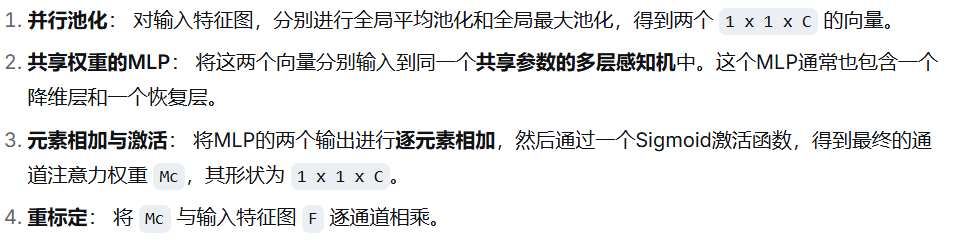

核心思想:沿通道轴进行池化操作,将多通道的信息压缩成单通道的特征图,突出重要的位置信息

注:空间注意力模块中的池化操作是在通道维度上进行(多通道到单通道),可以保留空间维度(H,W),这与通道注意力模块的全局池化有区别。

具体步骤:

- 沿通道维度的池化: 对通道注意力模块的输出

F',分别进行沿通道维度的平均池化(每个位置上所有通道的平均响应)和沿通道维度的最大池化(每个位置上所有通道中最突出的响应)→ 两个 H ✖ W ✖ 1 的特征图 - 通道拼接: 将这两个 H ✖ W ✖ 1 的特征图在通道维度上进行拼接 → 一个 H ✖ W ✖ 2 的特征图。

- 卷积与激活: 使用一个标准的 7✖7 (默认值)卷积层对拼接后的特征图进行卷积操作(将通道数从2降为1)。再经过一个Sigmoid激活函数,得到最终的空间注意力权重

Ms,其形状为 H ✖ W ✖ 1 。(由多通道到单通道) - 重标定: 将

Ms与输入特征图F'逐位置相乘。

# 空间注意力

class SpatialAttention(nn.Module):

def __init__(self,kernel_size=7):

super().__init__()

self.conv = nn.Conv2d(2,1,kernel_size,padding=kernel_size//2,bias=False) # 保证输出尺寸和输入相同

self.sigmoid = nn.Sigmoid()

def forward(self,x):

avg_out = torch.mean(x,dim=1,keepdim=True) # 平均池化,(B,1,H,W)

max_out,_ = torch.max(x,dim=1,keepdim=True) # 最大池化,(B,1,H,W);返回元组(value,indices)

out = torch.cat([avg_out,max_out],dim=1) # 拼接,(B,2,H,W)

attention_weight = self.sigmoid(self.conv(out)) # 卷积提取空间特征并激活

return x * attention_weight # 注意力权重与原始特征相乘,广播机制(3)混合注意力模块

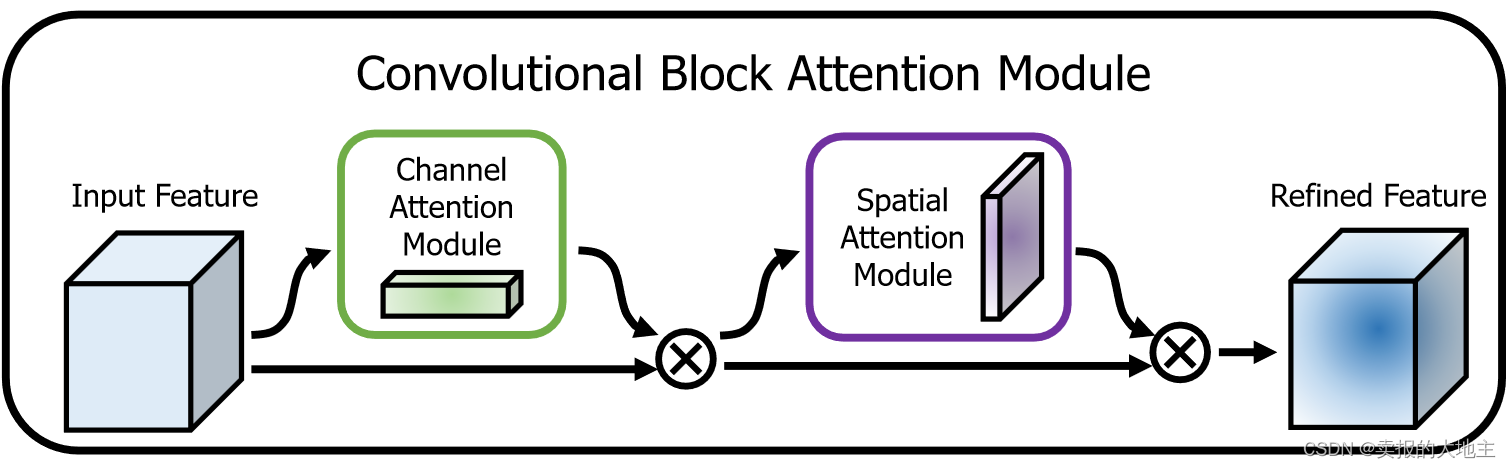

CBAM:输入的特征 → 通道注意力 → 空间注意力 → 增强的特征图

# 混合注意力

class CBAM(nn.Module):

def __init__(self,in_channels,reduction_ratio=16,kernel_size=7):

super().__init__()

self.channel = ChannelAttention(in_channels=in_channels,reduction_ratio=reduction_ratio)

self.spatial = SpatialAttention(kernel_size=kernel_size)

def forward(self,x):

channel_out = self.channel(x)

spatial_out = self.spatial(channel_out)

return spatial_out关于CBAM 在CNN架构中插入的位置:每个卷积块末尾(推荐)

# 定义带有CBAM的CNN模型

class CBAM_CNN(nn.Module):

def __init__(self):

super(CBAM_CNN, self).__init__()

# ---------------------- 第一个卷积块(带CBAM) ----------------------

self.conv1 = nn.Conv2d(3, 32, kernel_size=3, padding=1)

self.bn1 = nn.BatchNorm2d(32) # 批归一化

self.relu1 = nn.ReLU()

self.pool1 = nn.MaxPool2d(kernel_size=2)

self.cbam1 = CBAM(in_channels=32) # 在第一个卷积块后添加CBAM

# ---------------------- 第二个卷积块(带CBAM) ----------------------

self.conv2 = nn.Conv2d(32, 64, kernel_size=3, padding=1)

self.bn2 = nn.BatchNorm2d(64)

self.relu2 = nn.ReLU()

self.pool2 = nn.MaxPool2d(kernel_size=2)

self.cbam2 = CBAM(in_channels=64) # 在第二个卷积块后添加CBAM

# ---------------------- 第三个卷积块(带CBAM) ----------------------

self.conv3 = nn.Conv2d(64, 128, kernel_size=3, padding=1)

self.bn3 = nn.BatchNorm2d(128)

self.relu3 = nn.ReLU()

self.pool3 = nn.MaxPool2d(kernel_size=2)

self.cbam3 = CBAM(in_channels=128) # 在第三个卷积块后添加CBAM

# ---------------------- 全连接层 ----------------------

self.fc1 = nn.Linear(128 * 4 * 4, 512)

self.dropout = nn.Dropout(p=0.5)

self.fc2 = nn.Linear(512, 10)

def forward(self, x):

# 第一个卷积块

x = self.conv1(x)

x = self.bn1(x)

x = self.relu1(x)

x = self.pool1(x)

x = self.cbam1(x) # 应用CBAM

# 第二个卷积块

x = self.conv2(x)

x = self.bn2(x)

x = self.relu2(x)

x = self.pool2(x)

x = self.cbam2(x) # 应用CBAM

# 第三个卷积块

x = self.conv3(x)

x = self.bn3(x)

x = self.relu3(x)

x = self.pool3(x)

x = self.cbam3(x) # 应用CBAM

# 全连接层

x = x.view(-1, 128 * 4 * 4)

x = self.fc1(x)

x = self.relu3(x)

x = self.dropout(x)

x = self.fc2(x)

return x

# 初始化模型并移至设备

model = CBAM_CNN().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='min', patience=3, factor=0.5)训练(CNN + CBAM)

复用之前的数据处理和训练函数的代码:

# 前置准备

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

import numpy as np

# 设置中文字体支持

plt.rcParams["font.family"] = ["SimHei"]

plt.rcParams['axes.unicode_minus'] = False # 解决负号显示问题

# 检查GPU是否可用

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f"使用设备: {device}")

# 数据预处理(与原代码一致)

train_transform = transforms.Compose([

transforms.RandomCrop(32, padding=4),

transforms.RandomHorizontalFlip(),

transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.1),

transforms.RandomRotation(15),

transforms.ToTensor(),

transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

])

test_transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

])

# 加载数据集(与原代码一致)

train_dataset = datasets.CIFAR10(root='./data', train=True, download=True, transform=train_transform)

test_dataset = datasets.CIFAR10(root='./data', train=False, transform=test_transform)

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)

test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False)# 训练

def train(model, train_loader, test_loader, criterion, optimizer, scheduler, device, epochs):

model.train()

all_iter_losses = []

iter_indices = []

train_acc_history = []

test_acc_history = []

train_loss_history = []

test_loss_history = []

for epoch in range(epochs):

running_loss = 0.0

correct = 0

total = 0

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

iter_loss = loss.item()

all_iter_losses.append(iter_loss)

iter_indices.append(epoch * len(train_loader) + batch_idx + 1)

running_loss += iter_loss

_, predicted = output.max(1)

total += target.size(0)

correct += predicted.eq(target).sum().item()

if (batch_idx + 1) % 100 == 0:

print(f'Epoch: {epoch+1}/{epochs} | Batch: {batch_idx+1}/{len(train_loader)} '

f'| 单Batch损失: {iter_loss:.4f} | 累计平均损失: {running_loss/(batch_idx+1):.4f}')

epoch_train_loss = running_loss / len(train_loader)

epoch_train_acc = 100. * correct / total

train_acc_history.append(epoch_train_acc)

train_loss_history.append(epoch_train_loss)

# 测试阶段

model.eval()

test_loss = 0

correct_test = 0

total_test = 0

with torch.no_grad():

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += criterion(output, target).item()

_, predicted = output.max(1)

total_test += target.size(0)

correct_test += predicted.eq(target).sum().item()

epoch_test_loss = test_loss / len(test_loader)

epoch_test_acc = 100. * correct_test / total_test

test_acc_history.append(epoch_test_acc)

test_loss_history.append(epoch_test_loss)

scheduler.step(epoch_test_loss)

print(f'Epoch {epoch+1}/{epochs} 完成 | 训练准确率: {epoch_train_acc:.2f}% | 测试准确率: {epoch_test_acc:.2f}%')

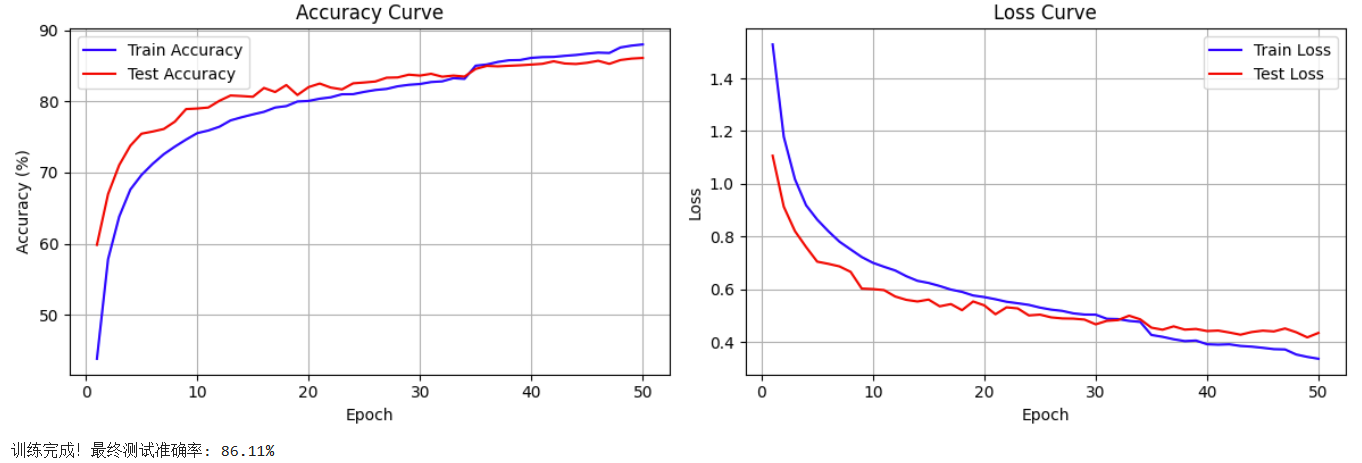

plot_iter_losses(all_iter_losses, iter_indices)

plot_epoch_metrics(train_acc_history, test_acc_history, train_loss_history, test_loss_history)

return epoch_test_acc

# 绘图函数

def plot_iter_losses(losses, indices):

plt.figure(figsize=(10, 4))

plt.plot(indices, losses, 'b-', alpha=0.7, label='Iteration Loss')

plt.xlabel('Iteration')

plt.ylabel('Loss')

plt.title('The Loss of Each Iteration')

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

# 7. 绘制每个 epoch 的准确率和损失曲线

def plot_epoch_metrics(train_acc, test_acc, train_loss, test_loss):

epochs = range(1, len(train_acc) + 1)

plt.figure(figsize=(12, 4))

# 绘制准确率曲线

plt.subplot(1, 2, 1)

plt.plot(epochs, train_acc, 'b-', label='Train Accuracy')

plt.plot(epochs, test_acc, 'r-', label='Test Accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy (%)')

plt.title('Accuracy Curve')

plt.legend()

plt.grid(True)

# 绘制损失曲线

plt.subplot(1, 2, 2)

plt.plot(epochs, train_loss, 'b-', label='Train Loss')

plt.plot(epochs, test_loss, 'r-', label='Test Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.title('Loss Curve')

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

# 执行训练

epochs = 50

print("开始使用带CBAM的CNN训练模型...")

final_accuracy = train(model, train_loader, test_loader, criterion, optimizer, scheduler, device, epochs)

print(f"训练完成!最终测试准确率: {final_accuracy:.2f}%")

# # 保存模型

# torch.save(model.state_dict(), 'cifar10_cbam_cnn_model.pth')

# print("模型已保存为: cifar10_cbam_cnn_model.pth")

作业:CNN + CBAM + Tensorboard

1.tensorboard 监控

复用之前的代码

# 训练(使用tensorboard)

from torch.utils.tensorboard import SummaryWriter

import torchvision

import time

import os

# ======================== TensorBoard 核心配置 ========================

# 创建 TensorBoard 日志目录(自动避免重复)

log_dir = "runs/cifar10_cnn_cbam"

if os.path.exists(log_dir):

version = 1

while os.path.exists(f"{log_dir}_v{version}"):

version += 1

log_dir = f"{log_dir}_v{version}"

writer = SummaryWriter(log_dir) # 初始化 SummaryWriter

# 5. 训练模型(整合 TensorBoard 记录)

def train(model, train_loader, test_loader, criterion, optimizer, scheduler, device, epochs, writer):

model.train()

global_step = 0 #全局步骤,用于 TensorBoard 标量记录

# (可选)记录模型结构:用一个真实样本走一遍前向传播,让 TensorBoard 解析计算图

dataiter = iter(train_loader)

images, labels = next(dataiter)

images = images.to(device)

writer.add_graph(model, images) # 写入模型结构到 TensorBoard

# (可选)记录原始训练图像:可视化数据增强前/后效果

img_grid = torchvision.utils.make_grid(images[:8].cpu()) # 取前8张

writer.add_image('原始训练图像(增强前)', img_grid, global_step=0)

for epoch in range(epochs):

running_loss = 0.0

correct = 0

total = 0

# 记录时间

epoch_start = time.time()

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

# 统计准确率

running_loss += loss.item()

_, predicted = output.max(1)

total += target.size(0)

correct += predicted.eq(target).sum().item()

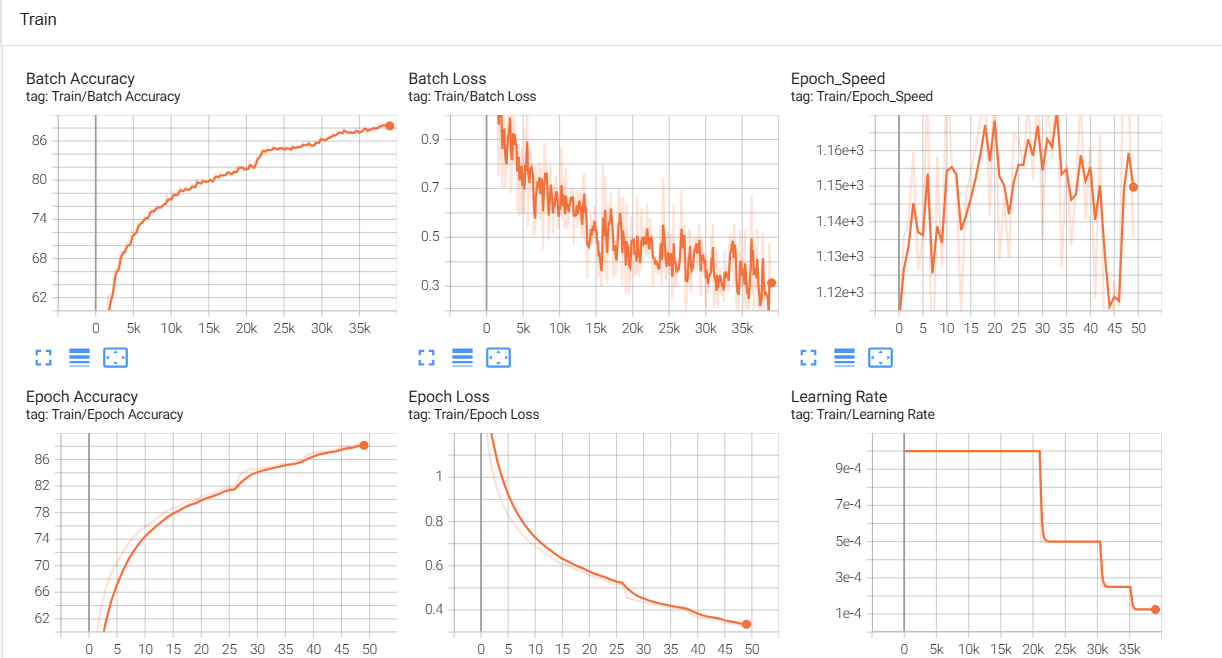

# ======================== TensorBoard 标量记录 ========================

# 每 100 个 batch 打印控制台日志(同原代码)

if (batch_idx + 1) % 100 == 0:

batch_loss = loss.item()

batch_acc = 100. * correct / total

# 记录标量数据(loss\accuracy)

writer.add_scalar('Train/Batch Loss', batch_loss, global_step)

writer.add_scalar('Train/Batch Accuracy', batch_acc, global_step)

# 记录学习率(可选)

writer.add_scalar('Train/Learning Rate', optimizer.param_groups[0]['lr'], global_step)

print(f'Epoch: {epoch+1}/{epochs} | Batch: {batch_idx+1}/{len(train_loader)} '

f'| 单Batch损失: {batch_loss:.4f} | 累计平均损失: {running_loss/(batch_idx+1):.4f}')

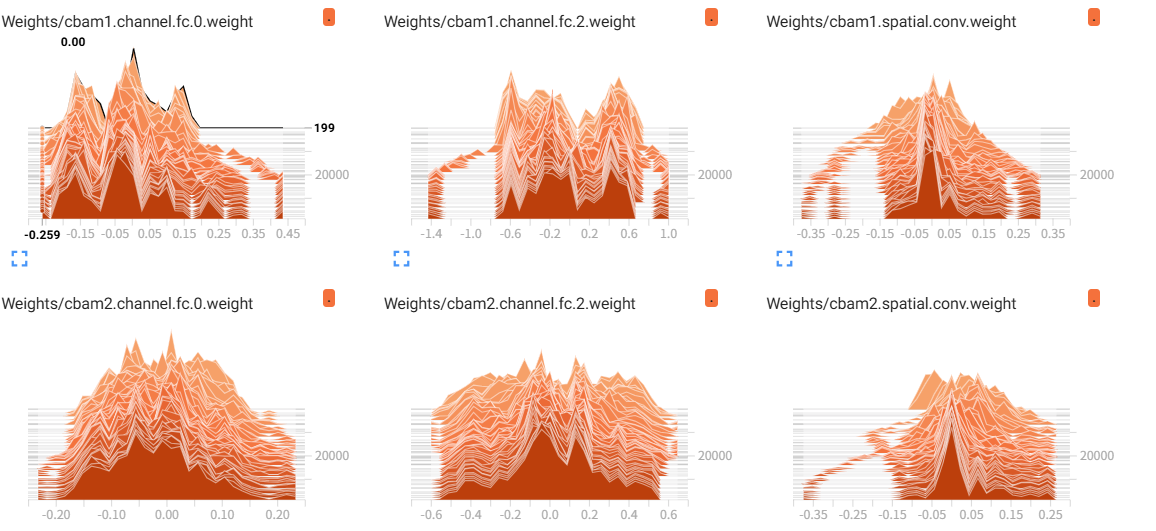

# 每 200 个 batch 记录一次参数直方图(可选,耗时稍高)

if (batch_idx + 1) % 200 == 0:

for name, param in model.named_parameters():

writer.add_histogram(f'Weights/{name}', param, global_step)

if param.grad is not None:

writer.add_histogram(f'Gradients/{name}', param.grad, global_step)

global_step += 1 # 全局步骤递增

# 计算 epoch 级训练指标

epoch_train_loss = running_loss / len(train_loader)

epoch_train_acc = 100. * correct / total

# ======================== TensorBoard epoch 标量记录 ========================

writer.add_scalar('Train/Epoch Loss', epoch_train_loss, epoch)

writer.add_scalar('Train/Epoch Accuracy', epoch_train_acc, epoch)

# 测试阶段

model.eval()

test_loss = 0

correct_test = 0

total_test = 0

wrong_images = [] # 存储错误预测样本(用于可视化)

wrong_labels = []

wrong_preds = []

with torch.no_grad():

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += criterion(output, target).item()

_, predicted = output.max(1)

total_test += target.size(0)

correct_test += predicted.eq(target).sum().item()

# 收集错误预测样本(用于可视化)

wrong_mask = (predicted != target)

if wrong_mask.sum() > 0:

wrong_batch_images = data[wrong_mask][:8].cpu() # 最多存8张

wrong_batch_labels = target[wrong_mask][:8].cpu()

wrong_batch_preds = predicted[wrong_mask][:8].cpu()

wrong_images.extend(wrong_batch_images)

wrong_labels.extend(wrong_batch_labels)

wrong_preds.extend(wrong_batch_preds)

# 计算 epoch 级测试指标

epoch_test_loss = test_loss / len(test_loader)

epoch_test_acc = 100. * correct_test / total_test

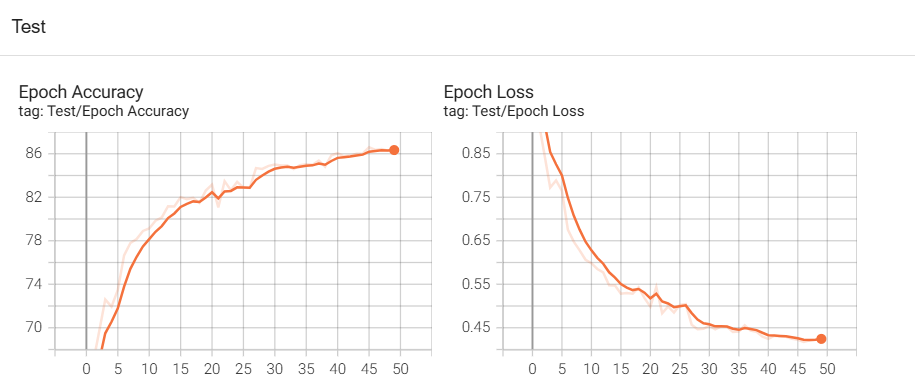

# ======================== TensorBoard 测试集记录 ========================

writer.add_scalar('Test/Epoch Loss', epoch_test_loss, epoch)

writer.add_scalar('Test/Epoch Accuracy', epoch_test_acc, epoch)

# 计算每个epoch的速度

epoch_end = time.time()

epoch_duration = epoch_end - epoch_start

samples_per_epoch = len(train_loader.dataset) # 每个epoch处理的样本总数

epoch_speed = samples_per_epoch / epoch_duration

# 记录速度指标

writer.add_scalar('Train/Epoch_Speed', epoch_speed, epoch)

# (可选)可视化错误预测样本

# if wrong_images:

# wrong_img_grid = torchvision.utils.make_grid(wrong_images)

# writer.add_image('错误预测样本', wrong_img_grid, epoch)

# # 写入错误标签文本(可选)

# wrong_text = [f"真实: {classes[wl]}, 预测: {classes[wp]}"

# for wl, wp in zip(wrong_labels, wrong_preds)]

# writer.add_text('错误预测标签', '\n'.join(wrong_text), epoch)

# 更新学习率调度器

scheduler.step(epoch_test_loss)

print(f'Epoch {epoch+1}/{epochs} 完成 | 训练准确率: {epoch_train_acc:.2f}% | 测试准确率: {epoch_test_acc:.2f}%')

# 关闭 TensorBoard 写入器

writer.close()

return epoch_test_acc

# (可选)CIFAR-10 类别名

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# 7. 执行训练(传入 TensorBoard writer)

epochs = 50

print("开始使用带CBAM的CNN训练模型...")

print(f"TensorBoard 日志目录: {log_dir}")

print("训练后执行: tensorboard --logdir=runs 查看可视化")

final_accuracy = train(model, train_loader, test_loader, criterion, optimizer, scheduler, device, epochs, writer)

print(f"训练完成!最终测试准确率: {final_accuracy:.2f}%")

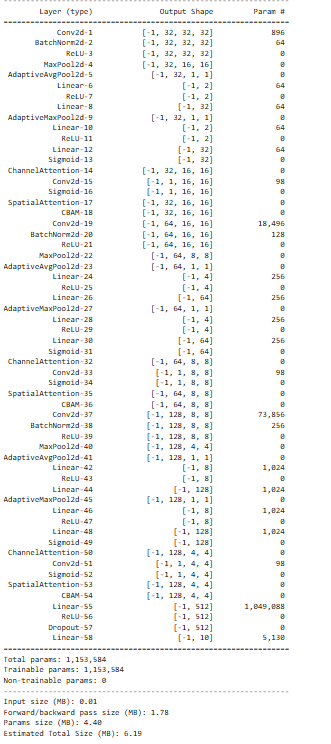

2.参数数量查看

训练完成后再使用torchsummary.summary查看

注:先查看参数数量再训练可能导致训练异常,比如BatchNorm状态改变

# 查看模型的参数数量

from torchsummary import summary

model = CBAM_CNN().to(device)

summary(model, input_size=(3, 32, 32)) # CIFAR-10 输入尺寸总共有 1,153,584 个参数,

577

577

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?