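- 🍨 This post is a learning-log entry for the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊

My environment

- Language: Python 3.11.9

- Development tool: Jupyter Lab

- Deep learning environment: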

- torch==2.3.1+cu121

- torchvision==0.18.1+cu121

I. This week's content and personal takeaways

1. Differences between v1 and v2

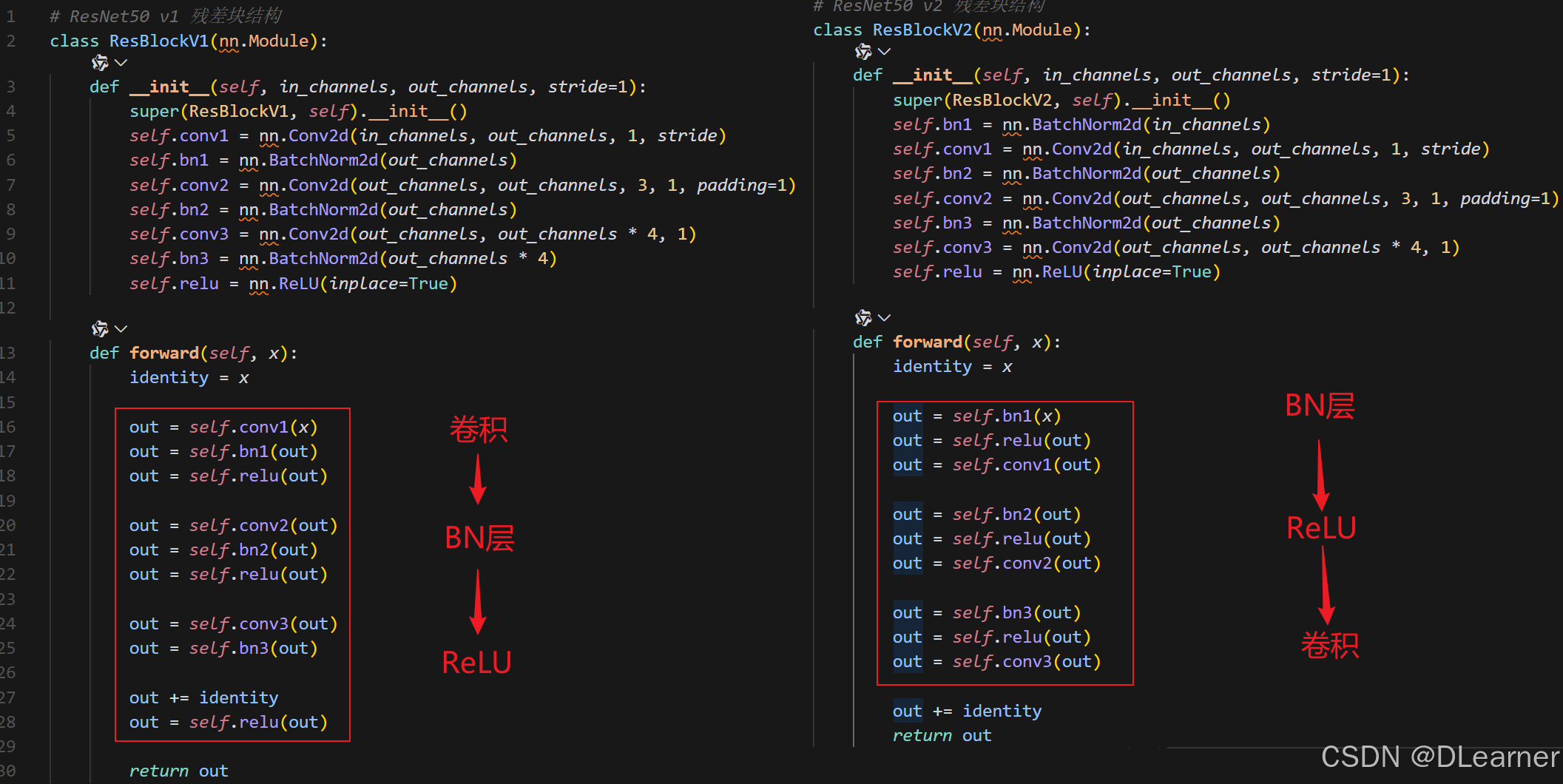

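ResNet50V2 is an improved version of ResNet proposed by Kaiming He et al. The main changes concern the residual structure and where the activation functions are applied. In the original ResNet block, each convolution is followed by BN and an activation, and a final ReLU is applied after the addition. The ResNetV2 block instead applies BN and the activation before each convolution, and moves the ReLU that used to follow the addition inside the residual branch.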
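The effect of moving the ReLU can be written as two update rules. This is a sketch that assumes the shortcut is a pure identity (no projection or downsampling); the symbols $x_l$ (input of block $l$) and $\mathcal{F}$ (the residual branch) are introduced here for illustration and do not appear in the original post.

$$
\text{v1: } x_{l+1} = \mathrm{ReLU}\big(x_l + \mathcal{F}(x_l)\big), \qquad \text{v2: } x_{l+1} = x_l + \mathcal{F}(x_l)
$$

Unrolling the v2 rule over blocks $l, \dots, L-1$ gives

$$
x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i)
$$

so in v2 the output of an earlier block reaches a later block through additions only, whereas in v1 a ReLU sits on that path after every block.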

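The core difference between ResNet v1 and v2 lies in the internal structure of the residual block:

1. Pre-activation design:

v1: uses the order "conv -> BN -> ReLU"

v2: uses the order "BN -> ReLU -> conv" (pre-activation)

2. Signal path:

v1: the identity branch is added to the main branch, and a ReLU is then applied to the sum

v2: the identity branch is added directly to the main branch, with no ReLU after the addition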
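The two orderings above can be sketched side by side in PyTorch. This is a minimal illustration, not the post's actual block: the class names `BottleneckV1` and `BottleneckV2`, the 1x1 -> 3x3 -> 1x1 bottleneck layout, and the choice of `bias=False` are assumptions, and both blocks keep the channel count fixed so the shortcut is a plain identity.

```python
import torch
import torch.nn as nn


class BottleneckV1(nn.Module):
    """Post-activation block (v1): conv -> BN -> ReLU, add, then a final ReLU."""

    def __init__(self, channels, mid_channels):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, mid_channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(mid_channels),
            nn.ReLU(),
            nn.Conv2d(mid_channels, mid_channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(mid_channels),
            nn.ReLU(),
            nn.Conv2d(mid_channels, channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(channels),
        )
        self.relu = nn.ReLU()

    def forward(self, x):
        # The ReLU is applied after the identity and main branches are summed.
        return self.relu(x + self.body(x))


class BottleneckV2(nn.Module):
    """Pre-activation block (v2): BN -> ReLU -> conv, add, no final ReLU."""

    def __init__(self, channels, mid_channels):
        super().__init__()
        self.body = nn.Sequential(
            nn.BatchNorm2d(channels),
            nn.ReLU(),
            nn.Conv2d(channels, mid_channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(mid_channels),
            nn.ReLU(),
            nn.Conv2d(mid_channels, mid_channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(mid_channels),
            nn.ReLU(),
            nn.Conv2d(mid_channels, channels, kernel_size=1, bias=False),
        )

    def forward(self, x):
        # The sum is returned as-is; nothing is applied after the addition.
        return x + self.body(x)


torch.manual_seed(0)
x = torch.randn(2, 256, 8, 8)
y1 = BottleneckV1(256, 64)(x)
y2 = BottleneckV2(256, 64)(x)
print(y1.shape, y2.shape)
print(bool((y1 >= 0).all()), bool((y2 < 0).any()))
```

Because v1 ends with a ReLU, its output can never be negative, while the v2 output is an unclipped sum and can take either sign. Both blocks preserve the input shape.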

2. Main structure of v2

    import torch.nn as nn


    class ResNet50V2(nn.Module):
        def __init__(self, num_classes=1000):
            super(ResNet50V2, self).__init__()

            # 1. Stem
            self.conv1 = nn.Sequential(
                nn.ZeroPad2d(3),
                nn.Conv2d(3, 64, kernel_size=7, stride=2),
                nn.ZeroPad2d(1),
                nn.MaxPool2d(kernel_size=3, stride=2)
            )

            # 2. Stacked residual blocks
            # (_make_layer is a helper that is not shown in this outline)
            self.conv2_x = self._make_layer(64, 64, blocks=3)     # outputs 256 channels
            self.conv3_x = self._make_layer(256, 128, blocks=4)   # outputs 512 channels
            self.conv4_x = self._make_layer(512, 256, blocks=6)   # outputs 1024 channels
            self.conv5_x = self._make_layer(1024, 512, blocks=3)  # outputs 2048 channels

            # 3. Output layers
            self.post_bn = nn.BatchNorm2d(2048)
            self.post_relu = nn.ReLU(inplace=True)
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(2048, num_classes)
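To check the spatial sizes implied by the outline above, the stem and the output layers can be run in isolation. This is a sketch under assumptions: the names `stem` and `head` are mine, the 224x224 input and the 7x7 feature map fed to the head are the usual ResNet-50 sizes rather than values stated in the post, and a random tensor stands in for the output of `conv5_x`.

```python
import torch
import torch.nn as nn

# Stem, copied from the outline: explicit zero padding, then unpadded conv/pool.
stem = nn.Sequential(
    nn.ZeroPad2d(3),                            # 224 -> 230
    nn.Conv2d(3, 64, kernel_size=7, stride=2),  # 230 -> 112
    nn.ZeroPad2d(1),                            # 112 -> 114
    nn.MaxPool2d(kernel_size=3, stride=2),      # 114 -> 56
)

# Output layers: the last v2 block ends with a bare addition, so a BN + ReLU
# is applied once here before pooling and classification.
head = nn.Sequential(
    nn.BatchNorm2d(2048),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Flatten(),
    nn.Linear(2048, 1000),
)

with torch.no_grad():
    stem_out = stem(torch.randn(1, 3, 224, 224))
    features = torch.randn(2, 2048, 7, 7)  # stand-in for the conv5_x output
    logits = head(features)

print(stem_out.shape)  # torch.Size([1, 64, 56, 56])
print(logits.shape)    # torch.Size([2, 1000])
```

The `post_bn` and `post_relu` layers exist because of the pre-activation design: each v2 block normalizes and activates its input rather than its output, so the final block's output would otherwise reach the pooling layer without any normalization or activation.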

II. Core code and run screenshots

Model code:

    import torch
    import torch.nn as nn


    class ResNet50V2(nn.Module):
        def __init__(self,
                     include_top=True,            # whether to include the top fully connected layer
                     preact=True,                 # whether to use pre-activation
                     use_bias=True,               # whether the convolutions use a bias term
                     input_shape=(3, 224, 224),   # input shape (C, H, W)
                     pooling=None,                # pooling mode
                     num_classes=1000,            # number of classes
                     classifier_activation='softmax'):  # activation of the classification layer
            super(ResNet50V2, self).__init__()
            self.include_top = include_top
            self.preact = preact
            self.pooling = pooling

            # Stem
            self.conv1_pad = nn.ZeroPad2d(3)
            self.conv1 = nn.Conv2d(input_shape[0], 64, kernel_size=7,
                                   stride=2, bias=use_bias)

            if not preact:
                self ...
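The constructor arguments `include_top`, `pooling`, and `classifier_activation` mirror the options of the Keras `ResNet50V2` application. How the post's PyTorch model uses them is not shown, so the following is only a sketch of the Keras-style behavior under that assumption; the class name `HypotheticalHead` and its `in_features` parameter are made up for illustration.

```python
import torch
import torch.nn as nn


class HypotheticalHead(nn.Module):
    """Keras-style output head; an illustration, not the post's implementation."""

    def __init__(self, in_features=2048, include_top=True, pooling=None,
                 num_classes=1000, classifier_activation='softmax'):
        super().__init__()
        self.include_top = include_top
        self.pooling = pooling
        self.classifier_activation = classifier_activation
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        self.maxpool = nn.AdaptiveMaxPool2d(1)
        self.fc = nn.Linear(in_features, num_classes) if include_top else None

    def forward(self, x):
        if self.include_top:
            # Global average pooling followed by the classifier.
            x = self.fc(torch.flatten(self.avgpool(x), 1))
            if self.classifier_activation == 'softmax':
                x = torch.softmax(x, dim=1)
            return x
        if self.pooling == 'avg':
            return torch.flatten(self.avgpool(x), 1)
        if self.pooling == 'max':
            return torch.flatten(self.maxpool(x), 1)
        return x  # no top, no pooling: return the 4D feature map unchanged


feats = torch.randn(2, 2048, 7, 7)
probs = HypotheticalHead()(feats)
pooled = HypotheticalHead(include_top=False, pooling='avg')(feats)
raw = HypotheticalHead(include_top=False)(feats)
print(probs.shape, pooled.shape, raw.shape)
```

When training with `nn.CrossEntropyLoss`, the head should return raw logits (here, `classifier_activation=None`), because that loss applies log-softmax internally.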
