First, get MoveIt controlling the Franka by following my previous post, "Moveit控制真实机械臂franka" (MoveIt control of a real Franka arm, CSDN blog).

Step1: Download and build the packages:

1. Install vision_visp

(No idea what it's actually for, but every other article says to install it, so install it!)

```bash
cd ~/catkin_ws/src
git clone -b noetic-devel https://github.com/lagadic/vision_visp.git
cd ..
catkin_make
```

Optionally, you can delete the visp_tracker and visp_auto_tracker packages from the cloned vision_visp repository.

2. Install aruco_ros

```bash
cd ~/catkin_ws/src
git clone -b noetic-devel https://github.com/pal-robotics/aruco_ros
cd ..
catkin_make
```

Test aruco_ros:

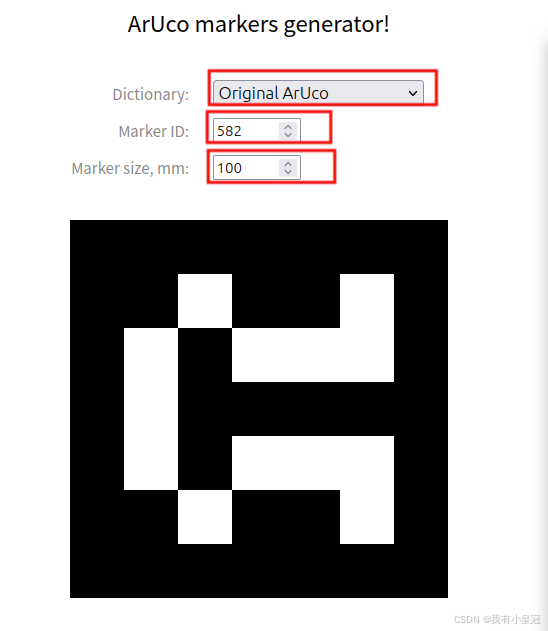

Generate an ArUco marker with the Online ArUco markers generator.

Note!!! The dictionary must be set to Original ArUco, and write down the marker ID and size.

Start the ZED2i camera:

```bash
roslaunch zed_wrapper zed2i.launch
```

Then edit the file aruco_ros/launch/single.launch:

```xml
<arg name="markerId" default="06"/>
<arg name="markerSize" default="0.018"/> <!-- in m -->
```

Change these two values to the ID and size you wrote down earlier.
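The generator works with a side length in millimetres, while `markerSize` is in metres, which makes it easy to be off by a factor of 10 or 1000. Below is a minimal sketch of a hypothetical helper, `single_launch_args`, that builds the two lines from the generator settings. It assumes the original ArUco dictionary holds IDs 0 to 1023, and that the size you pass is the measured side of the printed black square (white margin excluded), since printers sometimes rescale.

```python
ORIGINAL_ARUCO_MAX_ID = 1023  # assumption: "Original ArUco" has 1024 markers (IDs 0..1023)


def single_launch_args(marker_id: int, side_mm: float) -> str:
    """Build the markerId/markerSize <arg> lines for aruco_ros single.launch.

    side_mm is the measured side of the printed black square in millimetres;
    aruco_ros expects metres, so it is divided by 1000.
    """
    if not 0 <= marker_id <= ORIGINAL_ARUCO_MAX_ID:
        raise ValueError(f"ID {marker_id} is not in the original ArUco dictionary")
    if side_mm <= 0:
        raise ValueError("marker side length must be positive")
    side_m = side_mm / 1000.0
    return (
        f'<arg name="markerId" default="{marker_id}"/>\n'
        f'<arg name="markerSize" default="{side_m:g}"/> <!-- in m -->'
    )


if __name__ == "__main__":
    # e.g. marker ID 6 printed with an 18 mm side
    print(single_launch_args(6, 18))
```

aruco_ros reads the ID as an integer, so "06" and "6" refer to the same marker.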

Then run single.launch:

```bash
roslaunch aruco_ros single.launch
```

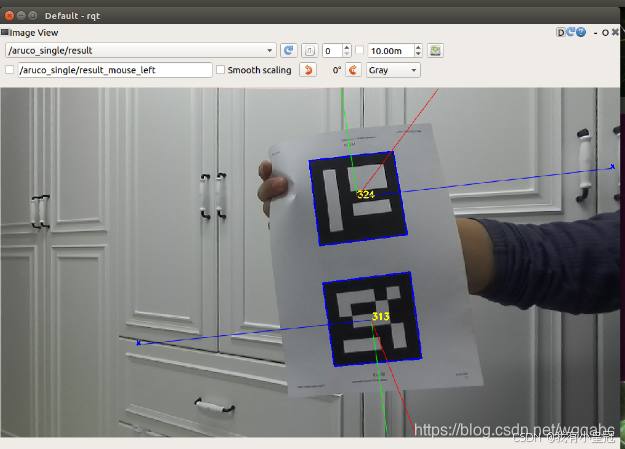

If a coordinate frame and a box appear on the ArUco marker, detection works!
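Detection alone does not prove the size is right. The translation from ArUco pose estimation scales linearly with the configured marker size, so a wrong `markerSize` (say 0.018 instead of 0.18) gives a distance off by the same factor, while the drawn box and axes still look correct. A minimal sketch of a check, assuming you read the marker position from the node's pose output (for example `rostopic echo /aruco_single/pose`, if the node keeps the default name from single.launch) and measure the real camera-to-marker distance with a ruler; `corrected_marker_size` is a hypothetical helper.

```python
import math


def marker_distance(x: float, y: float, z: float) -> float:
    """Camera-to-marker distance (m) from the position reported by aruco_ros."""
    return math.sqrt(x * x + y * y + z * z)


def corrected_marker_size(configured_size_m: float,
                          reported_xyz: tuple,
                          measured_distance_m: float) -> float:
    """Estimate the true marker side length.

    Pose translation scales linearly with the configured marker size, so
    true_size = configured_size * measured_distance / reported_distance.
    """
    reported = marker_distance(*reported_xyz)
    if reported <= 0.0:
        raise ValueError("reported distance must be positive")
    return configured_size_m * measured_distance_m / reported


if __name__ == "__main__":
    # Configured 0.018 m, node reports the marker 0.06 m away, ruler says 0.6 m.
    print(round(corrected_marker_size(0.018, (0.0, 0.0, 0.06), 0.6), 4))
```

If the estimate differs noticeably from the configured value, fix `markerSize` (and the matching `marker_size` used later) before calibrating.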

3. Install easy_handeye

```bash
cd ~/catkin_ws/src
git clone https://github.com/IFL-CAMP/easy_handeye
cd ..
catkin_make
```

Step2. Write the launch files

Write two launch files and put them in catkin_ws/src/easy_handeye/easy_handeye/launch:

zed_panda_calibrate.launch:

```xml
<?xml version="1.0" ?>
<launch>
  <arg name="namespace_prefix" default="panda_eob_calib"/>

  <!-- (start your robot's MoveIt! stack, e.g. include its moveit_planning_execution.launch) -->
  <include file="$(find fu_moveit)/launch/franka_control.launch">
    <arg name="robot_ip" value="172.16.0.3"/> <!-- set your robot ip -->
    <arg name="load_gripper" value="false"/>
  </include>

  <!--<arg name="marker_frame" default="aruco_marker_frame"/>-->
  <arg name="marker_size" default="0.18"/> <!-- in m -->
  <arg name="marker_id" default="06"/>
  <arg name="eye" default="left"/>
  <arg name="ref_frame" default="zed2i_left_camera_frame"/> <!-- leave empty and the pose will be published wrt param parent_name -->
  <arg name="corner_refinement" default="LINES"/> <!-- NONE, HARRIS, LINES, SUBPIX -->

  <rosparam file="$(find fu_moveit)/config/kinematics.yaml" command="load"/>

  <!-- start ArUco -->
  <node name="aruco_tracker" pkg="aruco_ros" type="single">
    <remap from="/camera_info" to="/zed2i/zed_node/left/camera_info"/>
    <remap from="/image" to="/zed2i/zed_node/left/image_rect_color"/>
    <param name="image_is_rectified" value="true"/>
    <param name="marker_size" value="$(arg marker_size)"/>
    <param name="marker_id" value="$(arg marker_id)"/>
    <param name="reference_frame" value="$(arg ref_frame)"/>
    <param name="camera_frame" value="zed2i_left_camera_frame"/>
    <param name="marker_frame" value="aruco_marker_frame"/>
    <param name="corner_refinement" value="$(arg corner_refinement)"/>
  </node>
</launch>
```

zed_panda_easy.launch:

```xml
<?xml version="1.0" ?>
<launch>
  <arg name="namespace_prefix" default="panda_eob_calib"/>

  <include file="$(find easy_handeye)/launch/calibrate.launch">
    <arg name="eye_on_hand" value="false"/>
    <arg name="namespace_prefix" value="$(arg namespace_prefix)"/>
    <arg name="move_group" value="panda_arm"/>
    <!--<arg name="move_group" value="panda_manipulator" doc="the name of move_group for the automatic robot motion with MoveIt!" /-->
    <arg name="freehand_robot_movement" value="false"/>

    <!-- fill in the following parameters according to your robot's published tf frames -->
    <arg name="robot_base_frame" value="world"/>
    <arg name="robot_effector_frame" value="panda_link8"/>

    <!-- fill in the following parameters according to your tracking system's published tf frames -->
    <arg name="tracking_base_frame" value="zed2i_left_camera_frame"/>
    <arg name="tracking_marker_frame" value="aruco_marker_frame"/>

    <arg name="robot_velocity_scaling" value="0.5"/>
    <arg name="robot_acceleration_scaling" value="0.2"/>
  </include>

  <!-- (publish tf after the calibration) -->
  <!-- roslaunch easy_handeye publish.launch eye_on_hand:=false namespace_prefix:=$(arg namespace_prefix) -->
</launch>
```

Step3. Start calibrating!
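Before launching anything, it is worth confirming that the aruco_tracker node in zed_panda_calibrate.launch uses the same marker ID and size you verified with single.launch (the two files in this post use 0.018 and 0.18 respectively). A minimal sketch using only the Python standard library; `aruco_params` and `marker_mismatches` are hypothetical helpers, and only simple `$(arg name)` references to top-level `<arg default=...>` entries are resolved, not `$(find ...)` or included files.

```python
import math
import re
import xml.etree.ElementTree as ET

ARG_REF = re.compile(r"\$\(arg (\w+)\)")


def aruco_params(launch_xml: str, node_name: str = "aruco_tracker") -> dict:
    """Return the <param> values of one node, resolving simple $(arg name)
    references against the top-level <arg default="..."> entries."""
    root = ET.fromstring(launch_xml)
    defaults = {a.get("name"): a.get("default") for a in root.findall("arg")}

    def resolve(value: str) -> str:
        # Leave unknown references untouched so they show up in the result
        return ARG_REF.sub(lambda m: defaults.get(m.group(1)) or m.group(0), value)

    for node in root.iter("node"):
        if node.get("name") == node_name:
            return {p.get("name"): resolve(p.get("value", "")) for p in node.findall("param")}
    raise KeyError(f"node {node_name!r} not found")


def marker_mismatches(params: dict, marker_id: int, marker_size_m: float) -> list:
    """List differences between the node's params and the verified marker settings."""
    issues = []
    if int(params.get("marker_id", "-1")) != marker_id:
        issues.append(f"marker_id is {params.get('marker_id')}, expected {marker_id}")
    # A missing marker_size becomes NaN, which is never "close", so it is reported too
    if not math.isclose(float(params.get("marker_size", "nan")), marker_size_m):
        issues.append(f"marker_size is {params.get('marker_size')}, expected {marker_size_m}")
    return issues
```

Usage, from the easy_handeye/launch directory: `marker_mismatches(aruco_params(open("zed_panda_calibrate.launch").read()), 6, 0.18)`. Substitute the ID and size you actually verified. An empty list means the settings match.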

打开终端,先后运行以下三个launch,必须等前一个launch运行完再开下一个

roslaunch zed_wrapper zed2i.launch

roslaunch easy_handeye zed_panda_calibrate.launch

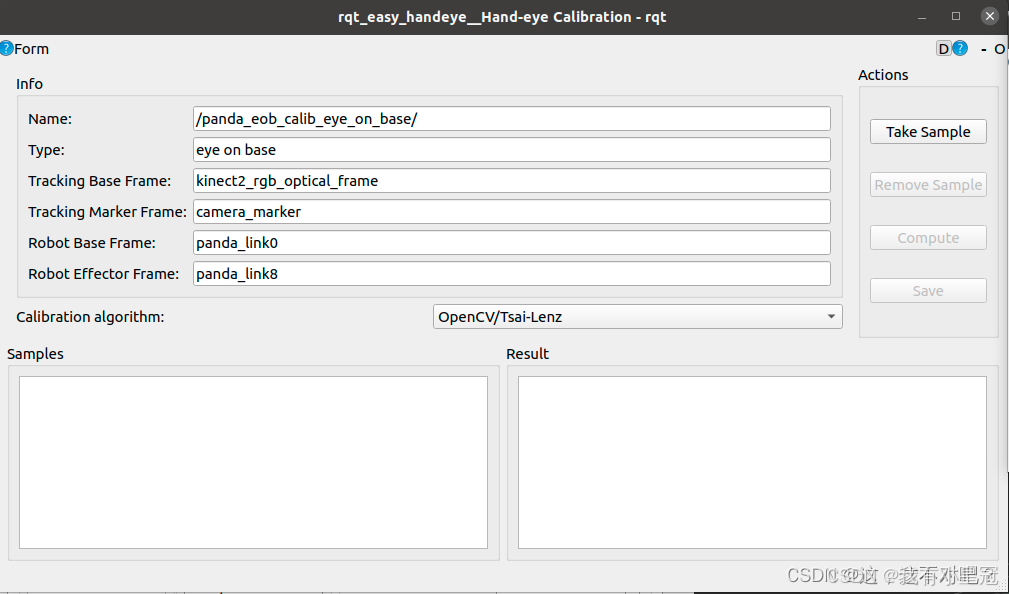

roslaunch easy_handeye zed_panda_easy.launch出来两个rviz和以下两个窗口

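You must add an image view before calibration can start! Open rqt_image_view (`rosrun rqt_image_view rqt_image_view`), add an Image display in RViz (Add → Image), or in rqt use Plugins → Visualization → Image View.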

The following is taken from the CSDN post "在Ubuntu20.04下对Kinect V2+Franka panda进行眼在手外标定" (Eye-to-hand calibration of Kinect V2 + Franka Panda on Ubuntu 20.04).

Start calibrating, working in the other two windows at the same time:

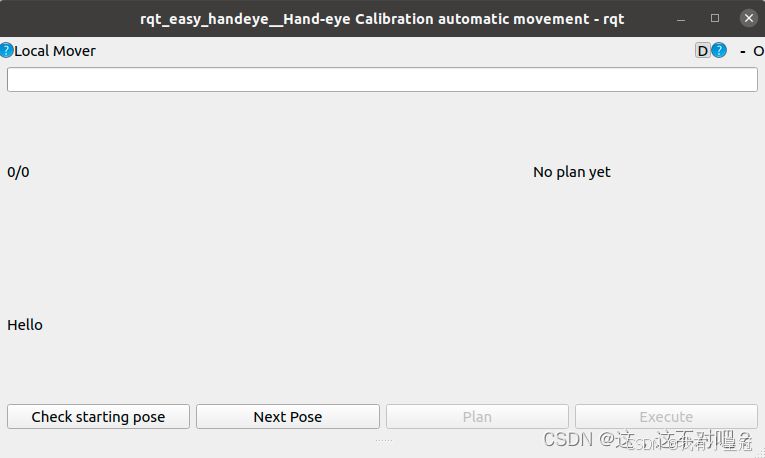

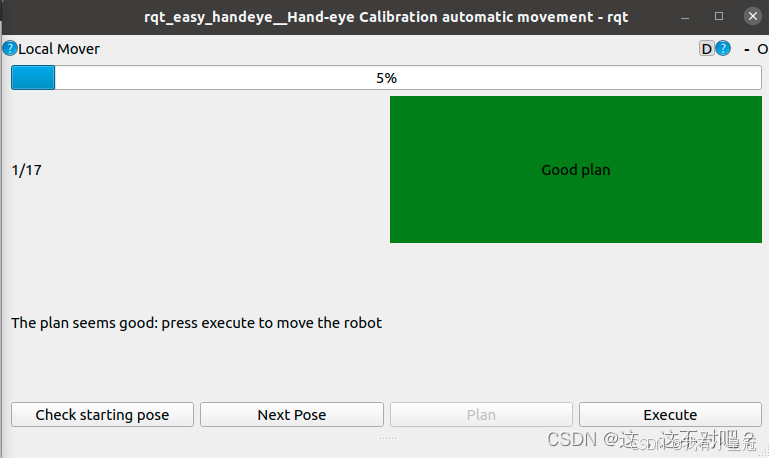

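Click Check starting pose. When "Ready to start: click on next pose" appears, sampling can begin. Click Take Sample to record a sample, then click Next Pose and Plan (followed by Execute to move the arm), as shown: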

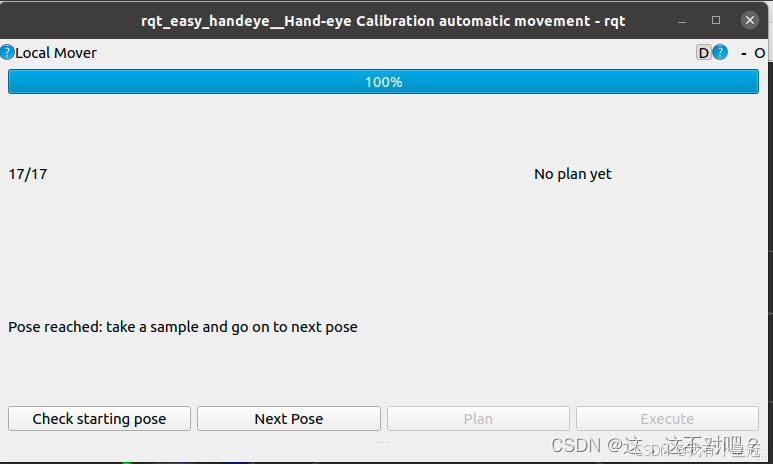
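Hand-eye solvers (for example Tsai-Lenz) need motions whose rotation axes are not all parallel. Poses that only translate, or only rotate about one axis, leave the result poorly constrained. A minimal sketch for checking a set of samples, assuming you note the effector orientation of each one as a quaternion (x, y, z, w), for example from `rosrun tf tf_echo world panda_link8`. The helper names are hypothetical and the thresholds are arbitrary illustrative values.

```python
import math
from itertools import combinations

Quat = tuple  # (x, y, z, w) unit quaternion, ROS order


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b for (x, y, z, w) quaternions."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw,
            aw * bw - ax * bx - ay * by - az * bz)


def conj(q: Quat) -> Quat:
    x, y, z, w = q
    return (-x, -y, -z, w)


def motion_axes(quats, min_angle_deg=5.0):
    """Unit rotation axes of the relative motions between consecutive samples,
    skipping motions that rotate less than min_angle_deg."""
    axes = []
    for a, b in zip(quats, quats[1:]):
        x, y, z, w = q_mul(conj(a), b)
        n = math.sqrt(x * x + y * y + z * z)
        angle = 2.0 * math.atan2(n, abs(w))  # rotation angle in [0, pi]
        if n > 1e-12 and math.degrees(angle) >= min_angle_deg:
            axes.append((x / n, y / n, z / n))
    return axes


def has_non_parallel_axes(quats, min_angle_deg=5.0, min_axis_sep_deg=10.0) -> bool:
    """True if at least two sampled motions rotate about clearly different axes."""
    min_sin = math.sin(math.radians(min_axis_sep_deg))
    for u, v in combinations(motion_axes(quats, min_angle_deg), 2):
        cross = (u[1] * v[2] - u[2] * v[1],
                 u[2] * v[0] - u[0] * v[2],
                 u[0] * v[1] - u[1] * v[0])
        # |u x v| = sin(angle between axes); the sign of either axis does not matter
        if math.sqrt(sum(c * c for c in cross)) >= min_sin:
            return True
    return False
```

If `has_non_parallel_axes` returns False for your samples, add poses that tilt the flange about a different axis before clicking Compute.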

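Repeat this cycle until you have 17 samples, then click Compute to calculate and output the transformation matrix, as shown in the figure.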
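With `eye_on_hand` set to false, the result is the fixed transform from `robot_base_frame` (world) to `tracking_base_frame` (zed2i_left_camera_frame). Because the marker is rigidly attached to the flange (panda_link8), every sample must satisfy world_T_link8 · link8_T_marker = world_T_cam · cam_T_marker with the same link8_T_marker. A minimal sketch that uses this to sanity-check a result: it recovers link8_T_marker from each sample and reports how far apart the recovered translations are. Transforms are plain 4×4 nested lists, and the helper names are hypothetical. In practice you would fill world_T_link8 from tf and cam_T_marker from the ArUco pose recorded at each sample.

```python
import math


def matmul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t):
    """Inverse of a rigid transform [R p; 0 1], i.e. [R^T  -R^T p; 0 1]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


def flange_to_marker(world_T_flange, world_T_cam, cam_T_marker):
    """link8_T_marker implied by one sample and a candidate world_T_cam."""
    return matmul(rigid_inverse(world_T_flange), matmul(world_T_cam, cam_T_marker))


def translation_spread(samples, world_T_cam):
    """Largest distance (m) between the flange->marker translations of all samples.

    samples: list of (world_T_flange, cam_T_marker) pairs.
    A consistent calibration gives a spread close to zero.
    """
    pts = []
    for world_T_flange, cam_T_marker in samples:
        m = flange_to_marker(world_T_flange, world_T_cam, cam_T_marker)
        pts.append([m[0][3], m[1][3], m[2][3]])
    return max(math.dist(p, q) for p in pts for q in pts)
```

A spread of a few millimetres is plausible with real detection noise; a spread of several centimetres suggests a wrong marker size, wrong frames in zed_panda_easy.launch, or too little pose variety.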

------------------------------------------------------------------------------------------------

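Following the steps above, I kept getting an error every time I clicked Plan, and none of the blog posts I found solved it. In the end I finished sampling by moving the Franka by hand: drag the arm to a pose, then click Take Sample. Clumsy, but it works, and Compute still produces a result! (When sampling this way, setting freehand_robot_movement to true in zed_panda_easy.launch should skip the automatic-motion window.)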
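Once Compute has produced a result, easy_handeye's publish.launch (the commented command at the end of zed_panda_easy.launch) can broadcast it as a tf. If you want to use the numbers directly, for example to express a point detected in the camera frame in the robot's world frame, here is a minimal sketch. It assumes the result is read as a translation plus a quaternion in ROS (x, y, z, w) order; `quat_to_matrix` and `camera_point_to_world` are hypothetical helpers.

```python
import math


def quat_to_matrix(qx, qy, qz, qw):
    """3x3 rotation matrix from a quaternion in ROS (x, y, z, w) order; normalises first."""
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    x, y, z, w = qx / n, qy / n, qz / n, qw / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def camera_point_to_world(translation, quaternion, point):
    """Map a point from the camera frame into the robot base (world) frame:
    p_world = R * p_cam + t, with (R, t) the calibrated world->camera transform."""
    r = quat_to_matrix(*quaternion)
    return tuple(sum(r[i][k] * point[k] for k in range(3)) + translation[i] for i in range(3))
```

For example, `camera_point_to_world(t, q, (0.0, 0.0, 0.8))` gives the world coordinates of a point 0.8 m straight in front of the camera origin, where `t` and `q` are the values from Compute.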

Done. Hooray!

Franka arm hand-eye calibration: steps and practice
