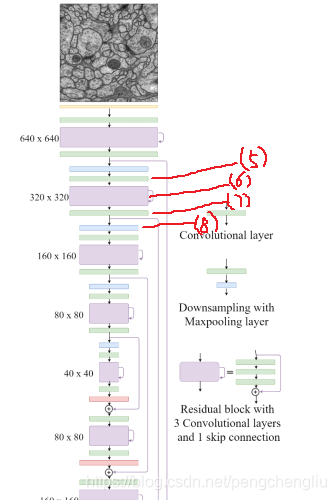

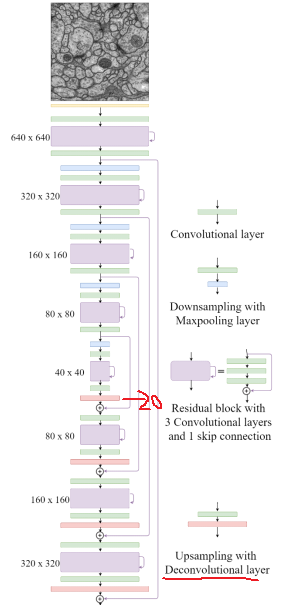

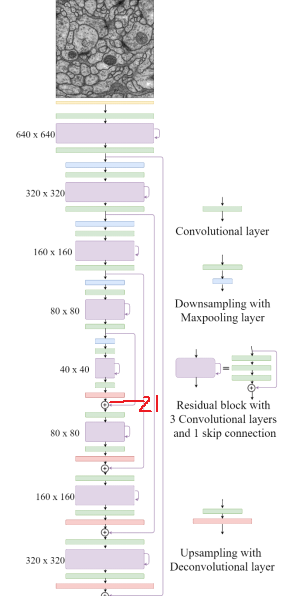

I. Network structure

The parameters of each layer are:

II. Implementing FusionNet step by step

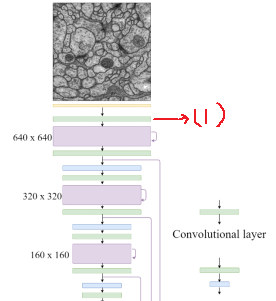

(1)

输入图像为640*640,3通道。

经过一个卷积层,代码为:

self.conv11 = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1), #输入通道为3,输出通道为64。padding=1,输出尺寸不变。还是640*640.

nn.BatchNorm2d(64),

nn.ReLU(),

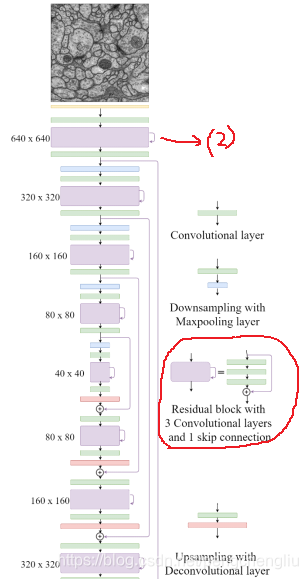

)(2)

连续三个卷积层为:

self.residual1 = nn.Sequential(

nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1), #尺寸不变,还是640*640

nn.BatchNorm2d(64),

nn.ReLU(),

nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1), # 尺寸不变,还是640*640

nn.BatchNorm2d(64),

nn.ReLU(),

nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1), # 尺寸不变,还是640*640

nn.BatchNorm2d(64),

)在forward函数中增加跳跃连接、求和:

def forward(self, x): #输入图像640*640

conv11 = self.conv11(x) #卷积,尺寸不变,还是640*640。通道数变为64。

residual1 = self.residual1(conv11)

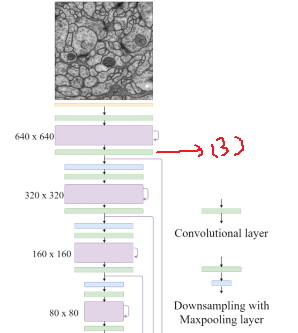

out1 = conv11 + residual1 #跳跃连接,求和(3)

同(1):

self.conv12 = nn.Sequential(

nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1), # 尺寸不变,还是640*640.

nn.BatchNorm2d(64),

nn.ReLU(),

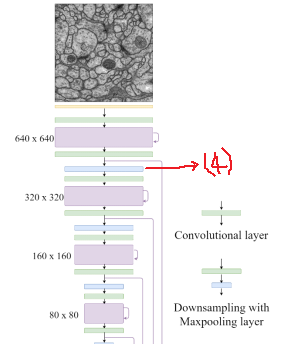

)(4)下采样

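Downsampling halves the size.

    # 1st downsampling: blue
    self.pool1 = nn.Sequential(  # a strided convolution is used here instead of a pooling layer; it still halves the size
        nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1),  # size halved to 320*320
        nn.BatchNorm2d(64),
        nn.ReLU(),
    )

(5-8)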
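Steps (1)-(4) all rest on one size rule for `nn.Conv2d`: for input size n, kernel size k, stride s, and padding p, the output size along each axis is floor((n + 2p - k) / s) + 1 (assuming dilation 1). The following is a minimal sketch for checking the sizes quoted in the code comments; the helper name `conv_out_size` is hypothetical and not part of the original code.

```python
def conv_out_size(n: int, kernel_size: int, stride: int, padding: int) -> int:
    """Output size of a 2D convolution along one axis (dilation assumed to be 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1


# stride=1, padding=1 with a 3x3 kernel keeps the size
same = conv_out_size(640, kernel_size=3, stride=1, padding=1)
# stride=2 with the same kernel and padding halves it
half = conv_out_size(640, kernel_size=3, stride=2, padding=1)
print(same, half)  # 640 320
```

The same rule explains why a strided convolution can stand in for a pooling layer here: with an even input size, stride 2 and padding 1 give exactly half the input size.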

Same as (1)-(4):

    # 2nd sandwich: green-purple-green
    self.conv21 = nn.Sequential(
        nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),  # 64 input channels, 128 output channels; padding=1 keeps the size at 320*320
        nn.BatchNorm2d(128),
        nn.ReLU(),
    )
    self.residual2 = nn.Sequential(
        nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),  # size unchanged, still 320*320
        nn.BatchNorm2d(128),
        nn.ReLU(),
        nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),  # size unchanged, still 320*320
        nn.BatchNorm2d(128),
        nn.ReLU(),
        nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),  # size unchanged, still 320*320
        nn.BatchNorm2d(128),
    )
    self.conv22 = nn.Sequential(
        nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),  # size unchanged, still 320*320
        nn.BatchNorm2d(128),
        nn.ReLU(),
    )
    # 2nd downsampling: blue
    self.pool2 = nn.Sequential(  # a strided convolution is used here instead of a pooling layer; it still halves the size
        nn.Conv2d(128, 128, kernel_size=3, stride=2, padding=1),  # size halved to 160*160
        nn.BatchNorm2d(128),
        nn.ReLU(),
    )

In the forward function:

#第2个三明治:绿紫绿

conv21 = self.conv21(pool1) #通道由64变为128。尺寸不变。还是320*320.

residual2 = self.residual2(conv21) # 尺寸不变,还是320*320。通道还是128

out2 = conv21 + residual2

conv22 = self.conv22(out2)

#第2个下采样:蓝

pool2 = self.pool2(conv22) #尺寸减半,变为160*160。通道不变还是128.(9-12)

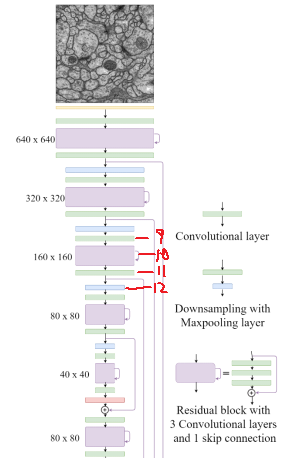

同前。
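Every encoder stage repeats this pattern with twice the channels and half the size. As a rough cross-check, the sketch below tabulates the channel counts and sizes used across the four stages and the bridge in this walkthrough. The numbers come from the layer definitions in the article; the helper `conv_out_size` and the loop are illustrative assumptions, not the author's code.

```python
def conv_out_size(n, kernel_size=3, stride=1, padding=1):
    """Output size of a 2D convolution along one axis (dilation assumed to be 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1


size, channels = 640, 64  # values after the first convolution of stage 1
stages = []
for stage in range(1, 5):
    pooled = conv_out_size(size, stride=2)  # strided-convolution downsampling
    stages.append((stage, channels, size, pooled))
    size, channels = pooled, channels * 2   # next stage: half the size, double the channels

for stage, ch, before, after in stages:
    print(f"stage {stage}: {ch} channels, {before}*{before} -> downsample -> {after}*{after}")
print(f"bridge: {channels} channels, {size}*{size}")
```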

    # 3rd sandwich: green-purple-green
    self.conv31 = nn.Sequential(
        nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),  # 128 input channels, 256 output channels; padding=1 keeps the size at 160*160
        nn.BatchNorm2d(256),
        nn.ReLU(),
    )
    self.residual3 = nn.Sequential(
        nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # size unchanged, still 160*160
        nn.BatchNorm2d(256),
        nn.ReLU(),
        nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # size unchanged, still 160*160
        nn.BatchNorm2d(256),
        nn.ReLU(),
        nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # size unchanged, still 160*160
        nn.BatchNorm2d(256),
    )
    self.conv32 = nn.Sequential(
        nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # size unchanged, still 160*160
        nn.BatchNorm2d(256),
        nn.ReLU(),
    )
    # 3rd downsampling: blue
    self.pool3 = nn.Sequential(  # a strided convolution is used here instead of a pooling layer; it still halves the size
        nn.Conv2d(256, 256, kernel_size=3, stride=2, padding=1),  # size halved to 80*80
        nn.BatchNorm2d(256),
        nn.ReLU(),
    )

In the forward() function:

#第3个三明治:绿紫绿

conv31 = self.conv31(pool2) #通道由128变为256。尺寸不变。还是160*160.

residual3 = self.residual3(conv31) # 尺寸不变,还是160*160。通道还是256

out3 = conv31 + residual3

conv32 = self.conv32(out3)

#第3个下采样:蓝

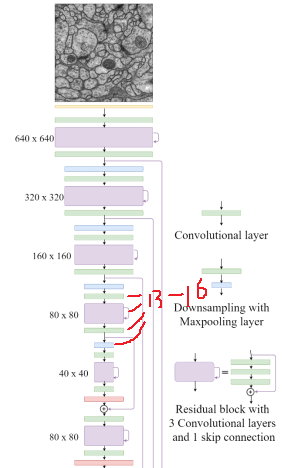

pool3 = self.pool3(conv32) #尺寸减半,变为80*80。通道不变还是256.(13-16)

    # 4th sandwich: green-purple-green
    self.conv41 = nn.Sequential(
        nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),  # 256 input channels, 512 output channels; padding=1 keeps the size at 80*80
        nn.BatchNorm2d(512),
        nn.ReLU(),
    )
    self.residual4 = nn.Sequential(
        nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # size unchanged, still 80*80
        nn.BatchNorm2d(512),
        nn.ReLU(),
        nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # size unchanged, still 80*80
        nn.BatchNorm2d(512),
        nn.ReLU(),
        nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # size unchanged, still 80*80
        nn.BatchNorm2d(512),
    )
    self.conv42 = nn.Sequential(
        nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # size unchanged, still 80*80
        nn.BatchNorm2d(512),
        nn.ReLU(),
    )
    # 4th downsampling: blue
    self.pool4 = nn.Sequential(  # a strided convolution is used here instead of a pooling layer; it still halves the size
        nn.Conv2d(512, 512, kernel_size=3, stride=2, padding=1),  # size halved to 40*40
        nn.BatchNorm2d(512),
        nn.ReLU(),
    )

In the forward() function:

#第4个三明治:绿紫绿

conv41 = self.conv41(pool3) #通道由256变为512。尺寸不变。还是80*80.

residual4 = self.residual4(conv41) # 尺寸不变,还是80*80。通道还是512

out4 = conv41 + residual4

conv42 = self.conv42(out4)

#第4个下采样:蓝

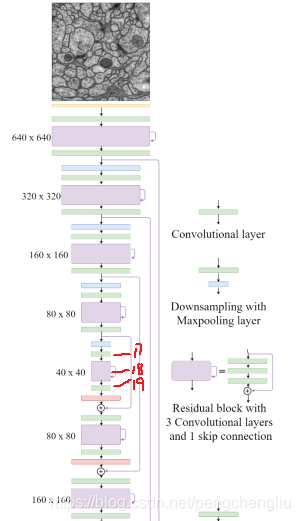

pool4 = self.pool4(conv42) #尺寸减半,变为40*40。通道不变还是512.(17-19)bridge

    # Bridge in the middle: green-purple-green
    self.convb1 = nn.Sequential(
        nn.Conv2d(512, 1024, kernel_size=3, stride=1, padding=1),  # 512 input channels, 1024 output channels; padding=1 keeps the size at 40*40
        nn.BatchNorm2d(1024),
        nn.ReLU(),
    )
    self.residualb = nn.Sequential(
        nn.Conv2d(1024, 1024, kernel_size=3, stride=1, padding=1),  # size unchanged, still 40*40
        nn.BatchNorm2d(1024),
        nn.ReLU(),
        nn.Conv2d(1024, 1024, kernel_size=3, stride=1, padding=1),  # size unchanged, still 40*40
        nn.BatchNorm2d(1024),
        nn.ReLU(),
        nn.Conv2d(1024, 1024, kernel_size=3, stride=1, padding=1),  # size unchanged, still 40*40
        nn.BatchNorm2d(1024),
    )
    self.convb2 = nn.Sequential(
        nn.Conv2d(1024, 1024, kernel_size=3, stride=1, padding=1),  # size unchanged, still 40*40
        nn.BatchNorm2d(1024),
        nn.ReLU(),
    )

In forward():

    # Bridge in the middle: green-purple-green
    convb1 = self.convb1(pool4)  # channels go from 512 to 1024; size stays 40*40
    residualb = self.residualb(convb1)  # size stays 40*40; channels stay 1024
    outb = convb1 + residualb
    convb2 = self.convb2(outb)

(20) Upsampling
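Upsampling here uses `nn.ConvTranspose2d`, whose output size along each axis is (n - 1) * s - 2p + k + output_padding (assuming dilation 1). The sketch below checks that the settings used for `up4` (kernel_size=3, stride=2, padding=1, output_padding=1) take the 40*40 bridge output back to 80*80; the helper name `conv_transpose_out_size` is hypothetical.

```python
def conv_transpose_out_size(n: int, kernel_size: int, stride: int,
                            padding: int, output_padding: int) -> int:
    """Output size of a 2D transposed convolution along one axis (dilation assumed to be 1)."""
    return (n - 1) * stride - 2 * padding + kernel_size + output_padding


with_output_padding = conv_transpose_out_size(40, kernel_size=3, stride=2, padding=1, output_padding=1)
without_output_padding = conv_transpose_out_size(40, kernel_size=3, stride=2, padding=1, output_padding=0)
print(with_output_padding, without_output_padding)  # 80 79
```

Without `output_padding=1` the result would be 79*79, one pixel short of the 80*80 feature map from the 4th encoder stage.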

    # 4th upsampling: red
    self.up4 = nn.Sequential(
        nn.ConvTranspose2d(1024, 512, kernel_size=3, stride=2, padding=1, output_padding=1),  # size doubled to 80*80; channels go from 1024 to 512
        nn.BatchNorm2d(512),
        nn.ReLU(),
    )

(21) Merging

The forward() function at this point is:

    def forward(self, x):  # input image is 640*640
        # 1st sandwich: green-purple-green
        conv11 = self.conv11(x)  # convolution; size stays 640*640, channels become 64
        residual1 = self.residual1(conv11)
        out1 = conv11 + residual1  # skip connection, element-wise sum
        conv12 = self.conv12(out1)
        # 1st downsampling: blue
        pool1 = self.pool1(conv12)  # size halved to 320*320; channels stay 64
        # 2nd sandwich: green-purple-green
        conv21 = self.conv21(pool1)  # channels go from 64 to 128; size stays 320*320
        residual2 = self.residual2(conv21)  # size stays 320*320; channels stay 128
        out2 = conv21 + residual2
        conv22 = self.conv22(

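This article explains in detail how to implement FusionNet, from the network structure to a step-by-step code implementation covering convolution layers, skip connections, downsampling, and upsampling. During simplification, common operations are extracted into separate functions to make the code more readable. It closes with notes on writing the code and designing the network structure.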
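The simplified code itself is not included in this excerpt. As an illustration only of what extracting the common operations could look like, here is a minimal sketch of one encoder stage built from small helpers. The names `conv_block`, `residual_block`, and `EncoderStage` are hypothetical and are not the author's functions; the layer settings mirror the ones shown in the step-by-step code.

```python
import torch
from torch import nn


def conv_block(in_ch, out_ch, stride=1):
    """3x3 conv + batch norm + ReLU; stride=2 turns it into the downsampling layer."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, stride=stride, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(),
    )


def residual_block(ch):
    """Three 3x3 convs; the last one has no ReLU, matching residual1..residual4."""
    return nn.Sequential(
        conv_block(ch, ch),
        conv_block(ch, ch),
        nn.Conv2d(ch, ch, kernel_size=3, stride=1, padding=1),
        nn.BatchNorm2d(ch),
    )


class EncoderStage(nn.Module):
    """One conv / residual / conv 'sandwich' followed by strided-conv downsampling."""

    def __init__(self, in_ch, out_ch):
        super().__init__()
        self.conv1 = conv_block(in_ch, out_ch)
        self.residual = residual_block(out_ch)
        self.conv2 = conv_block(out_ch, out_ch)
        self.pool = conv_block(out_ch, out_ch, stride=2)

    def forward(self, x):
        conv1 = self.conv1(x)
        out = conv1 + self.residual(conv1)  # skip connection, element-wise sum
        conv2 = self.conv2(out)
        return conv2, self.pool(conv2)      # features before and after downsampling


# Small 32*32 input instead of 640*640 to keep the check quick.
stage = EncoderStage(3, 64)
stage.eval()
with torch.no_grad():
    features, pooled = stage(torch.randn(1, 3, 32, 32))
print(features.shape, pooled.shape)
```

Returning the pre-downsampling features alongside the pooled output keeps them available for the later merge step, whichever merge operation the full network uses.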
