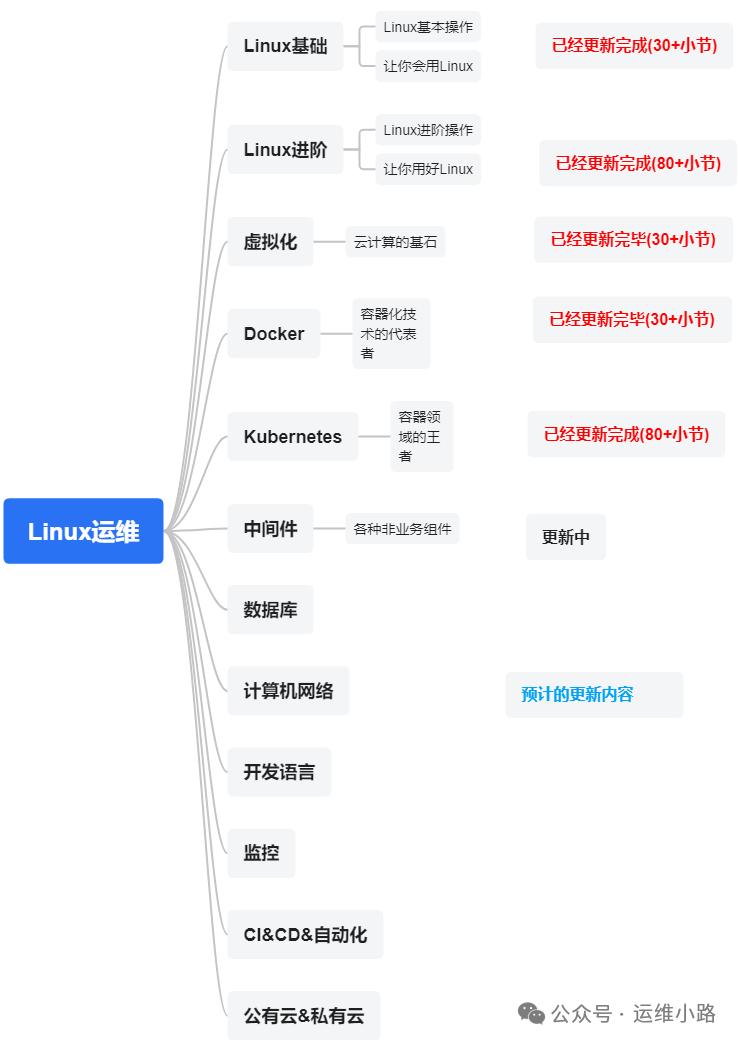

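About the author: an ops engineer whose résumé does not list a single "expert in" skill. Tap the blue 《运维小路》 link at the top to follow me. The mind map below shows the planned content and the current progress (updated irregularly).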

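Middleware, as I define it, is the software that certain business functions depend on. It includes the following:

Web servers

Proxy servers

ZooKeeper

Kafka (this chapter)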

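Under normal conditions partitions rarely end up in an abnormal state, but it does happen when Brokers go offline unexpectedly, especially when several nodes go down at once. To demonstrate, I took 2 nodes of the 5-node Kafka cluster from the previous section offline, which reproduces the situation.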

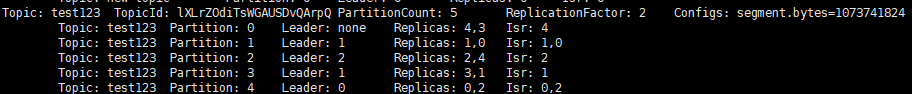

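Normal state before the failure

To make the failure easier to reproduce, the topic is configured with 2 replicas. Both replicas of partition 0 happen to sit on the last 2 nodes (Brokers 3 and 4), so shutting those 2 nodes down reproduces the situation exactly.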

Topic: test123 TopicId: lXLrZOdiTsWGAUSDvQArpQ PartitionCount: 5 ReplicationFactor: 2 Configs: segment.bytes=1073741824

Topic: test123 Partition: 0 Leader: 4 Replicas: 4,3 Isr: 4,3

Topic: test123 Partition: 1 Leader: 1 Replicas: 1,0 Isr: 1,0

Topic: test123 Partition: 2 Leader: 2 Replicas: 2,4 Isr: 2,4

Topic: test123 Partition: 3 Leader: 3 Replicas: 3,1 Isr: 3,1

Topic: test123 Partition: 4 Leader: 0 Replicas: 0,2 Isr: 0,2
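
The effect of losing Brokers 3 and 4 can be worked out from the assignment above before touching the cluster. The following is a minimal Python sketch, not part of the original procedure: it parses the describe lines shown above and splits the partitions into those that lose every replica and those that lose only some. The function name `classify_partitions` is made up for illustration.

```python
import re

# Describe output copied from the listing above (header line omitted).
DESCRIBE_OUTPUT = """\
Topic: test123 Partition: 0 Leader: 4 Replicas: 4,3 Isr: 4,3
Topic: test123 Partition: 1 Leader: 1 Replicas: 1,0 Isr: 1,0
Topic: test123 Partition: 2 Leader: 2 Replicas: 2,4 Isr: 2,4
Topic: test123 Partition: 3 Leader: 3 Replicas: 3,1 Isr: 3,1
Topic: test123 Partition: 4 Leader: 0 Replicas: 0,2 Isr: 0,2
"""

LINE_RE = re.compile(r"Partition:\s*(\d+)\s+Leader:\s*\S+\s+Replicas:\s*([\d,]+)")


def classify_partitions(describe_text, dead_brokers):
    """Return (lost, degraded): partitions with all / only some replicas on dead brokers."""
    lost, degraded = [], []
    for line in describe_text.splitlines():
        match = LINE_RE.search(line)
        if not match:
            continue  # skip lines that are not per-partition rows
        partition = int(match.group(1))
        replicas = {int(b) for b in match.group(2).split(",")}
        if replicas <= dead_brokers:
            lost.append(partition)      # no surviving replica, so no Leader can be elected
        elif replicas & dead_brokers:
            degraded.append(partition)  # still has a live replica, but the ISR shrinks
    return lost, degraded


lost, degraded = classify_partitions(DESCRIBE_OUTPUT, dead_brokers={3, 4})
print("all replicas lost:", lost)
print("some replicas lost:", degraded)
```

With this data, partition 0 is the only one without a surviving replica, while partitions 2 and 3 each keep one live replica.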

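Repairing the failure

Partition without a Leader

Both replicas of partition 0 were on the downed nodes, so the partition no longer has a Leader. In some versions the Leader is shown as -1.

Repair approach

Because both replicas are gone and the nodes cannot be recovered, the data in this partition cannot be retrieved. The goal here is only to get all 5 partitions back to a normal state.

Repair steps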

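Create topic.json (the file name is arbitrary). Its main job is to name the Topic to be reassigned. To cover several topics, append more {"topic": "..."} entries to the list, separated by commas.

{
  "topics": [
    {"topic": "test123"}
  ],
  "version": 1
}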
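
When several topics are involved, the file can be generated rather than typed by hand, which avoids stray commas or comments that would make the JSON invalid. A small Python sketch under that assumption; `build_topics_file` is a hypothetical helper name:

```python
import json


def build_topics_file(topic_names):
    """Return the JSON text for --topics-to-move-json-file, one entry per topic."""
    payload = {
        "topics": [{"topic": name} for name in topic_names],
        "version": 1,
    }
    return json.dumps(payload, indent=2)


topics_json = build_topics_file(["test123"])
print(topics_json)

# To save it next to the Kafka scripts:
# with open("topic.json", "w") as handle:
#     handle.write(topics_json)
```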

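Generate the reassignment plan. This command takes the Topics listed in topic.json and proposes a new assignment for them across the 3 Brokers 0, 1 and 2. It only generates the plan; no partitions are actually moved at this step.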

./bin/kafka-reassign-partitions.sh \
  --zookeeper 192.168.31.143:2181/kafka \
  --topics-to-move-json-file topic.json \
  --broker-list "0,1,2" \
  --generate

The plan generated this way covers every partition of the topic.

[root@localhost kafka_2.13-2.8.2]# ./bin/kafka-reassign-partitions.sh --zookeeper 192.168.31.143:2181/kafka --topics-to-move-json-file topic.json --broker-list "0,1,2" --generate

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Warning: --zookeeper is deprecated, and will be removed in a future version of Kafka.

Current partition replica assignment

{"version":1,"partitions":[{"topic":"test123","partition":0,"replicas":[4,3],"log_dirs":["any","any"]},{"topic":"test123","partition":1,"replicas":[1,0],"log_dirs":["any","any"]},{"topic":"test123","partition":2,"replicas":[2,4],"log_dirs":["any","any"]},{"topic":"test123","partition":3,"replicas":[3,1],"log_dirs":["any","any"]},{"topic":"test123","partition":4,"replicas":[0,2],"log_dirs":["any","any"]}]}

Proposed partition reassignment configuration

{"version":1,"partitions":[{"topic":"test123","partition":0,"replicas":[2,0],"log_dirs":["any","any"]},{"topic":"test123","partition":1,"replicas":[0,1],"log_dirs":["any","any"]},{"topic":"test123","partition":2,"replicas":[1,2],"log_dirs":["any","any"]},{"topic":"test123","partition":3,"replicas":[2,1],"log_dirs":["any","any"]},{"topic":"test123","partition":4,"replicas":[0,2],"log_dirs":["any","any"]}]}

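To limit the impact, we only need to adjust the unavailable partition 0. In practice, take just the needed part of the generated output above and save it as a separate JSON file (reassignment.json in the command used later). The file below repairs only the broken partition 0 and places its 2 replicas on Broker 1 and Broker 2.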

{
  "version": 1,
  "partitions": [
    {"topic": "test123", "partition": 0, "replicas": [1, 2]}
  ]
}
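
Cutting the needed part out of the generated plan can also be scripted. The Python sketch below takes the "Proposed partition reassignment configuration" JSON printed earlier and keeps only the listed partitions, dropping the optional `log_dirs` field. Note that it reuses the tool's proposal, so partition 0 ends up on Brokers 2 and 0 rather than the hand-picked 1 and 2. `filter_plan` is a hypothetical helper name.

```python
import json

# Proposed plan copied from the --generate output above.
PROPOSED = (
    '{"version":1,"partitions":['
    '{"topic":"test123","partition":0,"replicas":[2,0],"log_dirs":["any","any"]},'
    '{"topic":"test123","partition":1,"replicas":[0,1],"log_dirs":["any","any"]},'
    '{"topic":"test123","partition":2,"replicas":[1,2],"log_dirs":["any","any"]},'
    '{"topic":"test123","partition":3,"replicas":[2,1],"log_dirs":["any","any"]},'
    '{"topic":"test123","partition":4,"replicas":[0,2],"log_dirs":["any","any"]}]}'
)


def filter_plan(plan_json, topic, partitions):
    """Keep only the given partitions of one topic from a proposed plan."""
    plan = json.loads(plan_json)
    kept = [
        {"topic": p["topic"], "partition": p["partition"], "replicas": p["replicas"]}
        for p in plan["partitions"]
        if p["topic"] == topic and p["partition"] in partitions
    ]
    return {"version": plan["version"], "partitions": kept}


reduced = filter_plan(PROPOSED, "test123", {0})
print(json.dumps(reduced))
```

The printed JSON can be saved as the reassignment file; if different target Brokers are wanted, edit the `replicas` list before saving.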

Run the repair

./bin/kafka-reassign-partitions.sh \
  --zookeeper 192.168.31.143:2181/kafka \
  --reassignment-json-file reassignment.json \
  --execute

# Output

{"version":1,"partitions":[{"topic":"test123","partition":0,"replicas":[4,3],"log_dirs":["any","any"]}]}

Save this to use as the --reassignment-json-file option during rollback

Successfully started partition reassignment for test123-0

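Describe output while the reassignment is in progress. Put simply, at this point the 2 new replicas have only been added to the replica list.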

Topic: test123 TopicId: lXLrZOdiTsWGAUSDvQArpQ PartitionCount: 5 ReplicationFactor: 2 Configs: segment.bytes=1073741824

Topic: test123 Partition: 0 Leader: none Replicas: 1,2,4,3 Isr: 4 Adding Replicas: 1,2 Removing Replicas: 4,3
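
The in-progress line above can be checked mechanically when many partitions are involved. The sketch below is an illustration only: it pulls the Leader, ISR, and the pending "Adding Replicas" and "Removing Replicas" lists out of one describe line. The helper names, and the rule "none of the new replicas is in the ISR yet" as a sign that the reassignment cannot finish, are assumptions made for this example.

```python
import re


def _ids(label, line):
    """Return the comma-separated broker ids that follow `label`, or []."""
    match = re.search(label + r"\s*([\d,]+)", line)
    return [int(x) for x in match.group(1).split(",")] if match else []


def parse_progress(line):
    """Extract leader, ISR and pending replica changes from one describe line."""
    leader = re.search(r"Leader:\s*(\S+)", line)
    return {
        "leader": leader.group(1) if leader else None,
        "isr": _ids(r"Isr:", line),
        "adding": _ids(r"Adding Replicas:", line),
        "removing": _ids(r"Removing Replicas:", line),
    }


def new_replicas_not_in_sync(state):
    """True while a reassignment is pending and no added replica has joined the ISR."""
    return bool(state["adding"]) and not set(state["adding"]) & set(state["isr"])


LINE = ("Topic: test123 Partition: 0 Leader: none Replicas: 1,2,4,3 Isr: 4 "
        "Adding Replicas: 1,2 Removing Replicas: 4,3")
state = parse_progress(LINE)
print(state)
print("waiting on new replicas:", new_replicas_not_in_sync(state))
```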

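Edit the ZooKeeper data: set the partition's Leader and ISR by hand, then wait for Kafka to recover by itself. This method worked on the versions we ran earlier, but on the current version the partition stayed stuck in the in-progress state shown above and only returned to normal after a restart.

So if you hit this problem in a real environment, test the procedure on the same version first and only then repair production (treat production with respect).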

[zk: localhost:2181(CONNECTED) 4] get /kafka/brokers/topics/test123/partitions/0/state

{"controller_epoch":25,"leader":-1,"version":1,"leader_epoch":3,"isr":[4]}

[zk: localhost:2181(CONNECTED) 5] set /kafka/brokers/topics/test123/partitions/0/state '{"controller_epoch":5,"leader":1,"version":1,"epoch_zookeeper":35,"leader_epoch":6,"isr":[1,2]}'
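
Hand-typing the state JSON is error-prone, so a small helper can build the payload from the value read with `get` and run basic sanity checks first. This is only a sketch under stated assumptions: it keeps `controller_epoch` unchanged and increases `leader_epoch` by one, which differs from the values used in the command above, and whether a given Kafka version accepts a manually written state is exactly what needs to be tested beforehand. `build_partition_state` is a hypothetical name.

```python
import json


def build_partition_state(current_state_json, leader, isr, live_brokers):
    """Build a new partition state string from the current one (illustrative only)."""
    current = json.loads(current_state_json)
    if leader not in isr:
        raise ValueError("leader must be a member of the ISR")
    if not set(isr) <= set(live_brokers):
        raise ValueError("ISR contains brokers that are not alive")
    new_state = dict(current)
    new_state["leader"] = leader
    new_state["isr"] = list(isr)
    # Assumption: bump leader_epoch by one; the article's command used other epoch values.
    new_state["leader_epoch"] = current["leader_epoch"] + 1
    return json.dumps(new_state, separators=(",", ":"))


# State copied from the `get` output above.
CURRENT = '{"controller_epoch":25,"leader":-1,"version":1,"leader_epoch":3,"isr":[4]}'
payload = build_partition_state(CURRENT, leader=1, isr=[1, 2], live_brokers=[0, 1, 2])
print(payload)
```

The checks reject a Leader outside the ISR and an ISR that names a downed Broker, two mistakes that are easy to make when editing the string by hand.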

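One related case is not covered here: the partition still has a Leader, but its ISR is abnormal because nodes went offline. The fix is essentially the same repair procedure with an adjusted JSON file. The other remaining task is to clear the downed Brokers' data from ZooKeeper.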
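
For the case with a Leader but a shrunken ISR, the adjusted JSON file could be produced along the following lines. This Python sketch is an assumption about how one might do it, not the author's procedure: for each partition that still has a live replica, it keeps the surviving replicas in place and fills the gaps with the least-loaded live Broker. `replace_dead_replicas` is a hypothetical helper name.

```python
import json

# Replica assignment before the failure, taken from the describe output earlier.
CURRENT_ASSIGNMENT = {0: [4, 3], 1: [1, 0], 2: [2, 4], 3: [3, 1], 4: [0, 2]}


def replace_dead_replicas(topic, assignment, dead, live):
    """Plan replacements for partitions that lost some, but not all, replicas."""
    # Count how many replicas each live broker already hosts.
    load = {broker: 0 for broker in live}
    for replicas in assignment.values():
        for broker in replicas:
            if broker in load:
                load[broker] += 1
    partitions = []
    for partition in sorted(assignment):
        replicas = assignment[partition]
        survivors = [b for b in replicas if b not in dead]
        if not survivors or len(survivors) == len(replicas):
            continue  # fully lost or untouched partitions are handled elsewhere
        new_replicas = list(survivors)  # survivors stay first, keeping the current leader preferred
        while len(new_replicas) < len(replicas):
            candidates = [b for b in live if b not in new_replicas]
            if not candidates:
                raise ValueError("not enough live brokers for the replication factor")
            choice = min(candidates, key=lambda b: (load[b], b))
            new_replicas.append(choice)
            load[choice] += 1
        partitions.append({"topic": topic, "partition": partition, "replicas": new_replicas})
    return {"version": 1, "partitions": partitions}


plan = replace_dead_replicas("test123", CURRENT_ASSIGNMENT, dead={3, 4}, live=[0, 1, 2])
print(json.dumps(plan))
```

With the assignment from this article, the plan touches only partitions 2 and 3 and leaves partitions 1 and 4 alone.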

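运维小路

An ops engineer who can't code! An ops engineer who wants to learn to code! An ops engineer who can't manage to learn to code! An ops engineer who welcomes your messages!

Follow the WeChat official account 《运维小路》 for more content.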
