Internal details

KV cache memory usage (in bytes):

$$2 \times \text{precision} \times \text{num\_layers} \times \text{embed\_dim} \times \text{max\_seq\_len} \times \text{batch\_size}$$

or, in symbols:

$$2 \times batch\_size \times seq\_len \times n_{layers} \times d_{model} \times precision$$

The factor of 2 covers the key tensor and the value tensor of each layer, and precision is the number of bytes per element (4 for float32, 2 for float16).
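As a worked example of the formula above, the following sketch evaluates it in plain Python. The function name `kv_cache_bytes` and its parameter names are made up for illustration; the numbers plugged in (2 layers, hidden size 768, float32, 2048 positions) are the ones reported in the model config shown later in this post.

```python
def kv_cache_bytes(batch_size, seq_len, n_layers, d_model, bytes_per_elem):
    """Bytes needed for the KV cache: one key and one value tensor per layer."""
    return 2 * batch_size * seq_len * n_layers * d_model * bytes_per_elem


# vicuna-68m-style values: num_hidden_layers=2, hidden_size=768, float32 (4 bytes).
full_context = kv_cache_bytes(batch_size=1, seq_len=2048, n_layers=2,
                              d_model=768, bytes_per_elem=4)
print(full_context, "bytes =", full_context / 2**20, "MiB")  # 25165824 bytes = 24.0 MiB

# Halving the precision (float16, 2 bytes) halves the cache size.
half_precision = kv_cache_bytes(1, 2048, 2, 768, 2)
print(half_precision / 2**20, "MiB")  # 12.0 MiB
```

The formula assumes every attention head keeps its own keys and values, which holds for this model because num_key_value_heads equals num_attention_heads in its config.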

In Hugging Face's transformers library, the cache is stored in a structure called past_key_values.

past_key_values = (

(key_layer_1, value_layer_1),

(key_layer_2, value_layer_2),

...

(key_layer_N, value_layer_N),

)

Each key_layer_i and value_layer_i has shape (batch_size, num_heads, seq_len, head_dim), where

$$d_{model} = num\_heads \times head\_dim$$

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("/home/caixingyi/model/vicuna-68m")

tokenizer = AutoTokenizer.from_pretrained("/home/caixingyi/model/vicuna-68m")

input_ids = tokenizer.encode("Hello, my name is", return_tensors="pt")

print(input_ids, input_ids.shape)

Output:

tensor([[ 1, 15043, 29892, 590, 1024, 338]]) torch.Size([1, 6])

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("/home/caixingyi/model/vicuna-68m")

tokenizer = AutoTokenizer.from_pretrained("/home/caixingyi/model/vicuna-68m")

input_ids = tokenizer.encode("Hello, my name is", return_tensors="pt")

output = model(input_ids, use_cache=True)

next_token_logits = output.logits[:, -1, :]

print(output.logits.shape)

print(next_token_logits, next_token_logits.shape)

Output:

torch.Size([1, 6, 32000])

tensor([[ -5.0442, -11.5779, -3.1342, ..., -7.3937, -10.4950, -5.2277]],

grad_fn=<SliceBackward0>) torch.Size([1, 32000])

Shape of the KV cache

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("/home/caixingyi/model/vicuna-68m")

tokenizer = AutoTokenizer.from_pretrained("/home/caixingyi/model/vicuna-68m")

input_ids = tokenizer.encode("Hello, my name is", return_tensors="pt")

output = model(input_ids, use_cache=True)

past_key_values = output.past_key_values

print(len(past_key_values), past_key_values[0][0].shape)

Output:

2 torch.Size([1, 12, 6, 64])
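To connect this output to the memory formula at the top of this post, here is a small sketch that counts cache elements from shapes alone. It uses plain tuples in place of real tensors, and the helper name `cache_bytes_from_shapes` is hypothetical; the 4 bytes per element assumes float32, matching torch_dtype in the config below.

```python
from math import prod

# Stand-in for past_key_values: one (key_shape, value_shape) pair per layer,
# using the shape printed above instead of real tensors.
layer_shape = (1, 12, 6, 64)  # (batch_size, num_heads, seq_len, head_dim)
cache_shapes = ((layer_shape, layer_shape),) * 2  # len(past_key_values) == 2


def cache_bytes_from_shapes(shapes, bytes_per_elem):
    """Sum the element counts of every key and value tensor, then scale to bytes."""
    return sum(prod(k) + prod(v) for k, v in shapes) * bytes_per_elem


measured = cache_bytes_from_shapes(cache_shapes, bytes_per_elem=4)

# Same quantity from the formula, with d_model = num_heads * head_dim = 768.
batch_size, num_heads, seq_len, head_dim = layer_shape
predicted = 2 * batch_size * seq_len * len(cache_shapes) * (num_heads * head_dim) * 4

print(measured, predicted)  # 73728 73728
```

For the 6-token prompt the cache therefore takes 72 KiB, and it grows linearly with seq_len as more tokens are generated.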

Model config

LlamaConfig {

"_name_or_path": "/home/caixingyi/model/vicuna-68m",

"architectures": [

"LlamaForCausalLM"

],

"attention_bias": false,

"attention_dropout": 0.0,

"bos_token_id": 0,

"eos_token_id": 2,

"hidden_act": "silu",

"hidden_size": 768,

"initializer_range": 0.02,

"intermediate_size": 3072,

"max_position_embeddings": 2048,

"model_type": "llama",

"num_attention_heads": 12,

"num_hidden_layers": 2,

"num_key_value_heads": 12,

"pad_token_id": 1,

"pretraining_tp": 1,

"rms_norm_eps": 1e-06,

"rope_scaling": null,

"rope_theta": 10000.0,

"tie_word_embeddings": false,

"torch_dtype": "float32",

"transformers_version": "4.37.1",

"use_cache": true,

"vocab_size": 32000

}

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("/home/caixingyi/model/vicuna-68m")

tokenizer = AutoTokenizer.from_pretrained("/home/caixingyi/model/vicuna-68m")

input_ids = tokenizer.encode("Hello, my name is", return_tensors="pt")

output = model(input_ids, use_cache=True)

past_key_values = output.past_key_values

next_token_logits = output.logits[:, -1, :]

raw_past_key_values = output.past_key_values

next_token = torch.argmax(next_token_logits, dim=-1).unsqueeze(-1)

output = model(next_token, past_key_values=past_key_values, use_cache=True)

print(next_token)

past_key_values = output.past_key_values

print(len(past_key_values), past_key_values[0][0].shape)

Output:

tensor([[590]])

2 torch.Size([1, 12, 7, 64])

After this step, the tensors in past_key_values have changed: the sequence-length dimension grew by 1, from 6 to 7.
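Why appending one position is enough can be seen in a toy single-head attention written with plain Python lists. This is a minimal sketch, not how transformers implements it: the projection matrices are random, the dimensions are tiny, and all names (`project`, `attend`, `k_cache`, `v_cache`) are invented for illustration.

```python
import math
import random

random.seed(0)
DIM = 4  # toy hidden size; a real model would use d_model and several heads


def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def project(x, w):
    """Vector-matrix product: x has len(w) entries, result has len(w[0])."""
    return [sum(x[i] * w[i][j] for i in range(len(w))) for j in range(len(w[0]))]


def attend(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]


w_q, w_k, w_v = (rand_matrix(DIM, DIM) for _ in range(3))
tokens = rand_matrix(7, DIM)  # 6 prompt embeddings plus 1 newly generated one

# Prefill: compute and store keys/values for the 6 prompt tokens once.
k_cache = [project(x, w_k) for x in tokens[:6]]
v_cache = [project(x, w_v) for x in tokens[:6]]

# Decode step: only the new token is projected; its key/value are appended.
new = tokens[6]
k_cache.append(project(new, w_k))
v_cache.append(project(new, w_v))
cached_out = attend(project(new, w_q), k_cache, v_cache)

# Reference: recompute keys/values for all 7 tokens from scratch.
full_k = [project(x, w_k) for x in tokens]
full_v = [project(x, w_v) for x in tokens]
full_out = attend(project(new, w_q), full_k, full_v)

print(len(k_cache))  # 7
print(all(math.isclose(a, b) for a, b in zip(cached_out, full_out)))  # True
```

The new token attends to all 7 positions, but the first 6 keys and values are reused unchanged, which is why the seq_len dimension of each cached tensor grows by exactly one per decoding step.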

Comparison with model.generate()

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("/home/caixingyi/model/vicuna-68m")

tokenizer = AutoTokenizer.from_pretrained("/home/caixingyi/model/vicuna-68m")

model.eval()

# input_ids = tokenizer.encode("Hello, my name is", return_tensors="pt")

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model.to(device)

input_text = "Once upon a time"

input_ids = tokenizer.encode(input_text, return_tensors="pt").to(device)

max_length = 30

greedy_output = model.generate(

input_ids,

max_length=max_length,

num_return_sequences=1,

do_sample=False

)

greedy_text = tokenizer.decode(greedy_output[0], skip_special_tokens=True)

print(greedy_text)

generated_tokens = input_ids

past_key_values = None

steps = max_length - input_ids.shape[1]

with torch.no_grad():

    for step in range(steps):

        if step == 0:

            # Prefill: run the whole prompt once to build the cache.

            outputs = model(generated_tokens, use_cache=True)

        else:

            # Decode: feed only the newest token and reuse the cached keys/values.

            outputs = model(next_token, use_cache=True, past_key_values=past_key_values)

        past_key_values = outputs.past_key_values

        next_token_logits = outputs.logits[:, -1, :]

        next_token = torch.argmax(next_token_logits, dim=-1).unsqueeze(-1)

        # Stop at the end-of-sequence token (eos_token_id is 2 in the config above).

        if next_token.item() == model.config.eos_token_id:

            break

        generated_tokens = torch.cat((generated_tokens, next_token), dim=1)

print(tokenizer.decode(generated_tokens[0], skip_special_tokens=True))

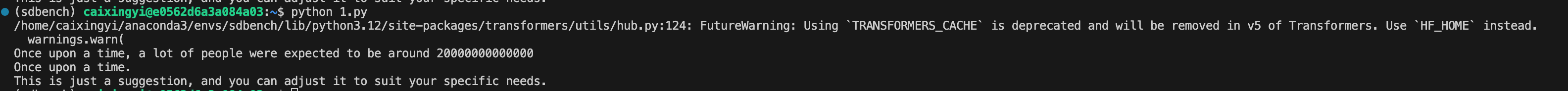

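As the figure shows, the two generation results are not identical. This may be because transformers applies extra processing inside generate(), such as a repetition penalty or temperature scaling. (I still need to read the transformers implementation to confirm the cause and make the two outputs match; to be filled in later.)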
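The two suspects named above behave differently under greedy decoding, which the toy example below illustrates with made-up logits. It is only a sketch: `apply_repetition_penalty` is a hypothetical helper that follows the usual rule (divide positive logits of already-seen tokens by the penalty, multiply negative ones), and whether generate() actually applied such a penalty in this run is not confirmed here.

```python
def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def apply_repetition_penalty(logits, seen_ids, penalty):
    """Make already-generated tokens less likely (penalty > 1)."""
    out = list(logits)
    for i in set(seen_ids):
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


logits = [2.0, 1.8, -0.5, 0.3]  # made-up scores over a 4-token vocabulary
seen_ids = [0, 3]               # tokens already present in the sequence

# Temperature scaling divides every logit by the same positive number,
# so the ordering, and therefore the greedy choice, is unchanged.
scaled = [x / 0.7 for x in logits]
print(argmax(logits), argmax(scaled))  # 0 0

# A repetition penalty touches only some logits and can flip the argmax.
penalized = apply_repetition_penalty(logits, seen_ids, penalty=1.2)
print(argmax(penalized))  # 1
```

So temperature alone cannot explain a mismatch when do_sample=False, while a repetition penalty (or any other per-token logits processing) could. Printing model.generation_config before calling generate() is one way to see which settings are in effect. Another difference worth ruling out is the attention mask: generate() is called here without one and has to infer it, whereas the manual loop attends to every token.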
