MASK_RCNN Walkthrough 4: Overall Forward-Pass Flow of the Network (A)

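We return to the forward function of class GeneralizedRCNN(nn.Module). At this point we already have features, the output of the forward pass through the resnet+fpn network: a tuple containing 5 tensors.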

1) The call proposals, proposal_losses = self.rpn(images, features, targets) starts the next stage, the RPN network.

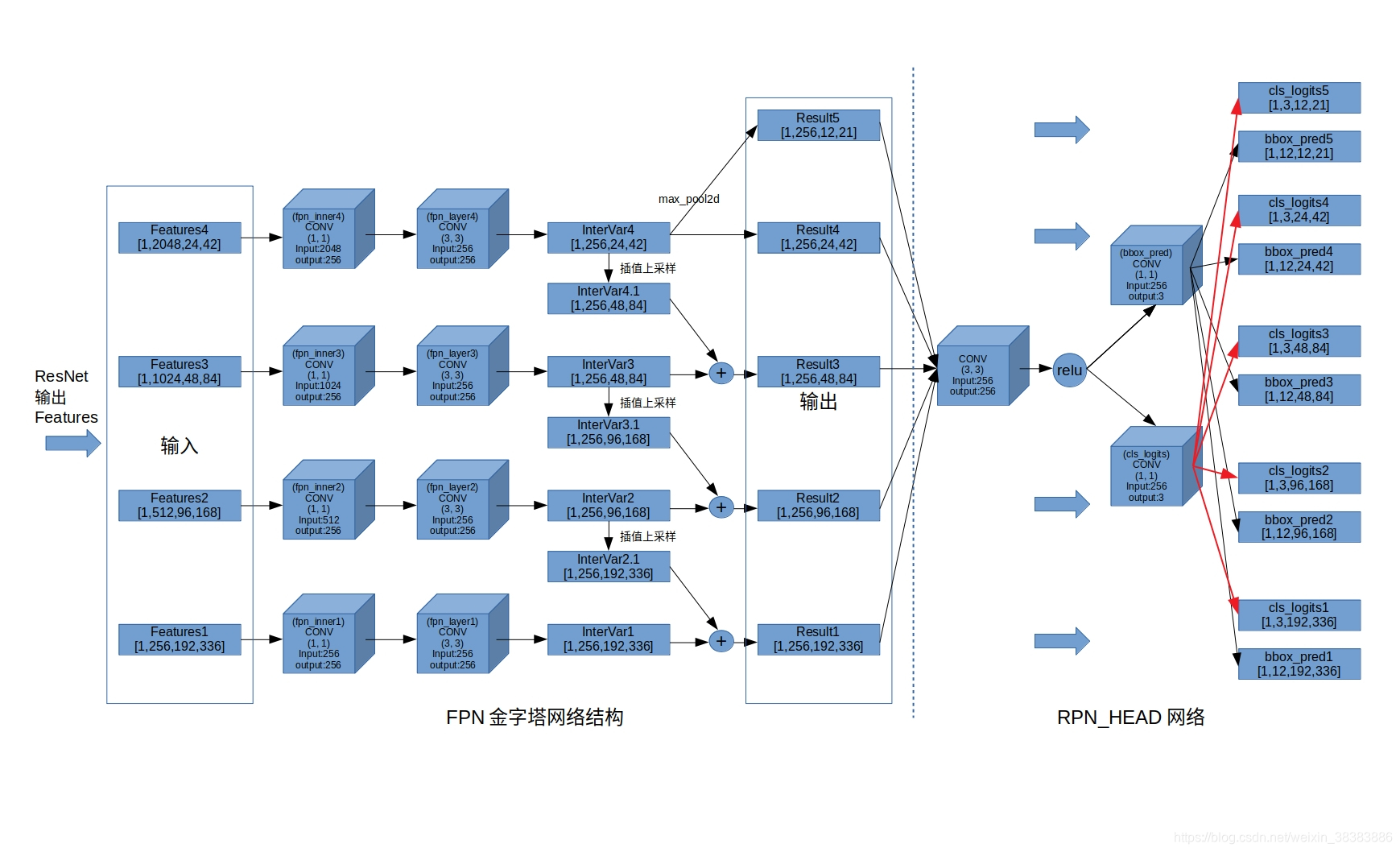

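2) We enter the forward function of class RPNModule(torch.nn.Module). Through objectness, rpn_box_regression = self.head(features) we enter the forward function of class RPNHead(nn.Module), which builds the RPN head structure shown on the right of the figure below and produces a series of tensors: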

from IPython.display import Image

Image(filename = 'MaskRcnn的FPN_RPNHEAD.jpg', width=1200, height=800)

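Note: the output of the RPN head is the set of predicted values for every anchor at every location of every features level. Because 3 anchors are configured per location for both training and testing, the second dimension of each cls_logits output is 3, and the second dimension of each bbox regression output is 12 (3 anchors × 4 box values).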
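The channel counts in the note above can be checked with a small stand-in for the RPN head. This is a minimal sketch, not the maskrcnn-benchmark source: the class name `TinyRPNHead`, the 256 input channels, and the feature-map sizes are assumptions chosen for illustration; only the 3 anchors per location come from the text.

```python
import torch
from torch import nn
import torch.nn.functional as F


class TinyRPNHead(nn.Module):
    """Illustrative RPN head: a shared 3x3 conv followed by two 1x1 convs."""

    def __init__(self, in_channels=256, num_anchors=3):
        super().__init__()
        self.conv = nn.Conv2d(in_channels, in_channels, kernel_size=3, padding=1)
        # One objectness logit per anchor at every spatial location.
        self.cls_logits = nn.Conv2d(in_channels, num_anchors, kernel_size=1)
        # Four box deltas per anchor at every spatial location.
        self.bbox_pred = nn.Conv2d(in_channels, num_anchors * 4, kernel_size=1)

    def forward(self, features):
        objectness, box_regression = [], []
        for feature in features:  # one tensor per FPN level
            t = F.relu(self.conv(feature))
            objectness.append(self.cls_logits(t))
            box_regression.append(self.bbox_pred(t))
        return objectness, box_regression


# Five fake FPN levels; the spatial sizes are assumed, not taken from the post.
sizes = [(32, 48), (16, 24), (8, 12), (4, 6), (2, 3)]
features = tuple(torch.randn(1, 256, h, w) for h, w in sizes)

head = TinyRPNHead()
with torch.no_grad():
    objectness, box_regression = head(features)

rpn_shapes = [(tuple(o.shape), tuple(b.shape))
              for o, b in zip(objectness, box_regression)]
for cls_shape, box_shape in rpn_shapes:
    print(cls_shape, box_shape)
```

Each level keeps its own spatial size; only the channel dimension is fixed by the anchor count, which is why the second dimension is 3 for the classification output and 12 for the regression output.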

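Back in the forward function of class GeneralizedRCNN(nn.Module), the call proposals, proposal_losses = self.rpn(images, features, targets) produces the 1000 highest-scoring proposals (candidate boxes). The objectness scores (probabilities) of these 1000 candidate boxes are listed below.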

{'objectness': tensor([1.0000, 0.9999, 0.9999, 0.9999, 0.9998, 0.9995, 0.9986, 0.9951, 0.9904,

0.9810, 0.9315, 0.9168, 0.9047, 0.8911, 0.8899, 0.7852, 0.7464, 0.7432,

0.7219, 0.6798, 0.6653, 0.6551, 0.6016, 0.5618, 0.5523, 0.5485, 0.5467,

0.4886, 0.4826, 0.4818, 0.4782, 0.4766, 0.4417, 0.4384, 0.4213, 0.4138,

0.4054, 0.4047, 0.4012, 0.3945, 0.3741, 0.3479, 0.3470, 0.3448, 0.3299,

0.3248, 0.3220, 0.3209, 0.3165, 0.3148, 0.3105, 0.3065, 0.2898, 0.2776,

0.2756, 0.2744, 0.2731, 0.2702, 0.2649, 0.2511, 0.2505, 0.2443, 0.2370,

0.2269, 0.2257, 0.2200, 0.2101, 0.2055, 0.2049, 0.2035, 0.1985, 0.1982,

0.1928, 0.1858, 0.1792, 0.1775, 0.1755, 0.1732, 0.1707, 0.1649, 0.1558,

0.1523, 0.1483, 0.1342, 0.1338, 0.1299, 0.1295, 0.1256, 0.1234, 0.1226,

0.1219, 0.1195, 0.1187, 0.1142, 0.1105, 0.1085, 0.1063, 0.1028, 0.1024,

0.1022, 0.1013, 0.1002, 0.0977, 0.0977, 0.0940, 0.0924, 0.0908, 0.0897,

0.0882, 0.0852, 0.0848, 0.0813, 0.0812, 0.0806, 0.0783, 0.0779, 0.0777,

0.0760, 0.0759, 0.0758, 0.0756, 0.0752, 0.0752, 0.0738, 0.0733, 0.0728,

0.0726, 0.0710, 0.0697, 0.0696, 0.0690, 0.0680, 0.0672, 0.0669, 0.0665,

0.0664, 0.0661, 0.0655, 0.0632, 0.0624, 0.0609, 0.0609, 0.0603, 0.0601,

0.0598, 0.0578, 0.0575, 0.0570, 0.0570, 0.0564, 0.0545, 0.0543, 0.0543,

0.0541, 0.0533, 0.0530, 0.0529, 0.0516, 0.0516, 0.0514, 0.0512, 0.0506,

0.0504, 0.0502, 0.0499, 0.0491, 0.0490, 0.0489, 0.0487, 0.0480, 0.0478,

0.0475, 0.0471, 0.0460, 0.0437, 0.0426, 0.0424, 0.0419, 0.0418, 0.0415,

0.0410, 0.0406, 0.0402, 0.0401, 0.0400, 0.0394, 0.0393, 0.0387, 0.0381,

0.0381, 0.0378, 0.0370, 0.0365, 0.0364, 0.0363, 0.0362, 0.0362, 0.0361,

0.0359, 0.0344, 0.0339, 0.0338, 0.0338, 0.0331, 0.0327, 0.0318, 0.0318,

0.0311, 0.0310, 0.0305, 0.0305, 0.0301, 0.0297, 0.0294, 0.0290, 0.0288,

0.0288, 0.0286, 0.0283, 0.0281, 0.0279, 0.0279, 0.0272, 0.0272, 0.0270,

0.0269, 0.0268, 0.0268, 0.0267, 0.0266, 0.0263, 0.0258, 0.0256, 0.0254,

0.0252, 0.0251, 0.0251, 0.0249, 0.0247, 0.0244, 0.0241, 0.0237, 0.0237,

0.0232, 0.0232, 0.0230, 0.0225, 0.0224, 0.0223, 0.0222, 0.0220, 0.0220,

0.0219, 0.0219, 0.0218, 0.0218, 0.0214, 0.0214, 0.0213, 0.0208, 0.0207,

0.0205, 0.0205, 0.0202, 0.0199, 0.0198, 0.0195, 0.0195, 0.0195, 0.0195,

0.0193, 0.0191, 0.0191, 0.0189, 0.0189, 0.0189, 0.0187, 0.0185, 0.0185,

0.0184, 0.0183, 0.0181, 0.0180, 0.0179, 0.0177, 0.0177, 0.0175, 0.0175,

0.0174, 0.0174, 0.0173, 0.0170, 0.0169, 0.0169, 0.0169, 0.0169, 0.0169,

0.0168, 0.0167, 0.0167, 0.0165, 0.0163, 0.0163, 0.0162, 0.0161, 0.0157,

0.0155, 0.0153, 0.0153, 0.0151, 0.0151, 0.0151, 0.0151, 0.0148, 0.0147,

0.0146, 0.0145, 0.0145, 0.0143, 0.0143, 0.0140, 0.0139, 0.0138, 0.0138,

0.0138, 0.0137, 0.0136, 0.0134, 0.0134, 0.0132, 0.0132, 0.0132, 0.0131,

0.0131, 0.0131, 0.0131, 0.0131, 0.0130, 0.0130, 0.0129, 0.0127, 0.0127,

0.0127, 0.0125, 0.0124, 0.0123, 0.0123, 0.0123, 0.0122, 0.0122, 0.0122,

0.0121, 0.0121, 0.0121, 0.0121, 0.0120, 0.0120, 0.0119, 0.0119, 0.0118,

0.0118, 0.0118, 0.0118, 0.0117, 0.0117, 0.0116, 0.0115, 0.0115, 0.0115,

0.0114, 0.0113, 0.0113, 0.0112, 0.0112, 0.0111, 0.0111, 0.0110, 0.0109,

0.0109, 0.0109, 0.0108, 0.0107, 0.0107, 0.0106, 0.0105, 0.0104, 0.0104,

0.0104, 0.0104, 0.0104, 0.0103, 0.0103, 0.0102, 0.0102, 0.0102, 0.0102,

0.0102, 0.0101, 0.0101, 0.0101, 0.0101, 0.0100, 0.0100, 0.0100, 0.0100,

0.0099, 0.0097, 0.0097, 0.0097, 0.0095, 0.0095, 0.0095, 0.0093, 0.0093,

0.0093, 0.0093, 0.0092, 0.0091, 0.0091, 0.0091, 0.0090, 0.0088, 0.0087,

0.0087, 0.0086, 0.0086, 0.0086, 0.0086, 0.0086, 0.0086, 0.0086, 0.0085,

0.0085, 0.0085, 0.0085, 0.0084, 0.0084, 0.0084, 0.0083, 0.0083, 0.0083,

0.0083, 0.0082, 0.0082, 0.0082, 0.0082, 0.0081, 0.0081, 0.0080, 0.0080,

0.0079, 0.0079, 0.0078, 0.0078, 0.0078, 0.0078, 0.0077, 0.0077, 0.0077,

0.0076, 0.0075, 0.0075, 0.0075, 0.0075, 0.0074, 0.0074, 0.0074, 0.0074,

0.0073, 0.0073, 0.0073, 0.0073, 0.0072, 0.0072, 0.0072, 0.0072, 0.0071,

0.0071, 0.0071, 0.0071, 0.0070, 0.0070, 0.0070, 0.0070, 0.0070, 0.0070,

0.0069, 0.0068, 0.0068, 0.0067, 0.0066, 0.0066, 0.0066, 0.0065, 0.0065,

0.0065, 0.0065, 0.0065, 0.0065, 0.0065, 0.0065, 0.0064, 0.0064, 0.0063,

0.0063, 0.0063, 0.0062, 0.0062, 0.0062, 0.0062, 0.0061, 0.0061, 0.0061,

0.0061, 0.0061, 0.0060, 0.0060, 0.0059, 0.0059, 0.0059, 0.0059, 0.0059,

0.0058, 0.0058, 0.0058, 0.0057, 0.0057, 0.0057, 0.0057, 0.0057, 0.0057,

0.0057, 0.0056, 0.0056, 0.0056, 0.0056, 0.0056, 0.0056, 0.0055, 0.0055,

0.0055, 0.0055, 0.0055, 0.0055, 0.0055, 0.0054, 0.0054, 0.0054, 0.0054,

0.0054, 0.0054, 0.0053, 0.0053, 0.0052, 0.0052, 0.0052, 0.0052, 0.0052,

0.0051, 0.0051, 0.0050, 0.0050, 0.0049, 0.0049, 0.0049, 0.0049, 0.0049,

0.0049, 0.0048, 0.0048, 0.0048, 0.0048, 0.0048, 0.0047, 0.0047, 0.0047,

0.0047, 0.0047, 0.0047, 0.0046, 0.0046, 0.0046, 0.0046, 0.0045, 0.0045,

0.0045, 0.0044, 0.0044, 0.0044, 0.0044, 0.0043, 0.0043, 0.0043, 0.0043,

0.0043, 0.0043, 0.0042, 0.0042, 0.0042, 0.0042, 0.0042, 0.0042, 0.0041,

0.0041, 0.0041, 0.0041, 0.0041, 0.0041, 0.0041, 0.0041, 0.0040, 0.0040,

0.0040, 0.0040, 0.0040, 0.0040, 0.0040, 0.0040, 0.0039, 0.0039, 0.0039,

0.0039, 0.0039, 0.0039, 0.0039, 0.0039, 0.0038, 0.0038, 0.0038, 0.0038,

0.0038, 0.0038, 0.0037, 0.0037, 0.0037, 0.0037, 0.0037, 0.0037, 0.0037,

0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0036,

0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0036, 0.0035, 0.0035,

0.0035, 0.0035, 0.0035, 0.0035, 0.0035, 0.0035, 0.0034, 0.0034, 0.0034,

0.0034, 0.0034, 0.0034, 0.0034, 0.0033, 0.0033, 0.0033, 0.0033, 0.0033,

0.0033, 0.0032, 0.0032, 0.0032, 0.0032, 0.0032, 0.0032, 0.0032, 0.0032,

0.0031, 0.0031, 0.0031, 0.0031, 0.0031, 0.0031, 0.0031, 0.0031, 0.0030,

0.0030, 0.0030, 0.0030, 0.0030, 0.0030, 0.0030, 0.0030, 0.0030, 0.0029,

0.0029, 0.0029, 0.0029, 0.0029, 0.0029, 0.0029, 0.0029, 0.0029, 0.0029,

0.0028, 0.0028, 0.0028, 0.0028, 0.0028, 0.0028, 0.0028, 0.0028, 0.0028,

0.0028, 0.0028, 0.0028, 0.0028, 0.0027, 0.0027, 0.0027, 0.0027, 0.0026,

0.0026, 0.0026, 0.0026, 0.0026, 0.0026, 0.0026, 0.0026, 0.0026, 0.0025,

0.0025, 0.0025, 0.0025, 0.0025, 0.0025, 0.0025, 0.0025, 0.0025, 0.0025,

0.0025, 0.0024, 0.0024, 0.0024, 0.0024, 0.0024, 0.0024, 0.0024, 0.0023,

0.0023, 0.0023, 0.0023, 0.0023, 0.0023, 0.0023, 0.0023, 0.0022, 0.0022,

0.0022, 0.0022, 0.0022, 0.0022, 0.0022, 0.0022, 0.0022, 0.0022, 0.0022,

0.0022, 0.0022, 0.0022, 0.0022, 0.0022, 0.0021, 0.0021, 0.0021, 0.0021,

0.0021, 0.0021, 0.0021, 0.0021, 0.0021, 0.0021, 0.0021, 0.0021, 0.0021,

0.0021, 0.0021, 0.0021, 0.0021, 0.0020, 0.0020, 0.0020, 0.0020, 0.0020,

0.0020, 0.0020, 0.0020, 0.0020, 0.0020, 0.0020, 0.0020, 0.0020, 0.0020,

0.0020, 0.0020, 0.0020, 0.0020, 0.0019, 0.0019, 0.0019, 0.0019, 0.0019,

0.0019, 0.0019, 0.0019, 0.0019, 0.0019, 0.0019, 0.0019, 0.0018, 0.0018,

0.0018, 0.0018, 0.0018, 0.0018, 0.0018, 0.0018, 0.0018, 0.0018, 0.0018,

0.0018, 0.0018, 0.0018, 0.0018, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017,

0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017,

0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0017, 0.0016, 0.0016,

0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016,

0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016,

0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0016, 0.0015,

0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015,

0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0014, 0.0014,

0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014,

0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014, 0.0014,

0.0014, 0.0014, 0.0014, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013,

0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013,

0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0013, 0.0012,

0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012,

0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012, 0.0012,

0.0012, 0.0012, 0.0012, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011,

0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011,

0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011, 0.0011,

0.0011], device='cuda:0')}
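The sorted scores above are what one would expect from a "keep the best N" step. The following is a simplified sketch of that idea only: the helper name `select_top_proposals` and the toy inputs are hypothetical, and the real RPN post-processing in the library does more than this (for example, it also applies NMS), so this should not be read as the library's implementation.

```python
import math


def select_top_proposals(logits, boxes, top_n):
    """Turn objectness logits into probabilities and keep the top_n boxes."""
    # Sigmoid maps each raw logit to a probability in (0, 1).
    probs = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    # Indices sorted by descending probability, truncated to top_n.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_n]
    return [boxes[i] for i in order], [probs[i] for i in order]


# Toy inputs (hypothetical): four anchors with raw logits and boxes.
logits = [-2.0, 3.0, 0.0, 1.5]
boxes = [(0, 0, 10, 10), (5, 5, 20, 20), (1, 1, 4, 4), (8, 8, 30, 30)]

top_boxes, top_scores = select_top_proposals(logits, boxes, top_n=2)
print(top_boxes)
print([round(s, 4) for s in top_scores])
```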

Next we enter x, result, detector_losses = self.roi_heads(features, proposals, targets), which takes us into the forward function of class CombinedROIHeads(torch.nn.ModuleDict) and from there into the forward function of class ROIBoxHead(torch.nn.Module).

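We then enter x = self.feature_extractor(features, proposals), which extracts further features from the regions of the feature maps that correspond to the refined predicted boxes. The official comment reads: "extract features that will be fed to the final classifier, The feature_extractor generally corresponds to the pooler + heads". This leads into the forward function of class FPN2MLPFeatureExtractor(nn.Module) for the actual extraction.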

1) The call x = self.pooler(x, proposals) applies the RoIAlign mechanism. For details on RoIAlign, see: https://blog.youkuaiyun.com/u013066730/article/details/84062027

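2) Step 1 pools the tensors from the different feature levels, together with the 1000 boxes, into a single tensor of shape torch.Size([1000, 256, 7, 7]), ready for the fully connected layers that follow.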

3) The calls x = F.relu(self.fc6(x)) and x = F.relu(self.fc7(x)) then produce the extracted features, a tensor of shape torch.Size([1000, 1024]).
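The shape flow in steps 2 and 3 can be reproduced with a small stand-in module. This is a sketch under stated assumptions: `TinyMLPFeatureExtractor` is a hypothetical name, the pooled input is random data standing in for the RoIAlign output, a batch of 8 replaces the 1000 proposals to keep it light, and the explicit flattening step before fc6 is included because a linear layer needs a 2-D input.

```python
import torch
from torch import nn
import torch.nn.functional as F


class TinyMLPFeatureExtractor(nn.Module):
    """Illustrative two-layer MLP applied to pooled RoI features."""

    def __init__(self, in_channels=256, resolution=7, hidden=1024):
        super().__init__()
        # 256 * 7 * 7 = 12544 inputs per RoI after flattening.
        self.fc6 = nn.Linear(in_channels * resolution * resolution, hidden)
        self.fc7 = nn.Linear(hidden, hidden)

    def forward(self, pooled):
        x = pooled.view(pooled.size(0), -1)  # [N, 256, 7, 7] -> [N, 12544]
        x = F.relu(self.fc6(x))
        x = F.relu(self.fc7(x))
        return x


# Random stand-in for the pooler output; 8 RoIs instead of 1000.
pooled = torch.randn(8, 256, 7, 7)

extractor = TinyMLPFeatureExtractor()
with torch.no_grad():
    box_features = extractor(pooled)

print(box_features.shape)
```

With 1000 RoIs in place of 8, the same module would yield the [1000, 1024] tensor described in step 3.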

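Next comes class_logits, box_regression = self.predictor(x), which performs the classification and regression. Here cls_score takes a 1024-dimensional input and outputs 11 dimensions, while bbox_pred takes a 1024-dimensional input and outputs 44 dimensions (11 × 4). The 11 is the number of object classes we configured.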

With class_logits, box_regression = self.predictor(x) we obtain the final classification and regression outputs for these 1000 candidate boxes.
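The two output sizes can be illustrated with a pair of linear layers. This is a minimal sketch, assuming the predictor is simply two parallel fully connected layers on the 1024-dimensional features; `TinyPredictor` is a hypothetical name and the input is random data.

```python
import torch
from torch import nn


class TinyPredictor(nn.Module):
    """Illustrative box predictor: class scores plus per-class box deltas."""

    def __init__(self, in_features=1024, num_classes=11):
        super().__init__()
        self.cls_score = nn.Linear(in_features, num_classes)
        # Four box deltas for each of the num_classes classes.
        self.bbox_pred = nn.Linear(in_features, num_classes * 4)

    def forward(self, x):
        return self.cls_score(x), self.bbox_pred(x)


# Random stand-in for the [1000, 1024] features from the extractor.
x = torch.randn(1000, 1024)

predictor = TinyPredictor()
with torch.no_grad():
    class_logits, box_regression = predictor(x)

print(class_logits.shape, box_regression.shape)
```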

We then enter result = self.post_processor((class_logits, box_regression), proposals). The official comment reads: "From a set of classification scores, box regression and proposals, computes the post-processed boxes, and applies NMS to obtain the final results." In short, it converts class_logits into probabilities with softmax, decodes box_regression into boxes in image coordinates with BoxCoder, and then applies NMS and some other filtering steps, leaving 3 final candidate objects:

Boxes: tensor([[1070.7845, 9.4443, 1258.5970, 416.1075],

[ 0.0000, 576.4607, 1332.0000, 746.1382],

[ 22.3919, 580.1149, 671.8248, 748.1027]], device='cuda:0')

Scores: 'scores': tensor([1.0000, 1.0000, 0.1078]
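The three post-processing ingredients named above (softmax, box decoding, NMS) can each be written in a few lines of plain Python. This is a simplified sketch and not the library's post-processor: the function names, the delta weights (10, 10, 5, 5), the 0.5 IoU threshold, and the toy inputs are all assumptions, and details such as pixel-offset conventions and per-class handling are left out.

```python
import math


def softmax(logits):
    """Convert a list of class logits into probabilities."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode(deltas, box, weights=(10.0, 10.0, 5.0, 5.0)):
    """Apply (dx, dy, dw, dh) deltas to an (x1, y1, x2, y2) box.

    The weights are assumed values for illustration.
    """
    w, h = box[2] - box[0], box[3] - box[1]
    cx, cy = box[0] + 0.5 * w, box[1] + 0.5 * h
    dx, dy, dw, dh = (d / wt for d, wt in zip(deltas, weights))
    new_cx, new_cy = cx + dx * w, cy + dy * h
    new_w, new_h = w * math.exp(dw), h * math.exp(dh)
    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep a box only if it does not overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Toy data (hypothetical), not the values from the run above.
probs = softmax([0.0, 4.0, 1.0])
decoded = decode((0.0, 0.0, 0.0, 0.0), (10.0, 20.0, 50.0, 80.0))
nms_boxes = [(0.0, 0.0, 100.0, 100.0),
             (5.0, 5.0, 105.0, 105.0),
             (200.0, 200.0, 300.0, 300.0)]
kept = nms(nms_boxes, [0.9, 0.8, 0.7])
print(probs, decoded, kept)
```

In the toy data the second box overlaps the first heavily and is suppressed, while the third box is far away and survives; zero deltas leave the decoded box unchanged.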

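To keep things simple we have temporarily disabled self.cfg.MODEL.MASK_ON, so at this point predictions = self.model(image_list) has finished the model's prediction and control returns to the main function, def compute_prediction(self, original_image):. The main function then uses a probability threshold of 0.7 and drops the last box.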
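The effect of the 0.7 threshold on the three results can be shown directly with the numbers printed above. This is a minimal sketch: the helper name `filter_by_score` is hypothetical and plain tuples and lists stand in for the tensors.

```python
def filter_by_score(boxes, scores, threshold=0.7):
    """Keep only the boxes whose score is above the threshold."""
    kept = [(b, s) for b, s in zip(boxes, scores) if s > threshold]
    return [b for b, _ in kept], [s for _, s in kept]


# The three boxes and scores reported by the post-processor.
boxes = [
    (1070.7845, 9.4443, 1258.5970, 416.1075),
    (0.0000, 576.4607, 1332.0000, 746.1382),
    (22.3919, 580.1149, 671.8248, 748.1027),
]
scores = [1.0000, 1.0000, 0.1078]

kept_boxes, kept_scores = filter_by_score(boxes, scores)
print(len(kept_boxes), kept_scores)
```

The third box, with a score of 0.1078, falls below the threshold, which matches the removal of the last box described above.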

For reference, here is the image used throughout testing, at a resolution of 1280x720:

Image(filename = '20191225_091233_rsize1280x720.jpg', width=1280, height=720)

The detection result is:

Image(filename = 'mypredictions.jpg', width=1280, height=720)

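This article walks through the forward pass of the MASK_RCNN network, starting from the RPN module, which produces objectness scores and bounding-box regression values, and looks at the structure of the RPN head and its role in object detection.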
