Get the original RetinaFace model from the following links:

mirrors / wzj5133329 / retinaface_caffe · GitCode

https://github.com/deepinsight/insightface/tree/master/RetinaFace

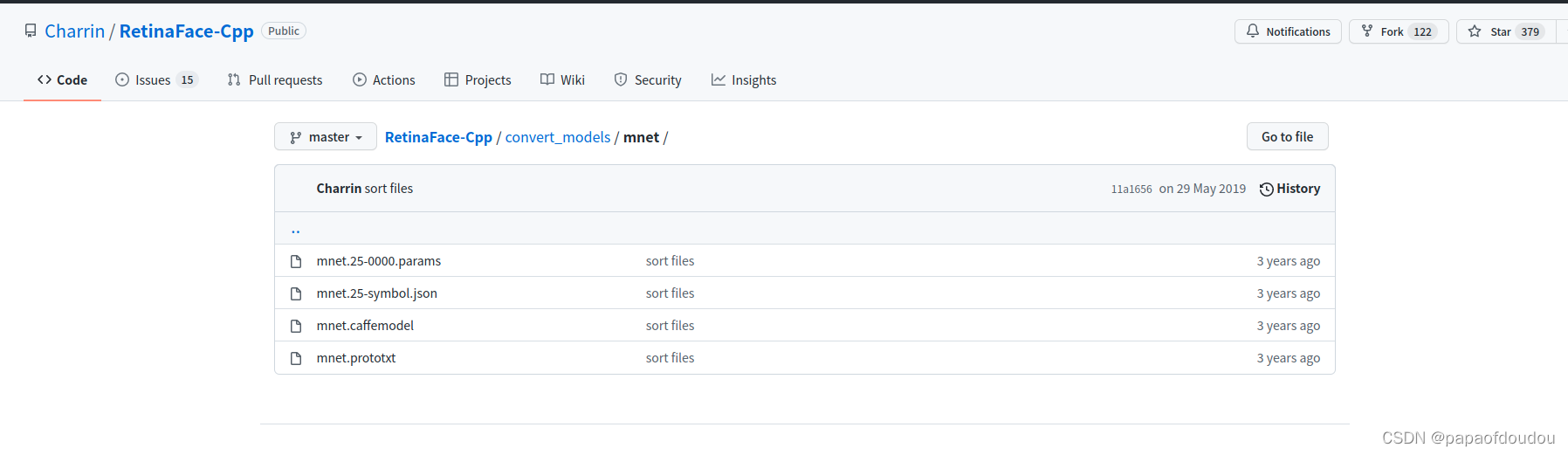

Caffe version of the RetinaFace (MXNet) model:

https://github.com/Charrin/RetinaFace-Cpp/tree/master/convert_models/mnet

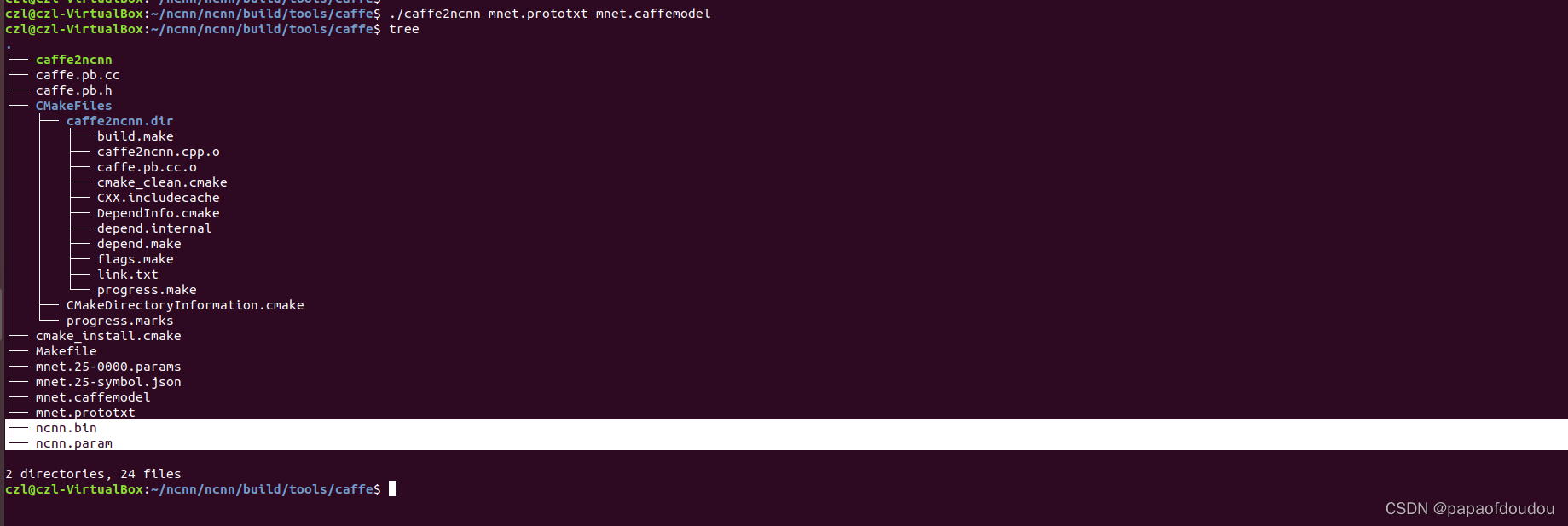

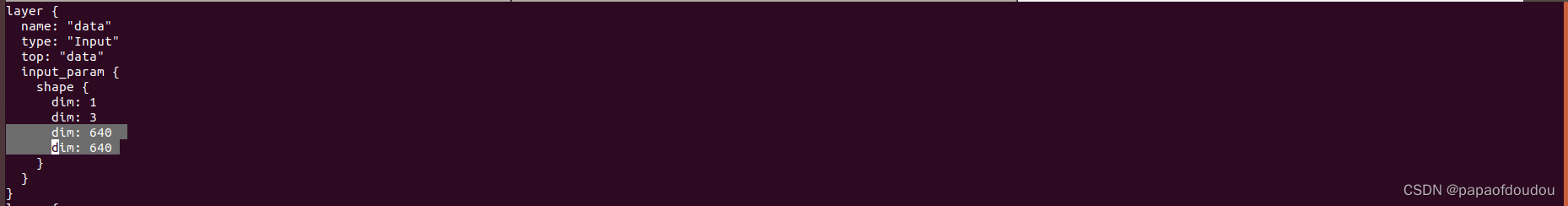

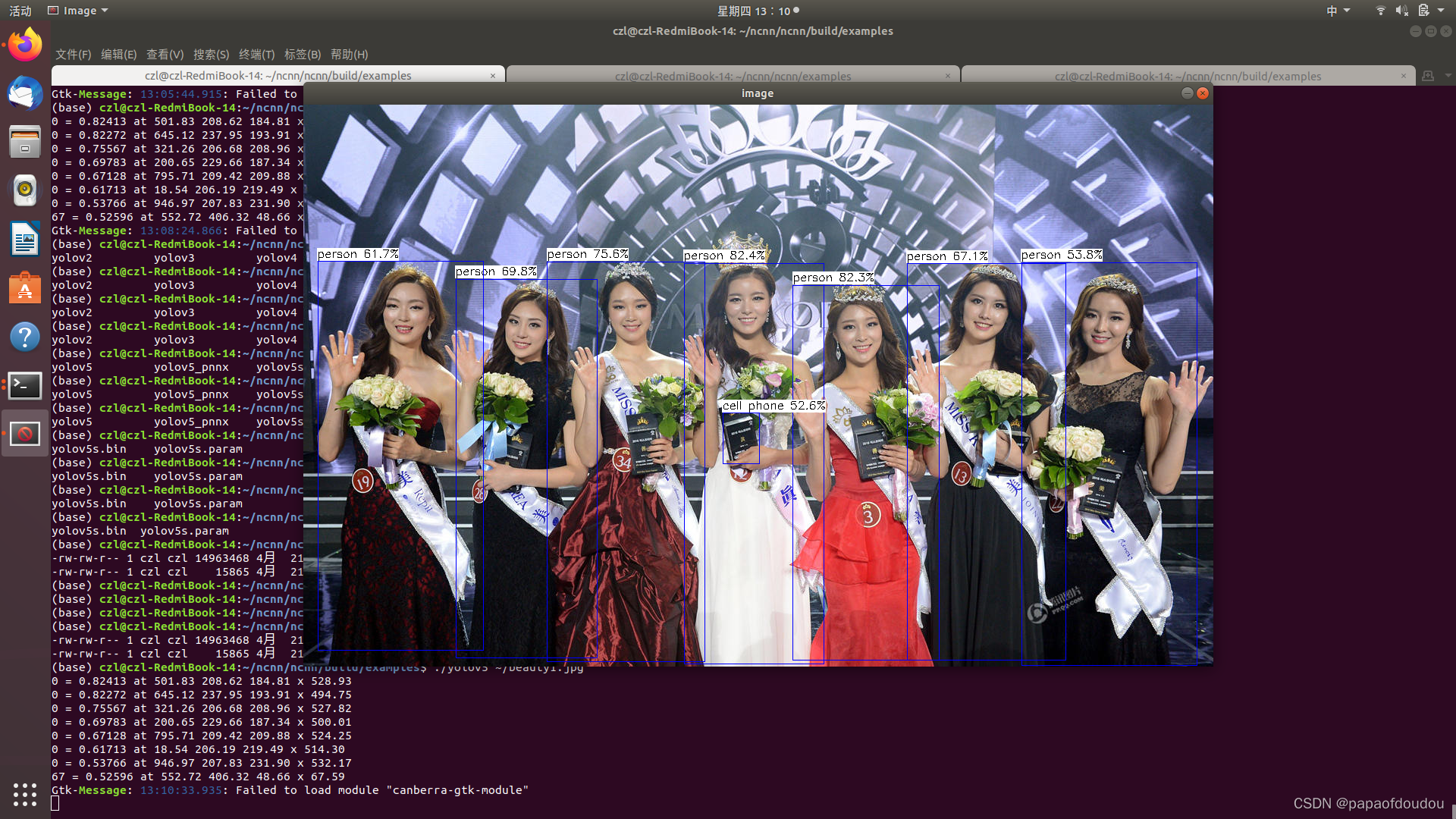

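Use caffe2ncnn to convert the model to NCNN format. Note that the protobuf development packages must be installed beforehand. Otherwise CMake warns that caffe2ncnn and onnx2ncnn cannot be built, because both model formats are described with protobuf.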

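```shell
sudo apt-get install libprotobuf-dev protobuf-compiler
```

The two `autoremove --purge` commands uninstall the same packages again if they are no longer needed:

```shell
sudo apt-get autoremove --purge protobuf-compiler
sudo apt-get autoremove --purge libprotobuf-dev
```

After the build succeeds, run `./caffe2ncnn mnet.prototxt mnet.caffemodel` to convert the model.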

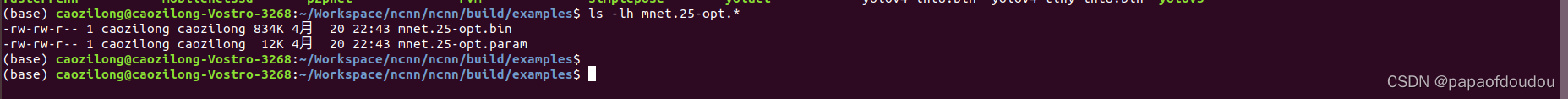

Then rename ncnn.bin and ncnn.param to the file names the application loads, and the model can be verified by running inference:

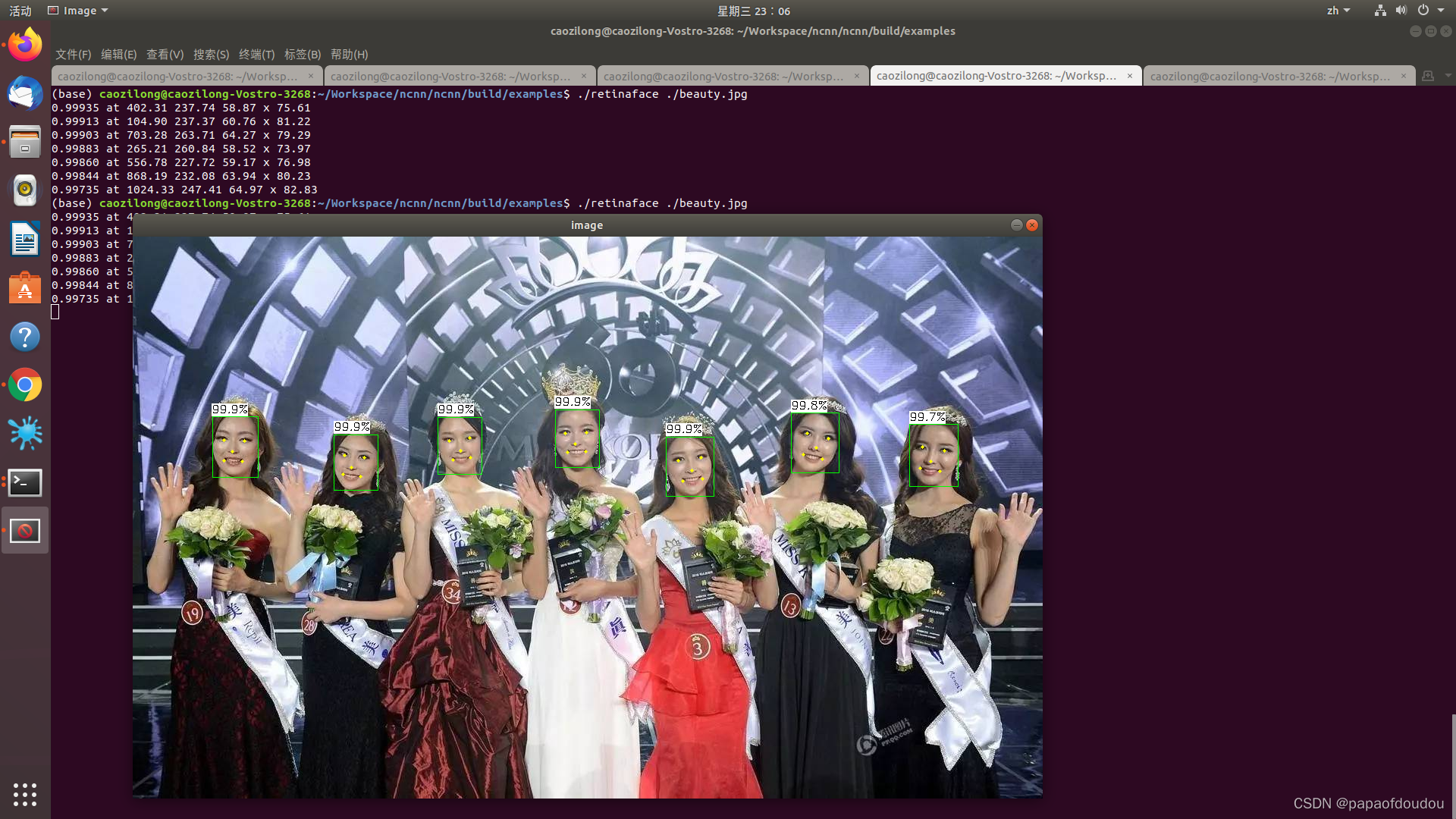

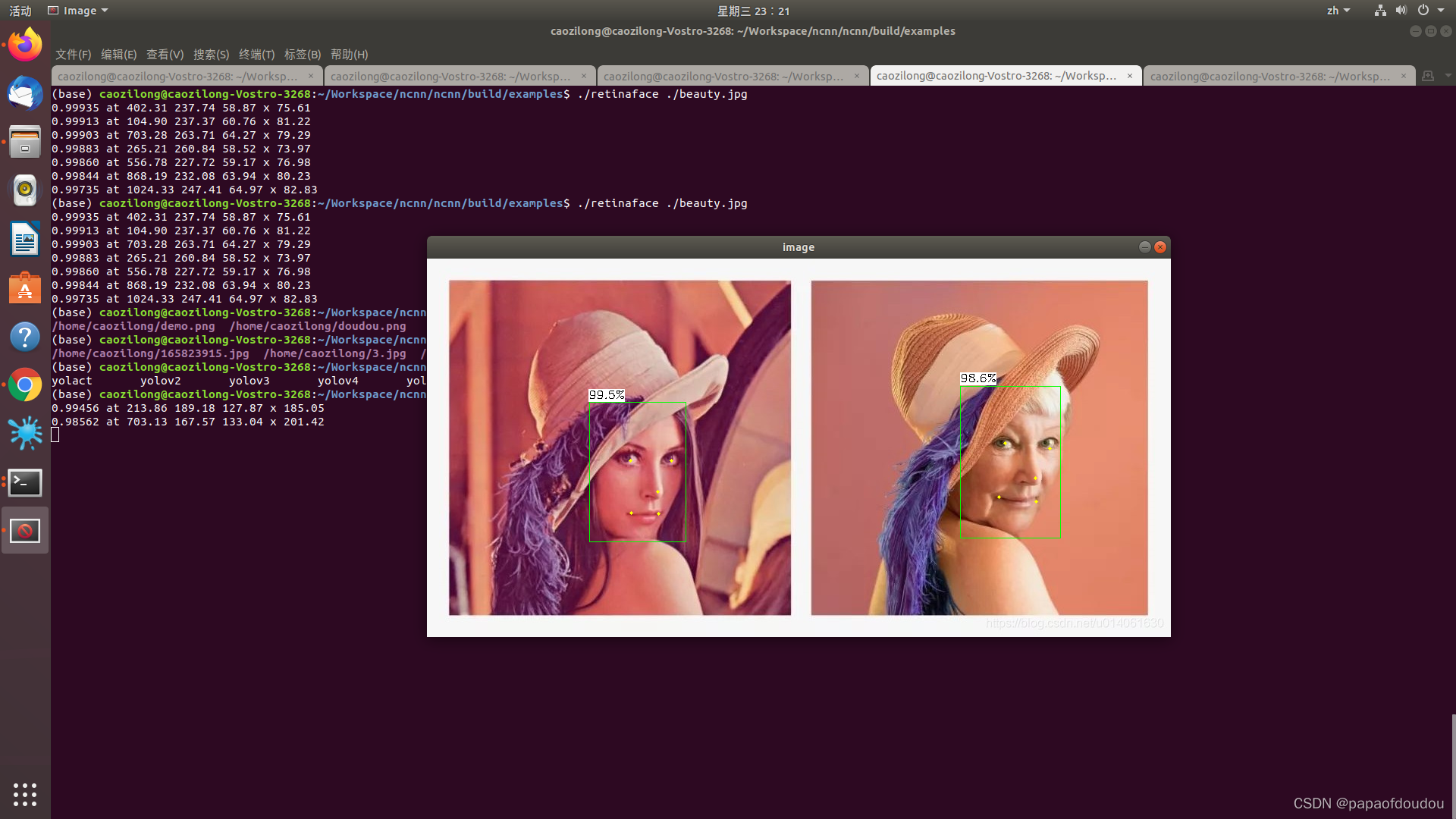

Run: `./retinaface ./beauty.jpg`

Auntie Lena:

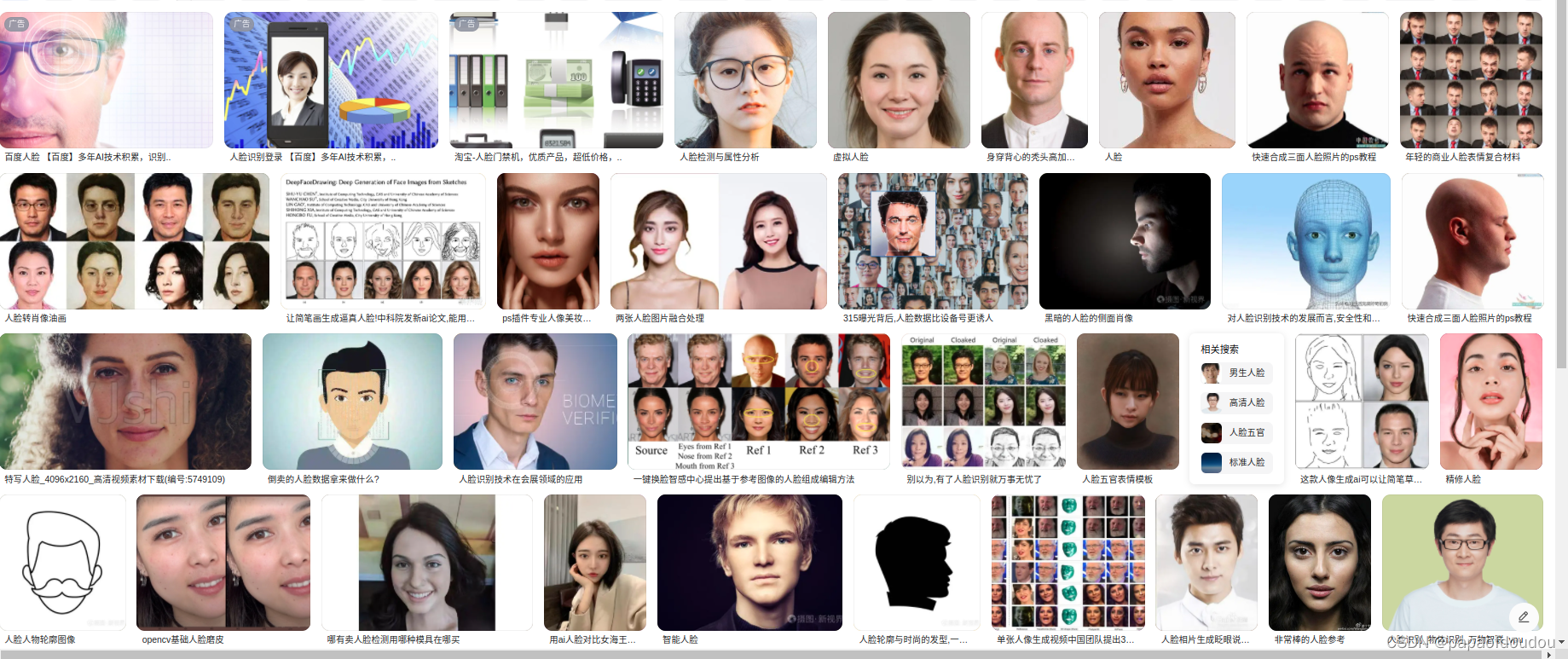

Result from Baidu face detection:

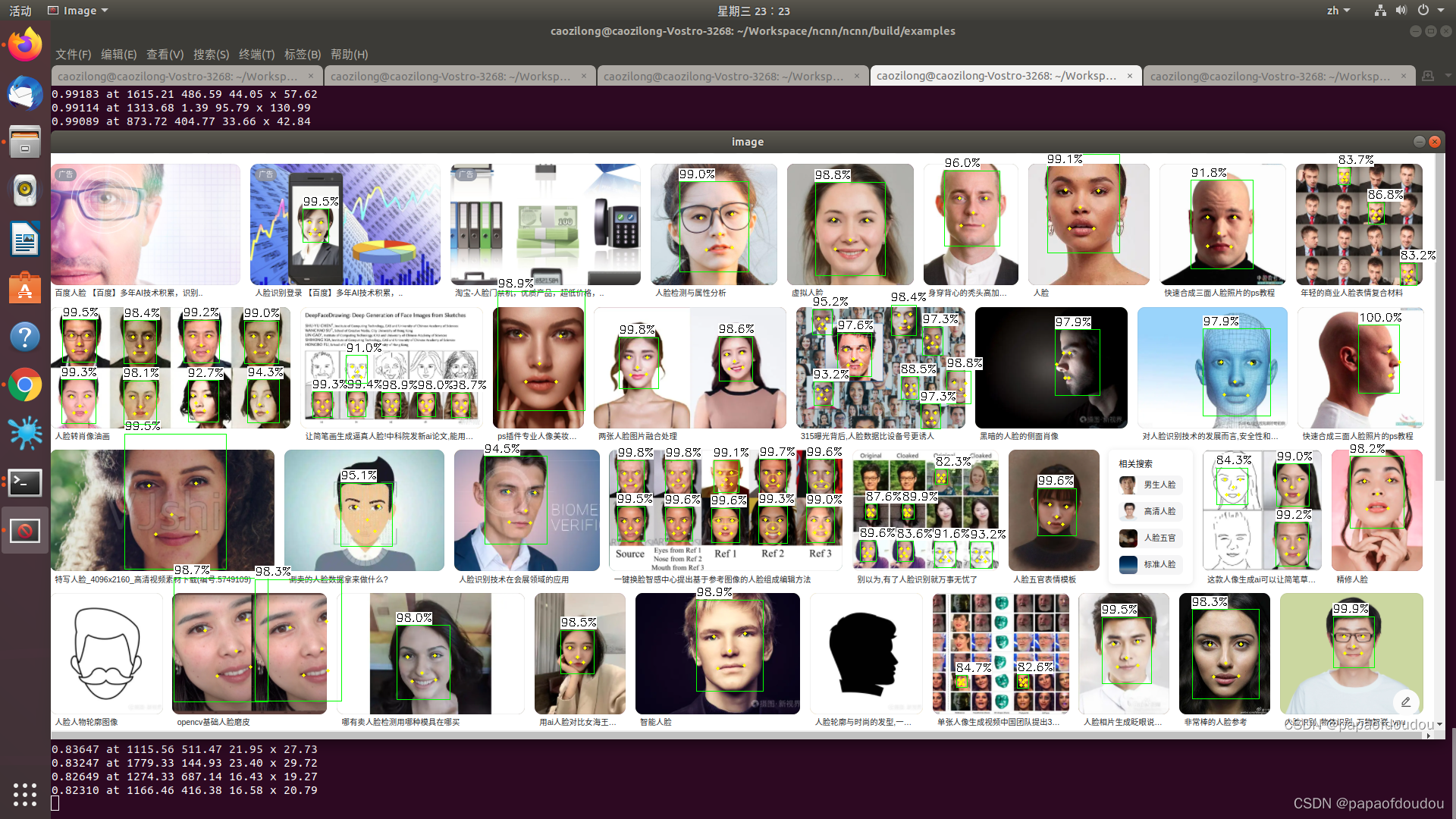

Checking the results:

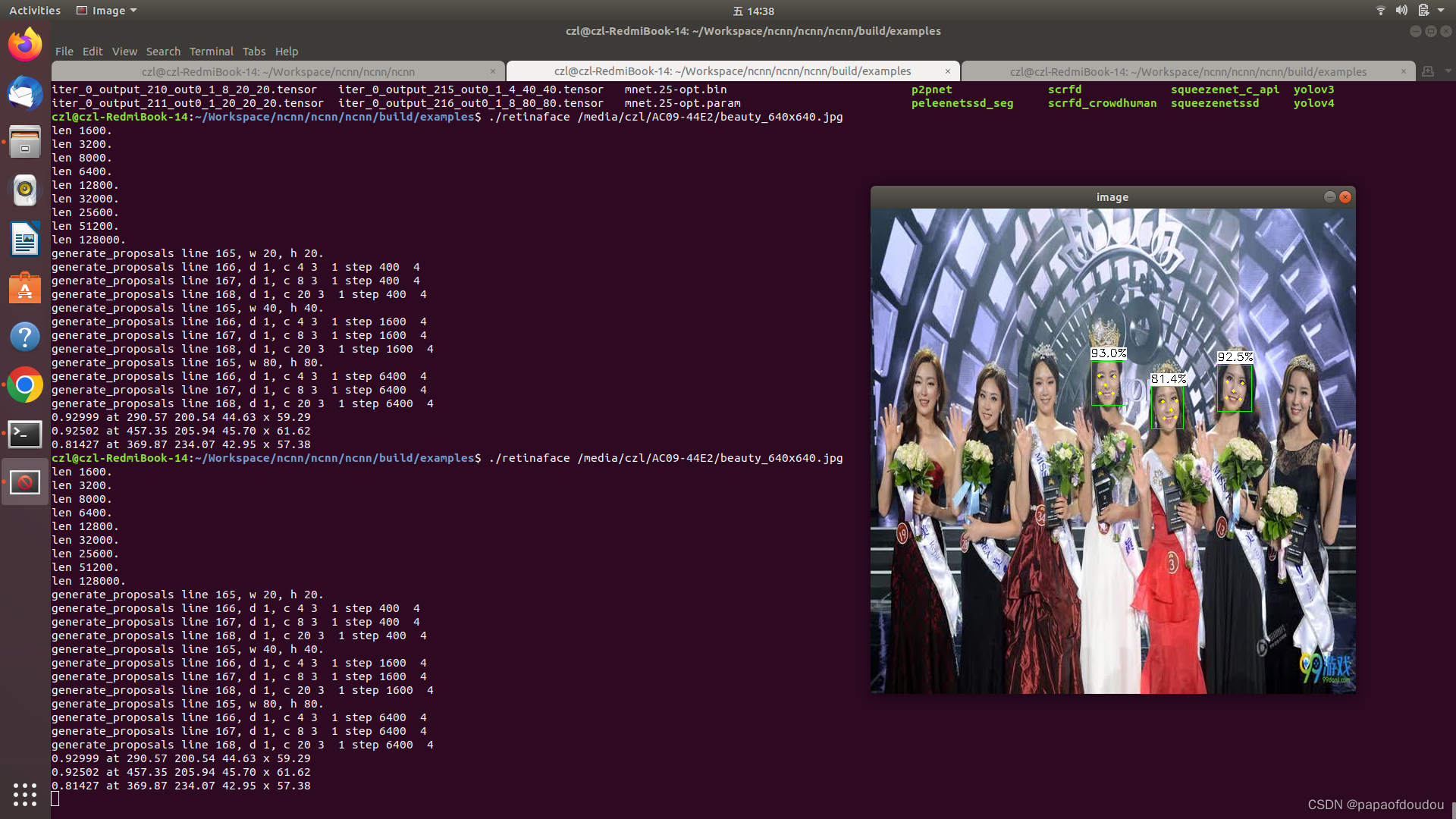

The tests above used the model that ships with NCNN. Next, verify the model I converted myself:

Scale the image to the size required by the network input before feeding it in:

The results are very good!

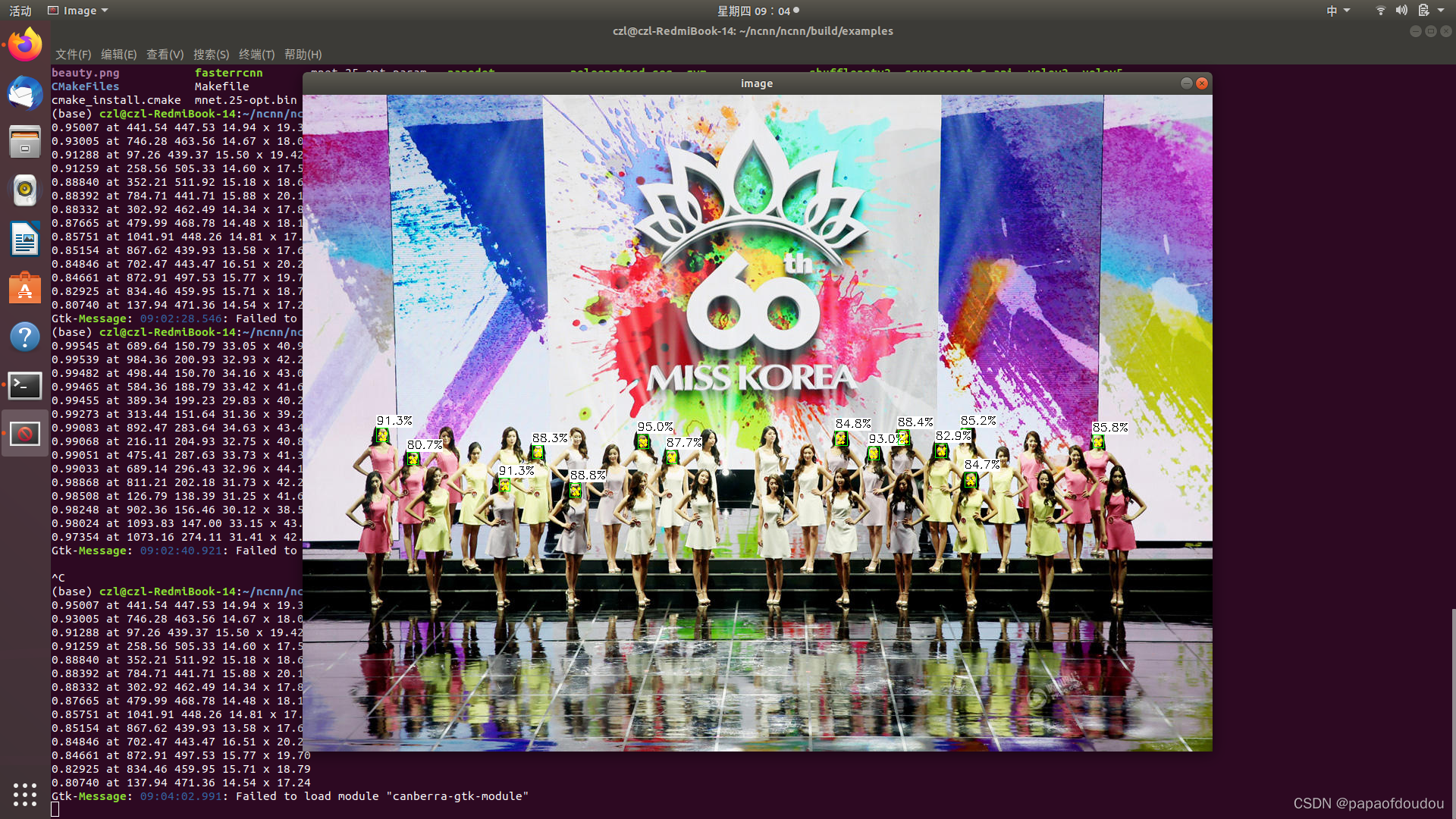

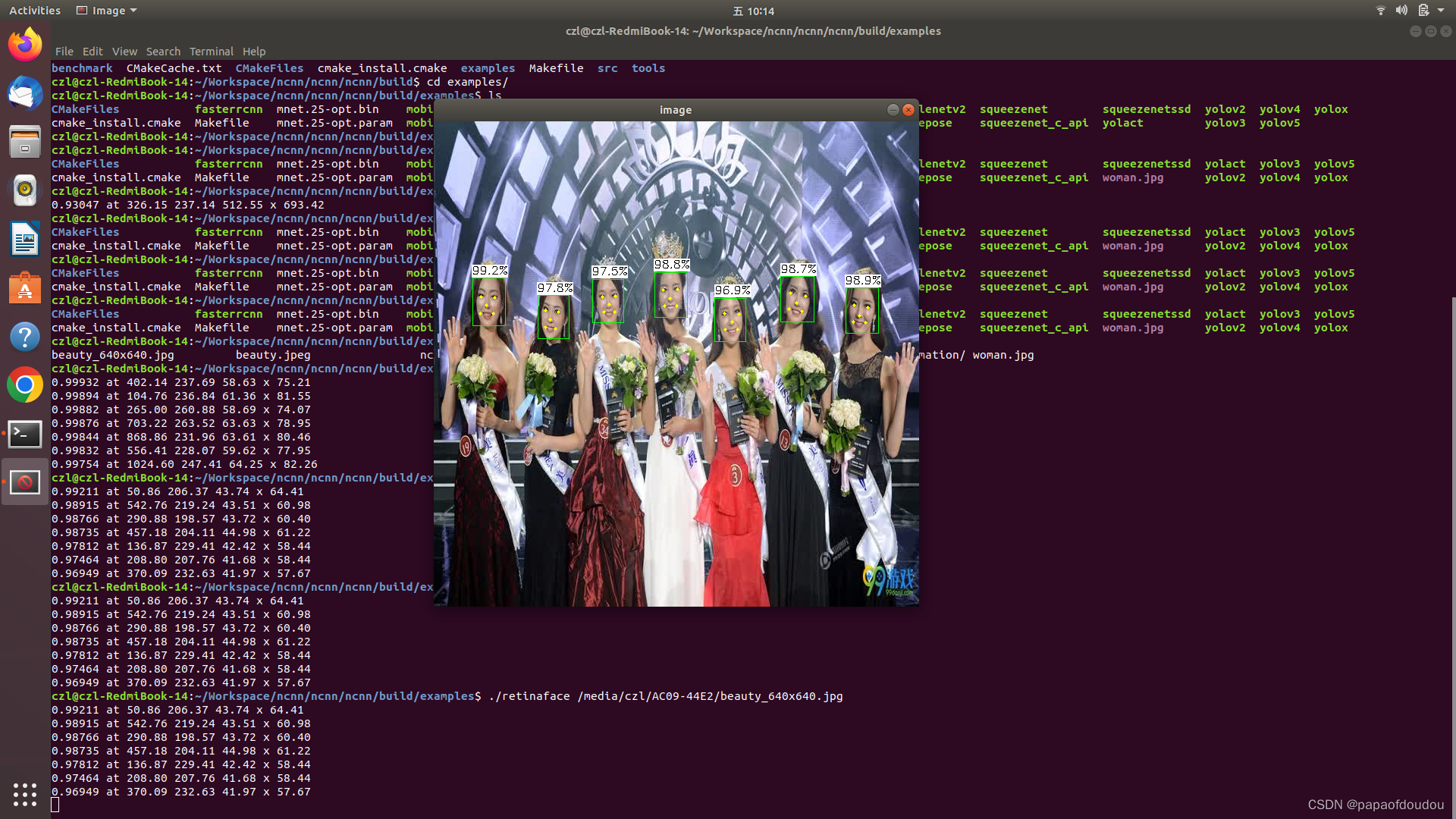
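The post does not say exactly how the image was scaled. The stride 32/16/8 feature maps of 20×20, 40×40 and 80×80 used in the post-processing code correspond to a 640×640 input, so here is a minimal sketch, assuming the image is resized to fit inside a 640×640 input with its aspect ratio preserved. It computes the scale factor and maps predicted coordinates back to the original image. `ScaledSize`, `fit_to_input` and `to_original` are hypothetical names, not part of NCNN.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper type: result of fitting an image into a square
// network input while keeping its aspect ratio.
struct ScaledSize
{
    float scale; // factor applied to both sides
    int w;       // scaled width in pixels
    int h;       // scaled height in pixels
};

// Scale an img_w x img_h image so that it fits inside a target x target input.
inline ScaledSize fit_to_input(int img_w, int img_h, int target)
{
    assert(img_w > 0 && img_h > 0 && target > 0);
    float scale = std::min((float)target / img_w, (float)target / img_h);
    ScaledSize s;
    s.scale = scale;
    s.w = (int)(img_w * scale + 0.5f); // round to nearest pixel
    s.h = (int)(img_h * scale + 0.5f);
    return s;
}

// Map a coordinate predicted on the scaled input back to the original image.
inline float to_original(float v, float scale)
{
    assert(scale > 0.f);
    return v / scale;
}
```

With NCNN, the resized input could then be produced with `ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, img_w, img_h, s.w, s.h)` and padded to 640×640 with `ncnn::copy_make_border`. Box and landmark coordinates are divided by `s.scale` afterwards.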

Below are results from the model files used by NCNN YOLOv5, to compare face detection with person (human-shape) object detection:

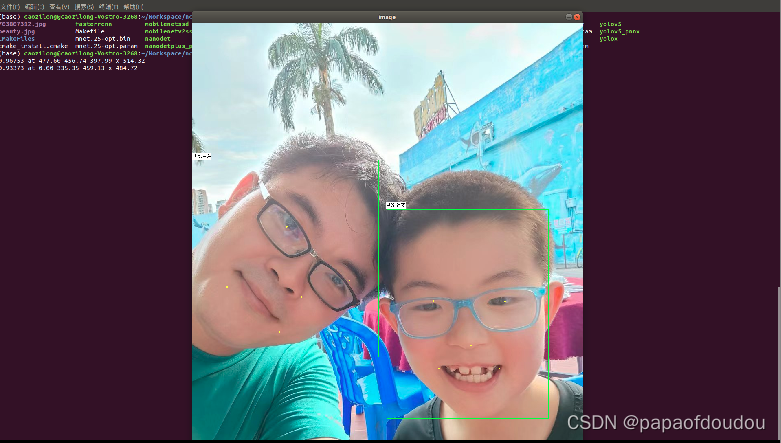

Young heartthrobs and seasoned veterans:

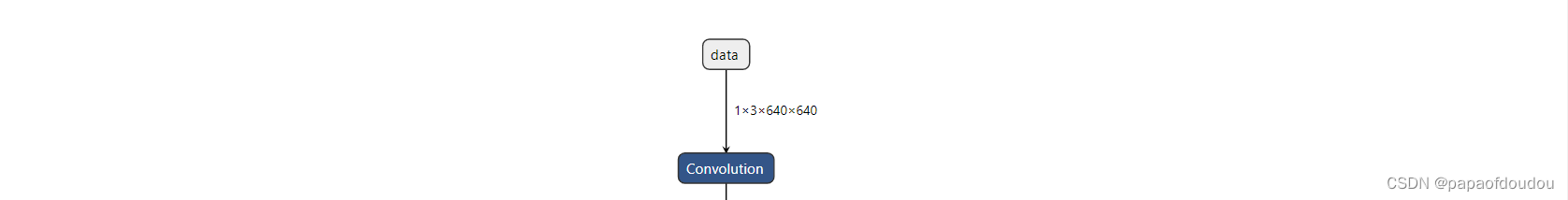

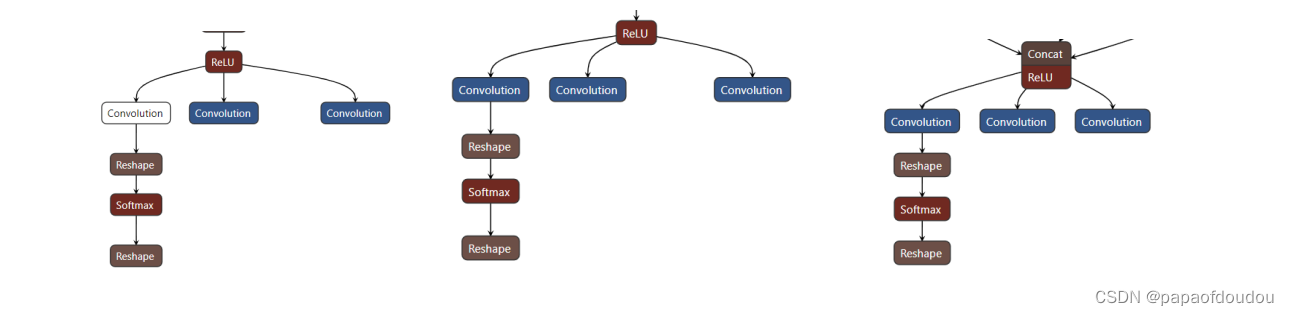

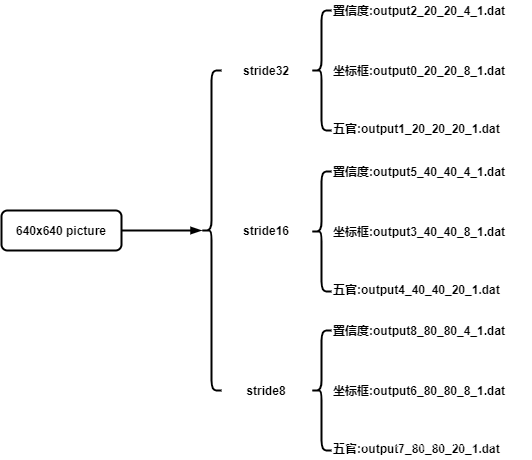

Network analysis:

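One input, nine outputs: a class score blob, a box regression blob and a landmark regression blob for each of the strides 32, 16 and 8.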
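A small sketch enumerates the NCHW shapes of those nine outputs, assuming the mnet model with 2 anchors per feature-map location and a square input whose side is divisible by 32. `BlobShape` and `retinaface_outputs` are illustrative names only.

```cpp
#include <cassert>
#include <vector>

// Shape of one RetinaFace (mnet) output blob in NCHW order, batch 1.
struct BlobShape
{
    int stride;  // feature stride of the pyramid level
    int c, h, w; // channels, height, width
};

// Enumerate the nine outputs for a square input of side input_size.
// Per anchor: 2 class scores (background, face), 4 box deltas, 10 landmark offsets.
inline std::vector<BlobShape> retinaface_outputs(int input_size, int num_anchors = 2)
{
    std::vector<BlobShape> shapes;
    const int strides[3] = {32, 16, 8};
    for (int s : strides)
    {
        assert(input_size % s == 0);
        int size = input_size / s;
        shapes.push_back({s, num_anchors * 2, size, size});  // score
        shapes.push_back({s, num_anchors * 4, size, size});  // bbox
        shapes.push_back({s, num_anchors * 10, size, size}); // landmark
    }
    return shapes;
}
```

For a 640×640 input this gives 1×4×20×20, 1×8×20×20 and 1×20×20×20 at stride 32, and the matching 40×40 and 80×80 shapes at strides 16 and 8; the same numbers appear in the names of the dumped tensor files used in the program below. With ratio 1, `generate_anchors()` produces square anchors of side 16 × scale: 512 and 256 pixels at stride 32, 128 and 64 at stride 16, 32 and 16 at stride 8.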

Post-processing program, modified from the NCNN RetinaFace example:

```cpp
// Tencent is pleased to support the open source community by making ncnn available.
//
// Copyright (C) 2019 THL A29 Limited, a Tencent company. All rights reserved.
//
// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except
// in compliance with the License. You may obtain a copy of the License at
//
// https://opensource.org/licenses/BSD-3-Clause
//
// Unless required by applicable law or agreed to in writing, software distributed
// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR
// CONDITIONS OF ANY KIND, either express or implied. See the License for the
// specific language governing permissions and limitations under the License.

#include "net.h"

#if defined(USE_NCNN_SIMPLEOCV)
#include "simpleocv.h"
#else
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#endif

#include <math.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#include <algorithm>
#include <string>
#include <vector>

struct FaceObject
{
    cv::Rect_<float> rect;
    cv::Point2f landmark[5];
    float prob;
};

static inline float intersection_area(const FaceObject& a, const FaceObject& b)
{
    cv::Rect_<float> inter = a.rect & b.rect;
    return inter.area();
}

static void qsort_descent_inplace(std::vector<FaceObject>& faceobjects, int left, int right)
{
    int i = left;
    int j = right;
    float p = faceobjects[(left + right) / 2].prob;

    while (i <= j)
    {
        while (faceobjects[i].prob > p)
            i++;

        while (faceobjects[j].prob < p)
            j--;

        if (i <= j)
        {
            // swap
            std::swap(faceobjects[i], faceobjects[j]);
            i++;
            j--;
        }
    }

    #pragma omp parallel sections
    {
        #pragma omp section
        {
            if (left < j) qsort_descent_inplace(faceobjects, left, j);
        }
        #pragma omp section
        {
            if (i < right) qsort_descent_inplace(faceobjects, i, right);
        }
    }
}

static void qsort_descent_inplace(std::vector<FaceObject>& faceobjects)
{
    if (faceobjects.empty())
        return;

    qsort_descent_inplace(faceobjects, 0, faceobjects.size() - 1);
}

static void nms_sorted_bboxes(const std::vector<FaceObject>& faceobjects, std::vector<int>& picked, float nms_threshold)
{
    picked.clear();

    const int n = faceobjects.size();

    std::vector<float> areas(n);
    for (int i = 0; i < n; i++)
    {
        areas[i] = faceobjects[i].rect.area();
    }

    for (int i = 0; i < n; i++)
    {
        const FaceObject& a = faceobjects[i];

        int keep = 1;
        for (int j = 0; j < (int)picked.size(); j++)
        {
            const FaceObject& b = faceobjects[picked[j]];

            // intersection over union
            float inter_area = intersection_area(a, b);
            float union_area = areas[i] + areas[picked[j]] - inter_area;
            // float IoU = inter_area / union_area
            if (inter_area / union_area > nms_threshold)
                keep = 0;
        }

        if (keep)
            picked.push_back(i);
    }
}

// copy from src/layer/proposal.cpp
static ncnn::Mat generate_anchors(int base_size, const ncnn::Mat& ratios, const ncnn::Mat& scales)
{
    int num_ratio = ratios.w;
    int num_scale = scales.w;

    ncnn::Mat anchors;
    anchors.create(4, num_ratio * num_scale);

    const float cx = base_size * 0.5f;
    const float cy = base_size * 0.5f;

    for (int i = 0; i < num_ratio; i++)
    {
        float ar = ratios[i];

        int r_w = round(base_size / sqrt(ar));
        int r_h = round(r_w * ar); //round(base_size * sqrt(ar));

        for (int j = 0; j < num_scale; j++)
        {
            float scale = scales[j];

            float rs_w = r_w * scale;
            float rs_h = r_h * scale;

            float* anchor = anchors.row(i * num_scale + j);

            anchor[0] = cx - rs_w * 0.5f;
            anchor[1] = cy - rs_h * 0.5f;
            anchor[2] = cx + rs_w * 0.5f;
            anchor[3] = cy + rs_h * 0.5f;
        }
    }

    return anchors;
}

static void generate_proposals(const ncnn::Mat& anchors, int feat_stride, const ncnn::Mat& score_blob, const ncnn::Mat& bbox_blob, const ncnn::Mat& landmark_blob, float prob_threshold, std::vector<FaceObject>& faceobjects)
{
    int w = score_blob.w;
    int h = score_blob.h;

    // generate face proposal from bbox deltas and shifted anchors
    const int num_anchors = anchors.h;

    for (int q = 0; q < num_anchors; q++)
    {
        const float* anchor = anchors.row(q);

        // score_blob has 2 * num_anchors channels: background scores first, then face scores
        const ncnn::Mat score = score_blob.channel(q + num_anchors);
        const ncnn::Mat bbox = bbox_blob.channel_range(q * 4, 4);
        const ncnn::Mat landmark = landmark_blob.channel_range(q * 10, 10);

        // shifted anchor
        float anchor_y = anchor[1];

        float anchor_w = anchor[2] - anchor[0];
        float anchor_h = anchor[3] - anchor[1];

        for (int i = 0; i < h; i++)
        {
            float anchor_x = anchor[0];

            for (int j = 0; j < w; j++)
            {
                int index = i * w + j;

                float prob = score[index];

                if (prob >= prob_threshold)
                {
                    // apply center size
                    float dx = bbox.channel(0)[index];
                    float dy = bbox.channel(1)[index];
                    float dw = bbox.channel(2)[index];
                    float dh = bbox.channel(3)[index];

                    float cx = anchor_x + anchor_w * 0.5f;
                    float cy = anchor_y + anchor_h * 0.5f;

                    float pb_cx = cx + anchor_w * dx;
                    float pb_cy = cy + anchor_h * dy;

                    float pb_w = anchor_w * exp(dw);
                    float pb_h = anchor_h * exp(dh);

                    float x0 = pb_cx - pb_w * 0.5f;
                    float y0 = pb_cy - pb_h * 0.5f;
                    float x1 = pb_cx + pb_w * 0.5f;
                    float y1 = pb_cy + pb_h * 0.5f;

                    FaceObject obj;
                    obj.rect.x = x0;
                    obj.rect.y = y0;
                    obj.rect.width = x1 - x0 + 1;
                    obj.rect.height = y1 - y0 + 1;

                    // 5 landmarks, stored as (x, y) pairs in channels 0..9
                    for (int k = 0; k < 5; k++)
                    {
                        obj.landmark[k].x = cx + (anchor_w + 1) * landmark.channel(k * 2)[index];
                        obj.landmark[k].y = cy + (anchor_h + 1) * landmark.channel(k * 2 + 1)[index];
                    }

                    obj.prob = prob;

                    faceobjects.push_back(obj);
                }

                anchor_x += feat_stride;
            }

            anchor_y += feat_stride;
        }
    }
}

// Read a whitespace-separated text tensor dumped by the inference tool and
// pack it into a w x h x c ncnn::Mat (NCHW order, batch 1).
static ncnn::Mat load_tensor(const std::string& file_path, int w, int h, int c)
{
    FILE* fp = fopen(file_path.c_str(), "r");
    if (!fp)
    {
        fprintf(stderr, "open tensor file %s failed\n", file_path.c_str());
        exit(-1);
    }

    std::vector<float> data;
    data.reserve((size_t)w * h * c);
    float v;
    while (fscanf(fp, "%f", &v) == 1)
        data.push_back(v);
    fclose(fp);

    if (data.size() != (size_t)w * h * c)
    {
        fprintf(stderr, "%s: read %zu values, expected %d\n", file_path.c_str(), data.size(), w * h * c);
        exit(-1);
    }

    ncnn::Mat m(w, h, c);
    for (int q = 0; q < c; q++)
    {
        // channels may be padded (cstep >= w * h), so copy one channel at a time
        float* ptr = m.channel(q);
        memcpy(ptr, data.data() + (size_t)q * w * h, (size_t)w * h * sizeof(float));
    }
    return m;
}

// Decode one pyramid level: get its three output blobs, build the anchors, generate proposals.
static void decode_stride(ncnn::Extractor& ex, int feat_stride, int feat_size, float scale0, float scale1,
                          const char* score_file, const char* bbox_file, const char* landmark_file,
                          float prob_threshold, std::vector<FaceObject>& faceproposals)
{
    ncnn::Mat score_blob, bbox_blob, landmark_blob;
#if 0
    // outputs computed by NCNN itself
    char name[64];
    snprintf(name, sizeof(name), "face_rpn_cls_prob_reshape_stride%d", feat_stride);
    ex.extract(name, score_blob);
    snprintf(name, sizeof(name), "face_rpn_bbox_pred_stride%d", feat_stride);
    ex.extract(name, bbox_blob);
    snprintf(name, sizeof(name), "face_rpn_landmark_pred_stride%d", feat_stride);
    ex.extract(name, landmark_blob);
#else
    // outputs dumped by the VeriSilicon inference tool: 2 anchors x (2 scores, 4 box deltas, 10 landmark offsets)
    (void)ex;
    score_blob = load_tensor(score_file, feat_size, feat_size, 4);
    bbox_blob = load_tensor(bbox_file, feat_size, feat_size, 8);
    landmark_blob = load_tensor(landmark_file, feat_size, feat_size, 20);
#endif

    const int base_size = 16;

    ncnn::Mat ratios(1);
    ratios[0] = 1.f;
    ncnn::Mat scales(2);
    scales[0] = scale0;
    scales[1] = scale1;
    ncnn::Mat anchors = generate_anchors(base_size, ratios, scales);

    std::vector<FaceObject> objects;
    generate_proposals(anchors, feat_stride, score_blob, bbox_blob, landmark_blob, prob_threshold, objects);

    faceproposals.insert(faceproposals.end(), objects.begin(), objects.end());
}

static int detect_retinaface(const cv::Mat& bgr, std::vector<FaceObject>& faceobjects)
{
    ncnn::Net retinaface;

    retinaface.opt.use_vulkan_compute = true;

    // model is converted from
    // https://github.com/deepinsight/insightface/tree/master/RetinaFace#retinaface-pretrained-models
    // https://github.com/deepinsight/insightface/issues/669
    // the ncnn model https://github.com/nihui/ncnn-assets/tree/master/models
    // retinaface.load_param("retinaface-R50.param");
    // retinaface.load_model("retinaface-R50.bin");
    retinaface.load_param("mnet.25-opt.param");
    retinaface.load_model("mnet.25-opt.bin");

    const float prob_threshold = 0.8f;
    const float nms_threshold = 0.4f;

    int img_w = bgr.cols;
    int img_h = bgr.rows;

    ncnn::Mat in = ncnn::Mat::from_pixels(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, img_w, img_h);

    ncnn::Extractor ex = retinaface.create_extractor();

    ex.input("data", in);

    std::vector<FaceObject> faceproposals;

    // the dumped tensors correspond to a 640x640 input: 20x20, 40x40 and 80x80 feature maps
    decode_stride(ex, 32, 20, 32.f, 16.f,
                  "./iter_0_output_212_out0_1_4_20_20.tensor",
                  "./iter_0_output_210_out0_1_8_20_20.tensor",
                  "./iter_0_output_211_out0_1_20_20_20.tensor",
                  prob_threshold, faceproposals);
    decode_stride(ex, 16, 40, 8.f, 4.f,
                  "./iter_0_output_215_out0_1_4_40_40.tensor",
                  "./iter_0_output_213_out0_1_8_40_40.tensor",
                  "./iter_0_output_214_out0_1_20_40_40.tensor",
                  prob_threshold, faceproposals);
    decode_stride(ex, 8, 80, 2.f, 1.f,
                  "./iter_0_output_218_out0_1_4_80_80.tensor",
                  "./iter_0_output_216_out0_1_8_80_80.tensor",
                  "./iter_0_output_217_out0_1_20_80_80.tensor",
                  prob_threshold, faceproposals);

    // sort all proposals by score from highest to lowest
    qsort_descent_inplace(faceproposals);

    // apply nms with nms_threshold
    std::vector<int> picked;
    nms_sorted_bboxes(faceproposals, picked, nms_threshold);

    int face_count = picked.size();

    faceobjects.resize(face_count);
    for (int i = 0; i < face_count; i++)
    {
        faceobjects[i] = faceproposals[picked[i]];

        // clip to image size
        float x0 = faceobjects[i].rect.x;
        float y0 = faceobjects[i].rect.y;
        float x1 = x0 + faceobjects[i].rect.width;
        float y1 = y0 + faceobjects[i].rect.height;

        x0 = std::max(std::min(x0, (float)img_w - 1), 0.f);
        y0 = std::max(std::min(y0, (float)img_h - 1), 0.f);
        x1 = std::max(std::min(x1, (float)img_w - 1), 0.f);
        y1 = std::max(std::min(y1, (float)img_h - 1), 0.f);

        faceobjects[i].rect.x = x0;
        faceobjects[i].rect.y = y0;
        faceobjects[i].rect.width = x1 - x0;
        faceobjects[i].rect.height = y1 - y0;
    }

    return 0;
}

static void draw_faceobjects(const cv::Mat& bgr, const std::vector<FaceObject>& faceobjects)
{
    cv::Mat image = bgr.clone();

    for (size_t i = 0; i < faceobjects.size(); i++)
    {
        const FaceObject& obj = faceobjects[i];

        fprintf(stderr, "%.5f at %.2f %.2f %.2f x %.2f\n", obj.prob,
                obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

        cv::rectangle(image, obj.rect, cv::Scalar(0, 255, 0));

        for (int k = 0; k < 5; k++)
            cv::circle(image, obj.landmark[k], 2, cv::Scalar(0, 255, 255), -1);

        char text[256];
        sprintf(text, "%.1f%%", obj.prob * 100);

        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

        int x = obj.rect.x;
        int y = obj.rect.y - label_size.height - baseLine;
        if (y < 0)
            y = 0;
        if (x + label_size.width > image.cols)
            x = image.cols - label_size.width;

        cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),
                      cv::Scalar(255, 255, 255), -1);

        cv::putText(image, text, cv::Point(x, y + label_size.height),
                    cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
    }

    cv::imshow("image", image);
    cv::waitKey(0);
}

int main(int argc, char** argv)
{
    if (argc != 2)
    {
        fprintf(stderr, "Usage: %s [imagepath]\n", argv[0]);
        return -1;
    }

    const char* imagepath = argv[1];

    cv::Mat m = cv::imread(imagepath, 1);
    if (m.empty())
    {
        fprintf(stderr, "cv::imread %s failed\n", imagepath);
        return -1;
    }

    std::vector<FaceObject> faceobjects;
    detect_retinaface(m, faceobjects);

    draw_faceobjects(m, faceobjects);

    return 0;
}
```

When running this post-processing on the tensors produced by the VeriSilicon (芯原) inference tool, I ran into a problem: only the faces and facial landmarks of three of the women were found. The cause is still under investigation.
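To check the post-processing independently of NCNN and OpenCV, for example against tensors dumped by another inference tool, the center-size box decoding of `generate_proposals()` and the greedy NMS of `nms_sorted_bboxes()` can be reproduced in plain C++. This is only a sketch: `Box`, `decode_box`, `iou` and `nms_sorted` are hypothetical stand-ins, and unlike the program above it does not add +1 pixel to widths and heights.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Plain corner-form box (x0, y0, x1, y1); stands in for cv::Rect_<float>.
struct Box
{
    float x0, y0, x1, y1;
    float area() const { return std::max(0.f, x1 - x0) * std::max(0.f, y1 - y0); }
};

// Center-size decoding: anchor top-left (ax0, ay0) and size (aw, ah)
// plus regression deltas (dx, dy, dw, dh) give the predicted box.
inline Box decode_box(float ax0, float ay0, float aw, float ah,
                      float dx, float dy, float dw, float dh)
{
    assert(aw > 0.f && ah > 0.f);
    float cx = ax0 + aw * 0.5f + aw * dx; // shifted center
    float cy = ay0 + ah * 0.5f + ah * dy;
    float w = aw * std::exp(dw);          // log-space size deltas
    float h = ah * std::exp(dh);
    return Box{cx - w * 0.5f, cy - h * 0.5f, cx + w * 0.5f, cy + h * 0.5f};
}

// Intersection over union of two boxes.
inline float iou(const Box& a, const Box& b)
{
    Box inter{std::max(a.x0, b.x0), std::max(a.y0, b.y0),
              std::min(a.x1, b.x1), std::min(a.y1, b.y1)};
    float inter_area = inter.area();
    return inter_area / (a.area() + b.area() - inter_area);
}

// Greedy NMS over boxes already sorted by descending score:
// keep a box only if it does not overlap any kept box by more than threshold.
inline std::vector<int> nms_sorted(const std::vector<Box>& boxes, float threshold)
{
    assert(threshold >= 0.f && threshold <= 1.f);
    std::vector<int> picked;
    for (int i = 0; i < (int)boxes.size(); i++)
    {
        bool keep = true;
        for (int j : picked)
        {
            if (iou(boxes[i], boxes[j]) > threshold)
            {
                keep = false;
                break;
            }
        }
        if (keep)
            picked.push_back(i);
    }
    return picked;
}
```

Feeding the same score, box and landmark values into both this sketch and the on-device pipeline makes it easier to tell whether a discrepancy comes from the decoding step or from the tensors themselves.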

Deploying my own YOLOv5s

Convert yolov5s.pt to ONNX format by following the blog post below.

Setting up a PyTorch YOLOv5 inference and training environment (papaofdoudou's blog, CSDN)

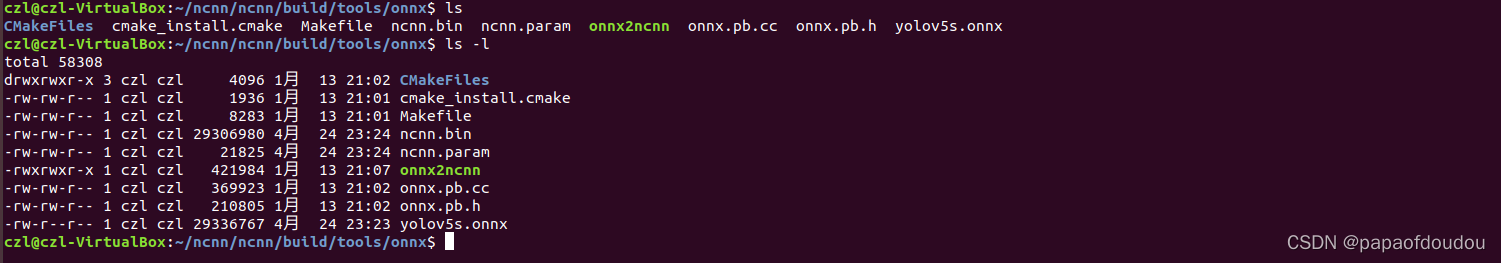

Next, convert the ONNX model to NCNN format:

References

GitHub - wzj5133329/retinaface_caffe: full caffe-cpp implementation

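This article shows how to use NCNN for face detection and facial landmark detection: converting the original RetinaFace model from Caffe to NCNN format and verifying the converted model by running inference. It also covers converting a YOLOv5s model myself and compares face detection with person (human-shape) object detection.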
