投影数据关联-求匹配点

利用算法projective data association对前一帧和当前帧的(Vertex、Normal)进行匹配,算法如下:

- 在当前帧 i 的深度图像上的每一个像素 U并行计算;

- 对于深度值大于0的像素,求该像素点对应的顶点在上一帧所处的相机坐标系下的位置Vi-1:方法是用上一帧的相机位姿Tg,k-1的逆矩阵左乘上一帧中该点对应的像素点的全局坐标Vi-1(g);

- 将Vi-1投影到像素平面得到p;

- 对于p属于当前帧范围内的点(说明该顶点在上一帧中也在相机视口范围内),用上一帧的位姿Tg,k-1左乘该点 Vi§(注:这是本帧中像素p对应的Vertex) 将其投影到全局坐标中得V;

- 同上得全局坐标下法向量 N;

- 如果V和 Vi-1(g)的距离小于阈值,且 N 和 Ni-1(g) 的夹角小于阈值,则找到匹配点对。

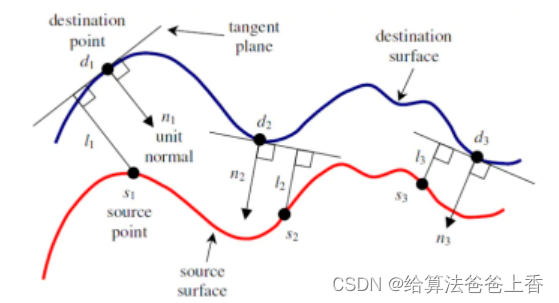

Point-to-Plane ICP

使用点到平面(point-plane)误差度量的迭代最近点 (ICP) 算法已被证明比使用点到点(point-point)误差度量的算法收敛得更快。在 ICP 算法的每次迭代中,产生最小点到平面误差的相对位姿变化通常使用标准的非线性最小二乘法来解决。例如 Levenberg-Marquardt 方法。当使用点到平面误差度量时,最小化的对象是每个源点与其对应目标点的切平面之间的平方距离之和。

详细介绍见点到面的ICP算法。

Frame.h

#pragma once

#include <Eigen/Dense>

#include <opencv2/opencv.hpp>

#define MINF -std::numeric_limits<float>::infinity()

class Frame

{

public:

Frame(cv::Mat depthMap, cv::Mat colorMap, Eigen::Matrix3f& depthIntrinsics, Eigen::Matrix4f& depthExtrinsics);

Eigen::Vector3f getVertexGlobal(size_t idx) const;

Eigen::Vector3f getNormalGlobal(size_t idx) const;

std::vector<Eigen::Vector3f>& getVertexMapGlobal();

std::vector<Eigen::Vector3f>& getNormalMapGlobal();

std::vector<Eigen::Vector3f>& getVertexMap();

std::vector<Eigen::Vector3f>& getNormalMap();

int getFrameHeight();

int getFrameWidth();

bool containsImgPoint(Eigen::Vector2i);

Eigen::Vector3f projectPointIntoFrame(const Eigen::Vector3f& point);

Eigen::Vector2i projectOntoImgPlane(const Eigen::Vector3f& point);

private:

void computeVertexMap(cv::Mat depthMap, const Eigen::Matrix3f& depthIntrinsics);

void computeNormalMap(cv::Mat depthMap);

std::vector<Eigen::Vector3f> transformPoints(const std::vector<Eigen::Vector3f>& points, const Eigen::Matrix4f& transformation);

std::vector<Eigen::Vector3f> rotatePoints(const std::vector<Eigen::Vector3f>& points, const Eigen::Matrix3f& rotation);

int depthWidth;

int depthHeight;

cv::Mat depthMap;

cv::Mat colorMap;

std::shared_ptr<std::vector<Eigen::Vector3f>> mVertices;

std::shared_ptr<std::vector<Eigen::Vector3f>> mNormals;

std::shared_ptr<std::vector<Eigen::Vector3f>> mVerticesGlobal;

std::shared_ptr<std::vector<Eigen::Vector3f>> mNormalsGlobal;

Eigen::Matrix4f extrinsicMatrix;

Eigen::Matrix3f intrinsicMatrix;

};

Frame.cpp

#include "Frame.h"

Frame::Frame(cv::Mat depthMap, cv::Mat colorMap, Eigen::Matrix3f& depthIntrinsics, Eigen::Matrix4f& depthExtrinsics): depthMap(depthMap), colorMap(colorMap)

{

depthWidth = depthMap.cols;

depthHeight = depthMap.rows;

computeVertexMap(depthMap, depthIntrinsics);

computeNormalMap(depthMap);

intrinsicMatrix = depthIntrinsics;

extrinsicMatrix = depthExtrinsics;

Eigen::Matrix4f extrinsicMatrixInv = extrinsicMatrix.inverse();

const auto rotation = extrinsicMatrixInv.block(0, 0, 3, 3);

mVerticesGlobal = std::make_shared<std::vector<Eigen::Vector3f>>(transformPoints(*mVertices, extrinsicMatrixInv));

mNormalsGlobal = std::make_shared<std::vector<Eigen::Vector3f>>(rotatePoints(*mNormals, rotation));

}

Eigen::Vector3f Frame::getVertexGlobal(size_t idx) const { return mVerticesGlobal->at(idx); }

Eigen::Vector3f Frame::getNormalGlobal(size_t idx) const { return mNormalsGlobal->at(idx); }

bool Frame::containsImgPoint(Eigen::Vector2i imgPoint)

{

return imgPoint[0] >= 0 && imgPoint[0] < depthWidth && imgPoint[1] >= 0 && imgPoint[1] < depthHeight;

}

int Frame::getFrameHeight() { return depthHeight; }

int Frame::getFrameWidth() { return depthWidth; }

std::vector<Eigen::Vector3f>& Frame::getVertexMap() { return *mVertices; }

std::vector<Eigen::Vector3f>& Frame::getNormalMap() { return *mNormals; }

std::vector<Eigen::Vector3f>& Frame::getVertexMapGlobal() { return *mVerticesGlobal; }

std::vector<Eigen::Vector3f>& Frame::getNormalMapGlobal() { return *mNormalsGlobal; }

Eigen::Vector3f Frame::projectPointIntoFrame(const Eigen::Vector3f& point)

{

const auto rotation = extrinsicMatrix.block(0, 0, 3, 3);

const auto translation = extrinsicMatrix.block(0, 3, 3, 1);

return rotation * point + translation;

}

Eigen::Vector2i Frame::projectOntoImgPlane(const Eigen::Vector3f& point)

{

Eigen::Vector3f projected = intrinsicMatrix * point;

if (projected[2] == 0) return Eigen::Vector2i(MINF, MINF);

projected /= projected[2];

return Eigen::Vector2i((int)round(projected.x()), (int)round(projected.y()));

}

std::vector<Eigen::Vector3f> Frame::transformPoints(const std::vector<Eigen::Vector3f>& points, const Eigen::Matrix4f& transformation)

{

const Eigen::Matrix3f rotation = transformation.block(0, 0, 3, 3);

const Eigen::Vector3f translation = transformation.block(0, 3, 3, 1);

std::vector<Eigen::Vector3f> transformed(points.size());

for (size_t idx = 0; idx < points.size(); ++idx)

{

if (points[idx].allFinite())

transformed[idx] = rotation * points[idx] + translation;

else

transformed[idx] = Eigen::Vector3f(MINF, MINF, MINF);

}

return transformed;

}

std::vector<Eigen::Vector3f> Frame::rotatePoints(const std::vector<Eigen::Vector3f>& points, const Eigen::Matrix3f& rotation)

{

std::vector<Eigen::Vector3f> transformed(points.size());

for (size_t idx = 0; idx < points.size(); ++idx)

{

if (points[idx].allFinite())

transformed[idx] = rotation * points[idx];

else

transformed[idx] = Eigen::Vector3f(MINF, MINF, MINF);

}

return transformed;

}

void Frame::computeVertexMap(cv::Mat depthMap, const Eigen::Matrix3f& depthIntrinsics)

{

float fx = depthIntrinsics(0, 0);

float fy = depthIntrinsics(1, 1);

float cx = depthIntrinsics(0, 2);

float cy = depthIntrinsics(1, 2);

mVertices = std::make_shared<std::vector<Eigen::Vector3f>>(std::vector<Eigen::Vector3f>());

mVertices->reserve(depthMap.rows * depthMap.cols);

for (size_t i = 0; i < depthMap.rows; i++)

{

for (size_t j = 0; j < depthMap.cols; j++)

{

if (depthMap.at<ushort>(i, j) == 0)

mVertices->emplace_back(Eigen::Vector3f(MINF, MINF, MINF));

else

{

float depth = (float)depthMap.at<ushort>(i, j) / 5000.0f;

mVertices->emplace_back(Eigen::Vector3f((j - cx) / fx * depth, (i - cy) / fy * depth, depth));

}

}

}

}

void Frame::computeNormalMap(cv::Mat depthMap)

{

mNormals = std::make_shared<std::vector<Eigen::Vector3f>>(std::vector<Eigen::Vector3f>());

mNormals->reserve(depthMap.rows * depthMap.cols);

for (size_t i = 0; i < depthMap.rows; i++)

{

for (size_t j = 0; j < depthMap.cols; j++)

{

size_t idx = i * depthMap.cols + j;

if (i == 0 || j == 0 || i == depthMap.rows - 1 || j == depthMap.cols - 1)

mNormals->emplace_back(Eigen::Vector3f(MINF, MINF, MINF));

else

{

Eigen::Vector3f du = mVertices->at(idx + 1) - mVertices->at(idx - 1);

Eigen::Vector3f dv = mVertices->at(idx + depthMap.cols) - mVertices->at(idx - depthMap.cols);

if (du.allFinite() && dv.allFinite())

mNormals->emplace_back(du.cross(dv).normalized());

else

mNormals->emplace_back(Eigen::Vector3f(MINF, MINF, MINF));

}

}

}

}

ICP.h

#pragma once

#include <Eigen/Dense>

#include "Frame.h"

class ICP

{

public:

ICP(Frame &_prevFrame, Frame &_curFrame, const double distanceThreshold, const double normalThreshold);

Eigen::Matrix4f estimatePose(Eigen::Matrix4f& pose, int iterations = 10 );

std::vector<std::pair<size_t, size_t>> findIndicesOfCorrespondingPoints(const Eigen::Matrix4f &pose);

private:

Frame &prevFrame;

Frame &curFrame;

const double distanceThreshold;

const double normalThreshold;

};

ICP.cpp

#include "ICP.h"

ICP::ICP(Frame& _prevFrame, Frame& _curFrame, const double distanceThreshold, const double normalThreshold):

prevFrame(_prevFrame), curFrame(_curFrame), distanceThreshold(distanceThreshold), normalThreshold(normalThreshold) {}

Eigen::Matrix4f ICP::estimatePose(Eigen::Matrix4f& pose, int iterations)

{

for (size_t iteration = 0; iteration < iterations; iteration++)

{

const std::vector<std::pair<size_t, size_t>> correspondenceIds = findIndicesOfCorrespondingPoints(pose);

std::cout <<"iteration: "<< iteration << "\t corresponding points: " << correspondenceIds.size() << std::endl;

Eigen::Matrix3f rotationEP = pose.block(0, 0, 3, 3);

Eigen::Vector3f translationEP = pose.block(0, 3, 3, 1);

Eigen::MatrixXf A = Eigen::MatrixXf::Zero(correspondenceIds.size(), 6);

Eigen::VectorXf b = Eigen::VectorXf::Zero(correspondenceIds.size());

for (size_t i = 0; i < correspondenceIds.size(); i++)

{

const auto& x = rotationEP * curFrame.getVertexGlobal(correspondenceIds[i].second) + translationEP;

const auto& y = prevFrame.getVertexGlobal(correspondenceIds[i].first);

const auto& n = prevFrame.getNormalGlobal(correspondenceIds[i].first);

A(i, 0) = n(2) * x(1) - n(1) * x(2);

A(i, 1) = n(0) * x(2) - n(2) * x(0);

A(i, 2) = n(1) * x(0) - n(0) * x(1);

A(i, 3) = n(0);

A(i, 4) = n(1);

A(i, 5) = n(2);

b(i) = n(0) * y(0) + n(1) * y(1) + n(2) * y(2) - n(0) * x(0) - n(1) * x(1) - n(2) * x(2);

}

Eigen::VectorXf x = A.bdcSvd(Eigen::ComputeThinU | Eigen::ComputeThinV).solve(b);

float alpha = x(0), beta = x(1), gamma = x(2);

Eigen::Vector3f translation = x.tail(3);

Eigen::Matrix3f rotation = Eigen::AngleAxisf(alpha, Eigen::Vector3f::UnitX()).toRotationMatrix() *

Eigen::AngleAxisf(beta, Eigen::Vector3f::UnitY()).toRotationMatrix() *

Eigen::AngleAxisf(gamma, Eigen::Vector3f::UnitZ()).toRotationMatrix();

Eigen::Matrix4f curentPose = Eigen::Matrix4f::Identity();

curentPose.block<3, 3>(0, 0) = rotation;

curentPose.block<3, 1>(0, 3) = translation;

pose = curentPose * pose;

}

return pose;

}

std::vector<std::pair<size_t, size_t>> ICP::findIndicesOfCorrespondingPoints(const Eigen::Matrix4f & pose)

{

Eigen::Matrix4f estimatedPose = pose;

std::vector<std::pair<size_t, size_t>> indicesOfCorrespondingPoints;

std::vector<Eigen::Vector3f> prevFrameVertexMapGlobal = prevFrame.getVertexMapGlobal();

std::vector<Eigen::Vector3f> prevFrameNormalMapGlobal = prevFrame.getNormalMapGlobal();

std::vector<Eigen::Vector3f> curFrameVertexMapGlobal = curFrame.getVertexMapGlobal();

std::vector<Eigen::Vector3f> curFrameNormalMapGlobal = curFrame.getNormalMapGlobal();

const auto rotation = estimatedPose.block(0, 0, 3, 3);

const auto translation = estimatedPose.block(0, 3, 3, 1);

const auto estimatedPoseInv = estimatedPose.inverse();

const auto rotationInv = estimatedPoseInv.block(0, 0, 3, 3);

const auto translationInv = estimatedPoseInv.block(0, 3, 3, 1);

for (size_t idx = 0; idx < prevFrameVertexMapGlobal.size(); idx++)

{

Eigen::Vector3f prevPointGlobal = prevFrameVertexMapGlobal[idx];

Eigen::Vector3f prevNormalGlobal = prevFrameNormalMapGlobal[idx];

if (prevPointGlobal.allFinite() && prevNormalGlobal.allFinite())

{

Eigen::Vector3f prevPointCurCamera = rotationInv * prevPointGlobal + translationInv;

Eigen::Vector3f prevNormalCurCamera = rotationInv * prevFrameNormalMapGlobal[idx];

// project point from global camera system into camera system of the current frame

const Eigen::Vector3f prevPointCurFrame = curFrame.projectPointIntoFrame(prevPointCurCamera);

// project point from camera system of the previous frame onto the image plane of the current frame

const Eigen::Vector2i prevPointImgCoordCurFrame = curFrame.projectOntoImgPlane(prevPointCurFrame);

if (curFrame.containsImgPoint(prevPointImgCoordCurFrame))

{

size_t curIdx = prevPointImgCoordCurFrame[1] * curFrame.getFrameWidth() + prevPointImgCoordCurFrame[0];

Eigen::Vector3f curFramePointGlobal = rotation * curFrameVertexMapGlobal[curIdx] + translation;

Eigen::Vector3f curFrameNormalGlobal = rotation * curFrameNormalMapGlobal[curIdx];

if (curFramePointGlobal.allFinite() && curFrameNormalGlobal.allFinite() && (curFramePointGlobal - prevPointGlobal).norm() < distanceThreshold &&

std::abs(curFrameNormalGlobal.dot(prevNormalGlobal)) / curFrameNormalGlobal.norm() / prevNormalGlobal.norm() < normalThreshold)

indicesOfCorrespondingPoints.push_back(std::make_pair(idx, curIdx));

}

}

}

return indicesOfCorrespondingPoints;

}

main.cpp

#include "ICP.h"

#include "Frame.h"

#define DISTANCE_THRESHOLD 0.05

#define ANGLE_THRESHOLD 1.05

#define ICP_ITERATIONS 20

int main()

{

Eigen::Matrix3f depthIntrinsics;

depthIntrinsics << 525, 0, 319.5, 0, 525, 239.5, 0, 0, 1;

Eigen::Matrix4f depthExtrinsics = Eigen::Matrix4f::Identity();

Eigen::Matrix4f init_pose = Eigen::Matrix4f::Identity(4, 4);

cv::Mat colorMap, depthMap;

colorMap = cv::imread("./color/1305031102.175304.png", 1);

depthMap = cv::imread("./depth/1305031102.160407.png", -1);

Frame prevFrame(depthMap, colorMap, depthIntrinsics, depthExtrinsics);

colorMap = cv::imread("./color/1305031102.211214.png", 1);

depthMap = cv::imread("./depth/1305031102.194330.png", -1);

Frame curFrame(depthMap, colorMap, depthIntrinsics, depthExtrinsics);

ICP icp(prevFrame, curFrame, DISTANCE_THRESHOLD, ANGLE_THRESHOLD);

std::cout << icp.estimatePose(init_pose, ICP_ITERATIONS) << std::endl;

return 0;

}

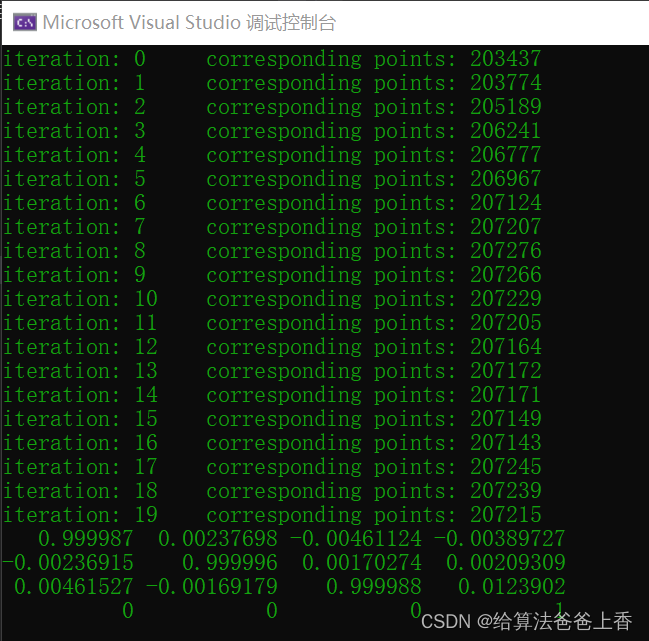

运行结果

文章描述了一种利用Point-to-PlaneICP算法进行投影数据关联的方法,通过前一帧和当前帧的顶点及法向量匹配,寻找对应点。在每次迭代中,使用非线性最小二乘法优化相对位姿变化,以减小点到平面的误差。代码实现包括了Frame类和ICP类,用于处理深度图、计算顶点和法向量,并估计相机位姿。

文章描述了一种利用Point-to-PlaneICP算法进行投影数据关联的方法,通过前一帧和当前帧的顶点及法向量匹配,寻找对应点。在每次迭代中,使用非线性最小二乘法优化相对位姿变化,以减小点到平面的误差。代码实现包括了Frame类和ICP类,用于处理深度图、计算顶点和法向量,并估计相机位姿。

2万+

2万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?