1 Introduction

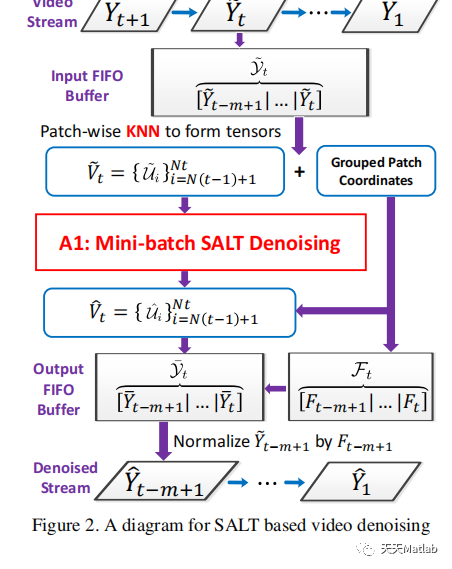

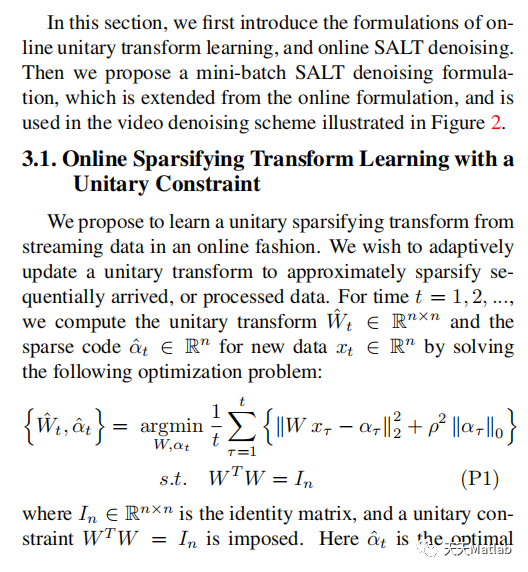

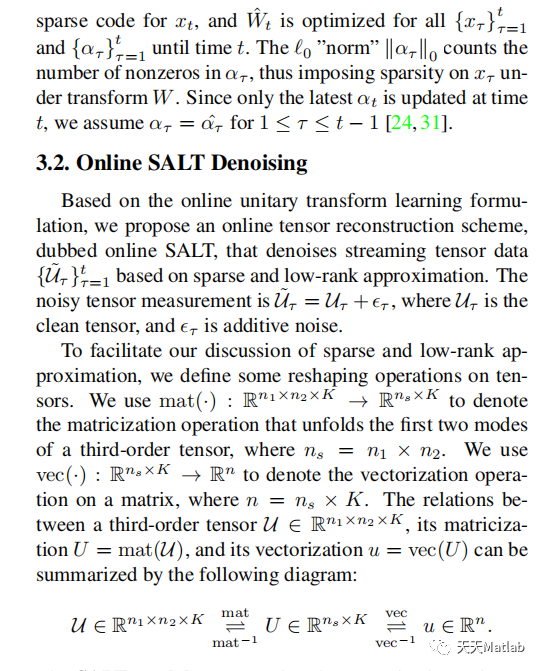

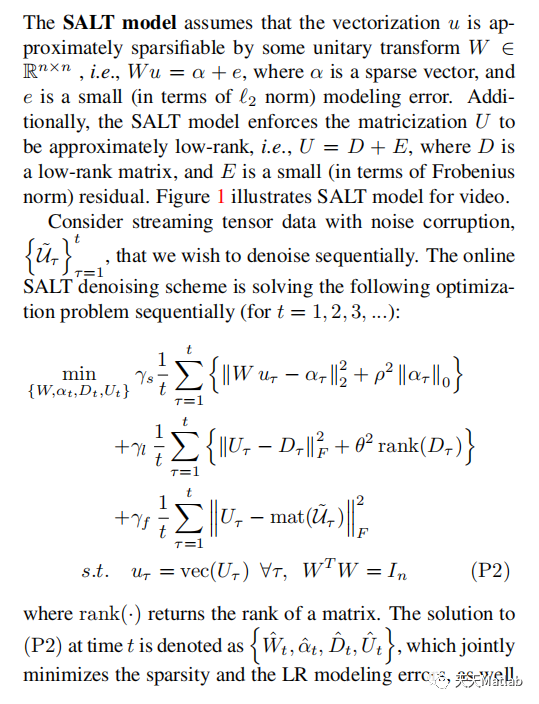

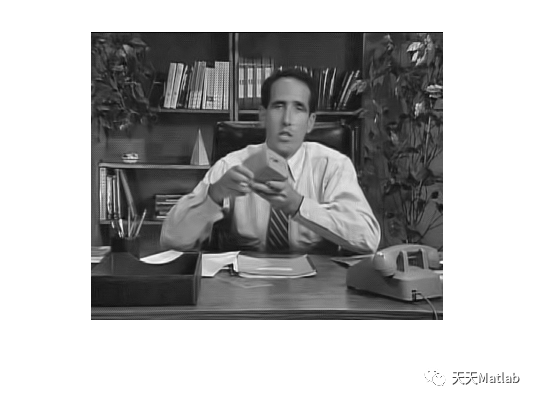

Recent work on adaptive sparse and low-rank signal modeling has demonstrated its usefulness, especially in image and video processing applications. A patch-based sparse model imposes local structure, while the low-rankness of grouped patches exploits non-local correlation. Applying either approach alone usually limits performance in low-level vision tasks. In this work, we propose a novel video denoising method, dubbed SALT, based on an online tensor reconstruction scheme with a joint adaptive sparse and low-rank model. An efficient, unsupervised online unitary sparsifying transform learning method is introduced to impose adaptive sparsity on the fly. We develop an efficient 3D spatio-temporal data reconstruction framework based on the proposed online learning method, which exhibits low latency and can potentially handle streaming videos. To the best of our knowledge, this is the first work that combines adaptive sparsity and low-rankness for video denoising, and the first to solve the proposed problem in an online fashion. We demonstrate video denoising results on commonly used videos from public datasets. Numerical experiments show that the proposed video denoising method outperforms competing methods.
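
To make the two priors in the abstract concrete, here is a minimal MATLAB sketch on synthetic data. It is not the SALT implementation: the data, the thresholds `tauLR` and `tauTL`, the iteration count, and all variable names are assumptions chosen for illustration. Part (a) approximates a matrix of grouped patches by hard-thresholding its singular values (low-rankness). Part (b) alternates hard-thresholded sparse coding with the closed-form update of a unitary transform (adaptive sparsity). `dctmtx` requires the Image Processing Toolbox, which the code in the next section also uses.

```matlab
% Illustrative sketch only: synthetic data, hypothetical thresholds.
rng(0);
n = 64; m = 200; sigma = 0.1;             % patch length, patch count, noise std
Y0 = randn(n, 5) * randn(5, m);           % clean rank-5 "grouped patches"
Y  = Y0 + sigma * randn(n, m);            % noisy observations (one patch per column)

% (a) Low-rank prior: keep only singular values above a threshold.
tauLR = 1.1 * sigma * (sqrt(n) + sqrt(m)); % assumed heuristic threshold
[U, S, V] = svd(Y, 'econ');
s = diag(S);
keep = s > tauLR;
Ylr = U(:, keep) * diag(s(keep)) * V(:, keep)';

% (b) Sparse prior with a unitary transform W (W'*W = I).
tauTL = 3 * sigma;                        % assumed coefficient threshold
W = dctmtx(n);                            % DCT initialization
for it = 1:5
    X = W * Y;
    X(abs(X) < tauTL) = 0;                % sparse coding by hard thresholding
    [P, ~, Q] = svd(Y * X');              % Y*X' = P*Sigma*Q'
    W = Q * P';                           % minimizes ||W*Y - X||_F over unitary W
end
X = W * Y;
X(abs(X) < tauTL) = 0;                    % final sparse codes under the learned W
Ytl = W' * X;                             % map the sparse codes back to patches

fprintf('Relative error, noisy:    %.4f\n', norm(Y   - Y0, 'fro') / norm(Y0, 'fro'));
fprintf('Relative error, low-rank: %.4f\n', norm(Ylr - Y0, 'fro') / norm(Y0, 'fro'));
fprintf('Relative error, sparse:   %.4f\n', norm(Ytl - Y0, 'fro') / norm(Y0, 'fro'));
```

The transform update is the orthogonal Procrustes solution, so `W` stays unitary after every iteration and inverting it only needs a transpose.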

2 Partial Code

    function [Xr, outputParam] = SALT_videodenoising(data, param)
    %Function for denoising the gray-scale video using SALT denoising
    %algorithm.
    %
    %Note that all input parameters need to be set prior to simulation. We
    %provide some example settings using function SALT_videodenoise_param.
    %However, the user is advised to carefully choose optimal values for the
    %parameters depending on the specific data or task at hand.
    %
    % The SALT_videodenoising algorithm denoises a gray-scale video based
    % on joint Sparse And Low-rank Tensor Reconstruction (SALT) method.
    % Detailed discussion can be found in
    %
    % (1) "Joint Adaptive Sparsity and Low-Rankness on the Fly:
    %     An Online Tensor Reconstruction Scheme for Video Denoising",
    %     written by B. Wen, Y. Li, L. Pfister, and Y. Bresler, in Proc. IEEE
    %     International Conference on Computer Vision (ICCV), Oct. 2017.
    %
    %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
    % Inputs -
    %   1. data : Video data / path. The fields are as follows -
    %       - noisy: a*b*numFrame size gray-scale tensor for denoising
    %       - oracle: path to the oracle video (for PSNR calculation)
    %
    %   2. param: Structure that contains the parameters of the
    %      VIDOSAT_videodenoising algorithm. The various fields are as
    %      follows -
    %       - sig: Standard deviation of the additive Gaussian noise
    %         (Example: 20)
    %       - onlineBMflag : set to true, if online VIDOSAT precleaning
    %         is used.
    %
    % Outputs -
    %   1. Xr - Image reconstructed with SALT_videodenoising algorithm.
    %   2. outputParam: Structure that contains the parameters of the
    %      algorithm output for analysis as follows -
    %       - PSNR: PSNR of Xr, if the oracle is provided
    %       - timeOut: run time of the denoising algorithm
    %       - framePSNR: per-frame PSNR values
    %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

    %%%%%%%%%%%%%% parameter & initialization %%%%%%%%%%%%%%
    % (0) Load parameters and data
    param = SALT_videodenoise_param(param);
    noisy = data.noisy;                             % noisy
    % (1-1) Enlarge the frame
    [noisy, param] = module_videoEnlarge(noisy, param);
    if param.onlineBMflag
        data.ref = module_videoEnlarge(data.ref, param);
    end
    [aa, bb, numFrame] = size(noisy);               % height / width / depth
    % (1-2) parameters
    dim = param.dim;                                % patch length, i.e., 8
    n3D = param.n3D;                                % TL tensor size
    tempSearchRange = param.tempSearchRange;
    startChangeFrameNo = tempSearchRange + 1;
    endChangeFrameNo = numFrame - tempSearchRange;
    blkSize = [dim, dim];
    slidingDis = param.strideTemporal;
    numFrameBuffer = tempSearchRange * 2 + 1;
    param.numFrameBuffer = numFrameBuffer;
    nFrame = param.nFrame;
    % (1-3) 2D index
    idxMat = zeros([aa, bb] - blkSize + 1);
    idxMat([[1:slidingDis:end-1],end],[[1:slidingDis:end-1],end]) = 1;
    [indMatA, indMatB] = size(idxMat);
    param.numPatchPerFrame = indMatA * indMatB;
    % (1-4) buffer and output initialization
    IMout = zeros(aa, bb, numFrame);
    Weight = zeros(aa, bb, numFrame);
    buffer.YXT = zeros(n3D, n3D);
    buffer.D = kron(kron(dctmtx(dim), dctmtx(dim)), dctmtx(nFrame));

    %%%%%%%%%%%%%% (2) Main Program - video streaming %%%%%%%%%%%%%
    tic;
    for frame = 1 : numFrame
        display(frame);
        % (0) select G_t
        if frame < startChangeFrameNo
            curFrameRange = 1 : numFrameBuffer;
            centerRefFrame = frame;
        elseif frame > endChangeFrameNo
            curFrameRange = numFrame - numFrameBuffer + 1 : numFrame;
            centerRefFrame = frame - (numFrame - numFrameBuffer);
        else
            curFrameRange = frame - tempSearchRange : frame + tempSearchRange;
            centerRefFrame = startChangeFrameNo;
        end
        % (1) Input buffer
        tempBatch = noisy(:, :, curFrameRange);
        extractPatch = module_video2patch(tempBatch, param);  % patch extraction
        % (2) KNN << Block Matching (BM) >>
        % Options: Online / Offline BM
        if param.onlineBMflag
            % (2-1) online BM using pre-cleaned data
            tempRef = data.ref(:, :, curFrameRange);
            refPatch = module_video2patch(tempRef, param);
            [blk_arr, ~, blk_pSize] = ...
                module_videoBM_fix(refPatch, param, centerRefFrame);
        else
            % (2-2) using offline BM result
            blk_arr = data.BMresult(:, :, frame);
            blk_pSize = data.BMsize(:, :, frame);
        end
        % (3) Denoising current G_t using LR approximation
        [denoisedPatch_LR, weights_LR] = ...
            module_vLRapprox(extractPatch, blk_arr, blk_pSize, param);
        % (4) Denoising current G_t using Online TL
        [denoisedPatch_TL, frameWeights_TL, buffer] = ...
            module_TLapprox(extractPatch, buffer, blk_arr, param);
        % (5) fusion of the LR + TL + noisy here
        denoisedPatch = denoisedPatch_LR + denoisedPatch_TL + extractPatch * param.noisyWeight;
        weights = weights_LR + frameWeights_TL + param.noisyWeight;
        % (6) Aggregation
        [tempBatch, tempWeight] = ...
            module_vblockAggreagtion(denoisedPatch, weights, param);
        % (7) update reconstruction
        IMout(:, :, curFrameRange) = IMout(:, :, curFrameRange) + tempBatch;
        Weight(:, :, curFrameRange) = Weight(:, :, curFrameRange) + tempWeight;
    end
    outputParam.timeOut = toc;

    % (3) Normalization and Output
    Xr = module_videoCrop(IMout, param) ./ module_videoCrop(Weight, param);
    outputParam.PSNR = PSNR3D(Xr - double(data.oracle));
    framePSNR = zeros(1, numFrame);
    for i = 1 : numFrame
        framePSNR(1, i) = PSNR(Xr(:,:,i) - double(data.oracle(:,:,i)));
    end
    outputParam.framePSNR = framePSNR;
    end
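
The frame-buffer selection in step (0) and the weighted accumulation in steps (7) and (3) can be tried in isolation. The following is a minimal sketch that reuses the window logic from the listing above. The toy video, the per-window weight `w`, and the use of the input itself as the "denoised" estimate are assumptions for illustration and stand in for the block matching, LR, and TL modules, which are not reproduced here.

```matlab
% Illustrative sketch only: toy data; w is a hypothetical per-window weight.
rng(0);
numFrame = 10;
tempSearchRange = 2;
numFrameBuffer = 2 * tempSearchRange + 1;
startChangeFrameNo = tempSearchRange + 1;
endChangeFrameNo = numFrame - tempSearchRange;

video  = rand(4, 4, numFrame);            % stands in for the per-window estimates
IMout  = zeros(size(video));              % weighted sum of estimates
Weight = zeros(size(video));              % sum of weights

for frame = 1 : numFrame
    % Select the temporal window and the position of the current frame in it.
    if frame < startChangeFrameNo
        curFrameRange = 1 : numFrameBuffer;
        centerRefFrame = frame;
    elseif frame > endChangeFrameNo
        curFrameRange = numFrame - numFrameBuffer + 1 : numFrame;
        centerRefFrame = frame - (numFrame - numFrameBuffer);
    else
        curFrameRange = frame - tempSearchRange : frame + tempSearchRange;
        centerRefFrame = startChangeFrameNo;
    end
    % The reference index always points back at the current frame.
    assert(curFrameRange(centerRefFrame) == frame);

    % Accumulate a weighted estimate for every frame in the window.
    w = frame;                            % arbitrary positive weight
    IMout(:, :, curFrameRange)  = IMout(:, :, curFrameRange)  + w * video(:, :, curFrameRange);
    Weight(:, :, curFrameRange) = Weight(:, :, curFrameRange) + w;
end

Xr = IMout ./ Weight;                     % normalization, as in step (3)
fprintf('Max deviation after normalization: %.2e\n', max(abs(Xr(:) - video(:))));
```

Because every frame belongs to its own window, `Weight` is strictly positive everywhere and the final division is well defined. Near the start and end of the video the window is clamped to the first or last `numFrameBuffer` frames, so only `centerRefFrame` moves.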

3 Simulation Results

4 References

[1] Wen B, Li Y, Pfister L, et al. Joint Adaptive Sparsity and Low-Rankness on the Fly: An Online Tensor Reconstruction Scheme for Video Denoising[C]// 2017 IEEE International Conference on Computer Vision (ICCV). IEEE, 2017.

