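OpenCV's FaceDetectorYN makes face detection straightforward, and in the previous post I built a demo with it in Emgu CV. In practice I use OpenCvSharp more often, so I wanted to do the same thing there, only to find that OpenCvSharp does not support the FaceDetectorYN class. So I decided to load the FaceDetectorYN model through OpenCvSharp's Dnn module and run inference with it directly. A quick search turned up what looks like an article on face detection with FaceDetectorYN in OpenCvSharp, but it is paywalled, so I decided to work it out myself. The code below basically achieves the effect, but its accuracy and detection results still differ somewhat from the FaceDetectorYN built into OpenCV. I don't know the cause; if anyone can improve the code or point me in the right direction, I would appreciate it.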

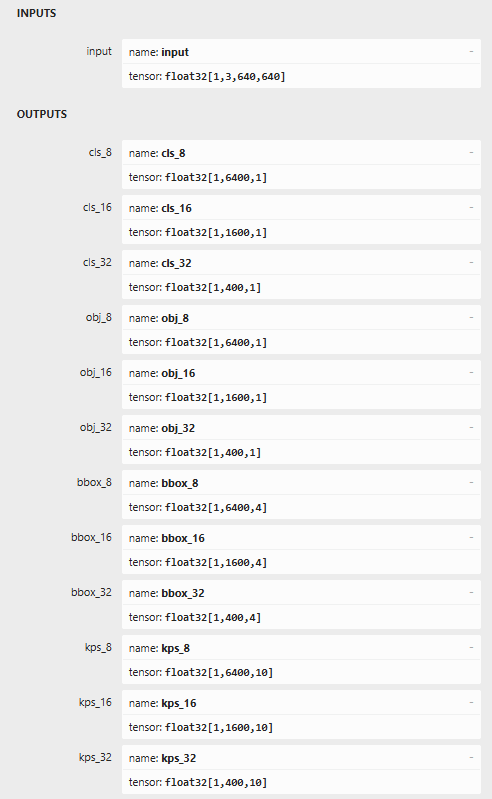

Model inputs and outputs:

The full code is as follows:

```csharp
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System;
using System.Collections.Generic;
using System.Linq;

public class YunetFaceDetector
{
    private Net _net;
    private float _confThreshold = 0.8f;  // confidence threshold
    private float _nmsThreshold = 0.35f;  // NMS threshold

    // Initialize the model
    public void LoadModel(string modelPath)
    {
        _net = CvDnn.ReadNet(modelPath);            // load the ONNX model
        _net.SetPreferableBackend(Backend.OPENCV);  // use the OpenCV backend
        _net.SetPreferableTarget(Target.CPU);       // CPU inference by default (GPU requires a CUDA setup)
    }

    // Run face detection
    // Note: inside Detect, the out parameters below shadow these two fields.
    float ratio_height;
    float ratio_width;

    public Mat Detect(Mat image, out float ratio_height, out float ratio_width)
    {
        // Scale the image: pad it onto a square canvas, then resize to 640x640
        int h = image.Rows;
        int w = image.Cols;
        Mat temp_image = image.Clone();
        int _max = Math.Max(h, w);
        Mat input_img = Mat.Zeros(new Size(_max, _max), MatType.CV_8UC3);
        Rect roi = new Rect(0, 0, w, h);
        temp_image.CopyTo(input_img[roi]);  // image goes in the top-left corner

        // The canvas is square, so both ratios have the same value
        ratio_height = input_img.Rows / 640.0f;
        ratio_width = input_img.Cols / 640.0f;
        Cv2.Resize(input_img, input_img, new Size(640, 640));

        // Only the image is passed; every other argument is left at its default
        Mat blob = CvDnn.BlobFromImage(
            input_img
            //1.0,
            //size: new Size(640, 640),  // input size fixed at 640x640
            //mean: new Scalar(0, 0, 0), // normalization mean
            //swapRB: true,              // BGR to RGB (OpenCV defaults to BGR; the model needs RGB)
            //crop: false
        );

        // --- 2. Set the input and run inference ---
        _net.SetInput(blob);
        // …
```
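The `ratio_height` and `ratio_width` values that `Detect` hands back are presumably there so that boxes predicted on the 640x640 input can be scaled back to the original image. Because the image is copied to the top-left corner of the square canvas, there is no padding offset to subtract, and multiplying by the ratio should be enough. Below is a minimal sketch of that arithmetic in plain C#, without OpenCvSharp. The `LetterboxMath` class, its method names, and the `(x, y, w, h)` box layout are hypothetical and are not part of the original listing.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the original code.
public static class LetterboxMath
{
    // Side length of the network input used in Detect.
    public const float InputSize = 640.0f;

    // Ratio between the padded square canvas and the 640x640 network input.
    // The canvas side is max(width, height), as in Detect.
    public static float Ratio(int imageWidth, int imageHeight)
    {
        int side = Math.Max(imageWidth, imageHeight);
        return side / InputSize;
    }

    // Map a box (x, y, w, h) from 640x640 coordinates back to the original image.
    // The image sits at the top-left of the canvas, so no offset is applied.
    public static (float X, float Y, float W, float H) ToOriginal(
        float x, float y, float w, float h, float ratio)
    {
        return (x * ratio, y * ratio, w * ratio, h * ratio);
    }
}
```

For example, a 1920x1080 image is padded to 1920x1920, so `LetterboxMath.Ratio(1920, 1080)` is 1920 / 640 = 3, and a box at `(100, 50)` with size `40x60` in network coordinates maps to `(300, 150)` with size `120x180` in the original image.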
