using OpenCvSharp.Extensions;

using OpenCvSharp;

using OpenVinoSharp;

using System;

using System.Collections.Generic;

using System.ComponentModel;

using System.Data;

using System.Diagnostics;

using System.Drawing;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows.Forms;

using Size = OpenCvSharp.Size;

using OpenCvSharp.Dnn;

using OpenVinoSharp.Extensions.process;

using Point = OpenCvSharp.Point;

using System.IO;

using OpenVinoSharp.Extensions.result;

namespace yolo_world_opencvsharp_net4._8

{

public partial class HandPose2 : Form

{

Core core;

Model model;

CompiledModel compiledModel;

InferRequest inferRequest;

HandDetect handDetect;

string modelPath;

int inHeight;

int inWidth;

const int nPoints = 22; // 21 hand keypoints + 1 background channel

const double thresh = 0.25;

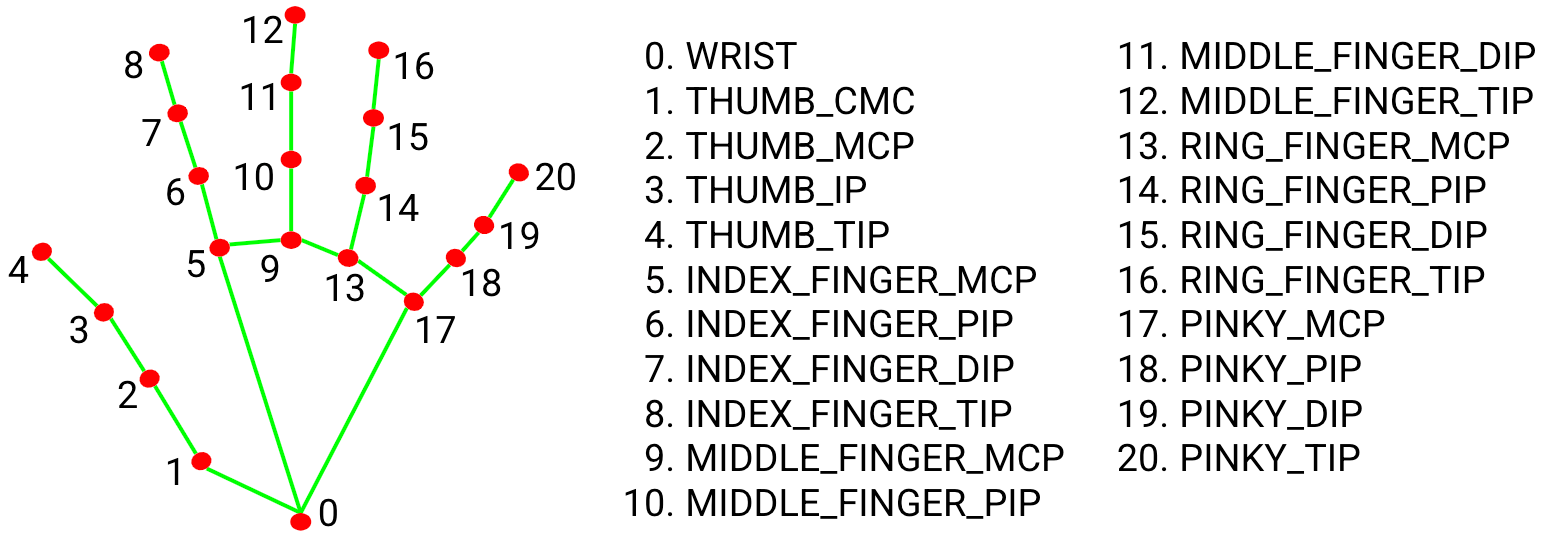

int[][] posePairs =

{

new[] {0, 1}, new[] {1, 2}, new[] {2, 3}, new[] {3, 4}, //thumb

new[] {0, 5}, new[] {5, 6}, new[] {6, 7}, new[] {7, 8}, //index

new[] {0, 9}, new[] {9, 10}, new[] {10, 11}, new[] {11, 12}, //middle

new[] {0, 13}, new[] {13, 14}, new[] {14, 15}, new[] {15, 16}, //ring

new[] {0, 17}, new[] {17, 18}, new[] {18, 19}, new[] {19, 20}, //little finger

new[] {5, 9}, new[] {9, 13}, new[] {13, 17}

};

public HandPose2()

{

InitializeComponent();

}

private void HandPose2_Load(object sender, EventArgs e)

{

modelPath = "D:\\caffe-onnx-master\\HandPose_256_256.onnx";

var size = Path.GetFileNameWithoutExtension(modelPath).Split('_').ToList().GetRange(1, 2).Select(x => int.Parse(x));

inWidth = size.First();

inHeight = size.ElementAt(1);

// Initialize OpenVINO

core = new Core();

// Load and compile the model

model = core.read_model(modelPath);

compiledModel = core.compile_model(model, "GPU.0");

inferRequest = compiledModel.create_infer_request();

handDetect=new HandDetect();

}

private void button1_Click(object sender, EventArgs e)

{

//predict(new Mat(sampleImage));

}

// Container for hand keypoints and their confidences

public class HandKeypoints

{

public List<Point> Points { get; set; } = new List<Point>();

public List<double> Confidences { get; set; } = new List<double>();

}

private HandKeypoints Predict(Mat img)

{

Stopwatch stopwatch = Stopwatch.StartNew();

int frameWidth = img.Cols;

int frameHeight = img.Rows;

Mat resized = new Mat();

Cv2.Resize(img, resized, new Size(inWidth, inHeight));

Mat normalized = new Mat();

resized.ConvertTo(normalized, MatType.CV_32FC3, 1.0 / 255.0);

float[] inputData = Permute.run(normalized);

// Create the input tensor

Tensor inputTensor = new Tensor(

inferRequest.get_input_tensor().get_shape(),

inputData

);

// Set the input and run inference

inferRequest.set_input_tensor(0, inputTensor);

inferRequest.infer();

stopwatch.Stop();

Debug.WriteLine($"Inference time: {stopwatch.ElapsedMilliseconds} ms");

// Read the output

Tensor outputTensor = inferRequest.get_output_tensor(0);

float[] outputData = outputTensor.get_data<float>((int)outputTensor.get_size());

int H = (int)outputTensor.get_shape()[2];

int W = (int)outputTensor.get_shape()[3];

var result = new HandKeypoints();

ReadOnlySpan<float> values = new ReadOnlySpan<float>(outputData);

for (int n = 0; n < nPoints; n++)

{

var probMap = new Mat(H, W, MatType.CV_32F, values.Slice(n * H * W, H * W).ToArray());

Cv2.Resize(probMap, probMap, new Size(frameWidth, frameHeight));

Cv2.MinMaxLoc(probMap, out _, out double maxVal, out _, out Point maxLoc);

if (maxVal > thresh)

{

result.Points.Add(maxLoc);

result.Confidences.Add(maxVal);

}

else

{

result.Points.Add(new Point(-1, -1)); // marks an invalid (low-confidence) point

result.Confidences.Add(0);

}

probMap.Dispose();

}

// Release resources

resized.Dispose();

normalized.Dispose();

inputTensor.Dispose();

outputTensor.Dispose();

return result;

}

private void button2_Click(object sender, EventArgs e)

{

VideoCapture videoCapture = new VideoCapture(@"D:\3D\1752493178789.mp4");

//videoCapture.FrameWidth = 640;

//videoCapture.FrameHeight = 480;

Mat frame = new Mat();

while (true)

{

videoCapture.Read(frame);

if (frame.Empty()) break;

var result = handDetect.image_predict(frame);

Mat displayFrame = frame.Clone();

for (int i = 0; i < result.datas.Count; i++)

{

Rect handBox = result.datas[i].box;

Cv2.Rectangle(displayFrame, handBox, new Scalar(255, 255, 0), 2);

try

{

Mat handRoi = new Mat(frame, handBox);

HandKeypoints keypoints = Predict(handRoi);

// Convert keypoints to original-frame coordinates

List<Point> absolutePoints = keypoints.Points

.Select(p => p != new Point(-1, -1)

? new Point(p.X + handBox.X, p.Y + handBox.Y)

: new Point(-1, -1))

.ToList();

// Draw the keypoints on the frame

foreach (var point in absolutePoints)

{

if (point != new Point(-1, -1))

{

Cv2.Circle(displayFrame, point, 5, new Scalar(0, 0, 255), -1);

}

}

// Draw the skeleton lines

foreach (var pair in posePairs)

{

Point pt1 = absolutePoints[pair[0]];

Point pt2 = absolutePoints[pair[1]];

if (pt1 != new Point(-1, -1) && pt2 != new Point(-1, -1))

{

Cv2.Line(displayFrame, pt1, pt2, new Scalar(0, 255, 0), 2);

}

}

handRoi.Dispose();

}

catch (Exception ex)

{

Debug.WriteLine($"Error while processing hand region: {ex.Message}");

}

}

// Display the processed frame

pictureBox1.Image?.Dispose();

pictureBox1.Image = OpenCvSharp.Extensions.BitmapConverter.ToBitmap(displayFrame);

Cv2.WaitKey(1);

displayFrame.Dispose();

}

videoCapture.Dispose();

frame.Dispose();

}

private void HandPose2_FormClosing(object sender, FormClosingEventArgs e)

{

inferRequest.Dispose();

compiledModel.Dispose();

model.Dispose();

core.Dispose();

}

}

}

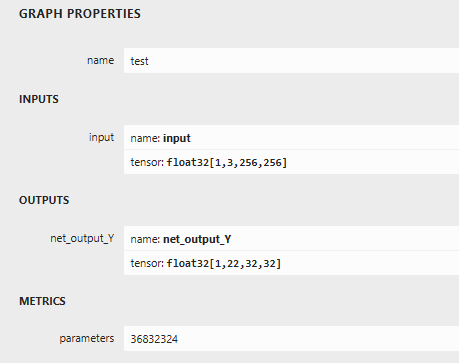

The ONNX model was converted from pose_iter_102000.caffemodel and pose_deploy.prototxt. Its inputs and outputs are shown below.

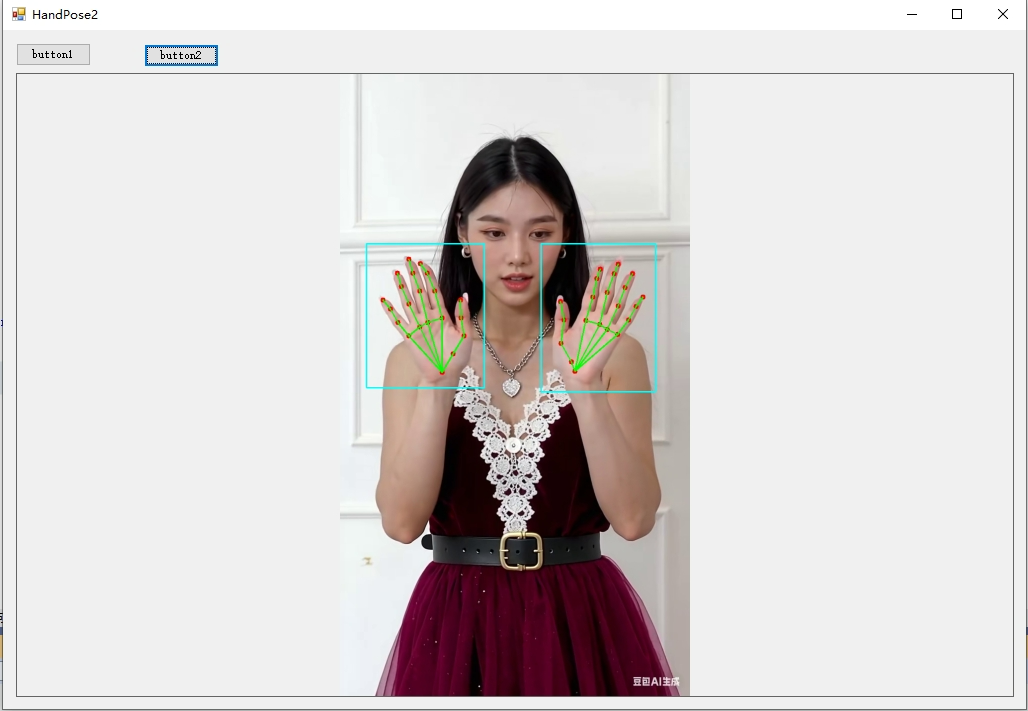
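
`Predict` reads the output as one heatmap per channel, laid out as [1, channels, H, W]. Assuming that row-major layout, a keypoint can also be located by taking the argmax of its channel at heatmap resolution and scaling the coordinates, instead of resizing every heatmap to the crop size. The following is only a sketch: `HeatmapDecoder` and `Decode` are hypothetical names, not part of OpenVinoSharp or OpenCvSharp, and the result can differ by a few pixels from the resize-then-`MinMaxLoc` approach because no interpolation is applied.

```csharp
using System;

// Hypothetical helper, not part of OpenVinoSharp or OpenCvSharp.
public static class HeatmapDecoder
{
    // Returns one (X, Y, Score) per channel in target-image coordinates.
    // X and Y are -1 when the channel's peak does not exceed the threshold.
    public static (int X, int Y, float Score)[] Decode(
        float[] data, int channels, int h, int w,
        int targetWidth, int targetHeight, float threshold)
    {
        if (data.Length < channels * h * w)
            throw new ArgumentException("data is shorter than channels * h * w");

        var result = new (int X, int Y, float Score)[channels];
        for (int c = 0; c < channels; c++)
        {
            int offset = c * h * w;

            // Linear argmax over this channel's H*W values.
            int best = 0;
            for (int i = 1; i < h * w; i++)
                if (data[offset + i] > data[offset + best]) best = i;

            float score = data[offset + best];
            if (score > threshold)
            {
                // Map the centre of the winning heatmap cell to the target image.
                int x = (int)((best % w + 0.5) * targetWidth / w);
                int y = (int)((best / w + 0.5) * targetHeight / h);
                result[c] = (x, y, score);
            }
            else
            {
                result[c] = (-1, -1, 0f);
            }
        }
        return result;
    }
}
```

With the code above, this would be called as `HeatmapDecoder.Decode(outputData, nPoints, H, W, frameWidth, frameHeight, (float)thresh)`, and the returned coordinates are relative to the hand crop, just like `HandKeypoints.Points`.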

YOLOv8 is used for hand detection (handDetect). Once a hand is detected, the hand region is cropped and passed to the hand keypoint model.
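
A detector box can extend past the frame edge. In the posted code, `new Mat(frame, handBox)` would then most likely throw and that hand would be skipped by the `catch` block. One possible way to avoid this is to clamp the box before cropping and to add the clamped offset when mapping keypoints back to the frame. The sketch below uses plain integers and tuples so that it stands alone; `RoiHelper`, `TryClamp`, and `ToFrame` are hypothetical names introduced for illustration.

```csharp
using System;

// Hypothetical helper for the detect-then-crop step.
public static class RoiHelper
{
    // Clamps a box (x, y, width, height) to a frame of the given size.
    // Returns false when nothing of the box lies inside the frame.
    public static bool TryClamp(
        int x, int y, int width, int height,
        int frameWidth, int frameHeight,
        out (int X, int Y, int Width, int Height) clamped)
    {
        int x1 = Math.Max(0, x);
        int y1 = Math.Max(0, y);
        int x2 = Math.Min(frameWidth, x + width);
        int y2 = Math.Min(frameHeight, y + height);
        clamped = (x1, y1, x2 - x1, y2 - y1);
        return clamped.Width > 0 && clamped.Height > 0;
    }

    // Converts a crop-relative point back to frame coordinates.
    // Points marked invalid with a negative coordinate stay (-1, -1).
    public static (int X, int Y) ToFrame(
        (int X, int Y) p, (int X, int Y, int Width, int Height) roi)
        => p.X < 0 || p.Y < 0 ? (-1, -1) : (p.X + roi.X, p.Y + roi.Y);
}
```

In the form code, the clamped values would be used to build the `Rect` passed to `new Mat(frame, ...)`, and the same clamped origin, rather than the raw detector box, would be added to each keypoint.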
