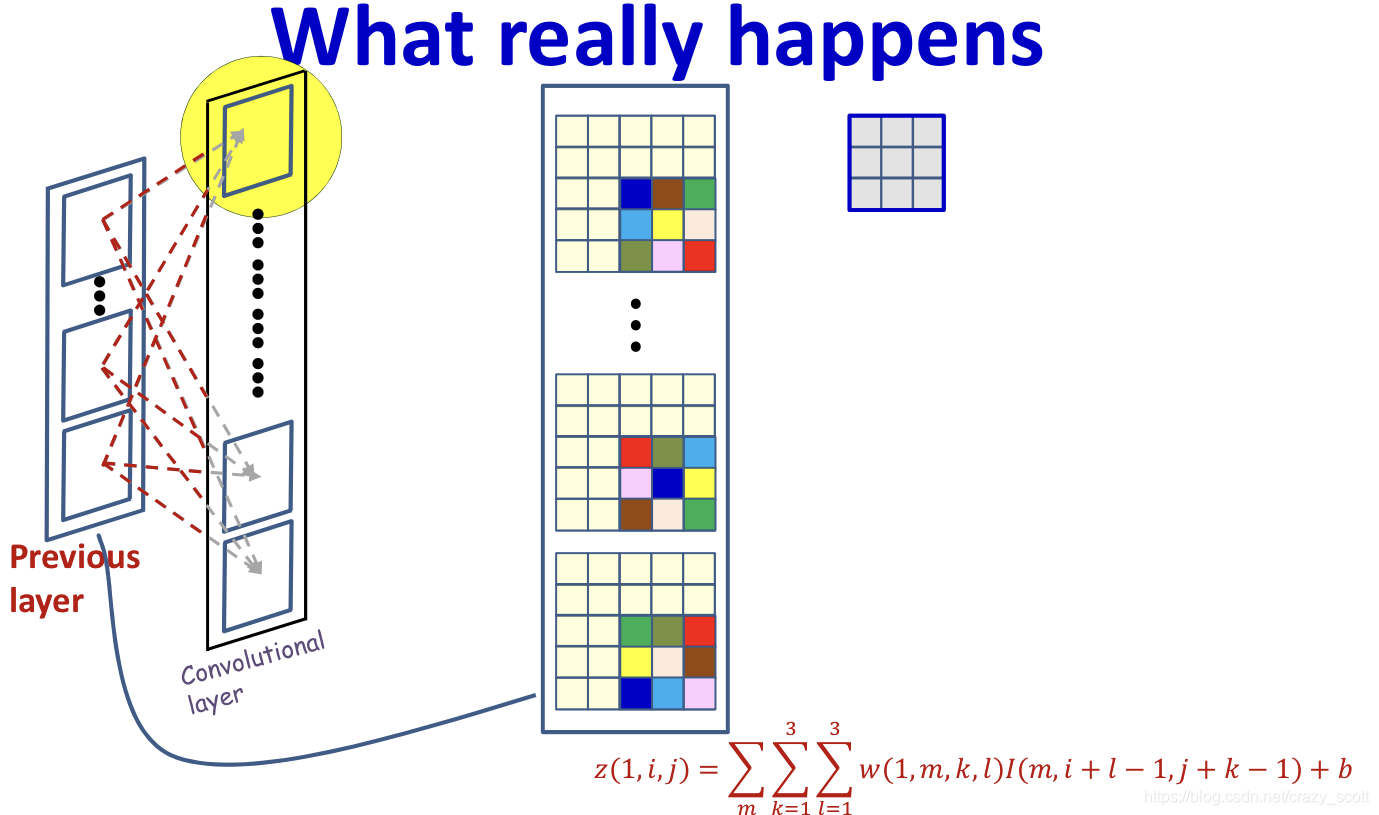

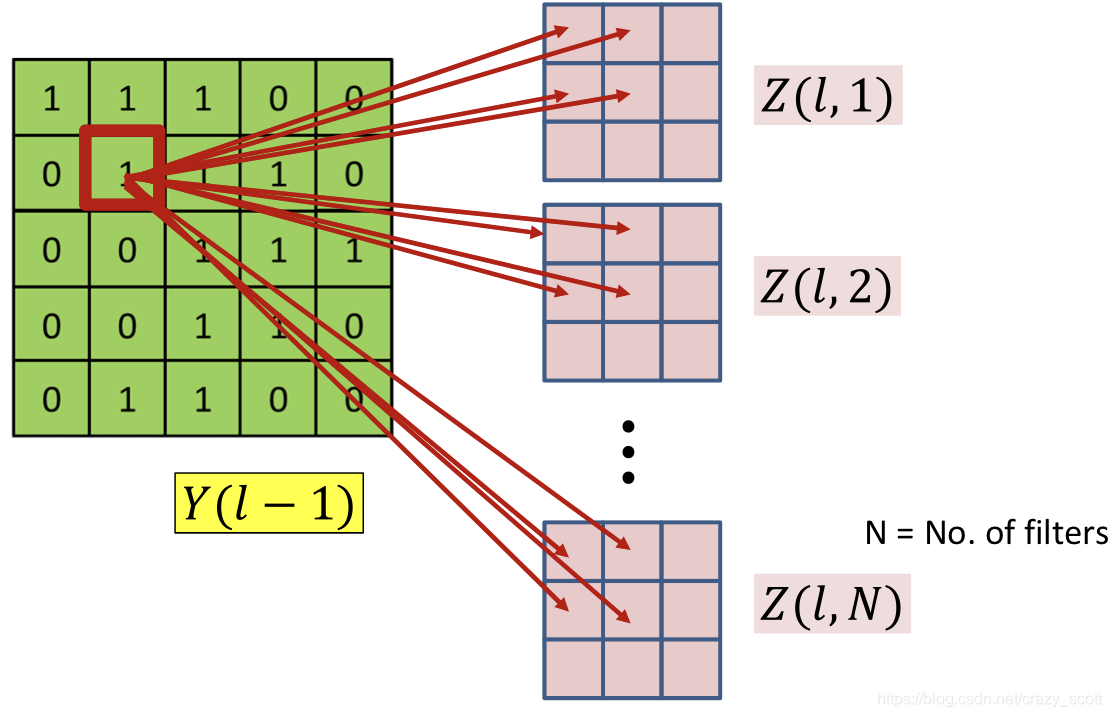

Convolution

- Each position in $z$ holds the result of convolving a filter with the maps of the previous layer

- Way for shrinking the maps

- Stride greater than 1

- Downsampling (not necessary)

- Typically performed with strides > 1

- Pooling
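As a rough illustration of how a stride greater than 1 shrinks the maps, the sketch below implements the convolution with plain NumPy loops. The function name `conv_forward`, the array layout (`Y_prev` as maps x height x width, `w` indexed like `w[m, n, x, y]`) and the absence of padding are assumptions made for this example; the notes do not fix them.

```python
import numpy as np

def conv_forward(Y_prev, w, stride=1):
    """Valid (no padding) convolution layer.

    Y_prev: input maps, shape (M, H, W)
    w:      filters, shape (M, N, K, K), indexed like w_l(m, n, x, y)
    Returns z with shape (N, H_out, W_out).
    """
    M, H, W = Y_prev.shape
    _, N, K, _ = w.shape
    H_out = (H - K) // stride + 1
    W_out = (W - K) // stride + 1
    z = np.zeros((N, H_out, W_out))
    for n in range(N):
        for x in range(H_out):
            for y in range(W_out):
                # Every input map m contributes to output map n.
                patch = Y_prev[:, x * stride:x * stride + K, y * stride:y * stride + K]
                z[n, x, y] = np.sum(w[:, n] * patch)
    return z

rng = np.random.default_rng(0)
Y_prev = rng.standard_normal((3, 8, 8))
w = rng.standard_normal((3, 4, 3, 3))
z_stride1 = conv_forward(Y_prev, w, stride=1)
z_stride2 = conv_forward(Y_prev, w, stride=2)
print(z_stride1.shape, z_stride2.shape)  # (4, 6, 6) (4, 3, 3)
```

With stride 2 the result is the stride-1 map sampled at every second position, which is why striding acts as downsampling.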

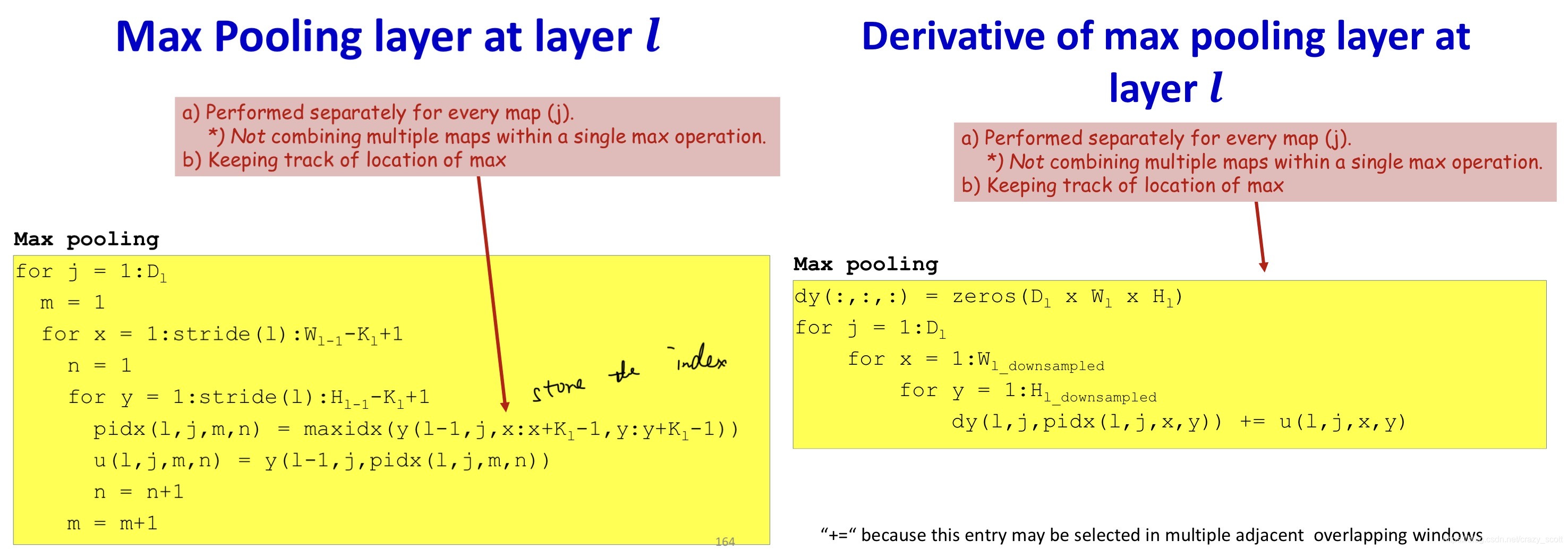

- Maxpooling

- Note: keep track of the location of the max (needed during backpropagation)

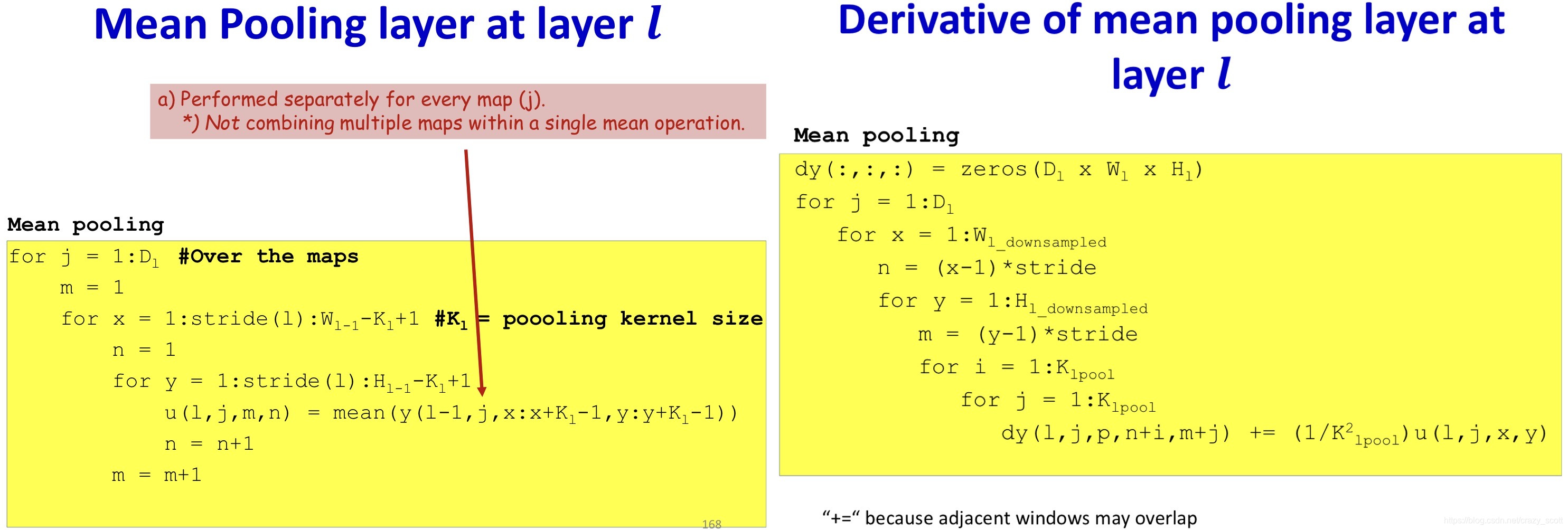

- Mean pooling

- Maxpooling

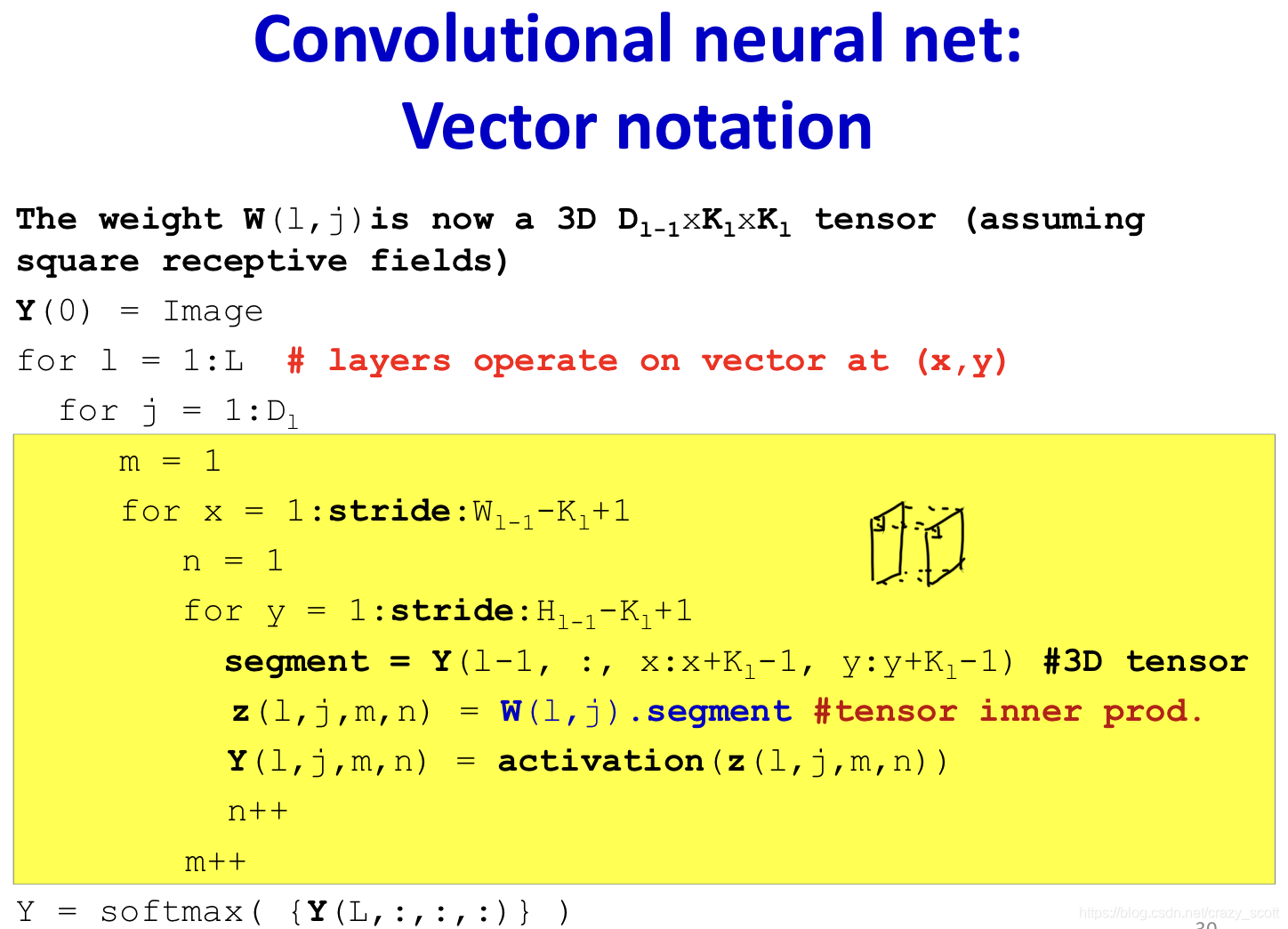

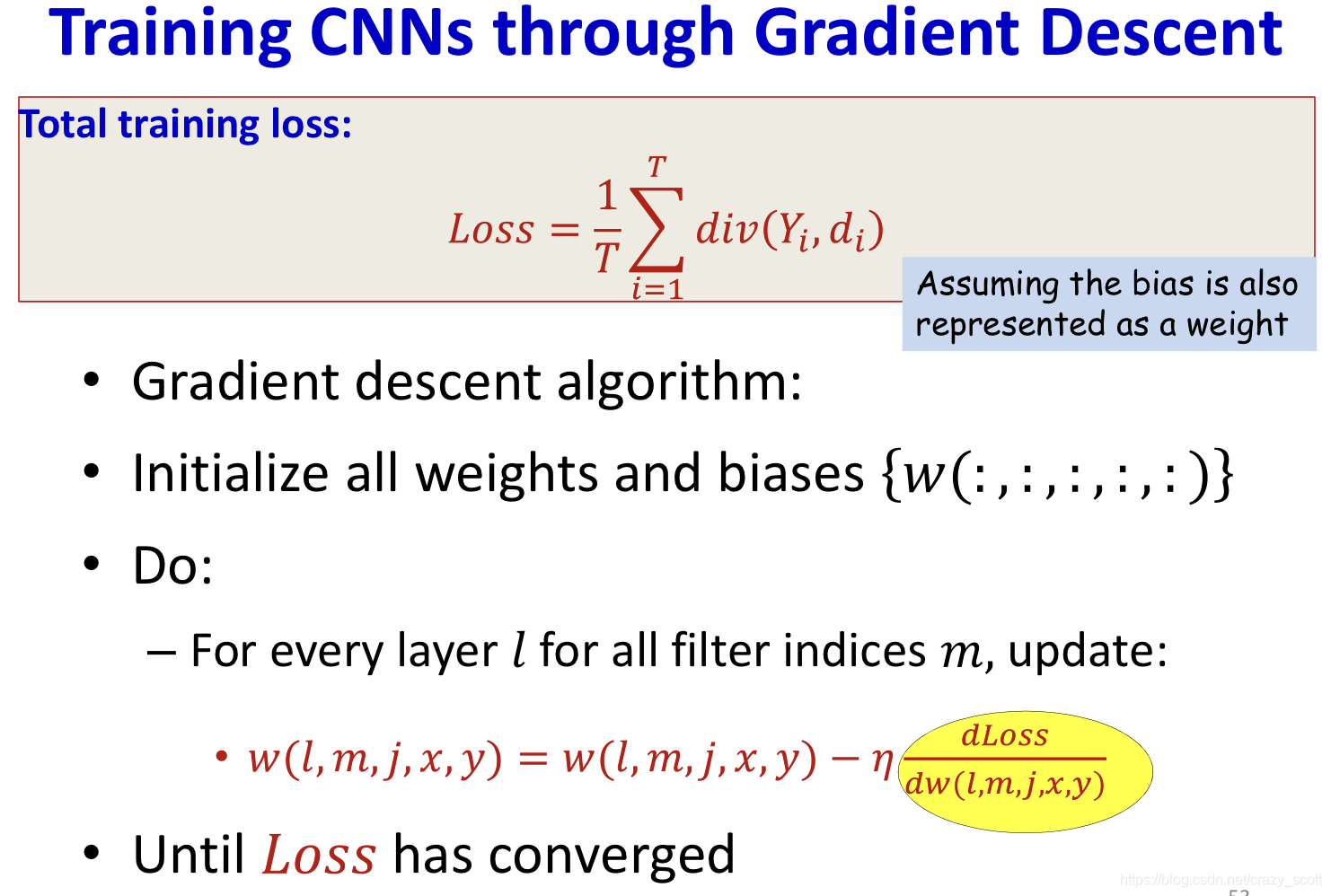

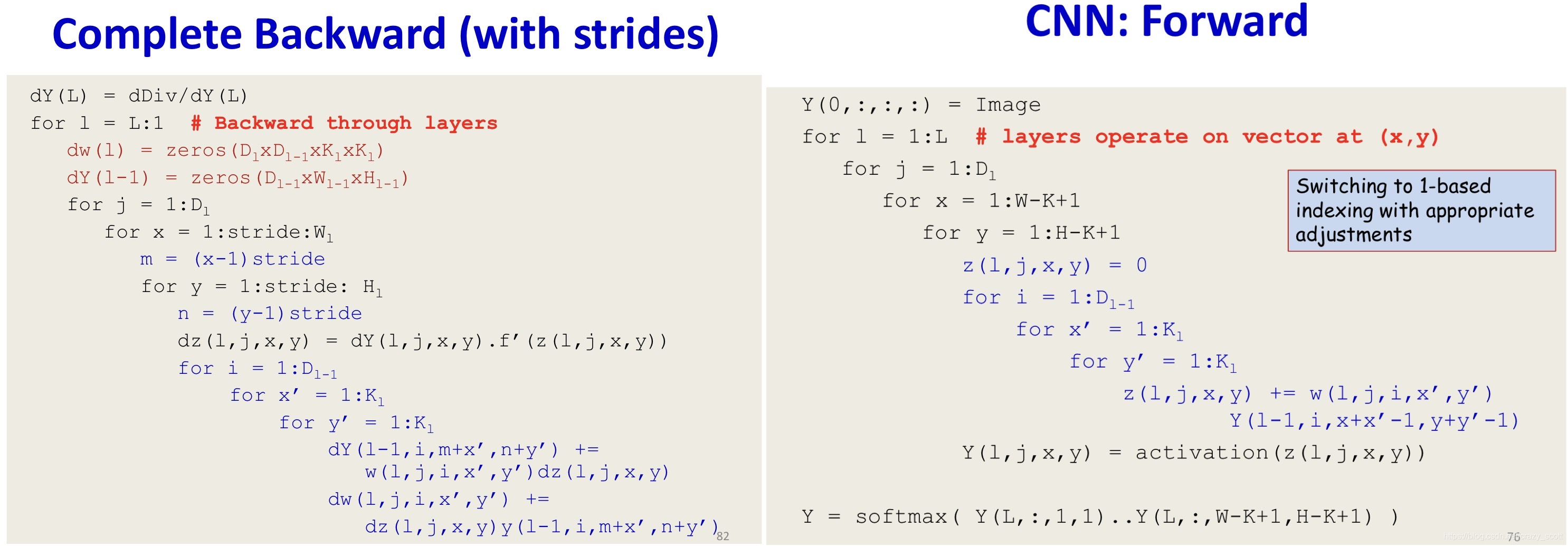

Learning the CNN

- Training is as in the case of the regular MLP

- The only difference is in the structure of the network

- Define a divergence between the desired output and the actual output of the network in response to any input

- Network parameters are trained through variants of gradient descent

- Gradients are computed through backpropagation

Final flat layers

- Backpropagation continues in the usual manner until the derivative of the divergence w.r.t. the input to the first flat layer has been computed

- Recall, in backpropagation:

- Step 1: compute $\frac{\partial Div}{\partial z^{n}}$ and $\frac{\partial Div}{\partial y^{n}}$

- Step 2: compute $\frac{\partial Div}{\partial w^{n}}$ from the results of step 1
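The two steps can be sketched for a single flat layer as follows. This is a minimal example under stated assumptions: the layer computes `z = W @ y_prev` and `y = tanh(z)`, the divergence is half the squared error, and the helper name `dense_backward` is hypothetical.

```python
import numpy as np

def dense_backward(y_prev, W, z, dDiv_dy, f_prime):
    # Step 1: derivative w.r.t. the pre-activation z, then w.r.t. the layer input.
    dDiv_dz = dDiv_dy * f_prime(z)
    dDiv_dy_prev = W.T @ dDiv_dz
    # Step 2: derivative w.r.t. the weights, built from the step 1 result.
    dDiv_dW = np.outer(dDiv_dz, y_prev)
    return dDiv_dz, dDiv_dy_prev, dDiv_dW

def tanh_prime(t):
    return 1.0 - np.tanh(t) ** 2

rng = np.random.default_rng(0)
y_prev = rng.standard_normal(5)
W = rng.standard_normal((3, 5))
d = rng.standard_normal(3)          # desired output (assumed for the example)

def divergence(W_):
    return 0.5 * np.sum((np.tanh(W_ @ y_prev) - d) ** 2)

z = W @ y_prev
y = np.tanh(z)
dDiv_dy = y - d                     # derivative of the assumed squared-error divergence
dDiv_dz, dDiv_dy_prev, dDiv_dW = dense_backward(y_prev, W, z, dDiv_dy, tanh_prime)

# Finite-difference check on one weight.
eps = 1e-6
W_pert = W.copy()
W_pert[1, 2] += eps
numeric = (divergence(W_pert) - divergence(W)) / eps
print(abs(numeric - dDiv_dW[1, 2]) < 1e-4)
```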

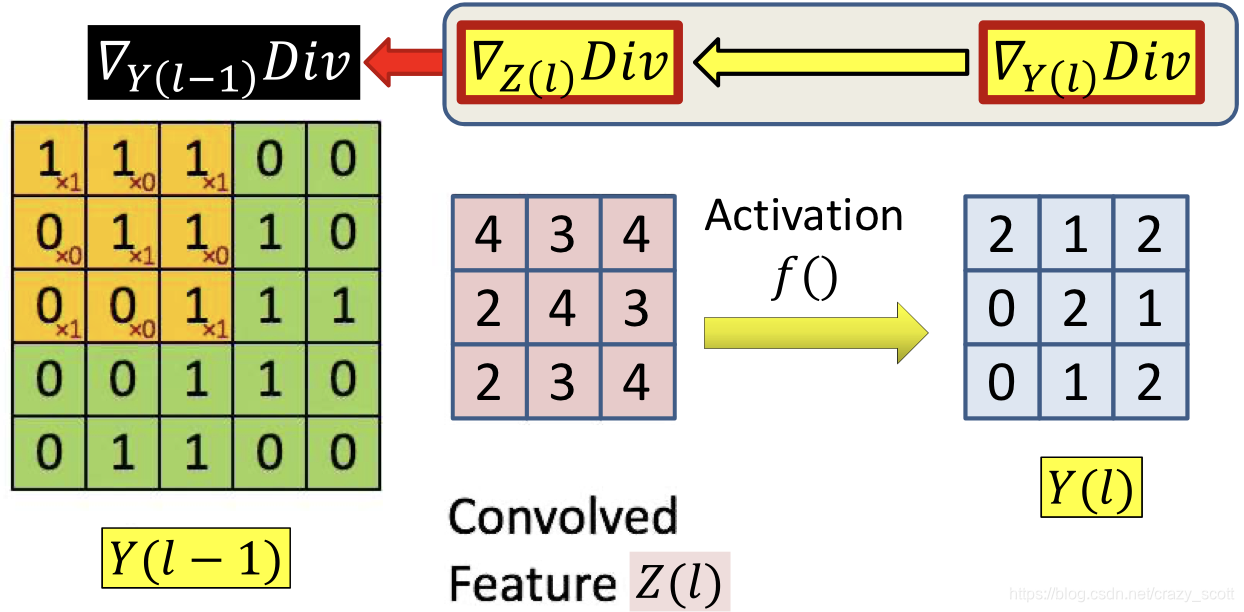

Convolutional layer

Computing $\nabla_{Z(l)} Div$

$$\frac{dDiv}{dz(l,m,x,y)} = \frac{dDiv}{dY(l,m,x,y)} f'(z(l,m,x,y))$$

- Simple component-wise computation
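Because the computation is component-wise, it is a single element-wise product in NumPy. The sketch assumes a ReLU activation purely for illustration; the notes leave $f$ unspecified.

```python
import numpy as np

def relu_prime(z):
    # Derivative of ReLU: 1 where z > 0, else 0.
    return (z > 0).astype(z.dtype)

def dz_from_dY(dDiv_dY, z, f_prime):
    # dDiv/dz(l,m,x,y) = dDiv/dY(l,m,x,y) * f'(z(l,m,x,y)), element by element.
    return dDiv_dY * f_prime(z)

z = np.array([[[-1.0, 2.0], [0.5, -3.0]]])        # shape (maps, height, width)
dDiv_dY = np.array([[[0.1, 0.2], [0.3, 0.4]]])
dDiv_dz = dz_from_dY(dDiv_dY, z, relu_prime)
print(dDiv_dz)
```

Positions where $z$ is negative receive a zero derivative; the others pass the incoming derivative through unchanged.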

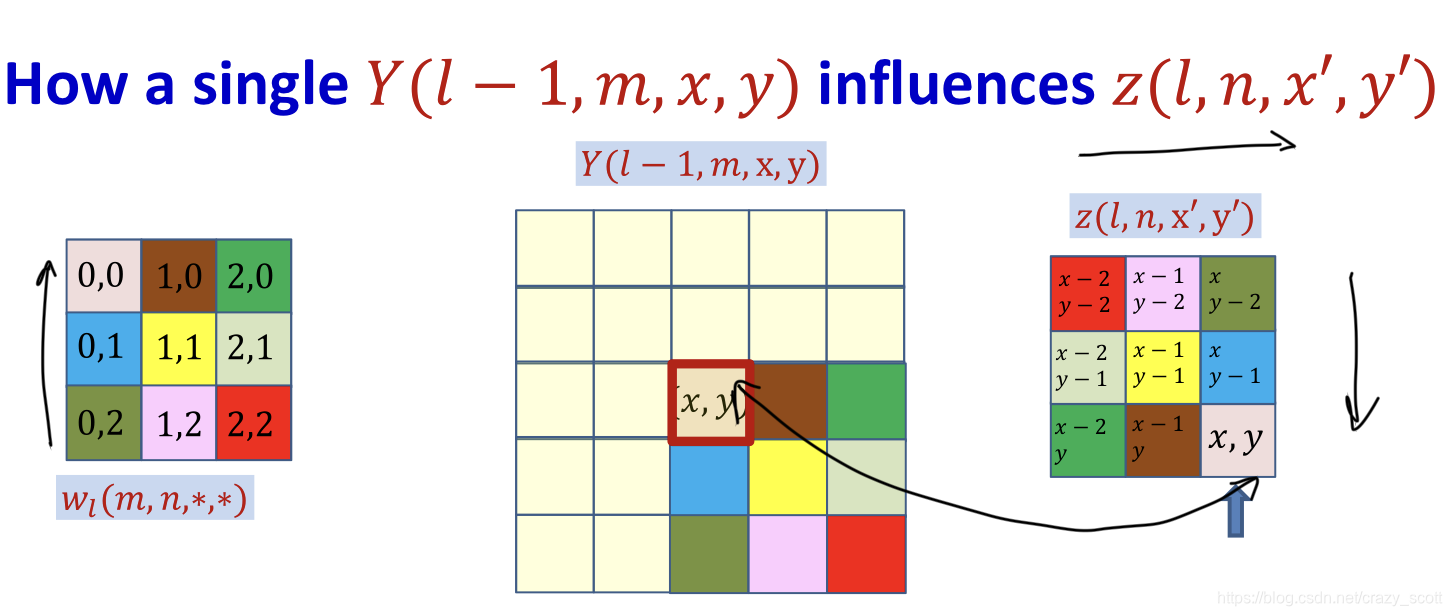

Computing $\nabla_{Y(l-1)} Div$

- Each $Y(l-1,m,x,y)$ affects several $z(l,n,x',y')$ terms for every map $n$

- Through $w_l(m,n,x-x',y-y')$

- Affects terms in all $l^{th}$ layer maps

- All of them contribute to the derivative of the divergence w.r.t. $Y(l-1,m,x,y)$

- Derivative w.r.t. a specific $Y$ term

$$\frac{dDiv}{dY(l-1,m,x,y)} = \sum_{n} \sum_{x',y'} \frac{dDiv}{dz(l,n,x',y')} \frac{dz(l,n,x',y')}{dY(l-1,m,x,y)}$$

$$\frac{dDiv}{dY(l-1,m,x,y)} = \sum_{n} \sum_{x',y'} \frac{dDiv}{dz(l,n,x',y')} w_{l}(m,n,x-x',y-y')$$
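A direct, loop-based sketch of this sum is given below. It assumes stride 1 and no padding, uses the hypothetical names `conv_forward` and `grad_Y_prev`, and checks one entry against a finite difference. To obtain a known $dDiv/dz$, the example uses an artificial divergence $Div = \sum G \cdot z$ with random $G$, which is an assumption of the test and not part of the notes.

```python
import numpy as np

def conv_forward(Y_prev, w):
    # z(l,n,x,y) = sum_m sum_{i,j} w_l(m,n,i,j) * Y(l-1,m,x+i,y+j)
    M, H, W = Y_prev.shape
    _, N, K, _ = w.shape
    z = np.zeros((N, H - K + 1, W - K + 1))
    for n in range(N):
        for x in range(H - K + 1):
            for y in range(W - K + 1):
                z[n, x, y] = np.sum(w[:, n] * Y_prev[:, x:x + K, y:y + K])
    return z

def grad_Y_prev(dDiv_dz, w, in_shape):
    M, N, K, _ = w.shape
    _, H_out, W_out = dDiv_dz.shape
    dDiv_dY = np.zeros(in_shape)
    for n in range(N):
        for xp in range(H_out):
            for yp in range(W_out):
                # z(l,n,x',y') saw Y(l-1,m,x,y) through w_l(m,n,x-x',y-y'),
                # so its derivative is spread back over the same K x K patch.
                dDiv_dY[:, xp:xp + K, yp:yp + K] += dDiv_dz[n, xp, yp] * w[:, n]
    return dDiv_dY

rng = np.random.default_rng(0)
Y_prev = rng.standard_normal((2, 5, 5))
w = rng.standard_normal((2, 3, 3, 3))
G = rng.standard_normal((3, 3, 3))      # plays the role of dDiv/dz

dDiv_dY = grad_Y_prev(G, w, Y_prev.shape)

eps = 1e-6
Y_pert = Y_prev.copy()
Y_pert[1, 2, 3] += eps
numeric = (np.sum(G * conv_forward(Y_pert, w)) - np.sum(G * conv_forward(Y_prev, w))) / eps
analytic = dDiv_dY[1, 2, 3]
print(abs(numeric - analytic) < 1e-5)
```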

Computing $\nabla_{w(l)} Div$

- Each weight $w_l(m,n,x',y')$ also affects several $z(l,n,x,y)$ terms, one for every output position $(x,y)$

- Affects terms in only one $Z$ map (the $n^{th}$ map)

- All entries in the map contribute to the derivative of the divergence w.r.t. $w_l(m,n,x',y')$

- Derivative w.r.t. a specific $w$ term

$$\frac{dDiv}{dw_{l}(m,n,x,y)} = \sum_{x',y'} \frac{dDiv}{dz(l,n,x',y')} \frac{dz(l,n,x',y')}{dw_{l}(m,n,x,y)}$$

$$\frac{dDiv}{dw_{l}(m,n,x,y)} = \sum_{x',y'} \frac{dDiv}{dz(l,n,x',y')} Y(l-1,m,x'+x,y'+y)$$
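The weight derivative can be sketched the same way: each filter entry collects the product of the derivative map with a shifted window of the input map. As before, stride 1 and no padding are assumed, `conv_forward` and `grad_w` are hypothetical names, and the artificial divergence $Div = \sum G \cdot z$ exists only to make a finite-difference check possible.

```python
import numpy as np

def conv_forward(Y_prev, w):
    # z(l,n,x,y) = sum_m sum_{i,j} w_l(m,n,i,j) * Y(l-1,m,x+i,y+j)
    M, H, W = Y_prev.shape
    _, N, K, _ = w.shape
    z = np.zeros((N, H - K + 1, W - K + 1))
    for n in range(N):
        for x in range(H - K + 1):
            for y in range(W - K + 1):
                z[n, x, y] = np.sum(w[:, n] * Y_prev[:, x:x + K, y:y + K])
    return z

def grad_w(dDiv_dz, Y_prev, K):
    M = Y_prev.shape[0]
    N, H_out, W_out = dDiv_dz.shape
    dDiv_dw = np.zeros((M, N, K, K))
    for m in range(M):
        for n in range(N):
            for x in range(K):
                for y in range(K):
                    # Sum over all (x', y') of dDiv/dz(l,n,x',y') * Y(l-1,m,x'+x,y'+y).
                    window = Y_prev[m, x:x + H_out, y:y + W_out]
                    dDiv_dw[m, n, x, y] = np.sum(dDiv_dz[n] * window)
    return dDiv_dw

rng = np.random.default_rng(1)
Y_prev = rng.standard_normal((2, 5, 5))
w = rng.standard_normal((2, 3, 3, 3))
G = rng.standard_normal((3, 3, 3))      # plays the role of dDiv/dz

dDiv_dw = grad_w(G, Y_prev, K=3)

eps = 1e-6
w_pert = w.copy()
w_pert[1, 2, 0, 1] += eps
numeric = (np.sum(G * conv_forward(Y_prev, w_pert)) - np.sum(G * conv_forward(Y_prev, w))) / eps
analytic = dDiv_dw[1, 2, 0, 1]
print(abs(numeric - analytic) < 1e-5)
```

Note that only map `n` of the derivative appears in the entry for `w[m, n, x, y]`, matching the observation that a weight affects a single $Z$ map.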

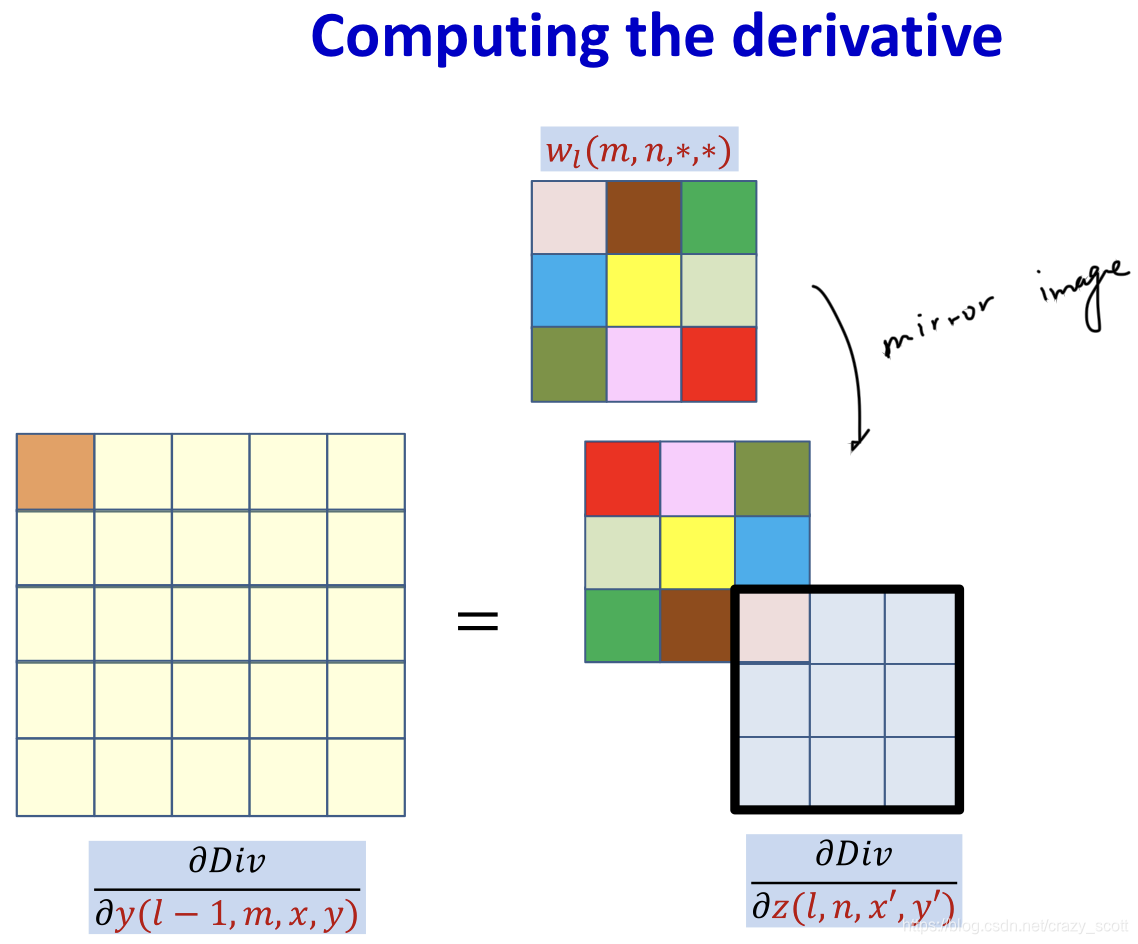

Summary

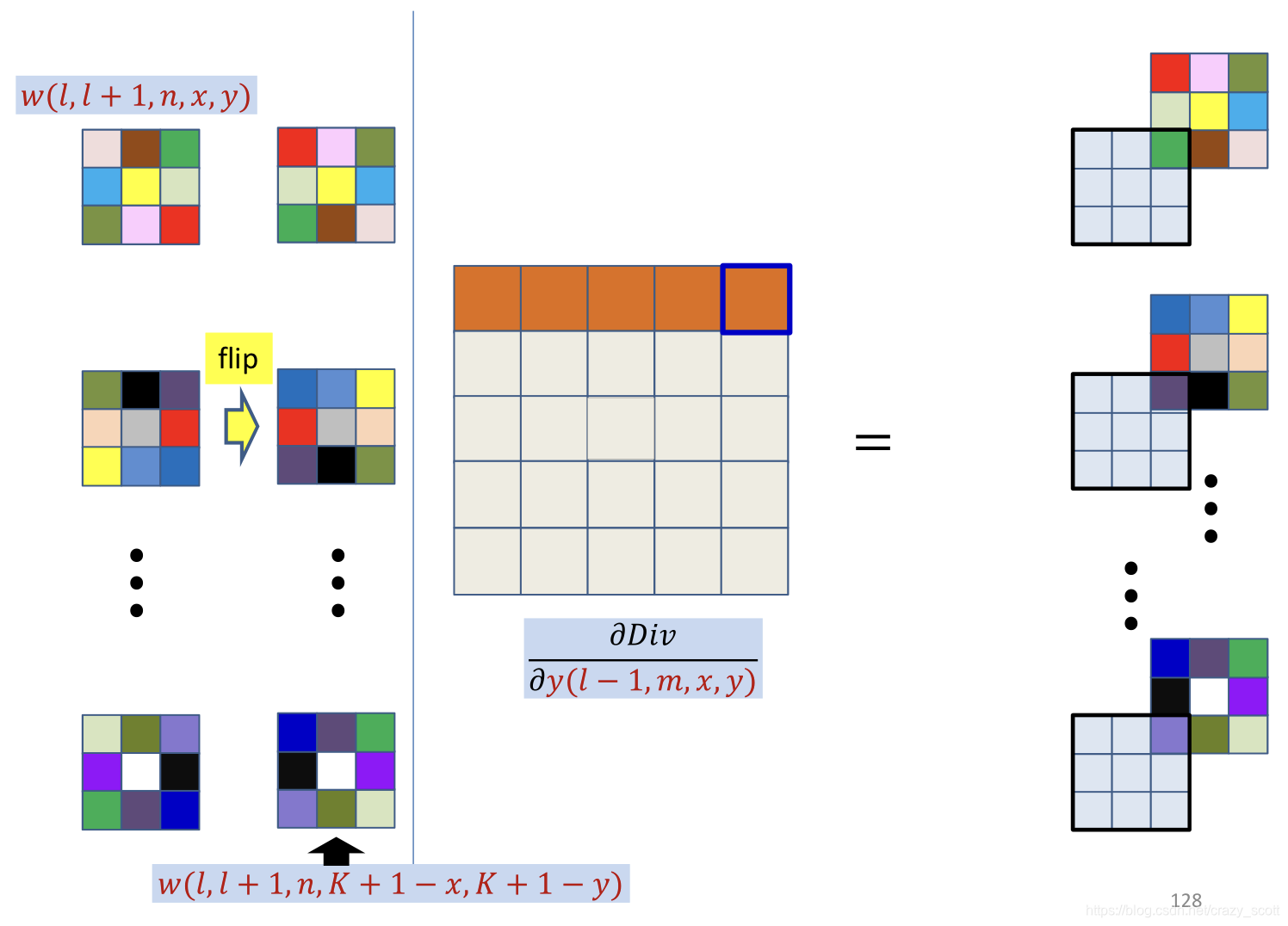

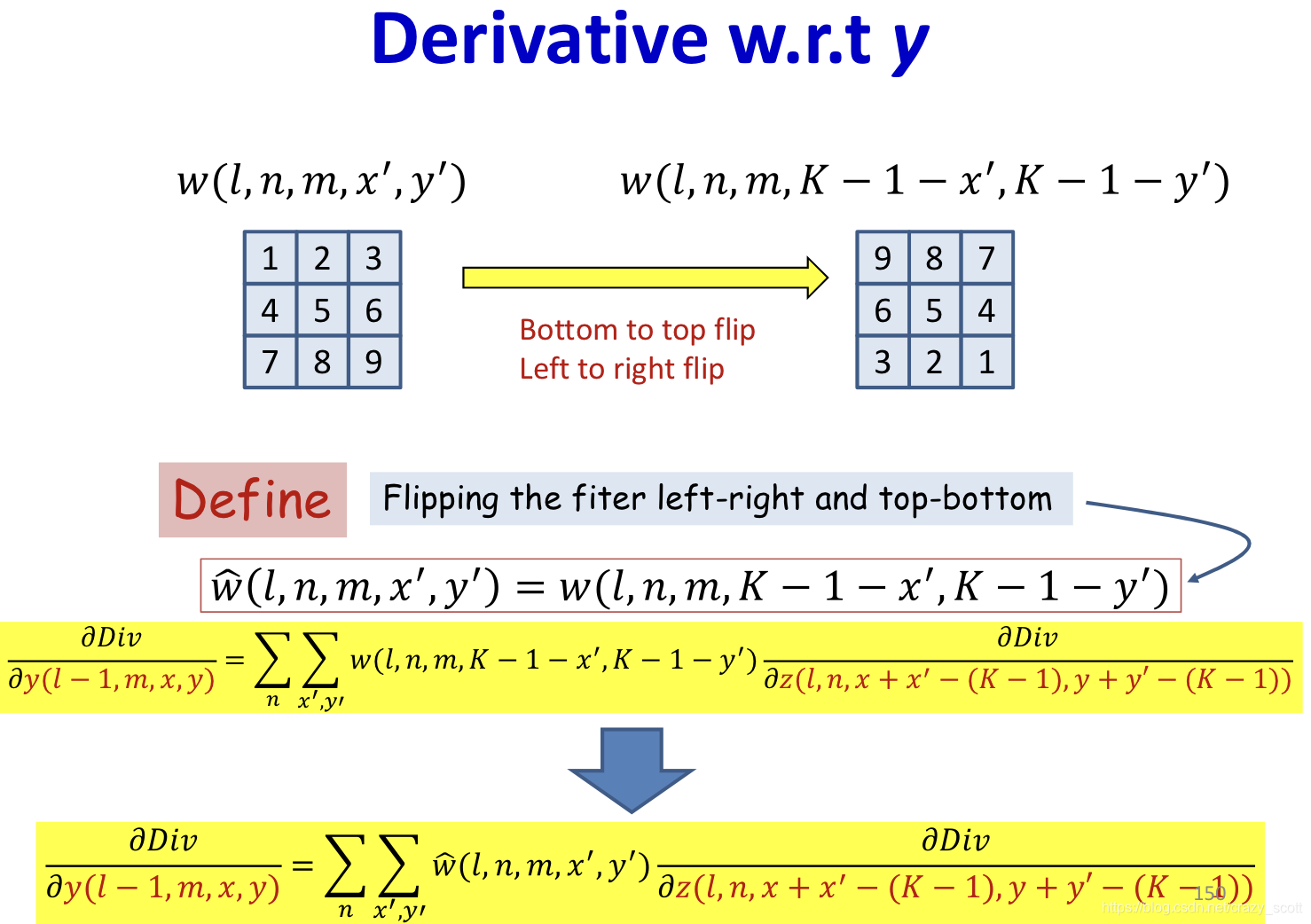

In practice

$$\frac{dDiv}{dY(l-1,m,x,y)} = \sum_{n} \sum_{x',y'} \frac{dDiv}{dz(l,n,x',y')} w_{l}(m,n,x-x',y-y')$$

- This is a convolution, but with the filter indices in a different order

- Use the mirror image of the filter (flipped up-down and left-right) to turn it into a normal convolution

- In practice, the derivative at each $(x,y)$ location is obtained from all $Z$ maps

- This is just a convolution of $\frac{\partial Div}{\partial z(l,n,x,y)}$ by the inverted filter

- After first zero-padding it with $K-1$ zeros on every side

- Note: the $x', y'$ refer to the location in the filter

- Shifting down and right by $K-1$, such that $0,0$ becomes $K-1,K-1$

$$z_{shift}(l,n,x,y) = z(l,n,x-K+1,y-K+1)$$

$$\frac{\partial Div}{\partial Y(l-1,m,x,y)} = \sum_{n} \sum_{x',y'} \widehat{w}(l,n,m,x',y') \frac{\partial Div}{\partial z_{shift}(l,n,x+x',y+y')}$$

- This is a regular convolution run on the shifted derivative maps using the flipped filter
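The equivalence can be checked numerically. The sketch below computes the input derivative twice: once by spreading each $dDiv/dz$ entry back over its patch, and once by zero-padding the derivative maps with $K-1$ zeros, flipping the filter, and running an ordinary sliding-window sum. Stride 1, no padding in the forward pass, and the function names are assumptions for this example.

```python
import numpy as np

def grad_Y_scatter(dDiv_dz, w):
    # Direct form: sum_n sum_{x',y'} dDiv/dz(l,n,x',y') * w_l(m,n,x-x',y-y')
    M, N, K, _ = w.shape
    _, H_out, W_out = dDiv_dz.shape
    dDiv_dY = np.zeros((M, H_out + K - 1, W_out + K - 1))
    for n in range(N):
        for xp in range(H_out):
            for yp in range(W_out):
                dDiv_dY[:, xp:xp + K, yp:yp + K] += dDiv_dz[n, xp, yp] * w[:, n]
    return dDiv_dY

def grad_Y_flipped(dDiv_dz, w):
    M, N, K, _ = w.shape
    _, H_out, W_out = dDiv_dz.shape
    pad = K - 1
    # Zero-pad each derivative map with K-1 zeros on every side (the "shift").
    dz_shift = np.pad(dDiv_dz, ((0, 0), (pad, pad), (pad, pad)))
    # Flip the filter up-down and left-right.
    w_flip = w[:, :, ::-1, ::-1]
    H, W = H_out + K - 1, W_out + K - 1
    dDiv_dY = np.zeros((M, H, W))
    for m in range(M):
        for x in range(H):
            for y in range(W):
                # Ordinary sliding-window sum over all N derivative maps.
                dDiv_dY[m, x, y] = np.sum(w_flip[m] * dz_shift[:, x:x + K, y:y + K])
    return dDiv_dY

rng = np.random.default_rng(2)
w = rng.standard_normal((2, 3, 3, 3))        # (M, N, K, K)
dDiv_dz = rng.standard_normal((3, 4, 4))     # (N, H_out, W_out)

direct = grad_Y_scatter(dDiv_dz, w)
flipped = grad_Y_flipped(dDiv_dz, w)
print(np.allclose(direct, flipped))
```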

Pooling

- Pooling is typically performed with strides > 1

- Results in shrinking of the map

- Downsampling

Derivative of Max pooling

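- Max pooling selects the largest from a pool of elements

- The derivative of the divergence w.r.t. a pool output is passed back unchanged to the input position that held the max; every other position in the pool receives zero (this is why the location of the max is recorded in the forward pass)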
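A minimal sketch of max pooling with its derivative, for a single map. It assumes non-overlapping $K \times K$ pools (stride equal to the pool size) and hypothetical names `maxpool_forward`, `maxpool_backward`, `U` (pooled output) and `P` (recorded max locations).

```python
import numpy as np

def maxpool_forward(Y, K):
    """Non-overlapping K x K max pooling on one map; also records where each max was."""
    H, W = Y.shape
    U = np.zeros((H // K, W // K))
    P = np.zeros((H // K, W // K, 2), dtype=int)
    for i in range(H // K):
        for j in range(W // K):
            window = Y[i * K:(i + 1) * K, j * K:(j + 1) * K]
            a, b = np.unravel_index(np.argmax(window), window.shape)
            U[i, j] = window[a, b]
            P[i, j] = (i * K + a, j * K + b)
    return U, P

def maxpool_backward(dDiv_dU, P, in_shape):
    # Only the position that held the max receives the derivative; the rest stay 0.
    dDiv_dY = np.zeros(in_shape)
    for i in range(dDiv_dU.shape[0]):
        for j in range(dDiv_dU.shape[1]):
            x, y = P[i, j]
            dDiv_dY[x, y] += dDiv_dU[i, j]
    return dDiv_dY

Y = np.array([[1., 5., 2., 0.],
              [3., 4., 7., 1.],
              [0., 2., 1., 1.],
              [9., 6., 3., 8.]])
U, P = maxpool_forward(Y, 2)
dDiv_dY = maxpool_backward(np.ones_like(U), P, Y.shape)
print(U)        # [[5. 7.] [9. 8.]]
print(dDiv_dY)  # ones at (0,1), (1,2), (3,0), (3,3), zeros elsewhere
```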

Derivative of Mean pooling

- The derivative of mean pooling is distributed over the pool

$$dy(l,m,k,n) = \frac{1}{K_{lpool}^{2}} du(l,m,k,n)$$
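For comparison with max pooling, here is a short sketch of mean pooling and its derivative on a single map. It assumes non-overlapping $K \times K$ pools and map sizes divisible by $K$; the function names are hypothetical.

```python
import numpy as np

def meanpool_forward(Y, K):
    # Group the map into K x K blocks and average each block.
    H, W = Y.shape
    return Y.reshape(H // K, K, W // K, K).mean(axis=(1, 3))

def meanpool_backward(dDiv_dU, K):
    # Each of the K*K inputs in a pool receives 1/K^2 of the output derivative.
    return np.kron(dDiv_dU, np.ones((K, K))) / (K * K)

Y = np.arange(16, dtype=float).reshape(4, 4)
U = meanpool_forward(Y, 2)
dDiv_dU = np.array([[4.0, 8.0], [12.0, 16.0]])
dDiv_dY = meanpool_backward(dDiv_dU, 2)
print(U)        # [[ 2.5  4.5] [10.5 12.5]]
print(dDiv_dY)  # 2 x 2 blocks filled with 1, 2, 3 and 4
```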

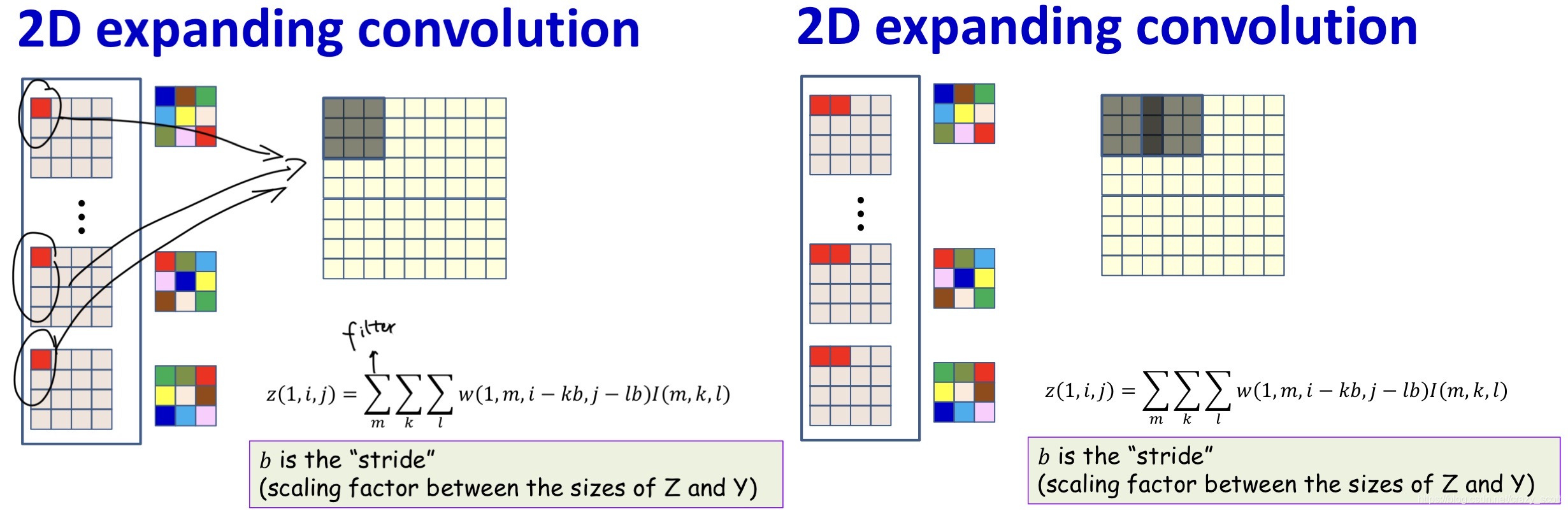

Transposed Convolution

- We’ve always assumed that subsequent steps shrink the size of the maps

- Can subsequent maps increase in size?

- Output size is typically an integer multiple of input

- +1 if filter width is odd
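One common way to write a transposed convolution is as a scatter-add: every input value stamps a scaled copy of the filter onto the output, with copies placed `stride` positions apart. The sketch below assumes this no-padding convention, under which the output size is `(H - 1) * stride + K`; the function name is hypothetical.

```python
import numpy as np

def transposed_conv2d(X, w, stride):
    """Upsample one map X with a K x K filter w by scatter-adding filter copies."""
    H, W = X.shape
    K = w.shape[0]
    out = np.zeros(((H - 1) * stride + K, (W - 1) * stride + K))
    for i in range(H):
        for j in range(W):
            out[i * stride:i * stride + K, j * stride:j * stride + K] += X[i, j] * w
    return out

X = np.ones((3, 3))
up_even = transposed_conv2d(X, np.ones((2, 2)), stride=2)
up_odd = transposed_conv2d(X, np.ones((3, 3)), stride=2)
print(up_even.shape, up_odd.shape)  # (6, 6) (7, 7)
```

With stride 2, a width-2 filter gives exactly twice the input size, while the odd width 3 gives twice the input size plus one, which is consistent with the remark above under the assumed convention.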

Model variations

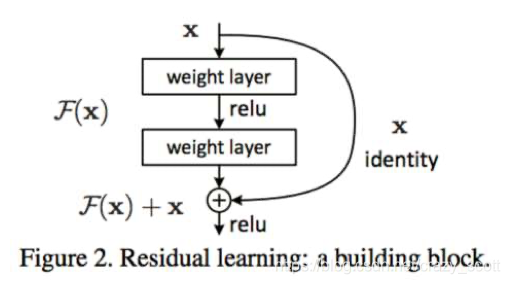

- Very deep networks

- 100 or more layers in MLP

- Formalism called “Resnet”

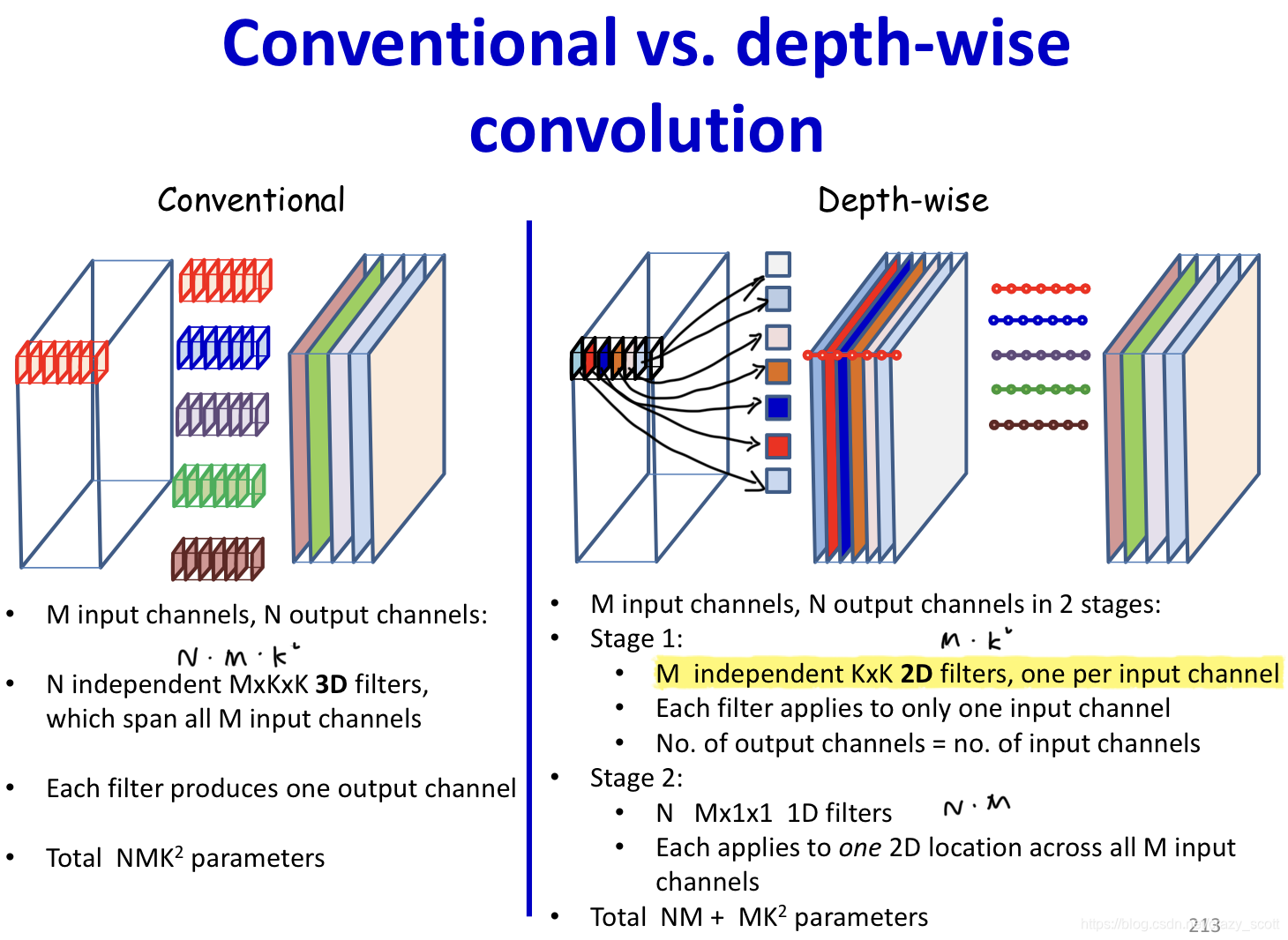

- Depth-wise convolutions

- Instead of multiple independent filters with independent parameters, use common layer-wise weights and combine the layers differently for each filter

Depth-wise convolutions

- In depth-wise convolution the convolution step is performed only once

- The simple summation is replaced by a weighted sum across channels

- Different weights (for summation) produce different output channels
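Read literally, the three points above describe what is often called a depthwise separable convolution: filter each input channel once, then form every output channel as a different weighted sum of the filtered channels. The sketch below follows that reading; the names `w_depth` (one $K \times K$ filter per input channel) and `w_point` (the summation weights) are assumptions for this example.

```python
import numpy as np

def depthwise_separable(Y_prev, w_depth, w_point):
    """Y_prev: (M, H, W); w_depth: (M, K, K); w_point: (N, M). Returns (N, H-K+1, W-K+1)."""
    M, H, W = Y_prev.shape
    K = w_depth.shape[1]
    # Step 1: the convolution is performed only once per input channel.
    per_channel = np.zeros((M, H - K + 1, W - K + 1))
    for m in range(M):
        for x in range(H - K + 1):
            for y in range(W - K + 1):
                per_channel[m, x, y] = np.sum(w_depth[m] * Y_prev[m, x:x + K, y:y + K])
    # Step 2: each output channel is its own weighted sum across the M filtered channels.
    return np.tensordot(w_point, per_channel, axes=([1], [0]))

M, N, K = 3, 4, 3
rng = np.random.default_rng(3)
Y_prev = rng.standard_normal((M, 8, 8))
w_depth = rng.standard_normal((M, K, K))
w_point = rng.standard_normal((N, M))
out = depthwise_separable(Y_prev, w_depth, w_point)

standard_params = M * N * K * K          # independent filter for every (m, n) pair
separable_params = M * K * K + N * M     # shared filters plus summation weights
print(out.shape, standard_params, separable_params)  # (4, 6, 6) 108 39
```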

Models

-

For CIFAR 10

- LeNet-5

-

For ILSVRC (ImageNet Large Scale Visual Recognition Challenge)

- AlexNet

- NN contains 60 million parameters and 650,000 neurons

- 5 convolutional layers, some of which are followed by max-pooling layers

- 3 fully-connected layers

- VGGNet

- Only used 3x3 filters, stride 1, pad 1

- Only used 2x2 pooling filters, stride 2

- ~140 million parameters in all

- GoogLeNet

- Multiple filter sizes simultaneously

-

For ImageNet

- Resnet

- Last layer before addition must have the same number of filters as the input to the module

- Batch normalization after each convolution

- Densenet

- All convolutional

- Each layer looks at the union of maps from all previous layers

- Instead of just the set of maps from the immediately previous layer

ConvNetJS CIFAR-10 demo: https://cs.stanford.edu/people/karpathy/convnetjs/demo/cifar10.html
