(如果是已经看过前面文章的朋友可以直接跳过前段部分即可)

Hello,我是小S,前两篇文章讲了“力大砖飞”的全参微调,那么这篇封笔之作,自然会讲到性价比超高,并且十分优雅的LoRA微调了!

看过前两篇的朋友都知道,我一向是对全参微调十分慷慨,每次都给他上6张4090……虽然用着十分爽,可是就和开油车一样,虽然心灵是满足的,但是钱包是在哭泣的。

虽然全参微调可以让胡言乱语的Base模型开始说人话,也可以让Instruct模型更出色。

但是人有“三高”,大模型也有“三高”:高成本、长时间、大存储。属于是赛博三高了,那么赛博三高就有请赛博医生来治——LoRA微调登场!

LoRA的全称是Low-Rank Adaptation,一看名字就感觉性价比超高的,很“Low”

所以今天,我要做出一个违背祖宗的决定,只用1张4090,搞定LoRA微调!(也是开上了高性价比的电车了)

那么事不宜迟,开始今天的LoRA微调之旅,当最后对比的时候,我会综合对比一下训练出来的结果,并且分享一些我自己的理解给大家。

准备工作

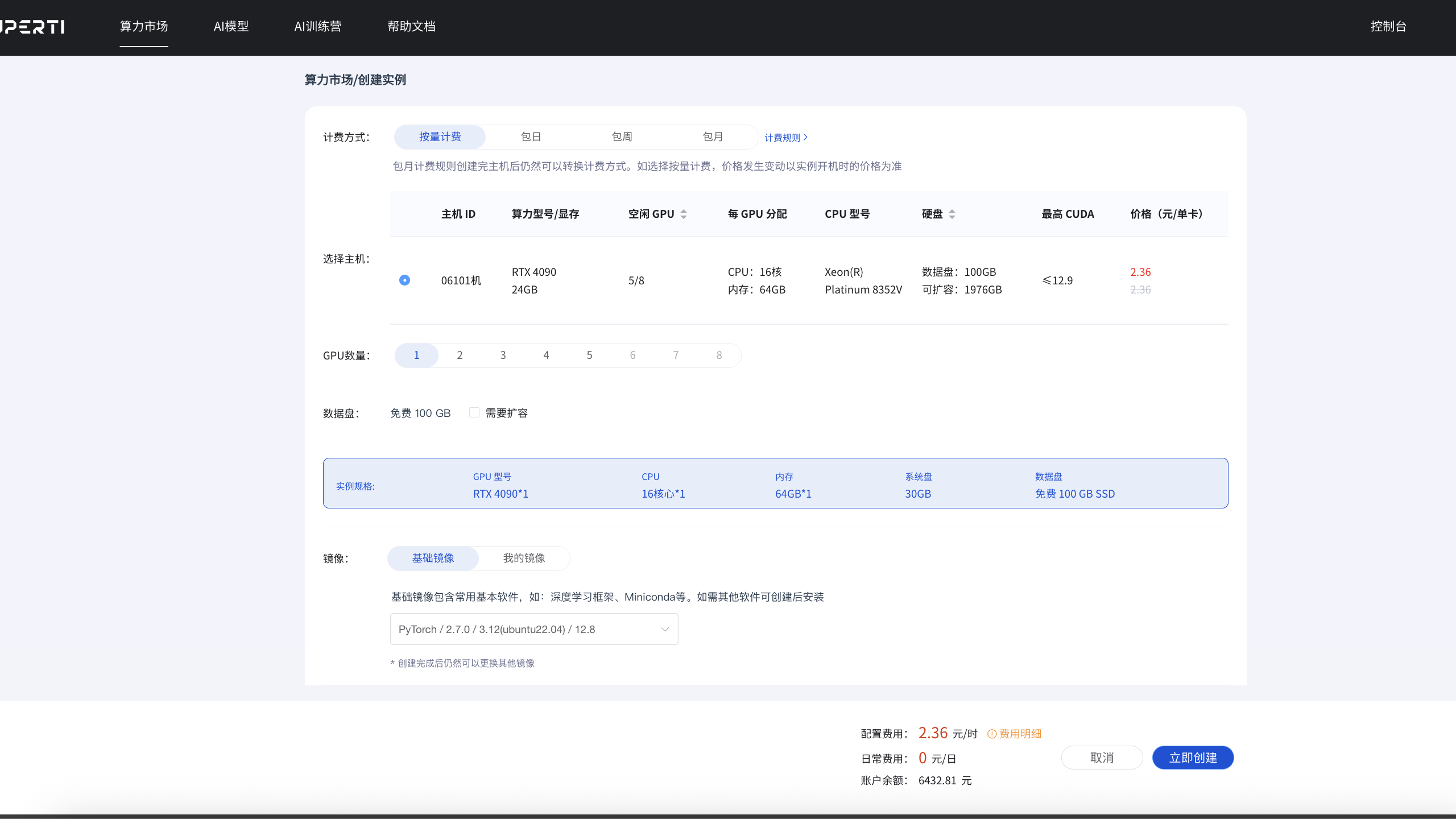

开始当然还是到我们公司的云平台中创建实例咯,和以前不一样的就是,这次我们只需要1张4090!这就是属于LoRA微调的怜悯(对钱包的怜悯)。

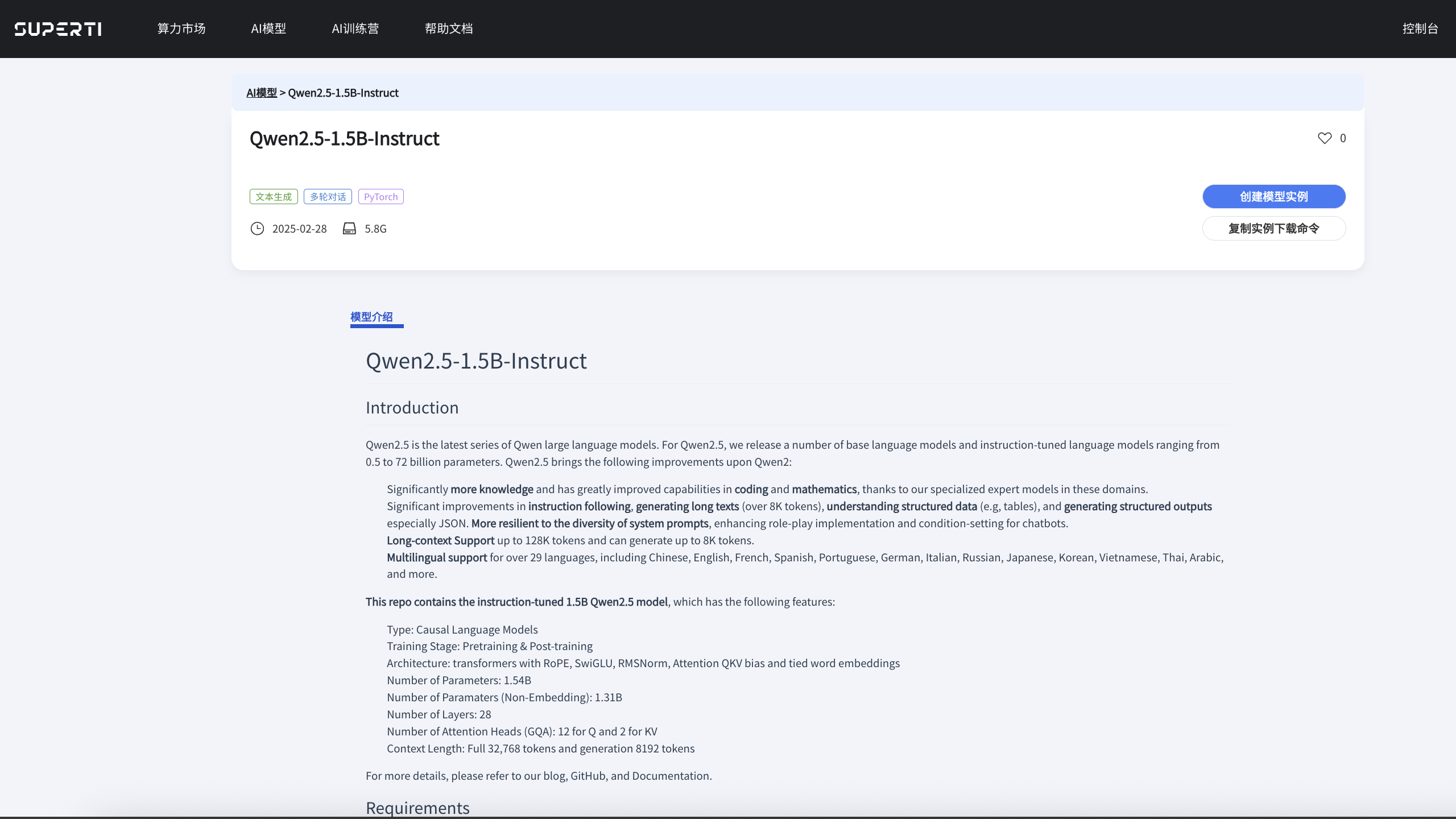

在AI模型列表中找到我们需要的那一位,也是上一期的主角,Qwen2.5-1.5B Instruct版本,正好可以对比一下相同模型使用不同的训练方法的差异。

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

2万+

2万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?