In this part, I am going to talk about how to minimize the cost function.

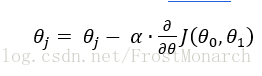

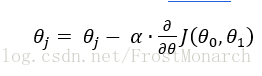

In Andrew's class, he talked about a method called gradient descent.
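For reference, this is the gradient descent update rule as it is usually written in the course. It is restated here from the standard formulation rather than recovered from the images above. Each step moves every parameter against the slope of the cost function J(θ0, θ1), scaled by the learning rate α, and both parameters are updated simultaneously:

```latex
\text{repeat until convergence:} \quad
\theta_j := \theta_j - \alpha \, \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)
\qquad (\text{simultaneously for } j = 0 \text{ and } j = 1)
```

For example, with α = 0.1 and a slope ∂J/∂θ1 = 4 at the current point, θ1 decreases by 0.4 in that step.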

$J(\theta_0, \theta_1)$ is the cost function. Of course, there can be more variables than just $\theta_0$ and $\theta_1$.
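A minimal sketch of such a cost function, assuming the squared-error cost used for linear regression in the course: J is (1/(2m)) times the sum of the squared errors (h(x) - y)^2 over the m training examples. To reflect the remark about more variables, the hypothetical `cost` function below accepts any number of parameters: `theta[0]` is the intercept and each remaining `theta[j]` multiplies feature j. The toy data is made up for illustration.

```python
def cost(theta, xs, ys):
    """Squared-error cost J(theta) for h(x) = theta[0] + theta[1]*x[0] + ... + theta[n]*x[n-1].

    Assumes the linear-regression cost J = 1/(2m) * sum((h(x_i) - y_i)^2).
    """
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        # Intercept plus one weighted term per feature
        prediction = theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))
        total += (prediction - y) ** 2
    return total / (2 * m)


# One feature (two parameters, theta0 and theta1); the data lies on y = 1 + 2x
xs = [[0.0], [1.0], [2.0], [3.0]]
ys = [1.0, 3.0, 5.0, 7.0]
print(cost([1.0, 2.0], xs, ys))  # 0.0: the line fits every point
print(cost([0.0, 0.0], xs, ys))  # 10.5

# Two features (three parameters) work the same way
xs2 = [[1.0, 2.0], [2.0, 0.0]]
ys2 = [3.0, 4.0]
print(cost([0.0, 1.0, 1.0], xs2, ys2))  # 1.0
```

Gradient descent searches for the parameter values that make this number as small as possible.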


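This post discusses how to use gradient descent to minimize the cost function: by adjusting the parameters θ0 and θ1 and choosing a suitable learning rate α, the cost function can be made to converge.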
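Choosing the learning rate α is what decides whether the cost actually converges. Below is a minimal sketch of batch gradient descent for the two-parameter case, assuming the squared-error linear-regression cost from the course. The function names, the starting point (0, 0), the toy data and the two α values are illustrative choices, not taken from this post.

```python
def cost(theta0, theta1, xs, ys):
    """Squared-error cost J(theta0, theta1) for h(x) = theta0 + theta1 * x."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha, iterations):
    """Batch gradient descent on J(theta0, theta1), starting from (0, 0).

    alpha is the learning rate.
    """
    theta0, theta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(iterations):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m                             # dJ/dtheta0
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m  # dJ/dtheta1
        # Simultaneous update: both gradients were computed from the old values
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


# Toy data lying exactly on y = 1 + 2x (made up for illustration)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

for alpha in (0.1, 0.5):
    t0, t1 = gradient_descent(xs, ys, alpha, iterations=1000)
    print(f"alpha={alpha}: theta0={t0:.4g}, theta1={t1:.4g}, J={cost(t0, t1, xs, ys):.4g}")
```

With α = 0.1 the parameters settle at θ0 ≈ 1 and θ1 ≈ 2, the line the data was generated from, and J approaches 0. With α = 0.5 each step overshoots the minimum by more than it corrects, so the parameters oscillate with growing magnitude and J increases instead of converging. The largest usable α depends on the data; for this toy set it lies somewhere between 0.1 and 0.5.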
