量化思路介绍:

EEMD分解 + LSTM预测

- 将股票数据的开盘价与收盘价分别进行EEMD分解, 每组数据分解为6个IMFs, 6个IMFs中由2个残差项, 2个周期项和2个趋势项组成, 共12个IMFs

- 模型输入数据为滞后7天的开盘价与收盘价分解后的两个IMFs, 输出数据为下一天的收盘价, 总共构建6个LSTM模型

- LSTM模型使用较为简单的双层lstm, 每层50个神经元, Adam优化器, 共100个神经元

- 得到6个IMFs的预测结果, 求和便是预测的收盘价结果

数据方面: 分为训练集, 验证集与测试集

测试集为题目指定的2022年至2024年4月30日数据, 即后562条数据

验证集为后762至562条数据, 主要目的是控制模型早停, 避免过拟合

测试集为前2717条数据, 即去掉验证集与测试集的数据

主要原理:

- EEMD分解可以帮助提取时间序列数据中不同尺度的特征信息,将原始数据分解成多个固有模态函数(IMFs),这些IMFs反映了不同尺度上的波动和趋势, 使得模型更好地捕捉到时间序列数据的内在规律和周期性

- LSTM神经网络能够解决传统RNN存在的梯度消失或梯度爆炸等问题,同时能够更好地捕捉长期依赖关系, 能够提高模型的预测性能和泛化能力,从而更准确地预测股票价格序列的走势

- 考虑了使用区间型股票价格数据以及区间型数据的相互作用对预测精度的提高, 利用开盘价与收盘价反映波动,有效捕捉了单日股价的真实波动。

一. 下载导入必要库

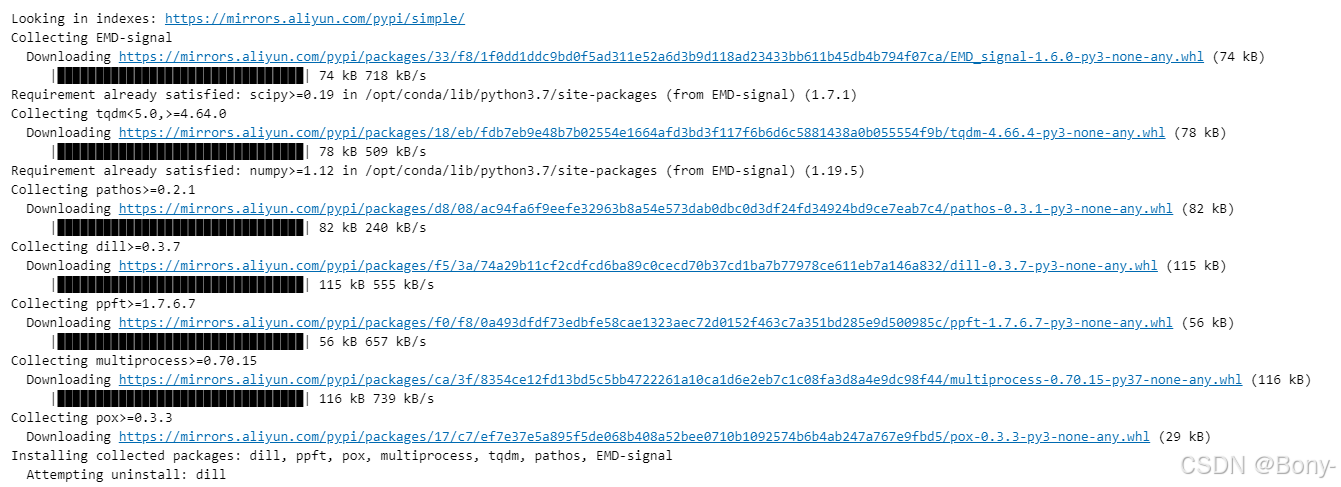

安装EEMD分解的python库--EMD-signal

! pip install -i https://mirrors.aliyun.com/pypi/simple/ EMD-signal

#导入各种库

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from pylab import mpl

from PyEMD import EEMD

import datetime

二. 数据读取与预处理

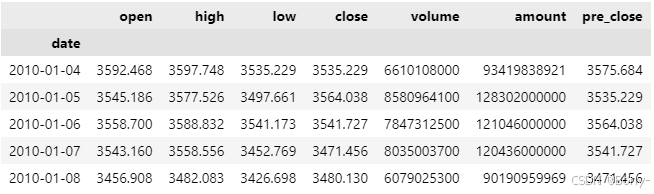

1.数据读取

df = pd.read_csv("/home/mw/input/stock9243/data_00300.csv")

2.数据类型转换

df['date'] = pd.to_datetime(df['date'],format='%Y-%m-%d %H:%M:%S%z')

df['date'] = df['date'].dt.date

df = df.set_index('date')

df.info()

df.head()

三. EEMD分解

# 初始化 EEMD 对象

eemd = EEMD()

# 执行 EEMD 分解

eIMFs_open = eemd.eemd(df['open'].values, max_imf=5)

# 绘制原始信号和分解后的各个 IMF

plt.figure(figsize=(12, 9))

plt.subplot(len(eIMFs_open) + 1, 1, 1)

plt.plot(range(0,len(df['open'])), df['open'].values, 'r')

for i, eIMF in enumerate(eIMFs_open):

plt.subplot(len(eIMFs_open) + 1, 1, i + 2)

plt.plot(range(0,len(df['open'])), eIMF, 'g')

plt.tight_layout()

plt.show()

# 初始化 EEMD 对象

eemd = EEMD()

# 执行 EEMD 分解

eIMFs_close = eemd.eemd(df['close'].values, max_imf=5)

# 绘制原始信号和分解后的各个 IMF

plt.figure(figsize=(12, 9))

plt.subplot(len(eIMFs_open) + 1, 1, 1)

plt.plot(range(0,len(df['close'])), df['close'].values, 'r')

for i, eIMF in enumerate EEMD-LSTM模型用于股票价格预测

EEMD-LSTM模型用于股票价格预测

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

583

583

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?