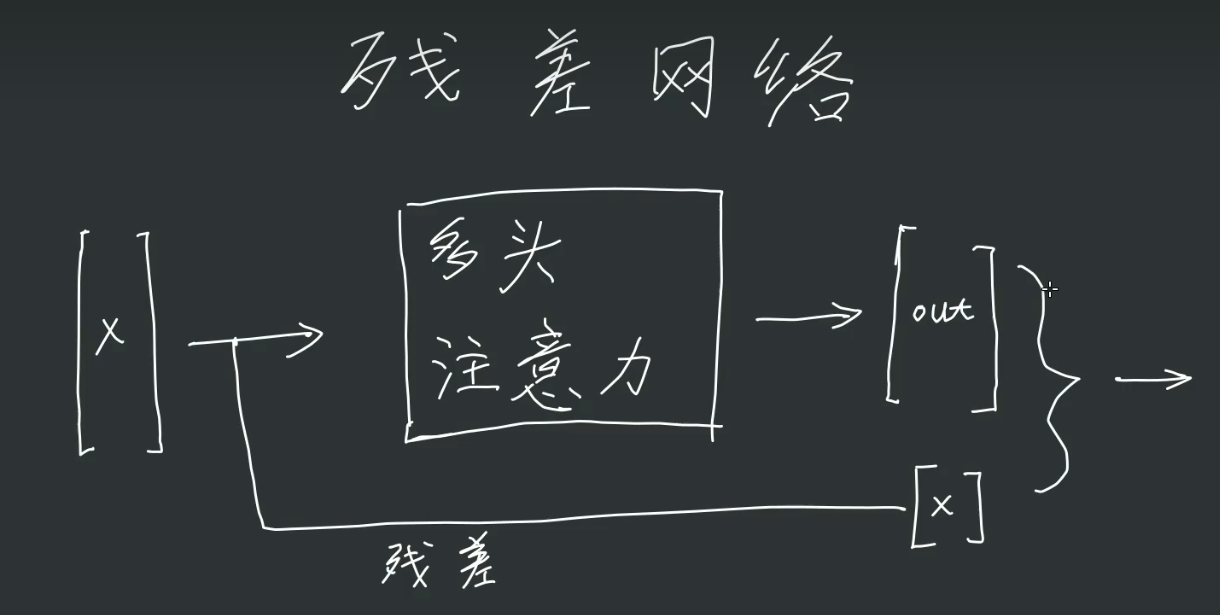

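Adding residual connections

A residual connection can be added almost anywhere.

The point of a residual connection is to preserve the gradient. In a conventional deep network the gradient can vanish, i.e. f'(x) ≈ 0. With a residual connection the output becomes f(x) + x instead of f(x), so its gradient becomes 1 + f'(x). Even when f'(x) ≈ 0, the identity term 1 keeps the gradient from vanishing.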
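To make the chain-rule argument concrete, here is a minimal pure-Python sketch (no PyTorch) that multiplies local derivatives through a stack of layers. It assumes, purely for illustration, that every layer has the same local derivative `d`; the function names are hypothetical.

```python
# Pure-Python illustration of the chain rule through n stacked layers.
# Assumption (for illustration only): every layer has the same local derivative d.

def plain_gradient(d, n_layers):
    """d(output)/d(input) for y = f(f(...f(x))): the product of n local derivatives."""
    grad = 1.0
    for _ in range(n_layers):
        grad *= d
    return grad

def residual_gradient(d, n_layers):
    """Same stack, but each layer is y = x + f(x), so each contributes a factor (1 + d)."""
    grad = 1.0
    for _ in range(n_layers):
        grad *= 1.0 + d
    return grad

if __name__ == "__main__":
    d = 0.01  # f'(x) close to 0
    for n in (1, 6, 24):
        print(n, plain_gradient(d, n), residual_gradient(d, n))
```

With `d = 0.01` the plain stack's gradient shrinks like 0.01ⁿ, while the residual stack's gradient never drops below 1. Note that (1 + d)ⁿ can also grow when d is large, which leads to the next question.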

But what about exploding gradients?

I asked DeepSeek, and it suggested three remedies.

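First, regularization, which limits the size of the weights. Roughly speaking (not rigorously), for a three-layer linear network the output is y ≈ W3 · W2 · W1 · x, and with a squared-error loss the derivative of the loss with respect to y is (y − y0). The gradient with respect to W1 is therefore proportional to (y − y0) · W3 · W2 · x, that is, to the product of the later weights W3 · W2. Penalizing large weights (usually with L2 regularization) keeps this product in check and so mitigates gradient explosion.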
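A scalar sketch of this argument, assuming a toy "network" y = w3 · w2 · w1 · x with loss L = ½(y − y0)² plus an optional L2 penalty ½λ(w1² + w2² + w3²). All names are hypothetical; a finite-difference check confirms the analytic gradient.

```python
# Scalar toy "network" y = w3 * w2 * w1 * x with loss L = 0.5 * (y - y0)**2
# plus an optional L2 penalty 0.5 * l2 * (w1**2 + w2**2 + w3**2). Names are hypothetical.

def loss(w1, w2, w3, x, y0, l2=0.0):
    y = w3 * w2 * w1 * x
    return 0.5 * (y - y0) ** 2 + 0.5 * l2 * (w1 ** 2 + w2 ** 2 + w3 ** 2)

def grad_w1(w1, w2, w3, x, y0, l2=0.0):
    """Analytic dL/dw1 = (y - y0) * w3 * w2 * x, plus l2 * w1 from the penalty."""
    y = w3 * w2 * w1 * x
    return (y - y0) * w3 * w2 * x + l2 * w1

def numeric_grad_w1(w1, w2, w3, x, y0, l2=0.0, eps=1e-6):
    """Central finite difference, used only to check grad_w1."""
    return (loss(w1 + eps, w2, w3, x, y0, l2) - loss(w1 - eps, w2, w3, x, y0, l2)) / (2 * eps)

if __name__ == "__main__":
    print(grad_w1(1.0, 2.0, 3.0, 1.0, 0.0), numeric_grad_w1(1.0, 2.0, 3.0, 1.0, 0.0))
    print(grad_w1(1.0, 20.0, 30.0, 1.0, 0.0))  # larger later weights -> much larger gradient
```

Making w2 and w3 ten times larger makes `grad_w1` thousands of times larger, while the L2 term adds λ · w1 to the gradient so every update also pulls the weight toward zero. In PyTorch a similar effect is usually obtained through the optimizer's `weight_decay` argument (for `AdamW` this is decoupled weight decay rather than a literal L2 term in the loss).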

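Second, proper initialization. The same expression shows that the gradient is also proportional to the input x (and to the weights). If the activations are already large at the start of training, the gradients can explode from the very first step, so the initial weights should be scaled to keep each layer's output at a reasonable magnitude.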
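A minimal pure-Python sketch of why the scale of the initial weights matters. It assumes random linear layers with no bias and no activation (a simplification; with ReLU, Kaiming initialization uses a standard deviation of √(2/fan_in) instead). Drawing weights with standard deviation 1/√dim keeps the activation RMS near 1, whereas standard deviation 1 multiplies it by roughly √dim per layer. `forward_rms` is a hypothetical helper.

```python
import math
import random

def forward_rms(dim, n_layers, weight_std, seed=0):
    """Push a random vector through n_layers random linear layers (no bias, no
    activation) and return the RMS of the activations after each layer."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    rms_per_layer = []
    for _ in range(n_layers):
        w = [[rng.gauss(0.0, weight_std) for _ in range(dim)] for _ in range(dim)]
        x = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]  # x = W @ x
        rms_per_layer.append(math.sqrt(sum(v * v for v in x) / dim))
    return rms_per_layer

if __name__ == "__main__":
    print(forward_rms(128, 5, 1.0))                  # grows by about sqrt(128) per layer
    print(forward_rms(128, 5, 1 / math.sqrt(128)))   # stays close to 1
```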

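Third, normalization. If the output of every layer is normalized during the forward pass, the weights do not need to grow or shrink much: after normalization, W2 · (W1 · x) and W1 · x are on the same order of magnitude, so effectively |W2| ≈ 1 and the scale cannot compound from layer to layer.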
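A minimal pure-Python sketch of the layer normalization computation, written to mirror what `nn.LayerNorm` does by default (mean and biased variance over the last dimension, `eps=1e-5`, a learnable scale `gamma` initialized to 1 and shift `beta` initialized to 0). The property that matters for the argument above is that multiplying the input by a constant barely changes the output.

```python
import math

def layer_norm(v, gamma=None, beta=None, eps=1e-5):
    """Normalize one vector to zero mean and unit variance, then scale and shift."""
    n = len(v)
    gamma = gamma if gamma is not None else [1.0] * n  # like nn.LayerNorm's initial weight
    beta = beta if beta is not None else [0.0] * n     # like nn.LayerNorm's initial bias
    mean = sum(v) / n
    var = sum((a - mean) ** 2 for a in v) / n          # biased variance
    return [g * (a - mean) / math.sqrt(var + eps) + b for a, g, b in zip(v, gamma, beta)]

if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    print(layer_norm(x))
    print(layer_norm([1000 * a for a in x]))  # almost identical: the input scale is removed
```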

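Adding residual connections and layer normalization

I refactored the code a little: each multi-head attention module and the FeedForward layer that follows it are wrapped together into one Block. (In a Transformer these blocks are stacked one after another; for now we use only one.)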

```python
import torch.nn as nn

class FeedForward(nn.Module):
    def __init__(self, embedding_token_dim):
        super().__init__()
        # Position-wise MLP: expand to 4x the width, apply ReLU, project back
        self.net = nn.Sequential(
            nn.Linear(embedding_token_dim, embedding_token_dim * 4),
            nn.ReLU(),
            nn.Linear(embedding_token_dim * 4, embedding_token_dim),
            nn.Dropout(0.2),
        )

    def forward(self, x):
        return self.net(x)

class Block(nn.Module):
    def __init__(self, embedding_token_dim, num_heads):
        super().__init__()
        head_size = embedding_token_dim // num_heads
        self.sa = MultiheadAttention(num_heads, head_size)  # as defined in the full code below
        self.ff = FeedForward(embedding_token_dim)
        self.ln1 = nn.LayerNorm(embedding_token_dim)
        self.ln2 = nn.LayerNorm(embedding_token_dim)

    def forward(self, x):
        # Residual connections: normalize, apply the sublayer, then add the input back
        x = x + self.sa(self.ln1(x))
        x = x + self.ff(self.ln2(x))
        return x
```
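`Block.forward` applies LayerNorm before each sublayer and adds the untouched input back (often called pre-LN; the original Transformer paper instead normalized after the addition, called post-LN). A pure-Python sketch of both orderings, with a hypothetical all-zero sublayer standing in for attention or FeedForward, shows one property of the pre-LN form: when the sublayer contributes nothing, the block is exactly the identity, so the residual path passes x (and its gradient) through unchanged.

```python
import math

def layer_norm(v, eps=1e-5):
    """Normalize one vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]

def pre_ln_residual(x, sublayer):
    """Pre-LN, the ordering used in Block.forward: x + sublayer(LN(x))."""
    return [a + b for a, b in zip(x, sublayer(layer_norm(x)))]

def post_ln_residual(x, sublayer):
    """Post-LN, the ordering of the original Transformer paper: LN(x + sublayer(x))."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])

def zero_sublayer(v):
    """Hypothetical sublayer that has learned nothing yet: outputs all zeros."""
    return [0.0] * len(v)

if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    print(pre_ln_residual(x, zero_sublayer))   # identity: x is passed through as-is
    print(post_ln_residual(x, zero_sublayer))  # x is renormalized
```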

The model code has to be updated accordingly:

```python
import torch
import torch.nn as nn

class SimpleModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.token_embedding_table = nn.Embedding(size, embedding_token_dim)
        self.pos_embedding_table = nn.Embedding(sentence_len, embedding_token_dim)
        self.block = Block(embedding_token_dim, num_heads)
        self.ln_f = nn.LayerNorm(embedding_token_dim)  # final layer norm
        self.out = nn.Linear(embedding_token_dim, size)

    def forward(self, inputs, targets=None):
        # inputs is a 2-D matrix of integer token ids, not yet vectors
        B, T = inputs.shape
        token_emd = self.token_embedding_table(inputs)
        pos_emd = self.pos_embedding_table(torch.arange(T, device=inputs.device))
        x = token_emd + pos_emd  # (batch_size, sentence_len, embedding_token_dim)
        x = self.block(x)
        x = self.ln_f(x)         # one last LayerNorm after all the blocks
        logits = self.out(x)     # (batch_size, sentence_len, size): block output -> prediction logits
        # ... the loss computation and `return logits, loss` are unchanged (see the full code below)
```
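The first lines of `forward` are just two table lookups and an addition. A pure-Python sketch with tiny hypothetical tables (a vocabulary of 3 tokens, 2 positions, embedding dimension 2) shows what `token_emd + pos_emd` computes for one sequence: row `tok` of the token table plus row `t` of the position table, at every position t.

```python
def embed(token_ids, token_table, pos_table):
    """Return one vector per position: token_table[token_id] + pos_table[position]."""
    # Like nn.Embedding(sentence_len, ...), the position table has a fixed number of rows
    assert len(token_ids) <= len(pos_table), "sequence longer than the position table"
    return [
        [a + b for a, b in zip(token_table[tok], pos_table[t])]
        for t, tok in enumerate(token_ids)
    ]

token_table = [[0.1, 0.2], [1.0, 1.0], [5.0, -5.0]]  # hypothetical vocabulary of 3 tokens
pos_table = [[0.0, 0.5], [0.0, -0.5]]               # hypothetical context length of 2

if __name__ == "__main__":
    print(embed([2, 2], token_table, pos_table))  # [[5.0, -4.5], [5.0, -5.5]]
```

The same token at two positions ends up with two different vectors, which is how the model can tell word order apart. The fixed size of the position table is also why `generate` crops its input to the last `sentence_len` tokens.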

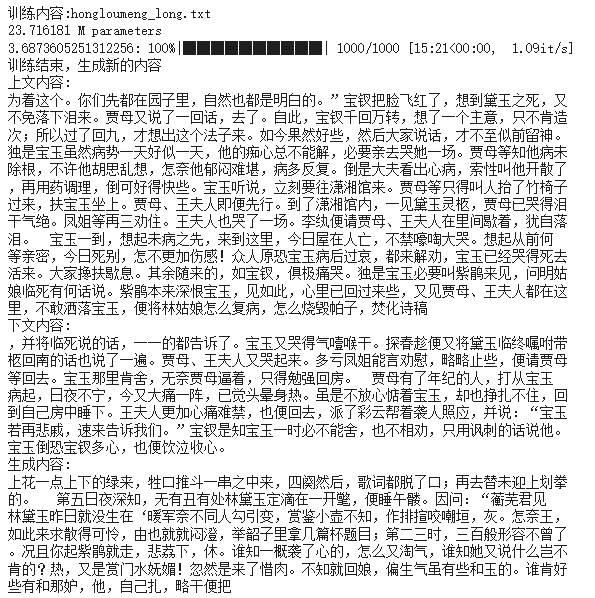

Is the generated text a bit better now? There are even some fairly coherent sentences. The model now has 7.98M parameters.

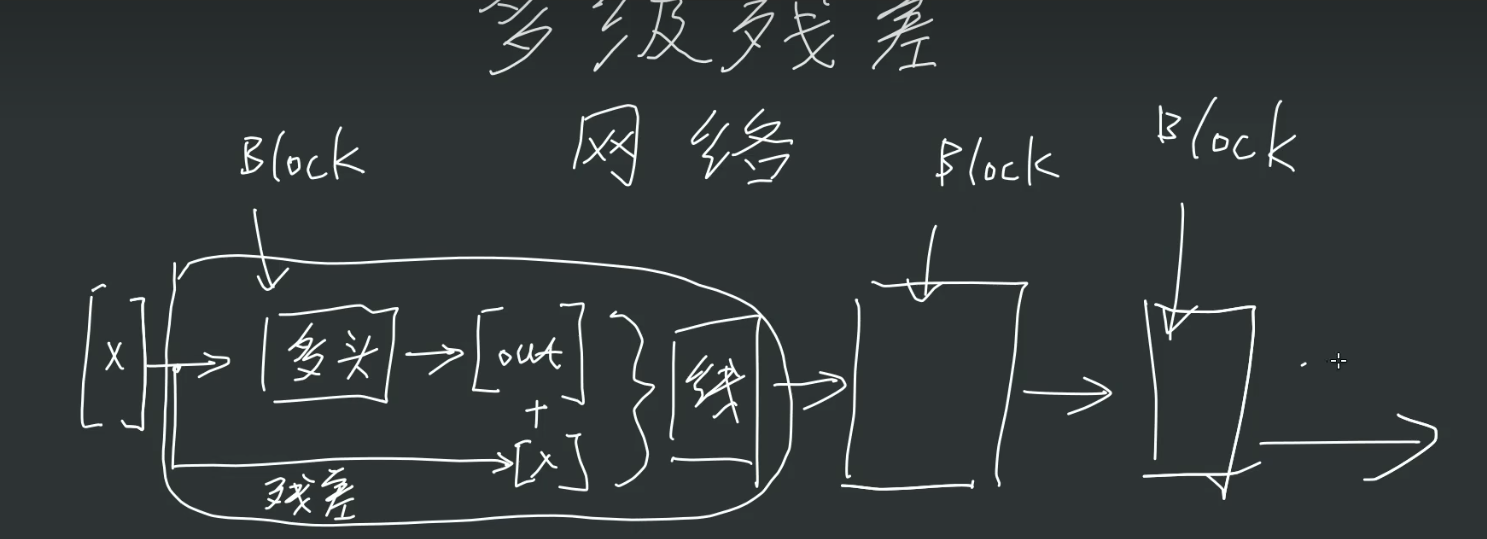

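Stacking multiple residual blocks (the final form of the Transformer)

This is what was described above: the multi-head attention and the linear layers after it are wrapped together as one block, and the block's input and output have the same shape. So to make the network more expressive, we simply stack the block again and again.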

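The implementation is simple; only two places need to change. In `__init__`, replace the single block with a stack of blocks:

```python
self.blocks = nn.Sequential(*[Block(embedding_token_dim, num_heads) for _ in range(n_blocks)])
```

`n_blocks` is up to you; here I use 6 blocks. In `forward`, call the stack instead:

```python
x = self.blocks(x)
```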

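Let's run it again.

Is the generated text better? Not noticeably, but it is certainly not worse. If you just skim it without reading closely, it looks much like the real novel.

With 6 blocks the model has 23M parameters, and with 1 block it has 8M. So, apart from the parts that appear only once (the embeddings and the final prediction layer), each block uses about (23M − 8M) / 5 ≈ 3M parameters.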

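Comparing parameter counts with GPT-3

GPT-3 has 175B parameters. It differs from our model in the following ways:

GPT-3's vocabulary has about 50,000 tokens; ours has 4,437.

GPT-3's embedding_token_dim is about 12,000, with 96 attention heads of 128 dimensions each.

Our embedding_token_dim is 512, with 8 heads of 64 dimensions each.

GPT-3 has n_blocks = 96, i.e. 96 blocks in total; we have only 6.

The linear layer after the multi-head attention has embedding_token_dim × 4 units in both GPT-3 and our model.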

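Our positional embeddings are learned. (The original Transformer used fixed sinusoidal encodings; GPT-3 follows GPT-2 and also learns its position embeddings, but in both models they are a small share of the total.)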

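Leaving out the embeddings (including the learned positional ones) and the final layer, and ignoring biases and LayerNorm weights, the number of parameters in one block is, with d = embedding_token_dim and h = n_heads:

(3 × d × (d/h) + (d/h) × d) × h + ((d/h) × h × d × 4) × 2 = 4d² + 8d² = 12d²

The first term covers the query, key and value projections plus the output projection (4d²); the second covers the two layers of the FeedForward network (8d²). For d = 512 this gives 12 × 512² = 3,145,728 ≈ 3.15M,

which closely matches the roughly 3M per block observed in the actual run.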
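To check the numbers more precisely, here is a small pure-Python sketch that counts parameters layer by layer for the architecture in this post, including the biases and LayerNorm weights that the 12d² estimate ignores. It assumes the vocabulary size of 4,437 quoted above and the settings from the final code (d = 512, 8 heads, context length 512); `block_params` and `model_params` are hypothetical helpers.

```python
def block_params(d, n_heads, ff_mult=4):
    """Parameters of one Block as written in this post."""
    head_size = d // n_heads
    qkv = 3 * d * head_size * n_heads                # key/query/value Linear layers, bias=False
    proj = head_size * n_heads * d + d               # MultiheadAttention.proj: weight + bias
    ff = (d * ff_mult * d + ff_mult * d) + (ff_mult * d * d + d)  # two Linear layers with bias
    ln = 2 * 2 * d                                   # ln1 and ln2: weight + bias each
    return qkv + proj + ff + ln

def model_params(vocab_size, d, n_heads, context_len, n_blocks):
    """Whole SimpleModel: embeddings + blocks + final LayerNorm + output layer."""
    embeddings = vocab_size * d + context_len * d    # token table + position table
    final = 2 * d + (d * vocab_size + vocab_size)    # ln_f + out Linear (weight + bias)
    return embeddings + n_blocks * block_params(d, n_heads) + final

if __name__ == "__main__":
    print(block_params(512, 8))                      # 3150848
    print(model_params(4437, 512, 8, 512, 1) / 1e6)  # about 7.96
    print(model_params(4437, 512, 8, 512, 6) / 1e6)  # about 23.7
```

This gives 3,150,848 parameters per block (5,120 more than 12d², all from biases and LayerNorms), about 7.96M for the one-block model and about 23.7M for six blocks, close to the 7.98M and 23M reported above.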

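The calculation ignores the embeddings and the final layer: they appear only once, while the block is repeated many times, so the repeated blocks account for most of the parameters.

So here our block parameters come to about 3M × 6 ≈ 18M (ignoring the final layer). If we change the settings to GPT-3's, one block has

(3 × 12000 × (12000/96) + (12000/96) × 12000) × 96 + ((12000/96) × 96 × 12000 × 4) × 2 = 12 × 12000² = 1,728,000,000

parameters, about 1.73B.

GPT-3 repeats the block 96 times,

so the total is about 1.73B × 96 ≈ 166B.

This is very close to GPT-3's actual 175B parameters. The difference comes from the approximations in our calculation; for example, GPT-3's embedding_token_dim is 12,288 rather than 12,000. With 12,288 the blocks come to about 173.95B, and adding the embedding and final layers gives about 175.18B.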
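The 12d² estimate is easy to reproduce. A pure-Python sketch for the two values of embedding_token_dim discussed above, ignoring biases, LayerNorm weights and embeddings exactly as the text does (`approx_block_params` is a hypothetical helper):

```python
def approx_block_params(d):
    """12*d^2: 4*d^2 for Q/K/V plus the output projection, 8*d^2 for the 4x FeedForward."""
    return 12 * d * d

N_BLOCKS = 96  # GPT-3 block count quoted in the text

if __name__ == "__main__":
    for d in (12000, 12288):
        per_block = approx_block_params(d)
        total = N_BLOCKS * per_block
        print(f"d={d}: {per_block / 1e9:.3f}B per block, {total / 1e9:.2f}B for {N_BLOCKS} blocks")
    # d=12000: 1.728B per block, 165.89B for 96 blocks
    # d=12288: 1.812B per block, 173.95B for 96 blocks
```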

So the simplified Transformer we built by hand has essentially the same structure as the Transformer used in GPT-3; it just has far fewer parameters.

Final code

```python
import random
import textwrap

import torch
import torch.nn as nn
import torch.nn.functional as F
from tqdm import tqdm

device = "cuda" if torch.cuda.is_available() else "cpu"
torch.cuda.empty_cache()
torch.manual_seed(1037)
random.seed(1037)

file_name = "hongloumeng_long.txt"  # full text of Hongloumeng (Dream of the Red Chamber)
with open(file_name, 'r', encoding='utf-8') as f:
    text = f.read()

# Character-level vocabulary. Sorting makes the char <-> id mapping identical on every run
# (the iteration order of a set of strings can change between Python processes).
uniword = sorted(set(text))
encoder_dict = {char: i for i, char in enumerate(uniword)}
decoder_dict = {i: char for i, char in enumerate(uniword)}
encoder = lambda string: [encoder_dict[char] for char in string]
decoder = lambda idx: ''.join([decoder_dict[i] for i in idx])

size = len(uniword)            # vocabulary size
embedding_token_dim = 512      # preferably a power of 2
num_heads = 8
head_size = embedding_token_dim // num_heads
sentence_len = 512             # context length
batch_size = 32
wrap_width = 40
max_new_tokens = 256
n_blocks = 6

split = 0.8
split_len = int(split * len(text))
train_data = torch.tensor(encoder(text[:split_len]), dtype=torch.long)
val_data = torch.tensor(encoder(text[split_len:]), dtype=torch.long)

def get_batch(split='train'):
    data = train_data if split == 'train' else val_data
    idx = torch.randint(0, len(data) - sentence_len - 1, (batch_size,))
    x = torch.stack([data[i:i + sentence_len] for i in idx])
    y = torch.stack([data[i + 1:i + 1 + sentence_len] for i in idx])  # targets: shifted by one
    return x.to(device), y.to(device)

# A single attention head
class Head(nn.Module):
    def __init__(self, head_size):
        super().__init__()
        self.key = nn.Linear(embedding_token_dim, head_size, bias=False)
        self.query = nn.Linear(embedding_token_dim, head_size, bias=False)
        self.value = nn.Linear(embedding_token_dim, head_size, bias=False)
        # Causal mask, stored as a buffer: a constant that moves with the module but is not trained
        self.register_buffer("tril", torch.tril(torch.ones(sentence_len, sentence_len)))
        self.dropout = nn.Dropout(0.2)

    def forward(self, x):
        B, T, C = x.shape
        key = self.key(x)
        query = self.query(x)
        # Attention matrix (T x T), scaled by 1/sqrt(head_size) to keep the variance stable
        weight = query @ key.transpose(-2, -1) * (key.shape[-1] ** -0.5)
        # Crop the mask to the actual length so shorter inputs also work
        weight = weight.masked_fill(self.tril[:T, :T] == 0, float('-inf'))
        weight = F.softmax(weight, dim=-1)
        # Randomly zero some weights so the network does not depend on a few nodes
        weight = self.dropout(weight)
        v = self.value(x)
        return weight @ v

class MultiheadAttention(nn.Module):
    def __init__(self, num_heads, head_size):
        super().__init__()
        self.heads = nn.ModuleList([Head(head_size) for _ in range(num_heads)])
        self.proj = nn.Linear(num_heads * head_size, embedding_token_dim)
        self.dropout = nn.Dropout(0.2)

    def forward(self, x):
        out = torch.cat([h(x) for h in self.heads], dim=-1)  # concatenate all heads
        return self.dropout(self.proj(out))

class FeedForward(nn.Module):
    def __init__(self, embedding_token_dim):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(embedding_token_dim, embedding_token_dim * 4),
            nn.ReLU(),
            nn.Linear(embedding_token_dim * 4, embedding_token_dim),
            nn.Dropout(0.2),
        )

    def forward(self, x):
        return self.net(x)

class Block(nn.Module):
    def __init__(self, embedding_token_dim, num_heads):
        super().__init__()
        head_size = embedding_token_dim // num_heads
        self.sa = MultiheadAttention(num_heads, head_size)
        self.ff = FeedForward(embedding_token_dim)
        self.ln1 = nn.LayerNorm(embedding_token_dim)
        self.ln2 = nn.LayerNorm(embedding_token_dim)

    def forward(self, x):
        x = x + self.sa(self.ln1(x))  # residual around attention
        x = x + self.ff(self.ln2(x))  # residual around FeedForward
        return x

# The model
class SimpleModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.token_embedding_table = nn.Embedding(size, embedding_token_dim)
        self.pos_embedding_table = nn.Embedding(sentence_len, embedding_token_dim)
        self.blocks = nn.Sequential(*[Block(embedding_token_dim, num_heads) for _ in range(n_blocks)])
        self.ln_f = nn.LayerNorm(embedding_token_dim)  # final layer norm
        self.out = nn.Linear(embedding_token_dim, size)

    def forward(self, inputs, targets=None):
        B, T = inputs.shape  # inputs are integer token ids, not yet vectors
        token_emd = self.token_embedding_table(inputs)
        pos_emd = self.pos_embedding_table(torch.arange(T, device=inputs.device))
        x = token_emd + pos_emd   # (B, T, embedding_token_dim)
        x = self.blocks(x)
        x = self.ln_f(x)
        logits = self.out(x)      # (B, T, size)
        if targets is None:
            loss = None
        else:
            B, T, C = logits.shape
            logits = logits.view(B * T, C)
            targets = targets.view(B * T)
            loss = F.cross_entropy(logits, targets)
        return logits, loss

    @torch.no_grad()
    def generate(self, token_seq, new_sentence_len):
        for _ in range(new_sentence_len):
            token_inputs = token_seq[:, -sentence_len:]  # crop to the context length
            logits, _ = self(token_inputs)
            logits = logits[:, -1, :]                    # only the last position matters
            prob = F.softmax(logits, dim=-1)
            new_token = torch.multinomial(prob, 1)
            token_seq = torch.cat([token_seq, new_token], dim=1)
        return token_seq[:, -new_sentence_len:]

@torch.no_grad()
def estimate_loss(model, estimate_time=20):
    out = {}
    model.eval()
    for state in ['train', 'val']:
        losses = torch.zeros(estimate_time)
        for i in range(estimate_time):
            X, Y = get_batch(state)
            _, loss = model(X, Y)
            losses[i] = loss.item()
        out[state] = losses.mean().item()
    model.train()
    return out

# Training settings
learning_rate = 0.0003
max_iters = 1000
eval_iter = 100

print(f"Training on: {file_name}")
model = SimpleModel()
# model = nn.DataParallel(model, device_ids=[0, 1, 2])  # optional multi-GPU
model = model.to(device)
print(sum(p.numel() for p in model.parameters()) / 1e6, "M parameters")

# Optimizer
optimizer = torch.optim.AdamW(model.parameters(), lr=learning_rate)

# Training loop
progress_bar = tqdm(range(max_iters))
for i in progress_bar:
    # Optional periodic evaluation on the train and validation splits:
    # if i % eval_iter == 0 or i == max_iters - 1:
    #     losses = estimate_loss(model)
    #     print(f"train_loss {losses['train']:.4f}, val_loss {losses['val']:.4f}")
    xb, yb = get_batch('train')
    logits, loss = model(xb, yb)
    optimizer.zero_grad(set_to_none=True)
    loss.backward()
    optimizer.step()
    progress_bar.set_description(f"{loss.item():.4f}")

print("Training finished, generating new text")
model.eval()  # disable dropout for generation
start_idx = random.randint(0, len(val_data) - sentence_len - max_new_tokens)

# Context: the text the model continues from
context = val_data[start_idx:start_idx + sentence_len].unsqueeze(0).to(device)
wrapped_context_str = textwrap.fill(decoder(context[0].tolist()), width=wrap_width)

# The real continuation, for comparison
next_context = val_data[start_idx + sentence_len:start_idx + sentence_len + max_new_tokens]
next_wrapped_context_str = textwrap.fill(decoder(next_context.tolist()), width=wrap_width)

# Generate a continuation
generated_token = model.generate(context, max_new_tokens)
generated_str = decoder(generated_token[0].tolist())
generated_wrapped_context_str = textwrap.fill(generated_str, width=wrap_width)

print("Context:")
print(wrapped_context_str)
print("Actual continuation:")
print(next_wrapped_context_str)
print("Generated:")
print(generated_wrapped_context_str)
```
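`generate` is a plain autoregressive loop: crop the sequence to the last `sentence_len` tokens (the position table has only that many rows), take the logits at the last position, turn them into probabilities with softmax, sample one token, and append it. The same loop in pure Python, assuming a hypothetical `toy_logits` function in place of the model and using `random.choices` in the role of `torch.multinomial`:

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def generate(token_seq, new_len, context_len, next_token_logits, rng=random):
    """Append new_len sampled tokens; the 'model' only ever sees the last context_len tokens."""
    seq = list(token_seq)
    for _ in range(new_len):
        window = seq[-context_len:]                    # like token_seq[:, -sentence_len:]
        probs = softmax(next_token_logits(window))     # logits for the last position only
        new_token = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        seq.append(new_token)
    return seq[-new_len:]                              # only the newly generated tokens

def toy_logits(window, vocab=5):
    """Hypothetical stand-in model: strongly prefers (last token + 1) mod vocab."""
    favourite = (window[-1] + 1) % vocab
    return [10.0 if i == favourite else -10.0 for i in range(vocab)]

if __name__ == "__main__":
    print(generate([0], 6, 3, toy_logits, random.Random(0)))
```

Because sampling draws from the whole distribution instead of always taking the most likely token, a real model's output varies from run to run; the toy model here is so peaked that it almost always returns the same sequence.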
