第一章 字符串操作

1.1 切分

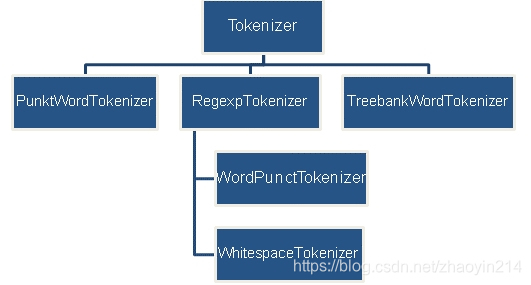

nltk模块nltk.tokenize

1.1.1 将文本切分为语句

sent_tokenize()函数,该函数对PunktSentenceTokenizer类实例化。

import nltk

from nltk.tokenize import sent_tokenize

text = "Welcome readers. I hope you find it interesting. Please do reply."

print(sent_tokenize(text))

['Welcome readers.', 'I hope you find it interesting.', 'Please do reply.']

- 批量切分文本

加载PunktSentenceTokenizer类,调用tokenize()

tokenizer = nltk.data.load("tokenizers/punkt/english.pickle") # BlanklineTokenizer

text = "Hello everyone. Hope all are fine and doing well. Hope you find the book interesting"

print(tokenizer.tokenize(text))

['Hello everyone.', 'Hope all are fine and doing well.', 'Hope you find the book interesting']

1.1.2 其他语言文本切分

tokenizer = nltk.data.load("tokenizers/punkt/french.pickle") # BlanklineTokenizer

text = "Deux agressions en quelques jours, voilà ce qui a motivé hier matin le débrayage collège franco-britanniquede Levallois-Perret. Deux agressions en quelques jours, voilà ce qui a motivé hier matin le débrayage Levallois. L'équipe pédagogique de ce collège de 750 élèves avait déjà été choquée par l'agression, janvier , d'un professeur d'histoire. L'équipe pédagogique de ce collège de 750 élèves avait déjà été choquée par l'agression, mercredi , d'un professeur d'histoire"

print(tokenizer.tokenize(text))

['Deux agressions en quelques jours, voilà ce qui a motivé hier matin le débrayage collège franco-britanniquede Levallois-Perret.', 'Deux agressions en quelques jours, voilà ce qui a motivé hier matin le débrayage Levallois.', "L'équipe pédagogique de ce collège de 750 élèves avait déjà été choquée par l'agression, janvier , d'un professeur d'histoire.", "L'équipe pédagogique de ce collège de 750 élèves avait déjà été choquée par l'agression, mercredi , d'un professeur d'histoire"]

1.1.3 将句子切分为单词

word_tokenize()函数,该函数对TreebankWordTokenizer类实例化。

text = "PierreVinken , 59 years old , will join as a nonexecutive director on Nov. 29."

print(nltk.word_tokenize(text))

['PierreVinken', ',', '59', 'years', 'old', ',', 'will', 'join', 'as', 'a', 'nonexecutive', 'director', 'on', 'Nov.', '29', '.']

加载TreebankWordTokenizer,调用tokenize()函数

1.1. 4 使用TreebankWordTokenizer执行切分

The Treebank tokenizer uses regular expressions to tokenize text as in Penn Treebank. This is the method that is invoked by word_tokenize(). It assumes that the text has already been segmented into sentences, e.g. using sent_tokenize()

from nltk.tokenize import TreebankWordTokenizer

text = "Have a nice day. I hope you find the book interesting"

tokenizer = TreebankWordTokenizer()

print(tokenizer.tokenize(text))

print(nltk.word_tokenize(text))

['Have', 'a', 'nice', 'day.', 'I', 'hope', 'you', 'find', 'the', 'book', 'interesting']

['Have', 'a', 'nice', 'day', '.', 'I', 'hope', 'you', 'find', 'the', 'book', 'interesting']

text = " Don't hesitate to ask questions."

print(nltk.word_tokenize(text))

tokenizer = TreebankWordTokenizer()

print(tokenizer.tokenize(text))

['Do', "n't", 'hesitate', 'to', 'ask', 'questions', '.']

['Do', "n't", 'hesitate', 'to', 'ask', 'questions', '.']

PunktWordTokenizer通过分离标点实现切分,保留所有单词,不创建新标识符。

WordPunctTokenizer将标点转化为新标识符实现切分。

WordPunctTokenizer() tokenize a text into a sequence of alphabetic and non-alphabetic characters, using the regexp \w+|[^\w\s]+.

from nltk.tokenize import WordPunctTokenizer

tokenizer = WordPunctTokenizer()

print(tokenizer.tokenize(text))

['Don', "'", 't', 'hesitate', 'to', 'ask', 'questions', '.']

1.1.5 使用正则表达式实现切分

RegexpTokenizer模块

A tokenizer that splits a string using a regular expression, which matches either the tokens or the separators between tokens.

from nltk.tokenize import RegexpTokenizer

- 匹配单词。

tokenizer = RegexpTokenizer("[\w]+")

print(tokenizer.tokenize(text))

['Don', 't', 'hesitate', 'to', 'ask', 'questions']

- 匹配空格或制表符。

tokenizer = RegexpTokenizer("[\w]+|\$[\d\.]+|[\S]+")

print(tokenizer.tokenize(text))

['Don', "'t", 'hesitate', 'to', 'ask', 'questions', '.']

RegexpTokenizer使用re.findall()函数时,通过匹配标识符实现切分;使用re.split()函数时,通过匹配制表符或者空格实现切分。

tokenizer = RegexpTokenizer(pattern="[\s]+", gaps=True) # find separators between tokens

print(tokenizer.tokenize(text))

tokenizer = RegexpTokenizer(pattern="[\s]+", gaps=False) # find the tokens themselves

print(tokenizer.tokenize(text))

["Don't", 'hesitate', 'to', 'ask', 'questions.']

[' ', ' ', ' ', ' ', ' ']

筛选以首字母大写单词

sent = " She secured 90.56 % in class X . She is a meritorious student"

capt = RegexpTokenizer("[A-Z]{1}[\w]+")

print(capt.tokenize(sent))

['She', 'She']

预定义正则表达式

BlanklineTokenizer按空白行切分

Tokenize a string, treating any sequence of blank lines as a delimiter. Blank lines are defined as lines containing no characters, except for space or tab characters.

from nltk.tokenize import BlanklineTokenizer

sent=" She secured 90.56 % in class X .\n\n She is a meritorious student"

tokenizer = BlanklineTokenizer()

print(tokenizer.tokenize(sent))

[' She secured 90.56 % in class X .', 'She is a meritorious student']

WhitespaceTokenizer通过空格、制表符、换行实现切分

Tokenize a string on whitespace (space, tab, newline). In general, users should use the string split() method instead.

from nltk.tokenize import WhitespaceTokenizer

tokenizer = WhitespaceTokenizer()

print(tokenizer.tokenize(sent))

['She', 'secured', '90.56', '%', 'in', 'class', 'X', '.', 'She', 'is', 'a', 'meritorious', 'student']

WordPunctTokenizer使用正则表达式\w+|[^\w\s]+将文本切分为字母与非字母字符。

Tokenize a string on whitespace (space, tab, newline). In general, users should use the string split() method instead.

split()切分

print(sent.split())

print(sent.split(" "))

print(sent.split("\n"))

['She', 'secured', '90.56', '%', 'in', 'class', 'X', '.', 'She', 'is', 'a', 'meritorious', 'student']

['', 'She', 'secured', '90.56', '%', 'in', 'class', 'X', '.\n\n', 'She', 'is', 'a', 'meritorious', 'student']

[' She secured 90.56 % in class X .', '', ' She is a meritorious student']

LineTokenizer通过将文本切分为行来执行切分,类似s.split('\n')

Tokenize a string into its lines, optionally discarding blank lines. This is similar to s.split('\n').

from nltk.tokenize import LineTokenizer

tokenizer = LineTokenizer(blanklines="discard")

print(tokenizer.tokenize(sent))

tokenizer = LineTokenizer(blanklines="keep")

print(tokenizer.tokenize(sent))

tokenizer = BlanklineTokenizer()

print(tokenizer.tokenize(sent))

[' She secured 90.56 % in class X .', ' She is a meritorious student']

[' She secured 90.56 % in class X .', '', ' She is a meritorious student']

[' She secured 90.56 % in class X .', 'She is a meritorious student']

SpaceTokenizer与sent.split(" ")类似

Tokenize a string using the space character as a delimiter, which is the same as s.split(' ').

from nltk.tokenize import SpaceTokenizer

tokenizer = SpaceTokenizer()

print(tokenizer.tokenize(sent))

['', 'She', 'secured', '90.56', '%', 'in', 'class', 'X', '.\n\n', 'She', 'is', 'a', 'meritorious', 'student']

nltk.tokenize.util模块

span_tokenize()以元组形式返回的切分序列,该序列表示标识符在语句中的位置和偏移量

Identify the tokens using integer offsets (start_i, end_i), where s[start_i:end_i] is the corresponding token.

tokenizer = WhitespaceTokenizer()

print(tokenizer.tokenize(text=sent))

print(list(tokenizer.span_tokenize(text=sent)))

['She', 'secured', '90.56', '%', 'in', 'class', 'X', '.', 'She', 'is', 'a', 'meritorious', 'student']

[(1, 4), (5, 12), (13, 18), (19, 20), (21, 23), (2

本文详细介绍了Python在自然语言处理中的字符串操作,包括切分(如nltk模块的使用、正则表达式的切分)、标准化(去除标点、大小写转换、去除停用词)、替换和校正标识符,以及Zipf定律和各种相似性度量方法(编辑距离、Jaccard系数、Smith Waterman距离等)。

本文详细介绍了Python在自然语言处理中的字符串操作,包括切分(如nltk模块的使用、正则表达式的切分)、标准化(去除标点、大小写转换、去除停用词)、替换和校正标识符,以及Zipf定律和各种相似性度量方法(编辑距离、Jaccard系数、Smith Waterman距离等)。

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

72

72

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?