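Given a 320×320 input image, YOLOv3 runs in 22 ms and reaches 28.2 mAP, which is as accurate as SSD but three times faster. On the older 0.5-IOU mAP detection metric, YOLOv3 also does well: on a Titan X it reaches 57.9 AP50 in 51 ms, whereas RetinaNet reaches 57.5 AP50 in 198 ms, that is, similar accuracy at about 3.8 times the run time.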

The following is a translation of the original paper: https://blog.youkuaiyun.com/just_sort/article/details/80882474

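Bounding box prediction:

YOLOv3 predicts an objectness score for each bounding box using logistic regression. The score should be 1 if the bounding box prior overlaps a ground truth object more than any other bounding box prior does. If a prior is not the best but overlaps a ground truth object by more than some threshold, the network ignores the prediction. The threshold used is .5. Unlike [17], the system assigns only one bounding box prior to each ground truth object. If a bounding box prior is not assigned to a ground truth object, it incurs no loss for the coordinate or class predictions, only for objectness.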
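The assignment rule above can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: the helper names `iou` and `objectness_targets` are hypothetical, boxes are `(x_min, y_min, x_max, y_max)` tuples, and a single ground truth object is considered.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def objectness_targets(priors, truth, ignore_thresh=0.5):
    """One label per prior: 1 (responsible), None (ignored) or 0 (background)."""
    overlaps = [iou(p, truth) for p in priors]
    best = max(range(len(priors)), key=lambda i: overlaps[i])
    labels = []
    for i, overlap in enumerate(overlaps):
        if i == best:
            labels.append(1)       # the best prior is responsible for the object
        elif overlap > ignore_thresh:
            labels.append(None)    # good but not best: contributes no loss
        else:
            labels.append(0)       # background: objectness target is 0
    return labels


truth = (10, 10, 50, 50)
priors = [(12, 12, 52, 52), (10, 10, 50, 40), (60, 60, 90, 90)]
labels = objectness_targets(priors, truth)
print(labels)
```

Here the first prior has the highest overlap and gets label 1, the second overlaps by more than .5 without being the best and is ignored, and the third does not overlap at all.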

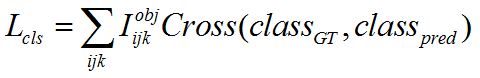

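Class prediction:

Each box predicts the classes the bounding box may contain using multilabel classification. We do not use a softmax, because we have found it is unnecessary for good performance; instead we use independent logistic classifiers. During training we use binary cross-entropy loss for the class predictions.

This choice helps when we move YOLO to more complex domains such as the Open Images Dataset. This dataset contains many overlapping labels (e.g. Woman and Person). Using a softmax imposes the assumption that each box has exactly one class, which is often not the case. A multilabel approach models the data better.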
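A small numeric sketch may make the difference concrete. The class names, logits and the 0.5 threshold below are made-up values for illustration, not numbers from the paper.

```python
import math


def softmax(logits):
    """Softmax over a list of logits; the scores always sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Independent logistic score for a single class."""
    return 1.0 / (1.0 + math.exp(-z))


class_names = ["person", "woman", "car"]   # hypothetical overlapping labels
logits = [4.0, 3.5, -5.0]                  # hypothetical raw class outputs

softmax_scores = softmax(logits)
sigmoid_scores = [sigmoid(z) for z in logits]

threshold = 0.5
multi_labels = [n for n, s in zip(class_names, sigmoid_scores) if s > threshold]
print(softmax_scores, sigmoid_scores, multi_labels)
```

With softmax the two overlapping labels compete, so "woman" ends up below 0.5 even though its logit is high. With independent sigmoids both "person" and "woman" pass the threshold.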

Source code analysis:

1. Training

def _main():

annotation_path = 'train.txt'

log_dir = 'logs/000/'

classes_path = 'model_data/voc_classes.txt'

anchors_path = 'model_data/yolo_anchors.txt'

class_names = get_classes(classes_path)

num_classes = len(class_names)

anchors = get_anchors(anchors_path)  # shape (9, 2)

input_shape = (416,416) # multiple of 32, hw

is_tiny_version = len(anchors)==6 # default setting

if is_tiny_version:

model = create_tiny_model(input_shape, anchors, num_classes,

freeze_body=2, weights_path='model_data/tiny_yolo_weights.h5')

else:

model = create_model(input_shape, anchors, num_classes,

freeze_body=2, weights_path='model_data/yolo_weights.h5') # make sure you know what you freeze

logging = TensorBoard(log_dir=log_dir)

checkpoint = ModelCheckpoint(log_dir + 'ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5',

monitor='val_loss', save_weights_only=True, save_best_only=True, period=3)

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=3, verbose=1)

early_stopping = EarlyStopping(monitor='val_loss', min_delta=0, patience=10, verbose=1)

val_split = 0.1  # train/validation split of 9:1

with open(annotation_path) as f:

lines = f.readlines()

np.random.seed(10101)

np.random.shuffle(lines)

np.random.seed(None)

num_val = int(len(lines)*val_split)

num_train = len(lines) - num_val

# Train with frozen layers first, to get a stable loss.

# Adjust num epochs to your dataset. This step is enough to obtain a not bad model.

if True:  # stage 1 (epochs 0-50): the frozen layers are not updated

model.compile(optimizer=Adam(lr=1e-3), loss={

# use custom yolo_loss Lambda layer.

'yolo_loss': lambda y_true, y_pred: y_pred})

batch_size = 32  # batch size of 32

print('Train on {} samples, val on {} samples, with batch size {}.'.format(num_train, num_val, batch_size))

model.fit_generator(data_generator_wrapper(lines[:num_train], batch_size, input_shape, anchors, num_classes),

steps_per_epoch=max(1, num_train//batch_size),

validation_data=data_generator_wrapper(lines[num_train:], batch_size, input_shape, anchors, num_classes),

validation_steps=max(1, num_val//batch_size),

epochs=50,

initial_epoch=0,

callbacks=[logging, checkpoint])

model.save_weights(log_dir + 'trained_weights_stage_1.h5')

# Unfreeze and continue training, to fine-tune.

# Train longer if the result is not good.

if True:  # stage 2 (epochs 50-100): all layers are updated to fine-tune the network

for i in range(len(model.layers)):

model.layers[i].trainable = True

model.compile(optimizer=Adam(lr=1e-4), loss={'yolo_loss': lambda y_true, y_pred: y_pred}) # recompile to apply the change

print('Unfreeze all of the layers.')

batch_size = 32 # note that more GPU memory is required after unfreezing the body

print('Train on {} samples, val on {} samples, with batch size {}.'.format(num_train, num_val, batch_size))

model.fit_generator(data_generator_wrapper(lines[:num_train], batch_size, input_shape, anchors, num_classes),

steps_per_epoch=max(1, num_train//batch_size),

validation_data=data_generator_wrapper(lines[num_train:], batch_size, input_shape, anchors, num_classes),

validation_steps=max(1, num_val//batch_size),

epochs=100,

initial_epoch=50,

callbacks=[logging, checkpoint, reduce_lr, early_stopping])

model.save_weights(log_dir + 'trained_weights_final.h5')

The main functions called are:

def create_model(input_shape, anchors, num_classes, load_pretrained=True, freeze_body=2,

weights_path='model_data/yolo_weights.h5'):

def data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes):

(1) def create_model(input_shape, anchors, num_classes, load_pretrained=True, freeze_body=2,

weights_path='model_data/yolo_weights.h5'):

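Inputs:

- input_shape: image size, (416, 416);

- anchors: widths and heights of the 9 anchor boxes obtained by K-means clustering, shape (9, 2);

- num_classes: number of classes, 80 for COCO;

- freeze_body: freezing mode; 1 freezes the DarkNet-53 layers (the first 185 layers), 2 freezes everything except the last 3 layers;

- weights_path: weights of the pretrained model, a *.h5 file.

Output: model, where model = Model([model_body.input, *y_true], model_loss)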
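As a quick check of the shapes involved, the sketch below recomputes the three `y_true` input shapes (without the batch dimension) from the arguments listed above. The helper name `y_true_shapes` is hypothetical; the strides 32, 16 and 8 are the ones used in `create_model`.

```python
def y_true_shapes(input_shape, num_anchors, num_classes):
    """Shapes of the three y_true inputs, excluding the batch dimension."""
    h, w = input_shape
    strides = (32, 16, 8)   # downsampling factor of each output scale
    return [(h // s, w // s, num_anchors // 3, num_classes + 5) for s in strides]


shapes = y_true_shapes((416, 416), num_anchors=9, num_classes=80)
for shape in shapes:
    print(shape)
```

For a 416x416 input with 9 anchors and the 80 COCO classes this gives 13x13, 26x26 and 52x52 grids, each with 3 anchors and 85 values per anchor.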

def create_model(input_shape, anchors, num_classes, load_pretrained=True, freeze_body=2,

weights_path='model_data/yolo_weights.h5'):

'''create the training model'''

K.clear_session() # get a new session

image_input = Input(shape=(None, None, 3))

# Input's shape argument excludes the batch dimension, so the actual shape is [?, ?, ?, 3]

h, w = input_shape

num_anchors = len(anchors)

''' [(?, 13, 13, 3, 5+num_class), (?, 26, 26, 3, 5+num_class), (?, 52, 52, 3, 5+num_class)]'''

y_true = [Input(shape=(h//{0:32, 1:16, 2:8}[l], w//{0:32, 1:16, 2:8}[l], \

num_anchors//3, num_classes+5)) for l in range(3)]

model_body = yolo_body(image_input, num_anchors//3, num_classes)

print('Create YOLOv3 model with {} anchors and {} classes.'.format(num_anchors, num_classes))

if load_pretrained:

model_body.load_weights(weights_path, by_name=True, skip_mismatch=True)

print('Load weights {}.'.format(weights_path))

if freeze_body in [1, 2]:

# Freeze darknet53 body or freeze all but 3 output layers.

# Freeze the first 185 layers, or freeze all layers except the last 3

num = (185, len(model_body.layers)-3)[freeze_body-1]

for i in range(num): model_body.layers[i].trainable = False

print('Freeze the first {} layers of total {} layers.'.format(num, len(model_body.layers)))

model_loss = Lambda(yolo_loss, output_shape=(1,), name='yolo_loss',

arguments={'anchors': anchors, 'num_classes': num_classes, 'ignore_thresh': 0.5})(

[*model_body.output, *y_true])

model = Model([model_body.input, *y_true], model_loss)

return model

Model body: DarkNet-53

def yolo_body(inputs, num_anchors, num_classes):

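Inputs:

- inputs: image tensor;

- num_anchors: number of anchors per output scale (create_model passes num_anchors//3);

- num_classes: number of classes.

Output: Model(inputs, [y1,y2,y3])

Source of the image below: https://blog.youkuaiyun.com/leviopku/article/details/82660381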

def yolo_body(inputs, num_anchors, num_classes):

"""Create YOLO_V3 model CNN body in Keras."""

darknet = Model(inputs, darknet_body(inputs))

x, y1 = make_last_layers(darknet.output, 512, num_anchors*(num_classes+5))

x = compose(

DarknetConv2D_BN_Leaky(256, (1,1)),

UpSampling2D(2))(x)

x = Concatenate()([x,darknet.layers[152].output])

x, y2 = make_last_layers(x, 256, num_anchors*(num_classes+5))

x = compose(

DarknetConv2D_BN_Leaky(128, (1,1)),

UpSampling2D(2))(x)

x = Concatenate()([x,darknet.layers[92].output])

x, y3 = make_last_layers(x, 128, num_anchors*(num_classes+5))

return Model(inputs, [y1,y2,y3])

def darknet_body(x):

'''Darknet body having 52 Convolution2D layers'''

x = DarknetConv2D_BN_Leaky(32, (3,3))(x)

x = resblock_body(x, 64, 1)

x = resblock_body(x, 128, 2)

x = resblock_body(x, 256, 8)

x = resblock_body(x, 512, 8)

x = resblock_body(x, 1024, 4)

return x

def resblock_body(x, num_filters, num_blocks):

'''A series of resblocks starting with a downsampling Convolution2D'''

# Darknet uses left and top padding instead of 'same' mode

x = ZeroPadding2D(((1,0),(1,0)))(x)

x = DarknetConv2D_BN_Leaky(num_filters, (3,3), strides=(2,2))(x)

for i in range(num_blocks):

y = compose(

DarknetConv2D_BN_Leaky(num_filters//2, (1,1)),

DarknetConv2D_BN_Leaky(num_filters, (3,3)))(x)

x = Add()([x,y])

return x

def DarknetConv2D(*args, **kwargs):

"""Wrapper to set Darknet parameters for Convolution2D."""

darknet_conv_kwargs = {'kernel_regularizer': l2(5e-4)}

darknet_conv_kwargs['padding'] = 'valid' if kwargs.get('strides')==(2,2) else 'same'

darknet_conv_kwargs.update(kwargs)

return Conv2D(*args, **darknet_conv_kwargs)

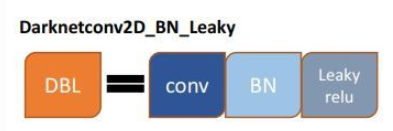

def DarknetConv2D_BN_Leaky(*args, **kwargs):

"""Darknet Convolution2D followed by BatchNormalization and LeakyReLU."""

no_bias_kwargs = {'use_bias': False}

no_bias_kwargs.update(kwargs)

return compose(

DarknetConv2D(*args, **no_bias_kwargs),

BatchNormalization(),

LeakyReLU(alpha=0.1))

def make_last_layers(x, num_filters, out_filters):

'''6 Conv2D_BN_Leaky layers followed by a Conv2D_linear layer'''

x = compose(

DarknetConv2D_BN_Leaky(num_filters, (1,1)),

DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),

DarknetConv2D_BN_Leaky(num_filters, (1,1)),

DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),

DarknetConv2D_BN_Leaky(num_filters, (1,1)))(x)

y = compose(

DarknetConv2D_BN_Leaky(num_filters*2, (3,3)),

DarknetConv2D(out_filters, (1,1)))(x)

return x, y

Model loss:

def yolo_loss(args, anchors, num_classes, ignore_thresh=.5, print_loss=False):

Inputs:

yolo_outputs: the output of yolo_body or tiny_yolo_body, i.e. model_body.output

y_true: the output of preprocess_true_boxes (described below). Shapes:

model_body.output: [(?, 13, 13, 3*(5+num_classes)),

(?, 26, 26, 3*(5+num_classes)),

(?, 52, 52, 3*(5+num_classes))]

y_true: [(?, 13, 13, 3, 5+num_classes),

(?, 26, 26, 3, 5+num_classes),

(?, 52, 52, 3, 5+num_classes)]

anchors: array, shape=(N, 2), wh

num_classes: number of classes

ignore_thresh: IoU threshold

Output: loss: tensor, shape=(1,)

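Classifying with softmax rests on the premise that the classes are mutually exclusive: if an object belongs to one class, it cannot belong to another. When the dataset contains labels such as Person and Woman, this premise no longer holds. This is why the authors do not use softmax; instead they use logistic regression to predict a score for each class and apply a threshold to make multilabel predictions for an object.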

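Within it, the core function is: def yolo_head(feats, anchors, num_classes, input_shape, calc_loss=False):

Inputs:

feats: feature map, shape (?, 13, 13, 3*(5+num_classes))

anchors: the anchors of the current scale, shape (3, 2)

num_classes: number of classes

input_shape: shape of the network input

calc_loss=False: whether the loss is being computed, i.e. training or testing

Outputs:

Training mode:

grid, feats, box_xy, box_wh

grid: grid information. The grid size grid_shape is taken from dimensions 1 and 2 of the prediction map feats, e.g. 13x13;

grid_y and grid_x are used to build grid: with a combination of arange, reshape and tile, grid_y holds the values 0~12 along the y axis and grid_x holds the values 0~12 along the x axis; concatenating the two gives grid;

grid enumerates every (x, y) index pair and has shape (13, 13, 1, 2);

feats: (?, 13, 13, 3, 5+num_classes)

box_xy, box_wh: the decoded box information; x, y, w, h use the same format as y_true, normalized to the range 0-1

Test mode:

box_xy, box_wh, box_confidence, box_class_probs

box_confidence: confidence after logistic regression (sigmoid)

box_class_probs: class scores after logistic regression (sigmoid)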
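The decoding performed by yolo_head can be followed for a single prediction with plain Python. This is a sketch, not the Keras code: the helper name `decode_box` and the sample values (cell (6, 6) of a 13x13 grid, anchor 116x90, a 416x416 input) are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def decode_box(raw, cell_xy, grid_wh, anchor_wh, input_wh):
    """Turn raw outputs (tx, ty, tw, th) into a normalized (x, y, w, h) box."""
    tx, ty, tw, th = raw
    # Center: sigmoid offset inside the cell plus the cell index, divided by the grid size.
    x = (sigmoid(tx) + cell_xy[0]) / grid_wh[0]
    y = (sigmoid(ty) + cell_xy[1]) / grid_wh[1]
    # Size: the anchor scaled by exp(t), divided by the network input size.
    w = math.exp(tw) * anchor_wh[0] / input_wh[0]
    h = math.exp(th) * anchor_wh[1] / input_wh[1]
    return x, y, w, h


box = decode_box((0.0, 0.0, 0.0, 0.0), cell_xy=(6, 6), grid_wh=(13, 13),
                 anchor_wh=(116, 90), input_wh=(416, 416))
print(box)
```

With all raw outputs at zero, the box sits at the center of cell (6, 6), which is the center of the image, and has exactly the size of the anchor relative to the input.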

def yolo_head(feats, anchors, num_classes, input_shape, calc_loss=False):

"""Convert final layer features to bounding box parameters."""

num_anchors = len(anchors)

# Reshape to batch, height, width, num_anchors, box_params.

anchors_tensor = K.reshape(K.constant(anchors), [1, 1, 1, num_anchors, 2])

grid_shape = K.shape(feats)[1:3] # height, width

grid_y = K.tile(K.reshape(K.arange(0, stop=grid_shape[0]), [-1, 1, 1, 1]),

[1, grid_shape[1], 1, 1])

grid_x = K.tile(K.reshape(K.arange(0, stop=grid_shape[1]), [1, -1, 1, 1]),

[grid_shape[0], 1, 1, 1])

grid = K.concatenate([grid_x, grid_y])

grid = K.cast(grid, K.dtype(feats))

feats = K.reshape(

feats, [-1, grid_shape[0], grid_shape[1], num_anchors, num_classes + 5])

# Adjust preditions to each spatial grid point and anchor size.

box_xy = (K.sigmoid(feats[..., :2]) + grid) / K.cast(grid_shape[::-1], K.dtype(feats))

box_wh = K.exp(feats[..., 2:4]) * anchors_tensor / K.cast(input_shape[::-1], K.dtype(feats))

box_confidence = K.sigmoid(feats[..., 4:5])

box_class_probs = K.sigmoid(feats[..., 5:])

if calc_loss == True:

return grid, feats, box_xy, box_wh

return box_xy, box_wh, box_confidence, box_class_probs

Loss terms:

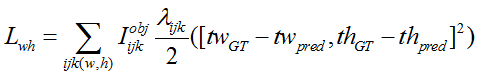

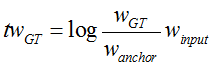

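I_ijk: whether the k-th anchor of grid cell (i, j) is responsible for predicting the ground-truth box

x_GT: in [0, 1], the offset relative to the top-left corner of the current grid cell

Cross: the cross-entropy loss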
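The individual terms can be tried out for a single anchor in plain Python. The sketch below mirrors the formulas used in yolo_loss (cross-entropy on xy, squared error on wh, the 2 - w*h weight, and the ignore rule for the confidence term). The helper names `bce_with_logits`, `box_losses` and `confidence_loss` and all sample numbers are hypothetical.

```python
import math


def bce_with_logits(target, logit):
    """Binary cross-entropy on a raw logit, in a numerically stable form."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def box_losses(raw_true_xy, raw_true_wh, raw_pred, true_wh_norm):
    """xy and wh loss of one responsible anchor (object_mask == 1)."""
    scale = 2.0 - true_wh_norm[0] * true_wh_norm[1]   # small boxes get a larger weight
    xy = sum(bce_with_logits(t, p) for t, p in zip(raw_true_xy, raw_pred[0:2]))
    wh = sum(0.5 * (t - p) ** 2 for t, p in zip(raw_true_wh, raw_pred[2:4]))
    return scale * xy, scale * wh


def confidence_loss(object_mask, logit, best_iou, ignore_thresh=0.5):
    """Objectness loss; negatives that overlap some ground truth well are ignored."""
    bce = bce_with_logits(object_mask, logit)
    if object_mask == 1:
        return bce
    return bce if best_iou < ignore_thresh else 0.0


xy_loss, wh_loss = box_losses((0.5, 0.5), (0.0, 0.0), (0.0, 0.0, 0.2, -0.2), (0.25, 0.25))
ignored = confidence_loss(0, 3.0, best_iou=0.7)
penalized = confidence_loss(0, 3.0, best_iou=0.1)
print(xy_loss, wh_loss, ignored, penalized)
```

A confident prediction on a non-responsible anchor is only penalized when its best IoU with any ground-truth box stays below ignore_thresh.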

def yolo_loss(args, anchors, num_classes, ignore_thresh=.5, print_loss=False):

'''Return yolo_loss tensor

Parameters

----------

yolo_outputs: list of tensor, the output of yolo_body or tiny_yolo_body

y_true: list of array, the output of preprocess_true_boxes

anchors: array, shape=(N, 2), wh

num_classes: integer

ignore_thresh: float, the iou threshold whether to ignore object confidence loss

Returns

-------

loss: tensor, shape=(1,)

'''

num_layers = len(anchors)//3 # default setting

yolo_outputs = args[:num_layers]

y_true = args[num_layers:]

anchor_mask = [[6,7,8], [3,4,5], [0,1,2]] if num_layers==3 else [[3,4,5], [1,2,3]]

# input_shape: 416x416

input_shape = K.cast(K.shape(yolo_outputs[0])[1:3] * 32, K.dtype(y_true[0]))

# grid_shapes: [[13, 13], [26, 26], [52, 52]]

grid_shapes = [K.cast(K.shape(yolo_outputs[l])[1:3], K.dtype(y_true[0])) for l in range(num_layers)]

loss = 0

m = K.shape(yolo_outputs[0])[0] # batch size, tensor

mf = K.cast(m, K.dtype(yolo_outputs[0]))

for l in range(num_layers):

object_mask = y_true[l][..., 4:5]

true_class_probs = y_true[l][..., 5:]

# Decode the prediction map yolo_outputs[l] into the grid, the reshaped raw prediction, and the box centers xy and sizes wh

grid, raw_pred, pred_xy, pred_wh = yolo_head(yolo_outputs[l],

anchors[anchor_mask[l]], num_classes, input_shape, calc_loss=True)

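# raw_pred: the prediction after reshape, with the anchors separated; shape (?, 13, 13, 3, 5+num_classes)

# pred_xy and pred_wh: normalized box centers xy and sizes wh; both have shape (?, 13, 13, 3, 2)

# raw_true_xy: offset of the box center inside its grid cell, in the range 0~1

# y_true[..., 0:2]: box center xy relative to the whole image, in the range 0~1

# raw_true_wh: log of the ratio between the box wh and the anchor wh; can be positive or negative

# y_true[..., 2:4]: box size wh relative to the whole image, in the range 0~1

# box_loss_scale: weight of the box losses, 2 - w*h, in the range 1~2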

pred_box = K.concatenate([pred_xy, pred_wh])

# Darknet raw box to calculate loss.

raw_true_xy = y_true[l][..., :2]*grid_shapes[l][::-1] - grid

raw_true_wh = K.log(y_true[l][..., 2:4] / anchors[anchor_mask[l]] * input_shape[::-1])

raw_true_wh = K.switch(object_mask, raw_true_wh, K.zeros_like(raw_true_wh)) # avoid log(0)=-inf

box_loss_scale = 2 - y_true[l][...,2:3]*y_true[l][...,3:4]

# Find ignore mask, iterate over each of batch.

ignore_mask = tf.TensorArray(K.dtype(y_true[0]), size=1, dynamic_size=True)

object_mask_bool = K.cast(object_mask, 'bool')

def loop_body(b, ignore_mask):

true_box = tf.boolean_mask(y_true[l][b,...,0:4], object_mask_bool[b,...,0])

iou = box_iou(pred_box[b], true_box)

best_iou = K.max(iou, axis=-1)

ignore_mask = ignore_mask.write(b, K.cast(best_iou<ignore_thresh, K.dtype(true_box)))

return b+1, ignore_mask

_, ignore_mask = K.control_flow_ops.while_loop(lambda b,*args: b<m, loop_body, [0, ignore_mask])

ignore_mask = ignore_mask.stack()

ignore_mask = K.expand_dims(ignore_mask, -1)

# K.binary_crossentropy is helpful to avoid exp overflow.

xy_loss = object_mask * box_loss_scale * K.binary_crossentropy(raw_true_xy, raw_pred[...,0:2], from_logits=True)

wh_loss = object_mask * box_loss_scale * 0.5 * K.square(raw_true_wh-raw_pred[...,2:4])

confidence_loss = object_mask * K.binary_crossentropy(object_mask, raw_pred[...,4:5], from_logits=True)+ \

(1-object_mask) * K.binary_crossentropy(object_mask, raw_pred[...,4:5], from_logits=True) * ignore_mask

class_loss = object_mask * K.binary_crossentropy(true_class_probs, raw_pred[...,5:], from_logits=True)

xy_loss = K.sum(xy_loss) / mf

wh_loss = K.sum(wh_loss) / mf

confidence_loss = K.sum(confidence_loss) / mf

class_loss = K.sum(class_loss) / mf

loss += xy_loss + wh_loss + confidence_loss + class_loss  # sum all loss terms

if print_loss:

loss = tf.Print(loss, [loss, xy_loss, wh_loss, confidence_loss, class_loss, K.sum(ignore_mask)], message='loss: ')

return loss

(2) def data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes):

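Inputs:

- annotation_lines: lines of annotation data; each line holds an image path followed by the boxes;

annotation_lines[i]: path x_min1,y_min1,x_max1,y_max1,c1 x_min2,y_min2,x_max2,y_max2,c2

- batch_size: batch size, the number of samples generated per batch;

- input_shape: network input size, e.g. (416, 416);

- anchors: list of anchor boxes, 9 width-height pairs;

- num_classes: number of classes;

Output: yield [image_data, *y_true], np.zeros(batch_size)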
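The control flow of the generator (an endless loop that reshuffles whenever the index wraps back to 0) can be isolated from the image handling. The sketch below yields sample indices only; the name `batch_indices` and the explicit `rng` argument are assumptions made for this illustration.

```python
import random


def batch_indices(n, batch_size, rng):
    """Yield lists of sample indices forever, reshuffling at the start of every pass."""
    order = list(range(n))
    i = 0
    while True:
        batch = []
        for _ in range(batch_size):
            if i == 0:
                rng.shuffle(order)   # new random order for each pass over the data
            batch.append(order[i])
            i = (i + 1) % n          # wrap around at the end of the data
        yield batch


gen = batch_indices(n=5, batch_size=2, rng=random.Random(0))
# Two full batches plus the first element of the third cover one pass over 5 samples.
first_pass = next(gen) + next(gen) + next(gen)[:1]
print(sorted(first_pass))
```

A batch may straddle two passes when the dataset size is not a multiple of the batch size, which is also how the original generator behaves.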

def data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes):

'''data generator for fit_generator'''

n = len(annotation_lines)

i = 0

while True:

image_data = []

box_data = []

for b in range(batch_size):

if i==0:

np.random.shuffle(annotation_lines)

image, box = get_random_data(annotation_lines[i], input_shape, random=True)

image_data.append(image)

box_data.append(box)

i = (i+1) % n

image_data = np.array(image_data)

box_data = np.array(box_data)

y_true = preprocess_true_boxes(box_data, input_shape, anchors, num_classes)

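yield [image_data, *y_true], np.zeros(batch_size)

Within it, the sample-generating function is: def get_random_data(annotation_line, input_shape, random=True, max_boxes=20, jitter=.3, hue=.1, sat=1.5, val=1.5, proc_img=True):

It reads an image and its boxes and generates a (randomly augmented) sample.

annotation_lines[i]: path x_min1,y_min1,x_max1,y_max1,c1 x_min2,y_min2,x_max2,y_max2,c2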

def get_random_data(annotation_line, input_shape, random=True, max_boxes=20, jitter=.3, hue=.1, sat=1.5, val=1.5, proc_img=True):

'''random preprocessing for real-time data augmentation'''

line = annotation_line.split()

image = Image.open(line[0])

iw, ih = image.size

h, w = input_shape

box = np.array([np.array(list(map(int,box.split(',')))) for box in line[1:]])

# Without augmentation: resize with unchanged aspect ratio so the image fits inside the input size, then pad the rest with gray

if not random:

# resize image

scale = min(w/iw, h/ih)

nw = int(iw*scale)

nh = int(ih*scale)

dx = (w-nw)//2

dy = (h-nh)//2

image_data=0

if proc_img:

image = image.resize((nw,nh), Image.BICUBIC)

new_image = Image.new('RGB', (w,h), (128,128,128))

new_image.paste(image, (dx, dy))

image_data = np.array(new_image)/255.

# scale and shift the boxes with the same transform as the image

box_data = np.zeros((max_boxes,5))

if len(box)>0:

np.random.shuffle(box)

if len(box)>max_boxes: box = box[:max_boxes]

box[:, [0,2]] = box[:, [0,2]]*scale + dx

box[:, [1,3]] = box[:, [1,3]]*scale + dy

box_data[:len(box)] = box

return image_data, box_data

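# Use the jitter parameter to draw a random new_ar and scale, derive the new nh and nw, and resize the original image to nw x nh, i.e. a resize that does not preserve the aspect ratio.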

# resize image

new_ar = w/h * rand(1-jitter,1+jitter)/rand(1-jitter,1+jitter)

scale = rand(.25, 2)

if new_ar < 1:

nh = int(scale*h)

nw = int(nh*new_ar)

else:

nw = int(scale*w)

nh = int(nw/new_ar)

image = image.resize((nw,nh), Image.BICUBIC)

# place image

dx = int(rand(0, w-nw))

dy = int(rand(0, h-nh))

new_image = Image.new('RGB', (w,h), (128,128,128))

new_image.paste(image, (dx, dy))

image = new_image

# flip image or not (random horizontal flip)

flip = rand()<.5

if flip: image = image.transpose(Image.FLIP_LEFT_RIGHT)

# distort image (random hue, saturation and value)

hue = rand(-hue, hue)

sat = rand(1, sat) if rand()<.5 else 1/rand(1, sat)

val = rand(1, val) if rand()<.5 else 1/rand(1, val)

x = rgb_to_hsv(np.array(image)/255.)

x[..., 0] += hue

x[..., 0][x[..., 0]>1] -= 1

x[..., 0][x[..., 0]<0] += 1

x[..., 1] *= sat

x[..., 2] *= val

x[x>1] = 1

x[x<0] = 0

image_data = hsv_to_rgb(x) # numpy array, 0 to 1

# correct boxes: apply the same scaling, offset and flip as the image

box_data = np.zeros((max_boxes,5))

if len(box)>0:

np.random.shuffle(box)

box[:, [0,2]] = box[:, [0,2]]*nw/iw + dx

box[:, [1,3]] = box[:, [1,3]]*nh/ih + dy

if flip: box[:, [0,2]] = w - box[:, [2,0]]

box[:, 0:2][box[:, 0:2]<0] = 0

box[:, 2][box[:, 2]>w] = w

box[:, 3][box[:, 3]>h] = h

box_w = box[:, 2] - box[:, 0]

box_h = box[:, 3] - box[:, 1]

box = box[np.logical_and(box_w>1, box_h>1)] # discard invalid box

if len(box)>max_boxes: box = box[:max_boxes]

box_data[:len(box)] = box

return image_data, box_data

def preprocess_true_boxes(true_boxes, input_shape, anchors, num_classes):

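In preprocess_true_boxes:

Inputs:

true_boxes: ground-truth boxes with shape (?, 20, 5): batch size ?, at most 20 boxes per image, and 5 values per box (x_min, y_min, x_max, y_max and the class index);

input_shape: network input size, e.g. (416, 416);

anchors: list of anchor boxes;

num_classes: number of classes;

Output:

y_true: a list with one array per scale, each of shape (m, grid_h, grid_w, 3, 5+num_classes)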
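The heart of preprocess_true_boxes is deciding which scale, grid cell and anchor slot a ground-truth box is written to. The sketch below shows that decision for one box in plain Python. It is a simplified illustration: the helper names are hypothetical, and the anchor values are assumed to be the usual contents of yolo_anchors.txt, which this article does not list.

```python
# Assumed default anchors (width, height), ordered from small to large.
ANCHORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
           (59, 119), (116, 90), (156, 198), (373, 326)]
ANCHOR_MASK = [[6, 7, 8], [3, 4, 5], [0, 1, 2]]   # anchors used by each scale


def centered_iou(wh_a, wh_b):
    """IoU of two boxes that share the same center, so only sizes matter."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    return inter / (wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter)


def assign(box, input_shape=(416, 416)):
    """Return (layer, row j, column i, anchor slot k) for one corner-format box."""
    x_min, y_min, x_max, y_max = box
    wh = (x_max - x_min, y_max - y_min)
    best = max(range(len(ANCHORS)), key=lambda n: centered_iou(wh, ANCHORS[n]))
    layer = next(l for l, mask in enumerate(ANCHOR_MASK) if best in mask)
    stride = (32, 16, 8)[layer]
    grid_h, grid_w = input_shape[0] // stride, input_shape[1] // stride
    cx = (x_min + x_max) / 2 / input_shape[1]      # normalized center x
    cy = (y_min + y_max) / 2 / input_shape[0]      # normalized center y
    i, j = int(cx * grid_w), int(cy * grid_h)      # grid column and row
    return layer, j, i, ANCHOR_MASK[layer].index(best)


result = assign((200, 120, 324, 210))
print(result)
```

The 124x90 box matches the 116x90 anchor best, so it goes to the 13x13 scale, row 5, column 8, first anchor slot. As in the original code, y_true is indexed as [batch, row, column, anchor].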

def preprocess_true_boxes(true_boxes, input_shape, anchors, num_classes):

'''Preprocess true boxes to training input format

Parameters

----------

true_boxes: array, shape=(m, T, 5)

Absolute x_min, y_min, x_max, y_max, class_id relative to input_shape.

input_shape: array-like, hw, multiples of 32

anchors: array, shape=(N, 2), wh

num_classes: integer

Returns

-------

y_true: list of array, shape like yolo_outputs, xywh are relative value

'''

assert (true_boxes[..., 4]<num_classes).all(), 'class id must be less than num_classes'

num_layers = len(anchors)//3 # default setting

anchor_mask = [[6,7,8], [3,4,5], [0,1,2]] if num_layers==3 else [[3,4,5], [1,2,3]]

true_boxes = np.array(true_boxes, dtype='float32')

input_shape = np.array(input_shape, dtype='int32')

boxes_xy = (true_boxes[..., 0:2] + true_boxes[..., 2:4]) // 2

boxes_wh = true_boxes[..., 2:4] - true_boxes[..., 0:2]  # convert boxes from (top-left, bottom-right) corners to xywh

true_boxes[..., 0:2] = boxes_xy/input_shape[::-1]  # normalize boxes to the range 0-1

true_boxes[..., 2:4] = boxes_wh/input_shape[::-1]

m = true_boxes.shape[0]

grid_shapes = [input_shape//{0:32, 1:16, 2:8}[l] for l in range(num_layers)]

# each array has shape (m, grid_h, grid_w, 3, 5+num_classes)

y_true = [np.zeros((m,grid_shapes[l][0],grid_shapes[l][1],len(anchor_mask[l]),5+num_classes),

dtype='float32') for l in range(num_layers)]

# Expand dim to apply broadcasting.

anchors = np.expand_dims(anchors, 0)

anchor_maxes = anchors / 2.

anchor_mins = -anchor_maxes

valid_mask = boxes_wh[..., 0]>0

for b in range(m):  # for each image in the batch

# Discard zero rows.

wh = boxes_wh[b, valid_mask[b]]

if len(wh)==0: continue

# Expand dim to apply broadcasting.

wh = np.expand_dims(wh, -2)  # insert an axis at position -2: (K, 2) -> (K, 1, 2)

box_maxes = wh / 2.

box_mins = -box_maxes

# For each box, choose the anchor closest to it in size

intersect_mins = np.maximum(box_mins, anchor_mins)  # (K, 9, 2)

intersect_maxes = np.minimum(box_maxes, anchor_maxes)  # (K, 9, 2)

intersect_wh = np.maximum(intersect_maxes - intersect_mins, 0.)  # (K, 9, 2)

intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]  # (K, 9)

box_area = wh[..., 0] * wh[..., 1]  # (K, 1)

anchor_area = anchors[..., 0] * anchors[..., 1]  # (1, 9)

iou = intersect_area / (box_area + anchor_area - intersect_area)  # (K, 9)

# Find best anchor for each true box

best_anchor = np.argmax(iou, axis=-1)  # (K,)

for t, n in enumerate(best_anchor):

for l in range(num_layers):

if n in anchor_mask[l]:

i = np.floor(true_boxes[b,t,0]*grid_shapes[l][1]).astype('int32')

j = np.floor(true_boxes[b,t,1]*grid_shapes[l][0]).astype('int32')

k = anchor_mask[l].index(n)

c = true_boxes[b,t, 4].astype('int32')

y_true[l][b, j, i, k, 0:4] = true_boxes[b,t, 0:4]

y_true[l][b, j, i, k, 4] = 1

y_true[l][b, j, i, k, 5+c] = 1

return y_true

2. Testing:

The YOLO class:

Model path: *.h5

class YOLO(object):

_defaults = {

"model_path": 'model_data/yolo.h5',

"anchors_path": 'model_data/yolo_anchors.txt',

"classes_path": 'model_data/coco_classes.txt',

"score" : 0.3,

"iou" : 0.45,

"model_image_size" : (416, 416),

"gpu_num" : 1,

}

@classmethod

def get_defaults(cls, n):

if n in cls._defaults:

return cls._defaults[n]

else:

return "Unrecognized attribute name '" + n + "'"

def __init__(self, **kwargs):

self.__dict__.update(self._defaults) # set up default values

self.__dict__.update(kwargs) # and update with user overrides

self.class_names = self._get_class()

self.anchors = self._get_anchors()

self.sess = K.get_session()

self.boxes, self.scores, self.classes = self.generate()

def _get_class(self):

classes_path = os.path.expanduser(self.classes_path)

with open(classes_path) as f:

class_names = f.readlines()

class_names = [c.strip() for c in class_names]

return class_names

def _get_anchors(self):

anchors_path = os.path.expanduser(self.anchors_path)

with open(anchors_path) as f:

anchors = f.readline()

anchors = [float(x) for x in anchors.split(',')]

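return np.array(anchors).reshape(-1, 2)

boxes, scores and classes are a further wrapper on top of the model, produced by the function generate(), where:

- boxes: the four coordinates of each box, (top, left, bottom, right);

- scores: the class confidence of each box, combining the box confidence and the class probability;

- classes: the class of each box (after non-maximum suppression);

The generate function: produces boxes, scores, classes

Inputs: none besides self (it reads the attributes set in __init__)

Output:

boxes, scores, classes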

def generate(self):

model_path = os.path.expanduser(self.model_path)

assert model_path.endswith('.h5'), 'Keras model or weights must be a .h5 file.'

# Load model, or construct model and load weights.

num_anchors = len(self.anchors)

num_classes = len(self.class_names)

is_tiny_version = num_anchors==6 # default setting

try:

self.yolo_model = load_model(model_path, compile=False)

except:

self.yolo_model = tiny_yolo_body(Input(shape=(None,None,3)), num_anchors//2, num_classes) \

if is_tiny_version else yolo_body(Input(shape=(None,None,3)), num_anchors//3, num_classes)

self.yolo_model.load_weights(self.model_path) # make sure model, anchors and classes match

else:

assert self.yolo_model.layers[-1].output_shape[-1] == \

num_anchors/len(self.yolo_model.output) * (num_classes + 5), \

'Mismatch between model and given anchor and class sizes'

print('{} model, anchors, and classes loaded.'.format(model_path))

# Generate colors for drawing bounding boxes.

hsv_tuples = [(x / len(self.class_names), 1., 1.)

for x in range(len(self.class_names))]

self.colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))

self.colors = list(

map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)),

self.colors))

np.random.seed(10101) # Fixed seed for consistent colors across runs.

np.random.shuffle(self.colors) # Shuffle colors to decorrelate adjacent classes.

np.random.seed(None) # Reset seed to default.

# Generate output tensor targets for filtered bounding boxes.

self.input_image_shape = K.placeholder(shape=(2, ))

if self.gpu_num>=2:

self.yolo_model = multi_gpu_model(self.yolo_model, gpus=self.gpu_num)

boxes, scores, classes = yolo_eval(self.yolo_model.output, self.anchors,

len(self.class_names), self.input_image_shape,

score_threshold=self.score, iou_threshold=self.iou)

return boxes, scores, classes

where:

def yolo_eval(yolo_outputs, anchors, num_classes, image_shape, max_boxes=20,score_threshold=.6,iou_threshold=.5):

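Inputs:

yolo_outputs: the model outputs, e.g. (?, 13, 13, 3*(5+num_classes)) for the coarsest scale;

anchors: the values of the 9 anchor boxes;

num_classes: number of classes;

image_shape: a TF placeholder holding the size of the original image as (height, width);

max_boxes: maximum number of boxes that non-maximum suppression keeps for each class, 20 by default;

score_threshold: box score threshold; boxes scoring below it are removed. Lower it to get more boxes, raise it to get fewer;

iou_threshold: IoU threshold for boxes of the same class; overlapping boxes above it are removed. Raise it when many objects overlap, lower it when few do;

Output: boxes, scores, classes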
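The per-class filtering step can be sketched without TensorFlow. The function below is a plain greedy non-maximum suppression written for illustration; it is not the implementation behind tf.image.non_max_suppression, and the helper names and sample boxes are hypothetical. Boxes use the (y_min, x_min, y_max, x_max) order produced by yolo_correct_boxes.

```python
def iou(a, b):
    """IoU of two boxes given as (y_min, x_min, y_max, x_max)."""
    ih = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iw = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ih * iw
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def nms(boxes, scores, max_boxes=20, score_threshold=0.6, iou_threshold=0.5):
    """Indices of the boxes of one class kept after score filtering and greedy NMS."""
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    for i in candidates:
        if len(keep) == max_boxes:
            break
        # Keep the box only if it does not overlap an already kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 10, 9)]
scores = [0.9, 0.8, 0.7, 0.3]
kept = nms(boxes, scores)
print(kept)
```

The second box overlaps the first with an IoU of about 0.68 and is suppressed, and the last box falls below the score threshold, so only boxes 0 and 2 remain.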

def yolo_eval(yolo_outputs,

anchors,

num_classes,

image_shape,

max_boxes=20,

score_threshold=.6,

iou_threshold=.5):

"""Evaluate YOLO model on given input and return filtered boxes."""

num_layers = len(yolo_outputs)

anchor_mask = [[6,7,8], [3,4,5], [0,1,2]] if num_layers==3 else [[3,4,5], [1,2,3]] # default setting

input_shape = K.shape(yolo_outputs[0])[1:3] * 32

boxes = []

box_scores = []

for l in range(num_layers):

_boxes, _box_scores = yolo_boxes_and_scores(yolo_outputs[l],

anchors[anchor_mask[l]], num_classes, input_shape, image_shape)

boxes.append(_boxes)

box_scores.append(_box_scores)

boxes = K.concatenate(boxes, axis=0)

box_scores = K.concatenate(box_scores, axis=0)

mask = box_scores >= score_threshold

max_boxes_tensor = K.constant(max_boxes, dtype='int32')

boxes_ = []

scores_ = []

classes_ = []

for c in range(num_classes):

# TODO: use keras backend instead of tf.

class_boxes = tf.boolean_mask(boxes, mask[:, c])

class_box_scores = tf.boolean_mask(box_scores[:, c], mask[:, c])

nms_index = tf.image.non_max_suppression(

class_boxes, class_box_scores, max_boxes_tensor, iou_threshold=iou_threshold)

class_boxes = K.gather(class_boxes, nms_index)

class_box_scores = K.gather(class_box_scores, nms_index)

classes = K.ones_like(class_box_scores, 'int32') * c

boxes_.append(class_boxes)

scores_.append(class_box_scores)

classes_.append(classes)

boxes_ = K.concatenate(boxes_, axis=0)

scores_ = K.concatenate(scores_, axis=0)

classes_ = K.concatenate(classes_, axis=0)

return boxes_, scores_, classes_

def yolo_boxes_and_scores(feats, anchors, num_classes, input_shape, image_shape):

'''Process Conv layer output'''

box_xy, box_wh, box_confidence, box_class_probs = yolo_head(feats,

anchors, num_classes, input_shape)

boxes = yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape)

boxes = K.reshape(boxes, [-1, 4])

box_scores = box_confidence * box_class_probs

box_scores = K.reshape(box_scores, [-1, num_classes])

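return boxes, box_scores

The generated boxes are rescaled by the image's resize ratio back to the size of the original image and converted to top-left and bottom-right corner coordinates: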

def yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape):

'''Get corrected boxes'''

box_yx = box_xy[..., ::-1]

box_hw = box_wh[..., ::-1]

input_shape = K.cast(input_shape, K.dtype(box_yx))

image_shape = K.cast(image_shape, K.dtype(box_yx))

new_shape = K.round(image_shape * K.min(input_shape/image_shape))

offset = (input_shape-new_shape)/2./input_shape

scale = input_shape/new_shape

box_yx = (box_yx - offset) * scale

box_hw *= scale

box_mins = box_yx - (box_hw / 2.)

box_maxes = box_yx + (box_hw / 2.)

boxes = K.concatenate([

box_mins[..., 0:1], # y_min

box_mins[..., 1:2], # x_min

box_maxes[..., 0:1], # y_max

box_maxes[..., 1:2] # x_max

])

# Scale boxes back to original image shape.

boxes *= K.concatenate([image_shape, image_shape])

return boxes

Detecting an image:

Input: a PIL image

def detect_image(self, image):

start = timer()

# the model input size must be a multiple of 32

if self.model_image_size != (None, None):

assert self.model_image_size[0]%32 == 0, 'Multiples of 32 required'

assert self.model_image_size[1]%32 == 0, 'Multiples of 32 required'

boxed_image = letterbox_image(image, tuple(reversed(self.model_image_size)))

else:

new_image_size = (image.width - (image.width % 32),

image.height - (image.height % 32))

boxed_image = letterbox_image(image, new_image_size)

image_data = np.array(boxed_image, dtype='float32')

print(image_data.shape)

image_data /= 255.

image_data = np.expand_dims(image_data, 0) # Add batch dimension.

# run the computation graph

out_boxes, out_scores, out_classes = self.sess.run(

[self.boxes, self.scores, self.classes],

feed_dict={

self.yolo_model.input: image_data,

self.input_image_shape: [image.size[1], image.size[0]],

K.learning_phase(): 0

})

print('Found {} boxes for {}'.format(len(out_boxes), 'img'))

font = ImageFont.truetype(font='font/FiraMono-Medium.otf',

size=np.floor(3e-2 * image.size[1] + 0.5).astype('int32'))

thickness = (image.size[0] + image.size[1]) // 300

for i, c in reversed(list(enumerate(out_classes))):

predicted_class = self.class_names[c]  # class name

box = out_boxes[i]  # bounding box

score = out_scores[i]  # score

label = '{} {:.2f}'.format(predicted_class, score)

draw = ImageDraw.Draw(image)

label_size = draw.textsize(label, font)

top, left, bottom, right = box

top = max(0, np.floor(top + 0.5).astype('int32'))

left = max(0, np.floor(left + 0.5).astype('int32'))

bottom = min(image.size[1], np.floor(bottom + 0.5).astype('int32'))

right = min(image.size[0], np.floor(right + 0.5).astype('int32'))

print(label, (left, top), (right, bottom))

if top - label_size[1] >= 0:

text_origin = np.array([left, top - label_size[1]])

else:

text_origin = np.array([left, top + 1])

# My kingdom for a good redistributable image drawing library.

for i in range(thickness):

draw.rectangle(

[left + i, top + i, right - i, bottom - i],

outline=self.colors[c])

draw.rectangle(

[tuple(text_origin), tuple(text_origin + label_size)],

fill=self.colors[c])

draw.text(text_origin, label, fill=(0, 0, 0), font=font)

del draw

end = timer()

print(end - start)

return image

# Resize with unchanged aspect ratio so the whole image fits inside the target size, then pad the rest with gray

def letterbox_image(image, size):

'''resize image with unchanged aspect ratio using padding'''

iw, ih = image.size

w, h = size

scale = min(w/iw, h/ih)

nw = int(iw*scale)

nh = int(ih*scale)

image = image.resize((nw,nh), Image.BICUBIC)

new_image = Image.new('RGB', size, (128,128,128))

new_image.paste(image, ((w-nw)//2, (h-nh)//2))

return new_image
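The geometry of the letterbox step, and the inverse mapping that yolo_correct_boxes has to undo, can be checked with a few lines of arithmetic. The helper names `letterbox_params` and `to_original` and the 832x624 sample image are assumptions made for this sketch; no image library is needed.

```python
def letterbox_params(image_size, target_size):
    """Scale, resized size and padding offsets used when letterboxing (sizes are (w, h))."""
    iw, ih = image_size
    w, h = target_size
    scale = min(w / iw, h / ih)              # keep the aspect ratio, fit inside the target
    nw, nh = int(iw * scale), int(ih * scale)
    dx, dy = (w - nw) // 2, (h - nh) // 2    # gray padding on the left and on the top
    return scale, nw, nh, dx, dy


def to_original(point, image_size, target_size):
    """Map a point from letterboxed coordinates back to the original image."""
    scale, _, _, dx, dy = letterbox_params(image_size, target_size)
    x, y = point
    return (x - dx) / scale, (y - dy) / scale


params = letterbox_params((832, 624), (416, 416))
center = to_original((208, 208), (832, 624), (416, 416))
print(params, center)
```

An 832x624 image is scaled by 0.5 to 416x312 and padded with 52 gray rows above and below, and the center of the letterboxed image maps back to the center of the original.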
