1. Introduction

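ncnn is a high-performance neural network inference (forward computation) framework designed specifically for mobile and embedded devices. It was developed by Tencent Youtu Lab to provide efficient neural network inference, especially in resource-constrained environments such as smartphones and embedded systems.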

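ncnn is widely used in deep learning applications on mobile and embedded devices, including but not limited to image classification, object detection, semantic segmentation, face recognition, and image generation and processing.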

2. Writing the CMakeLists.txt for ncnn

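Change the ncnn header directory, library directory and static library path to wherever your source build installed them. If you use a prebuilt ncnn package, point these paths at it directly.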

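```cmake
# OpenCV (defines OpenCV_INCLUDE_DIRS and OpenCV_LIBS)
find_package(OpenCV REQUIRED)

# ncnn headers (the directory containing net.h) and OpenCV headers
include_directories(/home/ubuntu/ncnn/build/install/include/ncnn ${OpenCV_INCLUDE_DIRS})

# ncnn library directory
link_directories(/home/ubuntu/ncnn/build/install/lib)

# Build the executable
add_executable(ncnnTest main.cpp)

# Link the ncnn static library (once) and OpenCV
target_link_libraries(ncnnTest /home/ubuntu/ncnn/build/install/lib/libncnn.a ${OpenCV_LIBS})

# ncnn builds with OpenMP by default (NCNN_OPENMP=ON); a static libncnn.a then also needs OpenMP
find_package(OpenMP)
if(OpenMP_CXX_FOUND)
    target_link_libraries(ncnnTest OpenMP::OpenMP_CXX)
endif()
```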

3. Model inference

3.1 Include the headers

```cpp
// OpenCV for image processing and display, ncnn's net.h for loading the network,
// and iostream for console output
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/opencv.hpp>
#include "net.h"
#include <iostream>
```

3.2 Data preprocessing

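```cpp
ncnn::Mat in = ncnn::Mat::from_pixels_resize(original_img.data, ncnn::Mat::PIXEL_BGR2RGB, w, h, w, h);
// ncnn computes (x - mean) * norm, so norm is 1 / (255 * std), not std itself
const float mean_vals[3] = {180.795f, 97.155f, 57.12f};
const float norm_vals[3] = {1.f / (0.127f * 255.f), 1.f / (0.079f * 255.f), 1.f / (0.043f * 255.f)};
in.substract_mean_normalize(mean_vals, norm_vals);
```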

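(1) Mean subtraction and division by the standard deviation. In PyTorch the input is first scaled to the range 0 to 1 and then normalized with

mean = (0.709, 0.381, 0.224)

std = (0.127, 0.079, 0.043)

OpenCV reads the image as uint8 values in the range 0 to 255, so the mean must be multiplied by 255, giving (180.795, 97.155, 57.12). The std must be adjusted as well. ncnn's substract_mean_normalize (the spelling is ncnn's own) computes (x - mean) * norm, so it multiplies rather than divides. Because (x / 255 - mean) / std = (x - 255 * mean) * 1 / (255 * std), each norm value is 1 / (255 * std), approximately (0.03088, 0.04964, 0.09120), and not std itself.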
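The sketch below checks this conversion. It uses standard C++ only, and the struct and function names are illustrative, not part of ncnn. It turns PyTorch's per-channel mean/std (defined for inputs in 0 to 1) into ncnn's mean/norm (defined for raw 0 to 255 values, applied as (x - mean) * norm). It then compares both formulas on sample pixel values.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Per-channel constants in the form ncnn's substract_mean_normalize expects:
// y = (x - mean) * norm, where x is an 8-bit pixel value in [0, 255].
struct NcnnNorm {
    std::array<float, 3> mean;
    std::array<float, 3> norm;
};

// Convert PyTorch-style mean/std (for inputs scaled to [0, 1]) into
// ncnn-style mean/norm (for raw 0..255 inputs):
//   (x / 255 - m) / s == (x - 255 * m) * (1 / (255 * s))
NcnnNorm to_ncnn_norm(const std::array<float, 3>& m, const std::array<float, 3>& s) {
    NcnnNorm n{};
    for (int i = 0; i < 3; ++i) {
        assert(s[i] > 0.f);                // a zero std has no reciprocal
        n.mean[i] = m[i] * 255.f;          // mean rescaled to 0..255
        n.norm[i] = 1.f / (s[i] * 255.f);  // reciprocal, because ncnn multiplies
    }
    return n;
}

// PyTorch-style normalization of a single 8-bit value x.
float torch_normalize(float x, float m, float s) { return (x / 255.f - m) / s; }

// ncnn-style normalization of a single 8-bit value x.
float ncnn_normalize(float x, float mean, float norm) { return (x - mean) * norm; }

// True when both formulas agree on pixel value x for channel i, within tol.
bool formulas_agree(float x, int i, const std::array<float, 3>& m,
                    const std::array<float, 3>& s, const NcnnNorm& n, float tol = 1e-4f) {
    return std::fabs(torch_normalize(x, m[i], s[i]) - ncnn_normalize(x, n.mean[i], n.norm[i])) < tol;
}
```

With this article's values, `n.mean.data()` and `n.norm.data()` could be passed directly to `in.substract_mean_normalize(...)`, which takes two `const float*` arrays.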

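(2) Pixel type: choose the enum value that matches your data. cv::imread returns pixels in BGR order and this model expects RGB, so this example uses PIXEL_BGR2RGB.

Of the four size arguments:

1) The first w, h are the width and height of the input image.

2) The second w, h are the target width and height, so the same call can also resize the image (for example to 448×448). This model takes the image at its original size, so the first pair is simply repeated. All four arguments are still required. When no resize is needed, ncnn::Mat::from_pixels(pixels, type, w, h) performs the same conversion without a target size.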
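When the source and target sizes are equal, this call only reorders the data. OpenCV's interleaved BGR bytes (HWC) become ncnn's planar float channels (CHW) in RGB order. The plain C++ function below illustrates that reordering only. Its name is hypothetical, and it is not ncnn's implementation, which also pads the channel stride. Like the six-argument call, it assumes tightly packed rows, which holds for an image freshly loaded with cv::imread.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustration only: interleaved BGR bytes (HWC) -> planar RGB floats (CHW),
// channel 0 = R, channel 1 = G, channel 2 = B. No resize, no normalization.
std::vector<float> bgr_hwc_to_rgb_chw(const std::vector<std::uint8_t>& bgr, int w, int h) {
    assert(static_cast<std::size_t>(w) * h * 3 == bgr.size());
    const std::size_t plane = static_cast<std::size_t>(w) * h;  // pixels per channel
    std::vector<float> chw(3 * plane);
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const std::size_t dst = static_cast<std::size_t>(y) * w + x;  // index within a plane
            const std::size_t src = dst * 3;                              // first byte of the pixel
            chw[0 * plane + dst] = bgr[src + 2];  // R is the third byte in BGR
            chw[1 * plane + dst] = bgr[src + 1];  // G stays in the middle
            chw[2 * plane + dst] = bgr[src + 0];  // B is the first byte
        }
    }
    return chw;
}
```

For a 2×1 image with BGR pixels (10, 20, 30) and (40, 50, 60), the result holds the R plane {30, 60}, then the G plane {20, 50}, then the B plane {10, 40}.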

```cpp
// ncnn::Mat::PixelType, declared in ncnn's mat.h
enum PixelType
{
    PIXEL_CONVERT_SHIFT = 16,
    PIXEL_FORMAT_MASK = 0x0000ffff,
    PIXEL_CONVERT_MASK = 0xffff0000,

    PIXEL_RGB = 1,
    PIXEL_BGR = 2,
    PIXEL_GRAY = 3,
    PIXEL_RGBA = 4,
    PIXEL_BGRA = 5,

    PIXEL_RGB2BGR = PIXEL_RGB | (PIXEL_BGR << PIXEL_CONVERT_SHIFT),
    PIXEL_RGB2GRAY = PIXEL_RGB | (PIXEL_GRAY << PIXEL_CONVERT_SHIFT),
    PIXEL_RGB2RGBA = PIXEL_RGB | (PIXEL_RGBA << PIXEL_CONVERT_SHIFT),
    PIXEL_RGB2BGRA = PIXEL_RGB | (PIXEL_BGRA << PIXEL_CONVERT_SHIFT),

    PIXEL_BGR2RGB = PIXEL_BGR | (PIXEL_RGB << PIXEL_CONVERT_SHIFT),
    PIXEL_BGR2GRAY = PIXEL_BGR | (PIXEL_GRAY << PIXEL_CONVERT_SHIFT),
    PIXEL_BGR2RGBA = PIXEL_BGR | (PIXEL_RGBA << PIXEL_CONVERT_SHIFT),
    PIXEL_BGR2BGRA = PIXEL_BGR | (PIXEL_BGRA << PIXEL_CONVERT_SHIFT),

    PIXEL_GRAY2RGB = PIXEL_GRAY | (PIXEL_RGB << PIXEL_CONVERT_SHIFT),
    PIXEL_GRAY2BGR = PIXEL_GRAY | (PIXEL_BGR << PIXEL_CONVERT_SHIFT),
    PIXEL_GRAY2RGBA = PIXEL_GRAY | (PIXEL_RGBA << PIXEL_CONVERT_SHIFT),
    PIXEL_GRAY2BGRA = PIXEL_GRAY | (PIXEL_BGRA << PIXEL_CONVERT_SHIFT),

    PIXEL_RGBA2RGB = PIXEL_RGBA | (PIXEL_RGB << PIXEL_CONVERT_SHIFT),
    PIXEL_RGBA2BGR = PIXEL_RGBA | (PIXEL_BGR << PIXEL_CONVERT_SHIFT),
    PIXEL_RGBA2GRAY = PIXEL_RGBA | (PIXEL_GRAY << PIXEL_CONVERT_SHIFT),
    PIXEL_RGBA2BGRA = PIXEL_RGBA | (PIXEL_BGRA << PIXEL_CONVERT_SHIFT),

    PIXEL_BGRA2RGB = PIXEL_BGRA | (PIXEL_RGB << PIXEL_CONVERT_SHIFT),
    PIXEL_BGRA2BGR = PIXEL_BGRA | (PIXEL_BGR << PIXEL_CONVERT_SHIFT),
    PIXEL_BGRA2GRAY = PIXEL_BGRA | (PIXEL_GRAY << PIXEL_CONVERT_SHIFT),
    PIXEL_BGRA2RGBA = PIXEL_BGRA | (PIXEL_RGBA << PIXEL_CONVERT_SHIFT),
};
```
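Each conversion constant packs two formats into one integer. The low 16 bits (PIXEL_FORMAT_MASK) hold the source layout, and the bits above PIXEL_CONVERT_SHIFT hold the target layout. Below is a small constexpr sketch that unpacks these fields, using values copied from the enum above. The helper names are hypothetical.

```cpp
// Values copied from the PixelType enum above (only the ones used here).
constexpr int PIXEL_CONVERT_SHIFT = 16;
constexpr int PIXEL_FORMAT_MASK = 0x0000ffff;
constexpr int PIXEL_RGB = 1;
constexpr int PIXEL_BGR = 2;
constexpr int PIXEL_GRAY = 3;
constexpr int PIXEL_BGR2RGB = PIXEL_BGR | (PIXEL_RGB << PIXEL_CONVERT_SHIFT);
constexpr int PIXEL_BGR2GRAY = PIXEL_BGR | (PIXEL_GRAY << PIXEL_CONVERT_SHIFT);

// Hypothetical helper: low 16 bits = layout of the source pixels.
constexpr int source_format(int type) { return type & PIXEL_FORMAT_MASK; }

// Hypothetical helper: bits above PIXEL_CONVERT_SHIFT = layout to convert to
// (0 for a plain format such as PIXEL_BGR, which encodes no conversion).
constexpr int target_format(int type) {
    return static_cast<int>(static_cast<unsigned>(type) >> PIXEL_CONVERT_SHIFT);
}
```

For example, PIXEL_BGR2RGB = 2 | (1 << 16) = 65538. Its low half is PIXEL_BGR (2) and its high half is PIXEL_RGB (1).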

```cpp
const char* img_path = "/home/ubuntu/ncnn_test/img/01_test.tif";
const char* roi_mask_path = "/home/ubuntu/ncnn_test/img/01_test_mask.png";
const char* param_path = "/home/ubuntu/ncnn_test/model/unet.param";
const char* bin_path = "/home/ubuntu/ncnn_test/model/unet.bin";

// Load the ROI mask as a grayscale image
cv::Mat roi_img = cv::imread(roi_mask_path, cv::IMREAD_GRAYSCALE);
if (roi_img.empty()) {
    std::cout << "Image not found: " << roi_mask_path << std::endl;
    return -1;
}

// Load the original color image; print an error and exit if it cannot be read
cv::Mat original_img = cv::imread(img_path);
if (original_img.empty()) {
    std::cout << "Image not found: " << img_path << std::endl;
    return -1;
}

// Convert the image to ncnn::Mat (BGR -> RGB), then subtract the mean and normalize; in is the network input
int w = original_img.cols;
int h = original_img.rows;
ncnn::Mat in = ncnn::Mat::from_pixels_resize(original_img.data, ncnn::Mat::PIXEL_BGR2RGB, w, h, w, h);
const float mean_vals[3] = {180.795f, 97.155f, 57.12f};
const float norm_vals[3] = {1.f / (0.127f * 255.f), 1.f / (0.079f * 255.f), 1.f / (0.043f * 255.f)};
in.substract_mean_normalize(mean_vals, norm_vals);
```
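ncnn::Mat stores each channel at a stride called cstep. This stride is rounded up so that every channel starts on a 16-byte boundary. As a result, the channels of `in`, and of the network output, are not necessarily back to back. That is why per-channel access goes through `channel(c)` and why the output is flattened with `reshape` before being read as one array. The standalone sketch below reproduces that stride arithmetic for a 3-D Mat. It assumes the rounding matches ncnn's `alignSize` helper as used when a 3-D Mat is created, so check `mat.h` in your ncnn version. The function names are hypothetical.

```cpp
#include <cstddef>

// Round sz up to a multiple of n (n must be a power of two).
constexpr std::size_t align_size(std::size_t sz, std::size_t n) { return (sz + n - 1) & ~(n - 1); }

// Elements between the starts of consecutive channels of a w x h Mat
// whose elements are elemsize bytes (4 for float).
constexpr std::size_t channel_step(int w, int h, std::size_t elemsize) {
    return align_size(static_cast<std::size_t>(w) * h * elemsize, 16) / elemsize;
}

// Element offset of (c, y, x) inside the padded buffer.
constexpr std::size_t padded_offset(int c, int y, int x, int w, int h, std::size_t elemsize) {
    return static_cast<std::size_t>(c) * channel_step(w, h, elemsize) +
           static_cast<std::size_t>(y) * w + x;
}
```

For a 3×3 float channel, 9 × 4 = 36 bytes round up to 48, so channel 1 starts at element 12 rather than 9. A 4×4 float channel (64 bytes) needs no padding.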

3.3 Running inference

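in is the preprocessed input and out is the model output. The input and output node names can be looked up in the ONNX model, because the ONNX-to-ncnn conversion keeps the node names. They also appear as blob names in the generated .param file.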
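The blob names can also be read from the plain-text .param file. After the magic number 7767517 and a line with the layer and blob counts, each line describes one layer. Its fields are: type, name, number of inputs, number of outputs, the input blob names, the output blob names, and then k=v parameters. The sketch below uses standard C++ only, and its helper is hypothetical. It lists the outputs of Input layers as network inputs. As a heuristic, it treats every blob that no layer consumes as a network output.

```cpp
#include <cassert>
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper result: candidate input/output blob names of a network.
struct BlobNames {
    std::vector<std::string> inputs;   // outputs of "Input" layers
    std::vector<std::string> outputs;  // blobs produced but never consumed (heuristic)
};

// Minimal reader for the plain-text .param format:
//   line 1: magic number 7767517
//   line 2: <layer count> <blob count>
//   then one line per layer:
//   <type> <name> <input count> <output count> <inputs...> <outputs...> [k=v ...]
BlobNames list_blob_names(const std::string& param_text) {
    std::istringstream in(param_text);
    int magic = 0, layer_count = 0, blob_count = 0;
    in >> magic >> layer_count >> blob_count;
    assert(magic == 7767517);  // otherwise this is not a text .param file

    BlobNames names;
    std::vector<std::string> produced;
    std::set<std::string> consumed;
    std::string line;
    std::getline(in, line);  // skip the rest of the counts line
    for (int i = 0; i < layer_count && std::getline(in, line); ++i) {
        std::istringstream ls(line);
        std::string type, name;
        int n_in = 0, n_out = 0;
        ls >> type >> name >> n_in >> n_out;
        for (int k = 0; k < n_in; ++k) {
            std::string blob;
            ls >> blob;
            consumed.insert(blob);
        }
        for (int k = 0; k < n_out; ++k) {
            std::string blob;
            ls >> blob;
            produced.push_back(blob);
            if (type == "Input") names.inputs.push_back(blob);
        }
        // Any remaining k=v tokens are layer parameters and are ignored here.
    }
    for (const std::string& blob : produced)
        if (consumed.count(blob) == 0) names.outputs.push_back(blob);
    return names;
}
```

For example, consider a made-up three-layer file whose input blob is `input.1` and whose last layer writes `437`. The function would report `input.1` as the input and `437` as the output.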

```cpp
// Create an extractor and run inference
// (net is an ncnn::Net that has already loaded unet.param and unet.bin, as in the complete code)
ncnn::Extractor ex = net.create_extractor();
ncnn::Mat out;
ex.input("input.1", in);
if (ex.extract("437", out) != 0) {
    std::cerr << "Error extracting output" << std::endl;
    return -1;
}

// Output shape; ncnn::Mat has no batch dimension (d is 1 for a 3-D blob)
// std::cout << out.d << " " << out.c << " " << out.h << " " << out.w << std::endl;

// Optionally print every output value
/*
for (int c = 0; c < out.c; c++) {
    const float* data = out.channel(c);  // each channel starts at its own (padded) offset
    for (int y = 0; y < out.h; y++) {
        for (int x = 0; x < out.w; x++) {
            float value = data[y * out.w + x];
            std::cout << "Output [" << c << "][" << y << "][" << x << "] = " << value << std::endl;
        }
    }
}
*/
```

3.4 Data post-processing

Adapt this step to your own post-processing.
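The two-channel comparison used here is a per-pixel argmax restricted to the ROI. If you adapt it to a model with more classes, the sketch below shows one way to generalize it. It works on a contiguous c×h×w float buffer, such as the data of the flattened output. The function name and signature are hypothetical, and ties resolve to the lower class index, as in the two-channel version.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Per-pixel argmax over a contiguous (C, H, W) score buffer.
// Pixels whose ROI mask value is 0 are forced to class 0 (background).
// Returns one class index per pixel in row-major order.
std::vector<int> argmax_with_roi(const std::vector<float>& scores, int c, int h, int w,
                                 const std::vector<std::uint8_t>& roi) {
    const std::size_t plane = static_cast<std::size_t>(h) * w;
    assert(scores.size() == plane * c);
    assert(roi.size() == plane);
    std::vector<int> labels(plane, 0);
    for (std::size_t i = 0; i < plane; ++i) {
        if (roi[i] == 0) continue;  // outside the ROI: keep background
        int best = 0;
        for (int k = 1; k < c; ++k)
            if (scores[k * plane + i] > scores[best * plane + i]) best = k;  // strict: ties keep the lower class
        labels[i] = best;
    }
    return labels;
}
```

With c = 2, label 1 corresponds to the white (255) pixels produced by the loop in this section.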

ncnn::Mat out_flattened = out.reshape(out.w * out.h * out.c);

float* output_data = (float*)out_flattened.data;

cv::Mat result_img = cv::Mat::zeros(roi_img.rows, roi_img.cols, CV_8UC1);

int index = 0;

for (int row = 0; row < roi_img.rows; row++) {

for (int col = 0; col < roi_img.cols; col++) {

float channel_1 = *(output_data + index);

float channel_2 = *(output_data + index + roi_img.rows * roi_img.cols);

uchar roi_pixel = roi_img.at<uchar>(row, col);

// 如果第一个通道值小于第二个通道值,并且 ROI 像素不是黑色

if (channel_1 < channel_2 && roi_pixel != 0) {

result_img.at<uchar>(row, col) = 255; // 设置为白色

} else {

result_img.at<uchar>(row, col) = 0; // 设置为黑色

}

index++;

}

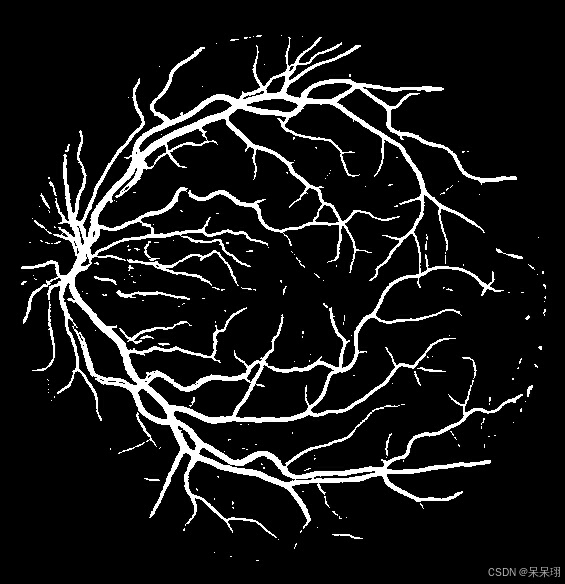

}4.完整代码和推理结果

```cpp
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/opencv.hpp>
#include "net.h"
#include <cstdio>
#include <iostream>

using namespace std;

int main()
{
    // Paths of the input image, the ROI mask, the ncnn model structure (.param) and the weights (.bin)
    const char* img_path = "/home/ubuntu/ncnn_test/img/01_test.tif";
    const char* roi_mask_path = "/home/ubuntu/ncnn_test/img/01_test_mask.png";
    const char* param_path = "/home/ubuntu/ncnn_test/model/unet.param";
    const char* bin_path = "/home/ubuntu/ncnn_test/model/unet.bin";

    // Load the ROI mask as a grayscale image
    cv::Mat roi_img = cv::imread(roi_mask_path, cv::IMREAD_GRAYSCALE);
    if (roi_img.empty()) {
        cout << "Image not found: " << roi_mask_path << endl;
        return -1;
    }

    // Load the original color image; print an error and exit if it cannot be read
    cv::Mat original_img = cv::imread(img_path);
    if (original_img.empty()) {
        cout << "Image not found: " << img_path << endl;
        return -1;
    }

    int w = original_img.cols;
    int h = original_img.rows;

    // Convert to ncnn::Mat (BGR -> RGB), subtract the mean and normalize; in is the network input.
    // ncnn computes (x - mean) * norm, so norm = 1 / (255 * std).
    ncnn::Mat in = ncnn::Mat::from_pixels_resize(original_img.data, ncnn::Mat::PIXEL_BGR2RGB, w, h, w, h);
    const float mean_vals[3] = {180.795f, 97.155f, 57.12f};
    const float norm_vals[3] = {1.f / (0.127f * 255.f), 1.f / (0.079f * 255.f), 1.f / (0.043f * 255.f)};
    in.substract_mean_normalize(mean_vals, norm_vals);

    ncnn::Net net;
    // Load the model structure
    if (net.load_param(param_path)) {
        fprintf(stderr, "Load param failed.\n");
        return -1;
    }
    // Load the model weights
    if (net.load_model(bin_path)) {
        fprintf(stderr, "Load model failed.\n");
        return -1;
    }

    // Create an extractor and run inference
    ncnn::Extractor ex = net.create_extractor();
    ncnn::Mat out;
    ex.input("input.1", in);
    if (ex.extract("437", out) != 0) {
        fprintf(stderr, "Extract failed.\n");
        return -1;
    }

    // Post-processing assumes a 2-channel output with the same size as the ROI mask
    if (out.c != 2 || out.h != roi_img.rows || out.w != roi_img.cols) {
        fprintf(stderr, "Unexpected output shape.\n");
        return -1;
    }

    // Flatten c x h x w into one contiguous array; reshape also drops per-channel padding
    ncnn::Mat out_flattened = out.reshape(out.w * out.h * out.c);
    const float* output_data = (const float*)out_flattened.data;

    cv::Mat result_img = cv::Mat::zeros(roi_img.rows, roi_img.cols, CV_8UC1);
    int index = 0;
    for (int row = 0; row < roi_img.rows; row++) {
        for (int col = 0; col < roi_img.cols; col++) {
            float channel_1 = *(output_data + index);
            float channel_2 = *(output_data + index + roi_img.rows * roi_img.cols);
            uchar roi_pixel = roi_img.at<uchar>(row, col);
            // If the first channel's value is less than the second's and the ROI pixel is not black
            if (channel_1 < channel_2 && roi_pixel != 0) {
                result_img.at<uchar>(row, col) = 255; // white
            } else {
                result_img.at<uchar>(row, col) = 0;   // black
            }
            index++;
        }
    }

    // Save the result image
    cv::imwrite("result.jpg", result_img);
    return 0;
}
```
