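ncnn is Tencent's open-source neural network inference framework. Because it is an inference-only framework, you cannot create and train networks with it the way you can with TF (TensorFlow). Its main use is running on edge devices: it parses a network, executes inference and outputs the results. It can run networks produced by many different frameworks once they are converted to its own format.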

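The official repository has a detailed introduction:

https://github.com/Tencent/ncnn.git

Below, I set up the environment on an ordinary PC running Ubuntu 18.04 and give ncnn a run.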

1. Download the code:

git clone https://github.com/Tencent/ncnn.git

2. Configure the environment:

First, install the dependencies:

sudo apt-get install libprotobuf-dev protobuf-compiler libopencv-dev cmake

cd ncnn

mkdir build

cd build

#cmake -DCMAKE_BUILD_TYPE=relwithdebinfo -DNCNN_OPENMP=OFF -DNCNN_THREADS=OFF -DNCNN_RUNTIME_CPU=OFF -DNCNN_RVV=ON -DNCNN_SIMPLEOCV=ON -DNCNN_BUILD_EXAMPLES=ON ..

cmake ../

The installed protobuf is version 3.0.0, which works with the configuration above.

3. Build

make -j4

(base) caozilong@caozilong-Vostro-3268:~/Workspace/ncnn/ncnn/build$ make -j4

[ 1%] Built target ncnnmerge

[ 1%] Built target ncnn-generate-spirv

[ 1%] Built target mxnet2ncnn

[ 2%] Built target caffe2ncnn

[ 2%] Built target darknet2ncnn

[ 3%] Built target onnx2ncnn

[ 87%] Built target ncnn

[ 87%] Built target rvm

[ 87%] Built target scrfd_crowdhuman

[ 88%] Built target yolov4

[ 88%] Built target benchncnn

[ 88%] Built target simplepose

[ 88%] Built target yolov3

[ 89%] Built target scrfd

[ 90%] Built target nanodet

[ 91%] Built target shufflenetv2

[ 92%] Built target squeezenet

[ 92%] Built target squeezenet_c_api

[ 92%] Built target yolov5

[ 93%] Built target yolox

[ 94%] Built target rfcn

[ 95%] Built target yolov2

[ 96%] Built target mobilenetv2ssdlite

[ 97%] Built target squeezenetssd

[ 97%] Built target mobilenetssd

[ 98%] Built target peleenetssd_seg

[ 99%] Built target fasterrcnn

[ 99%] Built target retinaface

[ 99%] Built target yolact

[100%] Built target ncnn2mem

[100%] Built target ncnnoptimize

[100%] Built target ncnn2int8

[100%] Built target ncnn2table

(base) caozilong@caozilong-Vostro-3268:~/Workspace/ncnn/ncnn/build$

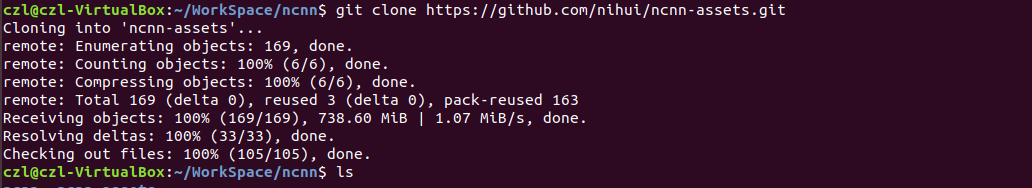

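4. Download the model files.

ncnn is only an inference framework: it needs a trained model file before it can do any work. You can think of ncnn as the runtime environment for a model, so the model files must be prepared first. The ncnn developers maintain a model repository that contains already-converted model (structure) files and weight files for common networks.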

git clone https://github.com/nihui/ncnn-assets.git

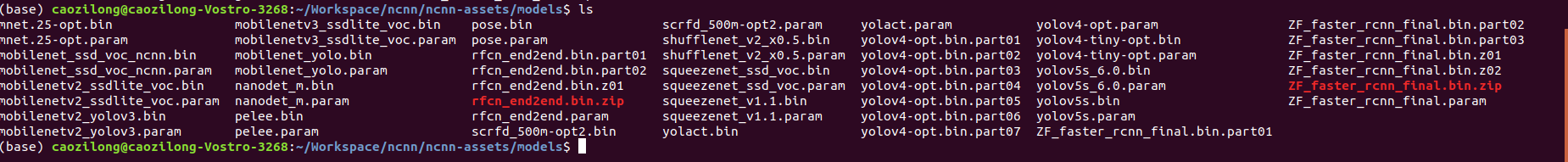

The converted model and weight files in the model repository:

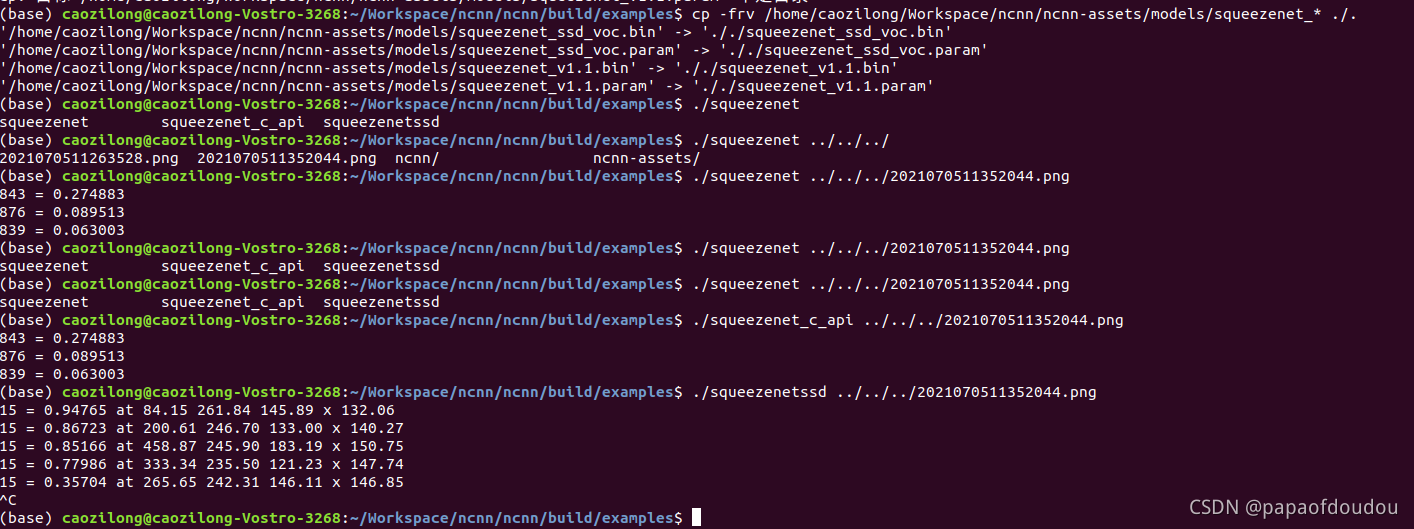

5. Recognizing images

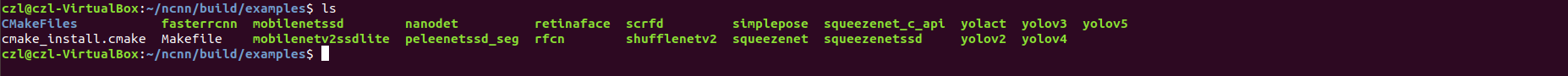

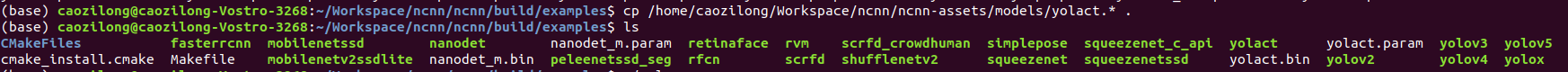

Go to the ncnn/build/examples directory. It contains many executable test programs, each one testing a different network:

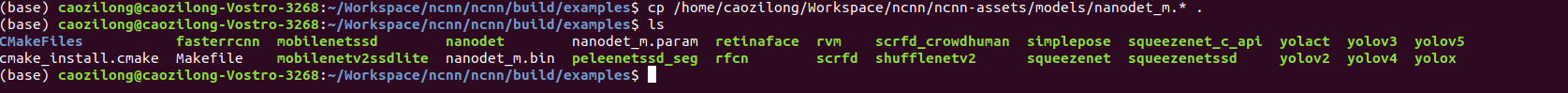

Taking nanodet as an example, first copy the model structure file nanodet_m.param and the model weight file nanodet_m.bin into the current directory:

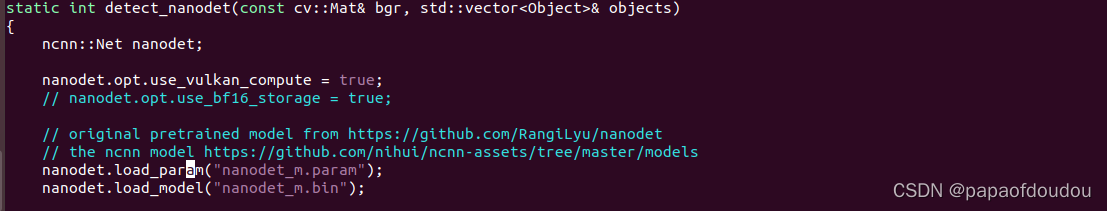

The model file names are hard-coded in the source:

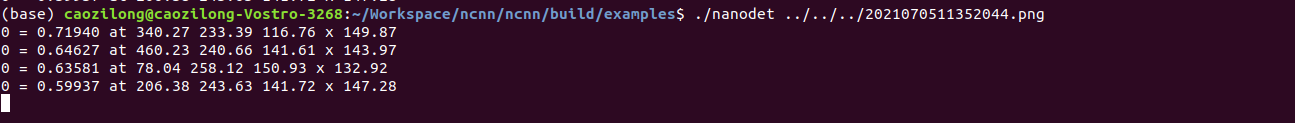

Next, run the example:

./nanodet ../../../2021070511263528.png

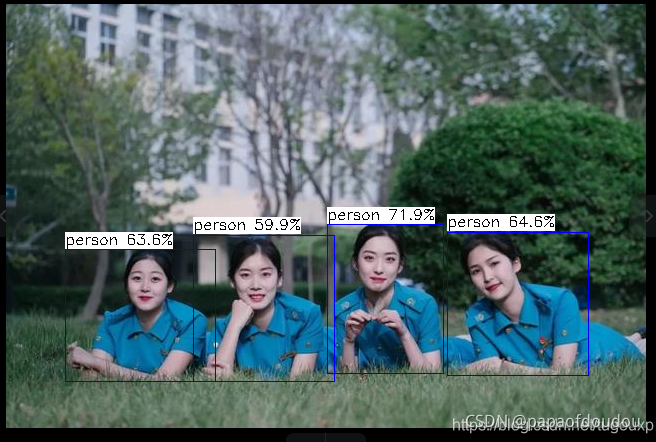

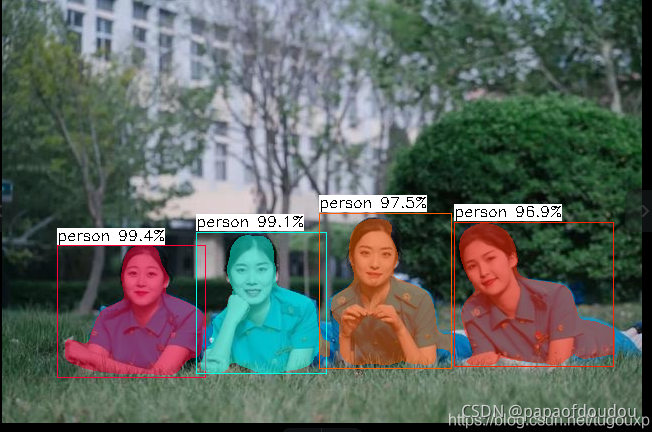

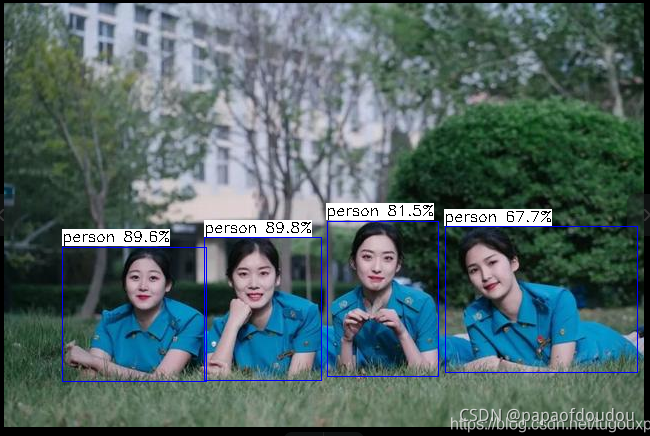

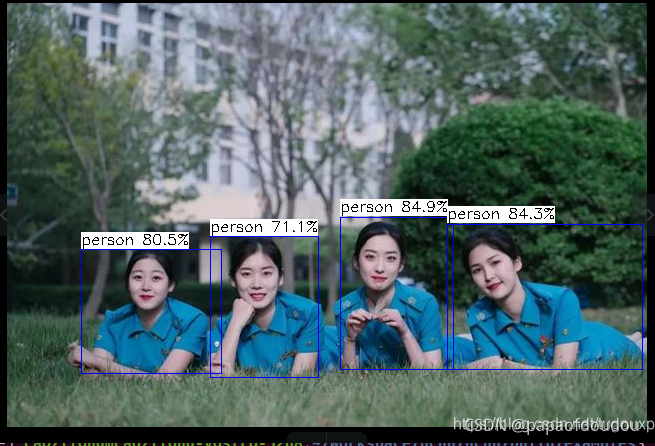

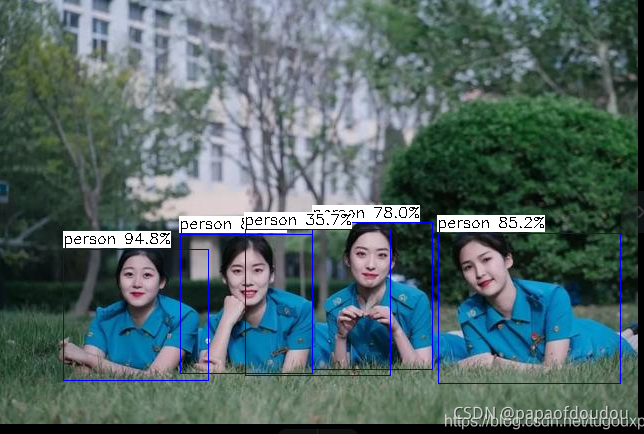

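Now try a harder image. The photo below shows four women, so let's see whether all four are detected. Run the program:

Detection result:

As you can see, the result is very good: all four people were located and marked correctly.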

YOLACT test:

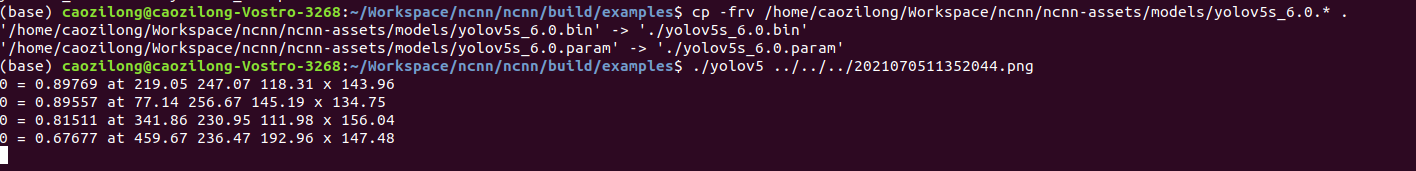

Following the steps above, copy yolact.bin and yolact.param from the model repository into the example directory, then run the test command:

./yolact ../../../2021070511352044.png

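Semantic segmentation makes a dense prediction for every pixel and infers a label for each one. This enables fine-grained inference: every pixel is labeled with the class of the object region it belongs to, so each pixel "finds its owner". YOLACT goes one step further. It is an instance segmentation model, so it also separates individual objects of the same class.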
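The following sketch makes "every pixel gets a label" concrete using semantic-segmentation-style labeling. Given one score map per class, each pixel takes the class with the highest score (argmax). The score maps and the `label_pixels` helper are hypothetical. YOLACT builds its per-instance masks differently: it combines prototype masks with per-object coefficients. So this shows only the general idea, not what the yolact example computes.

```python
from typing import List

# Hypothetical layout: scores[class_index][row][col]
Scores = List[List[List[float]]]


def label_pixels(scores: Scores) -> List[List[int]]:
    """Return a label map where label[y][x] is the highest-scoring class at (y, x).

    Ties go to the lower class index, because max() keeps the first maximum.
    """
    num_classes = len(scores)
    height = len(scores[0])
    width = len(scores[0][0])
    labels = []
    for y in range(height):
        row = []
        for x in range(width):
            # Dense prediction: one argmax per pixel over all class scores.
            row.append(max(range(num_classes), key=lambda c: scores[c][y][x]))
        labels.append(row)
    return labels


if __name__ == "__main__":
    # Hypothetical 2-class, 2x3 score maps: class 0 = background, class 1 = person.
    scores = [
        [[0.9, 0.2, 0.1], [0.8, 0.7, 0.3]],  # background scores
        [[0.1, 0.8, 0.9], [0.2, 0.3, 0.7]],  # person scores
    ]
    print(label_pixels(scores))  # [[0, 1, 1], [0, 0, 1]]
```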

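When testing the YOLO examples, I found that OpenCV must be installed. Without it, the YOLO test programs are not built.

Judging from the detection results, YOLOv5 reports higher detection probabilities than nanodet on this image.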

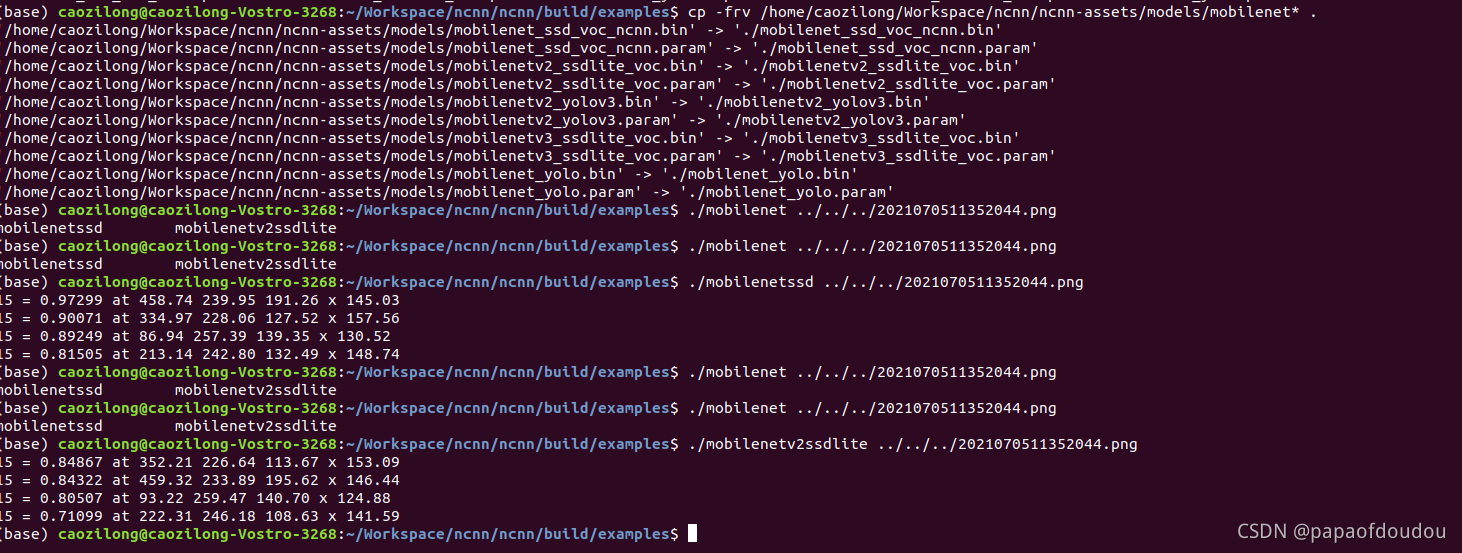
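The probabilities compared above are the scores of the boxes each demo finally draws. A detector usually outputs many overlapping candidate boxes. A typical post-processing step, which the ncnn detection examples appear to perform as well, drops low-scoring candidates and then applies non-maximum suppression (NMS) so that each object keeps only its best box. The sketch below shows that generic step in plain Python. The thresholds (0.4 and 0.5), the sample boxes and the function names are hypothetical, not values taken from the nanodet or yolov5 sources.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: Sequence[Box], scores: Sequence[float],
                      prob_threshold: float = 0.4,   # hypothetical value
                      nms_threshold: float = 0.5) -> List[int]:  # hypothetical value
    """Return indices of kept boxes: score threshold first, then greedy NMS."""
    candidates = [i for i, s in enumerate(scores) if s >= prob_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)  # best score first
    keep: List[int] = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= nms_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
    scores = [0.9, 0.8, 0.7, 0.3]
    # Box 1 overlaps box 0 (IoU 0.81) and is suppressed; box 3 is below threshold.
    print(filter_detections(boxes, scores))  # [0, 2]
```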

The remaining examples, such as mobilenetssd and mobilenetv2ssdlite, work the same way:

mobilenetssd:

mobilenetv2ssdlite result:

squeezenetssd example:

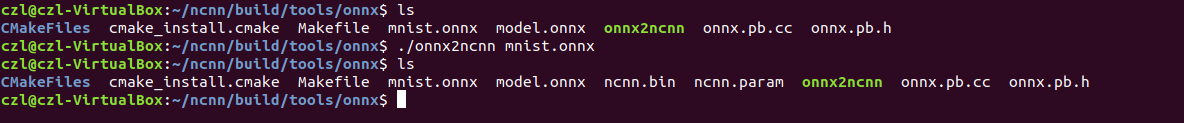

Converting ONNX networks to ncnn networks

The ncnn source includes a tool that converts ONNX models to ncnn models. After building, the tool is placed in ncnn/build/tools/onnx:


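For example, to convert mnist.onnx to the ncnn network format:

./onnx2ncnn mnist.onnx ncnn.param ncnn.bin

This produces ncnn.bin and ncnn.param. By ncnn convention, the former stores the weights and biases and the latter stores the description of the network structure.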

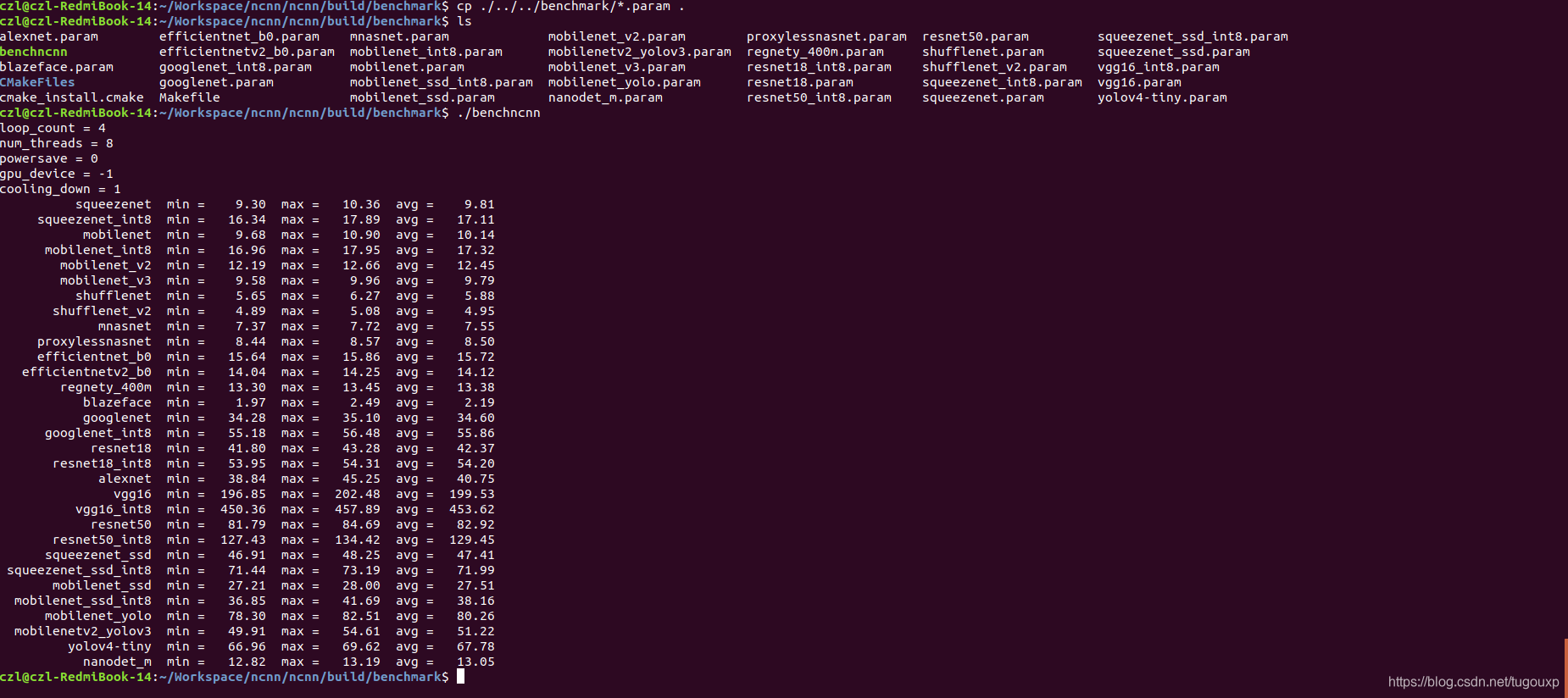
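To show what the "network structure description" looks like in practice, here is a minimal reader for the text .param format. It assumes the layout as I understand it: the magic number 7767517 on the first line, then the layer count and blob count, then one line per layer giving the type, name, input and output counts, the blob names and the key=value parameters. The tiny network in `SAMPLE_PARAM` is hypothetical, not the converted mnist model. Array-valued parameters are kept as raw strings.

```python
from typing import Dict, List

# Assumed layout (my reading of ncnn's text .param format, not an official spec):
#   line 1: magic number 7767517
#   line 2: <layer_count> <blob_count>
#   then:   <type> <name> <n_in> <n_out> <in blobs...> <out blobs...> <k=v ...>
PARAM_MAGIC = 7767517

# Hypothetical example network: input -> fully connected (784 -> 10) -> softmax.
SAMPLE_PARAM = """\
7767517
3 3
Input            input    0 1 data 0=28 1=28 2=1
InnerProduct     fc1      1 1 data fc1_out 0=10 1=1 2=7840
Softmax          prob     1 1 fc1_out prob
"""


def parse_param(text: str) -> Dict[str, object]:
    """Parse a text .param file into counts plus a list of layer dicts."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if int(lines[0]) != PARAM_MAGIC:
        raise ValueError("not an ncnn .param file (bad magic number)")
    layer_count, blob_count = map(int, lines[1].split())
    layers: List[Dict[str, object]] = []
    for ln in lines[2:2 + layer_count]:
        tok = ln.split()
        n_in, n_out = int(tok[2]), int(tok[3])
        pos = 4
        inputs, pos = tok[pos:pos + n_in], pos + n_in
        outputs, pos = tok[pos:pos + n_out], pos + n_out
        # Remaining tokens are key=value pairs; values stay as strings here.
        params = dict(kv.split("=", 1) for kv in tok[pos:])
        layers.append({"type": tok[0], "name": tok[1], "inputs": inputs,
                       "outputs": outputs, "params": params})
    return {"layer_count": layer_count, "blob_count": blob_count, "layers": layers}


if __name__ == "__main__":
    net = parse_param(SAMPLE_PARAM)
    for layer in net["layers"]:
        print(layer["type"], layer["name"], layer["inputs"], "->", layer["outputs"])
```

The weights themselves live in the .bin file. That is why the same .param structure can be read and inspected without loading any weights.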

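Running the benchmark

Copy the *.param files from the benchmark directory into the current directory. benchncnn only needs the network structure because it fills the weights with dummy data: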

czl@czl-RedmiBook-14:~/Workspace/ncnn/ncnn/build/benchmark$ cp ./../../benchmark/*.param .

czl@czl-RedmiBook-14:~/Workspace/ncnn/ncnn/build/benchmark$ ls

alexnet.param efficientnet_b0.param mnasnet.param mobilenet_v2.param proxylessnasnet.param resnet50.param squeezenet_ssd_int8.param

benchncnn efficientnetv2_b0.param mobilenet_int8.param mobilenetv2_yolov3.param regnety_400m.param shufflenet.param squeezenet_ssd.param

blazeface.param googlenet_int8.param mobilenet.param mobilenet_v3.param resnet18_int8.param shufflenet_v2.param vgg16_int8.param

CMakeFiles googlenet.param mobilenet_ssd_int8.param mobilenet_yolo.param resnet18.param squeezenet_int8.param vgg16.param

cmake_install.cmake Makefile mobilenet_ssd.param nanodet_m.param resnet50_int8.param squeezenet.param yolov4-tiny.param

czl@czl-RedmiBook-14:~/Workspace/ncnn/ncnn/build/benchmark$ ./benchncnn

loop_count = 4

num_threads = 8

powersave = 0

gpu_device = -1

cooling_down = 1

squeezenet min = 9.30 max = 10.36 avg = 9.81

squeezenet_int8 min = 16.34 max = 17.89 avg = 17.11

mobilenet min = 9.68 max = 10.90 avg = 10.14

mobilenet_int8 min = 16.96 max = 17.95 avg = 17.32

mobilenet_v2 min = 12.19 max = 12.66 avg = 12.45

mobilenet_v3 min = 9.58 max = 9.96 avg = 9.79

shufflenet min = 5.65 max = 6.27 avg = 5.88

shufflenet_v2 min = 4.89 max = 5.08 avg = 4.95

mnasnet min = 7.37 max = 7.72 avg = 7.55

proxylessnasnet min = 8.44 max = 8.57 avg = 8.50

efficientnet_b0 min = 15.64 max = 15.86 avg = 15.72

efficientnetv2_b0 min = 14.04 max = 14.25 avg = 14.12

regnety_400m min = 13.30 max = 13.45 avg = 13.38

blazeface min = 1.97 max = 2.49 avg = 2.19

googlenet min = 34.28 max = 35.10 avg = 34.60

googlenet_int8 min = 55.18 max = 56.48 avg = 55.86

resnet18 min = 41.80 max = 43.28 avg = 42.37

resnet18_int8 min = 53.95 max = 54.31 avg = 54.20

alexnet min = 38.84 max = 45.25 avg = 40.75

vgg16 min = 196.85 max = 202.48 avg = 199.53

vgg16_int8 min = 450.36 max = 457.89 avg = 453.62

resnet50 min = 81.79 max = 84.69 avg = 82.92

resnet50_int8 min = 127.43 max = 134.42 avg = 129.45

squeezenet_ssd min = 46.91 max = 48.25 avg = 47.41

squeezenet_ssd_int8 min = 71.44 max = 73.19 avg = 71.99

mobilenet_ssd min = 27.21 max = 28.00 avg = 27.51

mobilenet_ssd_int8 min = 36.85 max = 41.69 avg = 38.16

mobilenet_yolo min = 78.30 max = 82.51 avg = 80.26

mobilenetv2_yolov3 min = 49.91 max = 54.61 avg = 51.22

yolov4-tiny min = 66.96 max = 69.62 avg = 67.78

nanodet_m min = 12.82 max = 13.19 avg = 13.05

czl@czl-RedmiBook-14:~/Workspace/ncnn/ncnn/build/benchmark$

Actual run output (times in milliseconds):

The benchmark ran without any problems!

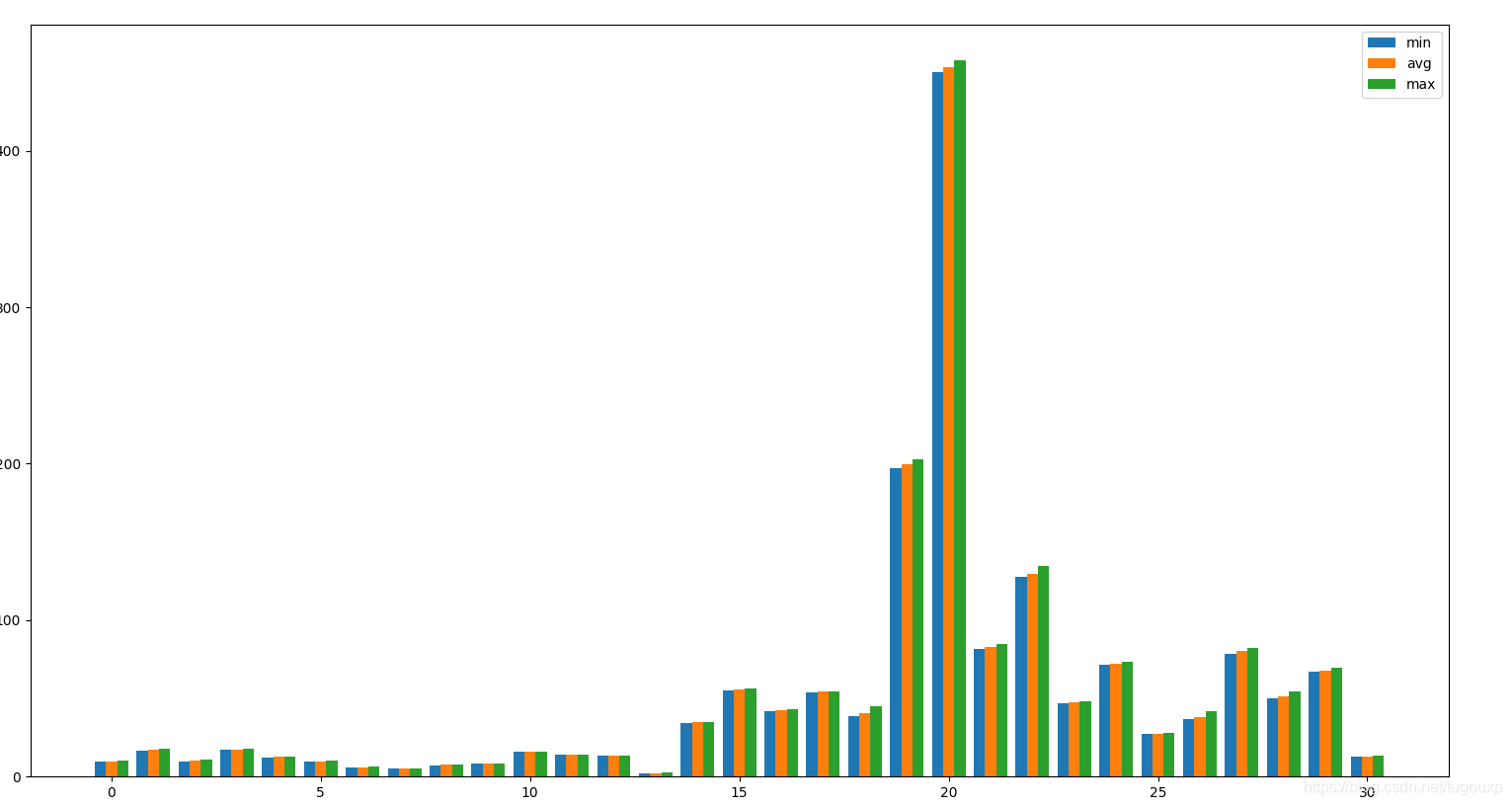
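benchncnn prints one line per model with the minimum, maximum and average time in milliseconds over loop_count runs. As far as I understand, the header values (loop_count, num_threads, powersave, gpu_device, cooling_down) are its optional positional arguments, in that order. The sketch below parses that output using a few lines copied from the log above, finds the slowest model and compares each int8 model with its fp32 counterpart. `parse_benchncnn` and `int8_vs_fp32` are hypothetical helpers, not part of ncnn.

```python
import re
from typing import Dict, Tuple

# Result line, e.g. "squeezenet  min = 9.30  max = 10.36  avg = 9.81"
RESULT_RE = re.compile(
    r"^\s*(\S+)\s+min\s*=\s*([\d.]+)\s+max\s*=\s*([\d.]+)\s+avg\s*=\s*([\d.]+)"
)
# Header line, e.g. "loop_count = 4" or "gpu_device = -1"
SETTING_RE = re.compile(r"^\s*(\w+)\s*=\s*(-?\d+)\s*$")

# A subset of the log above.
SAMPLE_LOG = """\
loop_count = 4
num_threads = 8
powersave = 0
gpu_device = -1
cooling_down = 1
squeezenet min = 9.30 max = 10.36 avg = 9.81
squeezenet_int8 min = 16.34 max = 17.89 avg = 17.11
vgg16 min = 196.85 max = 202.48 avg = 199.53
vgg16_int8 min = 450.36 max = 457.89 avg = 453.62
nanodet_m min = 12.82 max = 13.19 avg = 13.05
"""


def parse_benchncnn(text: str) -> Tuple[Dict[str, int], Dict[str, Tuple[float, float, float]]]:
    """Split benchncnn output into header settings and (min, max, avg) ms per model."""
    settings: Dict[str, int] = {}
    results: Dict[str, Tuple[float, float, float]] = {}
    for line in text.splitlines():
        m = RESULT_RE.match(line)
        if m:
            mn, mx, avg = (float(v) for v in m.group(2, 3, 4))
            results[m.group(1)] = (mn, mx, avg)
            continue
        s = SETTING_RE.match(line)
        if s:
            settings[s.group(1)] = int(s.group(2))
    return settings, results


def int8_vs_fp32(results: Dict[str, Tuple[float, float, float]]) -> Dict[str, float]:
    """avg(int8) / avg(fp32) for every model present in both variants."""
    suffix = "_int8"
    ratios: Dict[str, float] = {}
    for name, (_, _, avg) in results.items():
        base = name[: -len(suffix)]
        if name.endswith(suffix) and base in results:
            ratios[base] = avg / results[base][2]
    return ratios


if __name__ == "__main__":
    settings, results = parse_benchncnn(SAMPLE_LOG)
    print(settings["num_threads"], settings["gpu_device"])  # 8 -1
    print("slowest:", max(results, key=lambda n: results[n][2]))  # slowest: vgg16_int8
    for model, ratio in int8_vs_fp32(results).items():
        print(f"{model}: int8 avg is {ratio:.2f}x the fp32 avg")
    # squeezenet: int8 avg is 1.74x the fp32 avg
    # vgg16: int8 avg is 2.27x the fp32 avg
```

In the full log above, every int8 model ran slower than its fp32 counterpart on this PC, so the int8 variants should not be assumed to be faster on every CPU.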

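Chart of the per-model inference times, in the order the models appear in the log:

VGG16 deserves special mention: its inference time is far higher than that of the other models. Why does it stand out so much?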

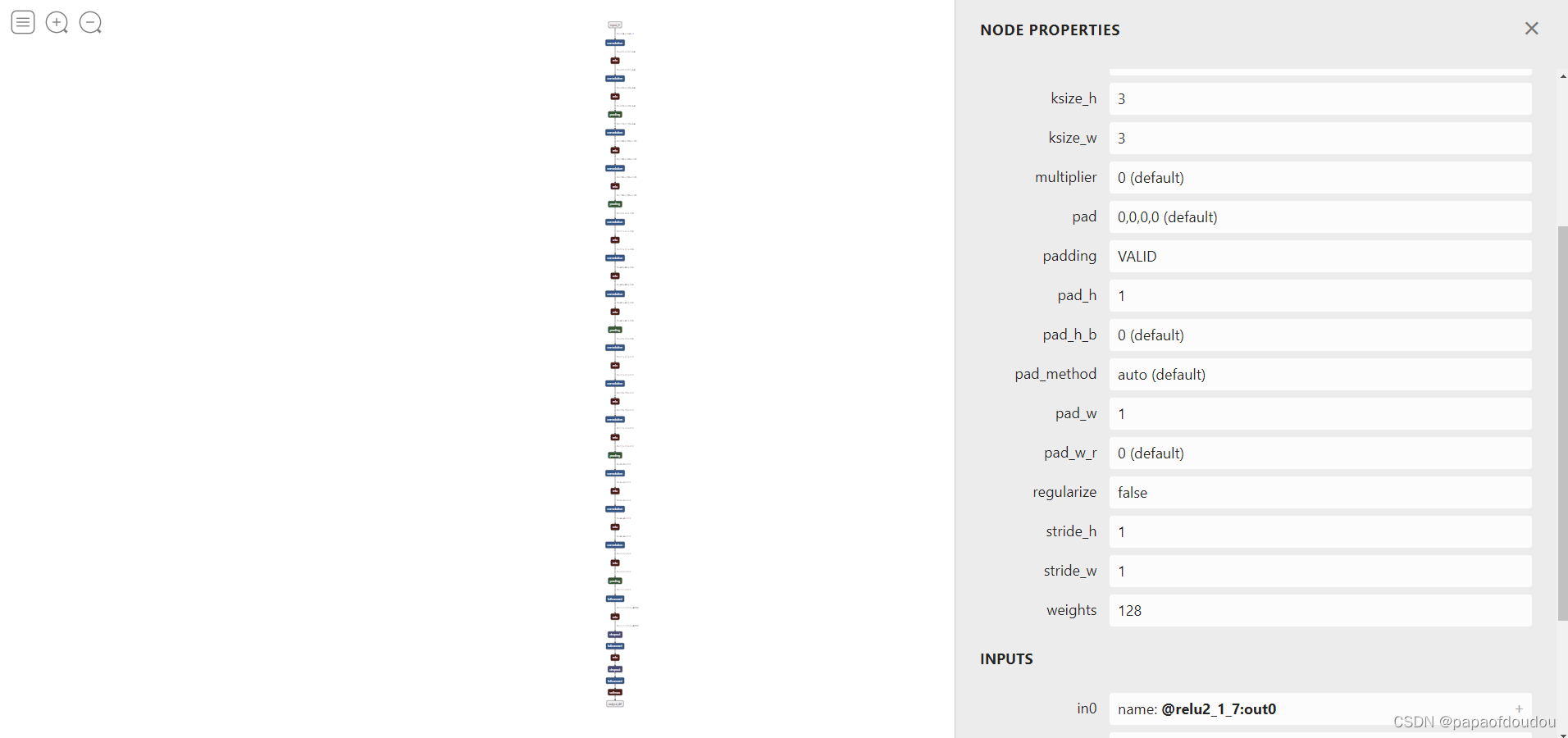

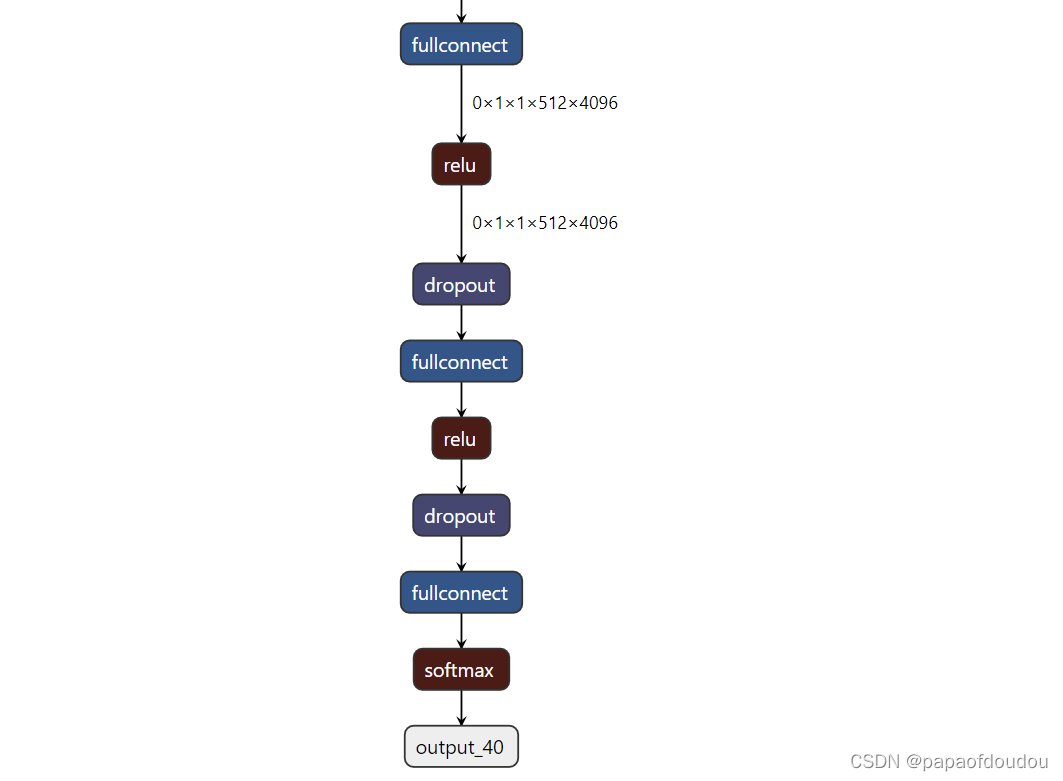

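First, look at the VGG16 network structure:

As the structure shows, VGG16 is not a complicated network. It has no multiple outputs like YOLO, and it has far fewer layers than YOLO. So why does it demand so much compute?

VGG16 is a large model, but its size does not come from depth, and more layers do not necessarily mean more compute. What makes VGG16 expensive is how heavy each individual layer is. Its weights take over 500 MB (about 138 million fp32 parameters). Most of these sit in the last three fully connected layers, which are 25088×4096, 4096×4096 and 4096×1000. The convolution layers apply 3×3 kernels with 64 to 512 channels at high spatial resolution, and they add roughly 15 billion multiply-accumulate operations per 224×224 image.

So although VGG16 has few layers, every layer is a heavyweight, which is why it is so slow.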
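These figures can be checked with a short calculation. The script assumes the standard VGG16 layout: 13 convolutions with 3×3 kernels and padding 1, 2×2 pooling after each block, three fully connected layers, and a 224×224×3 input. The vgg16.param used by benchncnn may differ in details such as the input size. The script counts parameters (weights plus biases) and multiply-accumulate operations (MACs) per image. The table names and `vgg16_stats` are illustrative, not taken from ncnn.

```python
from typing import Dict

# Standard VGG16 (configuration "D"), 224x224x3 input. 3x3 kernels with padding 1
# keep the spatial size, so each entry is (spatial size, C_in, C_out).
CONV_LAYERS = [
    (224, 3, 64), (224, 64, 64),                       # block 1
    (112, 64, 128), (112, 128, 128),                   # block 2 (after 2x2 pool)
    (56, 128, 256), (56, 256, 256), (56, 256, 256),    # block 3
    (28, 256, 512), (28, 512, 512), (28, 512, 512),    # block 4
    (14, 512, 512), (14, 512, 512), (14, 512, 512),    # block 5
]
# The last pool leaves a 7x7x512 feature map = 25088 inputs to the first FC layer.
FC_LAYERS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]
KERNEL = 3


def vgg16_stats() -> Dict[str, int]:
    """Parameter counts (weights + biases) and multiply-accumulates per image."""
    return {
        "conv_params": sum(KERNEL * KERNEL * cin * cout + cout for _, cin, cout in CONV_LAYERS),
        "conv_macs": sum(s * s * KERNEL * KERNEL * cin * cout for s, cin, cout in CONV_LAYERS),
        "fc_params": sum(fin * fout + fout for fin, fout in FC_LAYERS),
        "fc_macs": sum(fin * fout for fin, fout in FC_LAYERS),
    }


if __name__ == "__main__":
    st = vgg16_stats()
    total_params = st["conv_params"] + st["fc_params"]
    print(total_params)                                                  # 138357544
    print(f"{total_params * 4 / 2**20:.0f} MiB as fp32")                 # 528 MiB as fp32
    print(f"FC share of params: {st['fc_params'] / total_params:.0%}")   # FC share of params: 89%
    print(f"conv GMACs: {st['conv_macs'] / 1e9:.2f}")                    # conv GMACs: 15.35
    print(f"FC GMACs: {st['fc_macs'] / 1e9:.2f}")                        # FC GMACs: 0.12
```

The result separates the two costs. The fully connected layers hold about 89% of the parameters, which is what makes the weight file large. The convolutions account for over 99% of the MACs, which is what makes inference slow.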

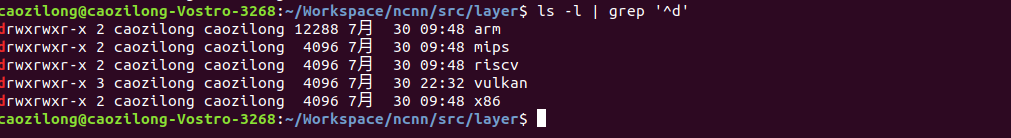

The operator optimizations for specific processors are located here:

For other conversion examples, see the following blog posts:

YOLOV4:

FAQ

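For well-known reasons, if you run into problems while installing the dependencies, replace the sources in /etc/apt/sources.list with the Aliyun mirrors below. These sources are for Ubuntu 18.04 (bionic) only; other releases need different sources.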

deb http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse

The end!

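This article introduces ncnn, Tencent's neural network inference framework. It covers running examples such as squeezenetssd and YOLOV4, as well as converting ONNX models to ncnn. The article sets up the environment on Ubuntu 18.04, demonstrates recognizing images with models, shows ncnn's efficient inference performance, and discusses why the VGG16 model is so computationally expensive. It also mentions operator optimizations for specific processors and how to convert YOLOV4.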


https://blog.youkuaiyun.com/tugouxp/article/details/121143770

