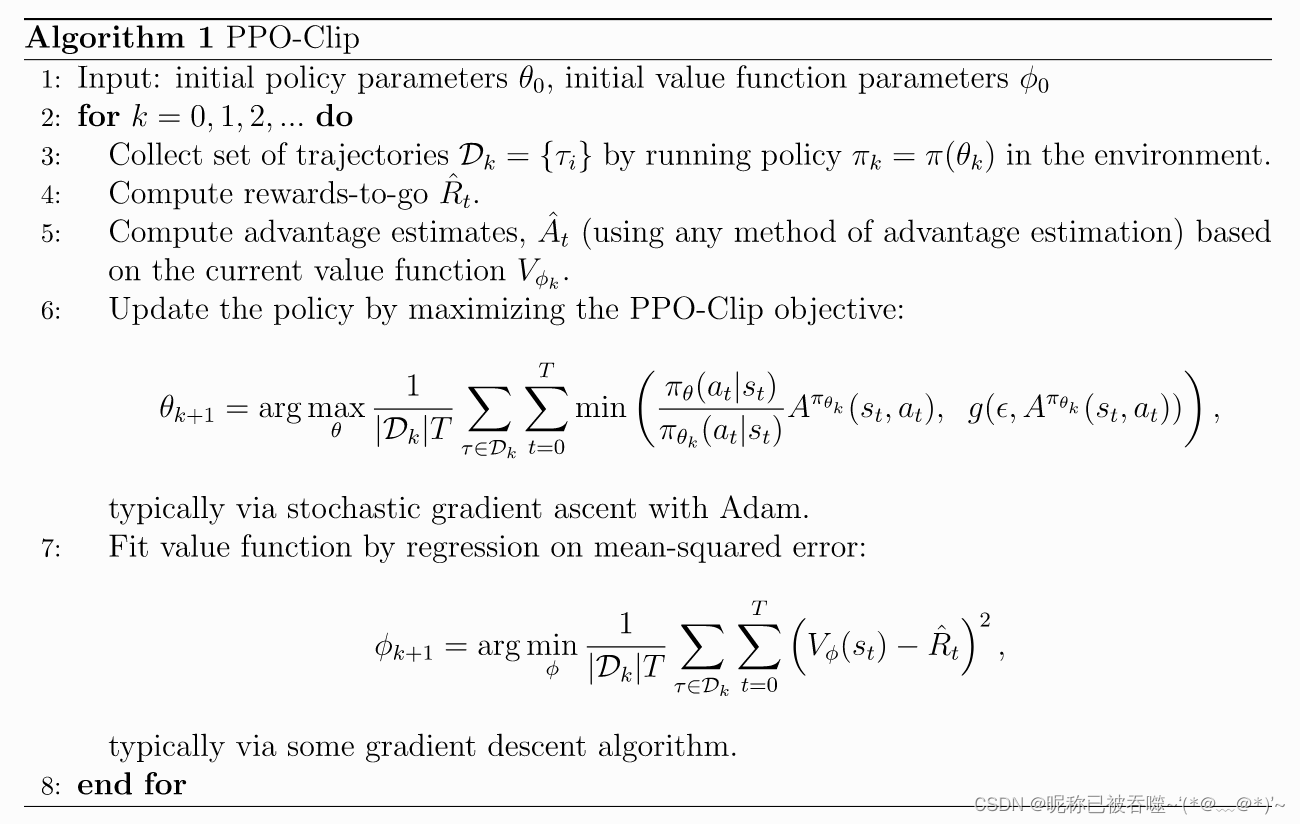

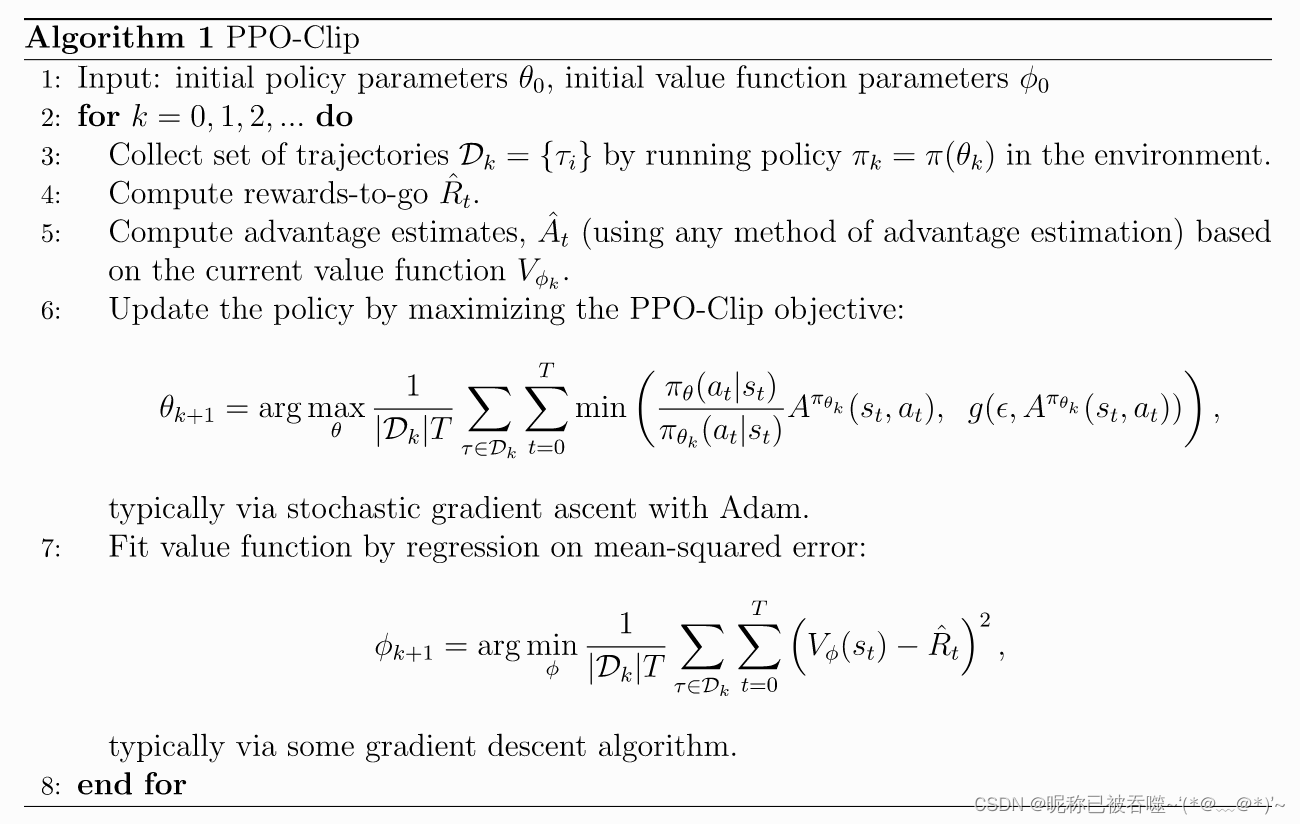

算法流程

代码

import matplotlib.pyplot as plt

import tensorflow as tf

import numpy as np

import gym

import copy

def build_actor_network(state_dim, action_dim):

model = tf.keras.Sequential([

tf.keras.layers.Dense(units=128, activation='relu'),

tf.keras.layers.Dense(units=action_dim, activation='softmax')

])

model.build(input_shape=(None, state_dim))

return model

def build_critic_network(state_dim):

model = tf.keras.Sequential([

tf.keras.layers.Dense(units=128, activation='relu'),

tf.keras.layers.Dense(units=1, activation='linear')

])

model.build(input_shape=(None, state_dim))

return model

class Actor(object):

def __init__(self, state_dim, action_dim, lr):

self.action_dim = action_dim

self.old_policy = build_actor_network(state_dim, action_dim)

self.new_policy = build_actor_network(state_dim, action_dim)

self.update_policy()

self.optimizer = tf.keras.optimizers.Adam(learning_rate=lr)

def choice_action(self, state):

po

该代码示例展示了如何在Python中使用TensorFlow库构建和训练一个Actor-Critic模型来解决OpenAIGym的CartPole-v1环境。Actor网络用于选择动作,Critic网络估计状态值函数,通过强化学习更新策略。

该代码示例展示了如何在Python中使用TensorFlow库构建和训练一个Actor-Critic模型来解决OpenAIGym的CartPole-v1环境。Actor网络用于选择动作,Critic网络估计状态值函数,通过强化学习更新策略。

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

797

797

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?