Andrew Ng Machine Learning Notes, Chapter 2: Linear Regression with One Variable

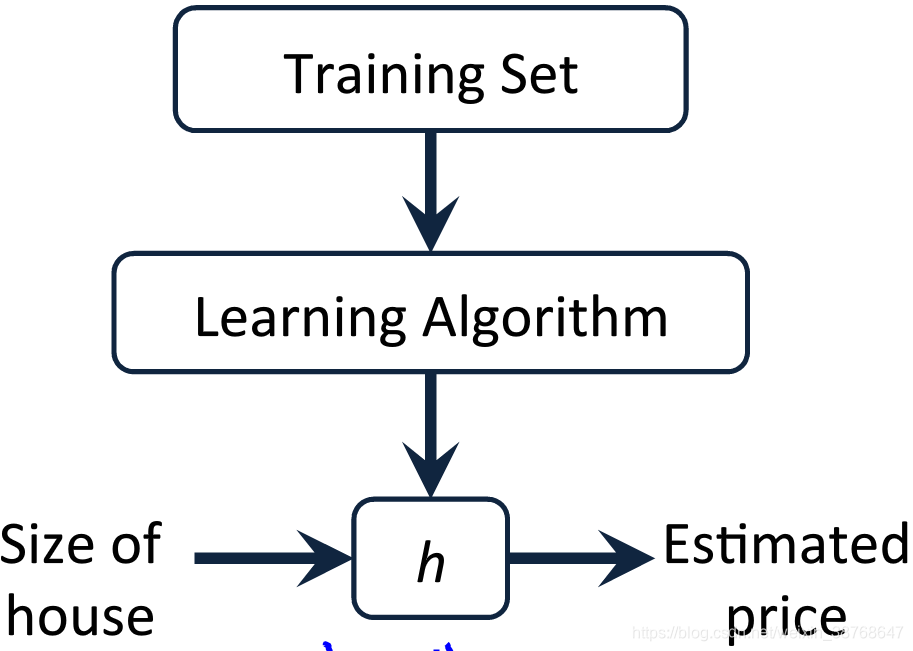

Main Procedure

Hypothesis

A function that maps input to output.

Univariate linear regression

$h_\theta(x) = \theta_0 + \theta_1 x$

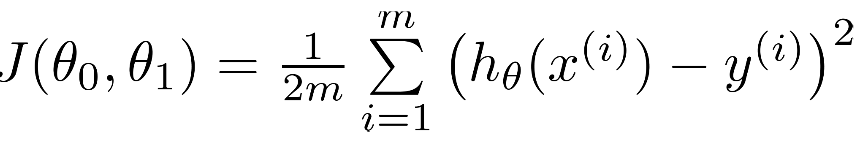
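As a minimal sketch, the hypothesis can be written as a plain Python function. The function name and the example parameter values are made up for illustration and do not come from the course material.

```python
def hypothesis(theta0: float, theta1: float, x: float) -> float:
    """Univariate linear hypothesis: h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


# Illustrative parameters (assumed): intercept 1.0 and slope 2.0.
prediction = hypothesis(1.0, 2.0, 3.0)  # 1.0 + 2.0 * 3.0
```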

Cost function

Idea

Choose $\theta$ so that $h_\theta(x)$ is close to $y$ for our training examples $(x, y)$.

Fitting the function to the training data amounts to minimizing the cost function.

That is, we minimize the squared difference between the hypothesis and the actual value.

Cost function for Univariate linear regression

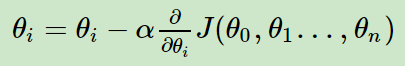
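Assuming the figure above shows the usual squared-error cost from the course, $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)})^2$ with $m$ training examples, a small Python sketch of it could look like this. The function name and the toy data are assumptions for illustration.

```python
def cost(theta0, theta1, xs, ys):
    """Squared-error cost J = 1/(2m) * sum((h(x_i) - y_i)^2)."""
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        error = (theta0 + theta1 * x) - y  # h_theta(x) - y
        total += error ** 2
    return total / (2 * m)


# Toy data lying exactly on the line y = x (assumed for illustration).
xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]

perfect = cost(0.0, 1.0, xs, ys)  # the line y = x fits exactly, so the cost is zero
flat = cost(0.0, 0.0, xs, ys)     # h(x) = 0 everywhere: (1 + 4 + 9) / (2 * 3)
```

A lower cost means the line passes closer to the training points, which is what the minimization is after.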

Gradient Descent

An algorithm for minimizing the cost function.

Intuition: contour plot for $J(\theta)$

To minimize as quickly as possible

Outline

- Start with some $\theta_0$, $\theta_1$ (random initialization)

- Keep changing $\theta_0$, $\theta_1$ to reduce $J(\theta_0, \theta_1)$ until we end up at a minimum. (Gradient descent is sensitive to local minima, but it usually reaches the global minimum.)

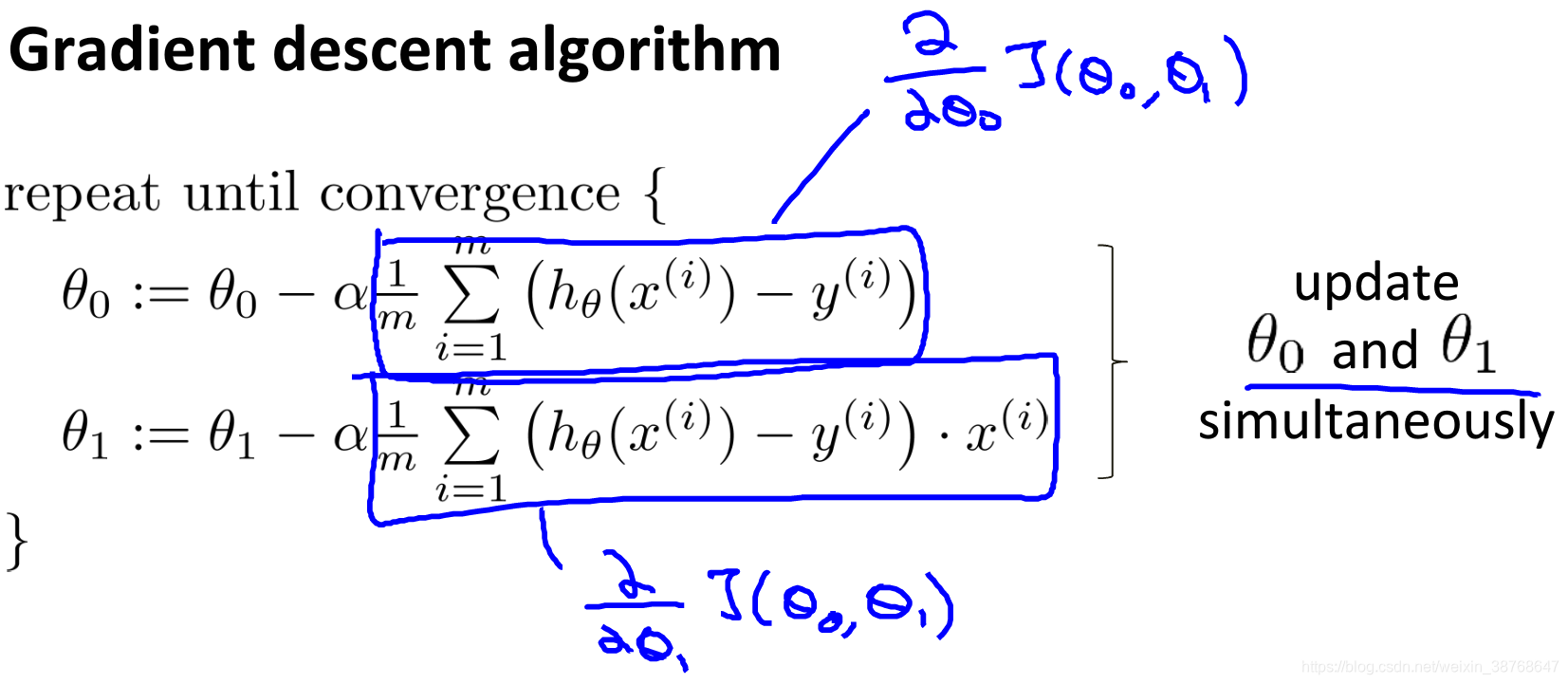

Algorithm: To minimize as quickly as possible

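Repeat until convergence {

$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)$ (for $j = 0$ and $j = 1$)

}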

NOTE: All the parameters $\theta_i$ should be updated simultaneously.
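To make the simultaneous-update note concrete, here is a hedged sketch: both new values are computed from the old parameters before either one is assigned. The toy cost $J = \theta_0^2 + \theta_0\theta_1 + \theta_1^2$ and all function names are hypothetical, chosen only so that the two parameters interact.

```python
def simultaneous_step(theta0, theta1, alpha, grad0, grad1):
    """One gradient descent step that updates both parameters together."""
    # Evaluate both partial derivatives at the current (old) parameters.
    temp0 = theta0 - alpha * grad0(theta0, theta1)
    temp1 = theta1 - alpha * grad1(theta0, theta1)
    # Assign only after both temporaries are computed.
    return temp0, temp1


# Partial derivatives of the toy cost J = theta0^2 + theta0*theta1 + theta1^2.
def grad0(t0, t1):
    return 2 * t0 + t1


def grad1(t0, t1):
    return t0 + 2 * t1


correct = simultaneous_step(1.0, 1.0, 0.1, grad0, grad1)

# Incorrect variant: theta0 is overwritten before theta1's gradient is evaluated,
# so the second update sees a mix of old and new parameters.
wrong0 = 1.0 - 0.1 * grad0(1.0, 1.0)
wrong1 = 1.0 - 0.1 * grad1(wrong0, 1.0)
```

In the incorrect variant `wrong1` differs from `correct[1]`, because the gradient for $\theta_1$ was taken at a point that is neither the old nor the new parameter pair.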

$\alpha$: learning rate

Need to choose carefully.

- Too small: gradient descent can be slow.

- Too large: it can overshoot the minimum. It may fail to converge, or even diverge.
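The effect of the learning rate can be seen on a toy problem. The sketch below assumes the one-parameter cost $J(\theta) = \theta^2$ (derivative $2\theta$, minimum at $\theta = 0$), which is not from the course; the three values of `alpha` are also picked just for illustration.

```python
def run_gradient_descent(alpha, steps=20, theta=1.0):
    """Minimize the toy cost J(theta) = theta**2, whose derivative is 2 * theta."""
    for _ in range(steps):
        theta = theta - alpha * 2 * theta
    return theta


too_small = run_gradient_descent(alpha=0.01)   # still far from 0 after 20 steps
reasonable = run_gradient_descent(alpha=0.4)   # very close to 0
too_large = run_gradient_descent(alpha=1.1)    # overshoots each step and grows in magnitude
```

With `alpha=1.1` every step jumps past the minimum to a point farther away than the previous one, which is the divergence case described above.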

Gradient descent can converge to a local minimum even with the learning rate $\alpha$ fixed.

As we approach a local minimum, gradient descent will automatically take smaller steps (because the partial derivative becomes smaller). So there is no need to decrease $\alpha$ over time.
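A short sketch of this shrinking-step behavior, again on the assumed toy cost $J(\theta) = \theta^2$ with a fixed `alpha`. It records the size of each update so the decrease is visible; the names are illustrative only.

```python
def step_sizes(alpha=0.1, steps=5, theta=1.0):
    """Record |alpha * dJ/dtheta| at each step for the toy cost J(theta) = theta**2."""
    sizes = []
    for _ in range(steps):
        step = alpha * 2 * theta  # alpha times the derivative 2 * theta
        sizes.append(abs(step))
        theta -= step
    return sizes


sizes = step_sizes()  # each entry is smaller than the one before it
```

The learning rate never changes here; the steps shrink only because the derivative shrinks as $\theta$ approaches the minimum.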

Gradient Descent for Univariate Linear Regression

Problem: gradient descent is, in general, susceptible to local optima.

This is not an issue here, because the cost function for linear regression is convex: it has a single global minimum and no other local optima.
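Substituting the cost into the gradient descent update gives the rule specialized to univariate linear regression. This derivation assumes the squared-error cost with the $\frac{1}{2m}$ factor, where $m$ is the number of training examples and $(x^{(i)}, y^{(i)})$ is the $i$-th example; both updates are applied simultaneously.

```latex
\begin{aligned}
\frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1)
  &= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) \\
\frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1)
  &= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)} \\[6pt]
\theta_0 &:= \theta_0 - \alpha \, \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) \\
\theta_1 &:= \theta_1 - \alpha \, \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)}
\end{aligned}
```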

Batch Gradient Descent

Each step uses all the training examples.
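Putting the pieces together, a minimal sketch of batch gradient descent for univariate linear regression might look like the following. It assumes the squared-error cost with the $\frac{1}{2m}$ factor, starts from zeros rather than a random point for reproducibility, and runs a fixed number of iterations instead of testing for convergence; the function name, defaults, and toy data are all illustrative.

```python
def batch_gradient_descent(xs, ys, alpha=0.1, iterations=2000):
    """Fit h(x) = theta0 + theta1 * x using every training example at each step."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0  # assumed starting point (zeros instead of random)
    for _ in range(iterations):
        # Prediction errors over the whole training set (the "batch").
        errors = [(theta0 + theta1 * x) - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients were computed from the old parameters.
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


# Toy data generated from y = 1 + 2x (assumed for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
theta0, theta1 = batch_gradient_descent(xs, ys)
```

On this noise-free data the fitted parameters approach the intercept 1 and slope 2 that generated it. A fixed iteration count keeps the sketch short; a practical version would more likely stop when the cost stops decreasing.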

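This post covers univariate linear regression from Andrew Ng's machine learning course, including the hypothesis function hθ(x)=θ0+θ1x and the concept of the cost function, which fits the training data by minimizing the sum of squared errors. It then discusses gradient descent, explaining how it works and how the learning rate α affects it, and notes that for univariate linear regression gradient descent usually finds the global minimum, since the cost function is convex. Batch gradient descent uses all the training examples at every iteration.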
