Concise version

SinCos positional encoding (additive)

$$
\text{sincos-2D}\left(\mathcal{R}_{k,d}\right) =
\begin{pmatrix}
\sin 0\theta_0 & \cos 0\theta_0 & \sin 0\theta_1 & \cos 0\theta_1 & \cdots & \sin 0\theta_{\frac{d}{2}-1} & \cos 0\theta_{\frac{d}{2}-1} \\
\vdots & \vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\
\sin (k-2)\theta_0 & \cos (k-2)\theta_0 & \sin (k-2)\theta_1 & \cos (k-2)\theta_1 & \cdots & \sin (k-2)\theta_{\frac{d}{2}-1} & \cos (k-2)\theta_{\frac{d}{2}-1} \\
\sin (k-1)\theta_0 & \cos (k-1)\theta_0 & \sin (k-1)\theta_1 & \cos (k-1)\theta_1 & \cdots & \sin (k-1)\theta_{\frac{d}{2}-1} & \cos (k-1)\theta_{\frac{d}{2}-1}
\end{pmatrix}
$$

    import torch

    def getPositionEncoding(seq_len, dim, freqs=1000):
        # Loop version: P[k, 2i] = sin(k * theta_i), P[k, 2i+1] = cos(k * theta_i),
        # with theta_i = 1 / freqs^(2i / dim).
        P = torch.zeros(seq_len, dim)
        for k in range(seq_len):
            for i in torch.arange(dim // 2):
                denominator = freqs ** (2 * i / dim)
                P[k, 2 * i] = torch.sin(k / denominator)
                P[k, 2 * i + 1] = torch.cos(k / denominator)
        return P

    P = getPositionEncoding(seq_len=3, dim=4)
    print(P)

    def get_sin_cos(seq_len, dim, freqs=1000):
        # Vectorized version of the loop above.
        res = torch.zeros(seq_len, dim)
        # theta_row[i] = 1 / freqs^(2i / dim), shape [dim // 2]
        theta_row = 1.0 / (freqs ** (torch.arange(0, dim, 2).float() / dim))
        seq_row = torch.arange(seq_len)
        # mat[k, i] = k * theta_i, shape [seq_len, dim // 2]
        mat = torch.outer(seq_row, theta_row)
        res[:, 0:dim:2] = mat.sin()  # even columns hold the sines
        res[:, 1:dim:2] = mat.cos()  # odd columns hold the cosines
        return res

    P2 = get_sin_cos(seq_len=3, dim=4)
    print(P2)

    '''
    P and P2 both print:
    tensor([[ 0.0000, 1.0000, 0.0000, 1.0000],
            [ 0.8415, 0.5403, 0.0316, 0.9995],
            [ 0.9093, -0.4161, 0.0632, 0.9980]])
    '''
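To make the "additive" label concrete, here is a minimal sketch of how such a table is typically combined with token embeddings. The helper name `sincos_table`, the parameter name `base`, and the random `token_emb` are illustrative assumptions and are not part of the original code; `base=1000` is chosen only to match the `freqs=1000` default used above.

```python
import torch

def sincos_table(seq_len, dim, base=1000.0):
    """Sin-cos table of shape [seq_len, dim]; `base` plays the role of `freqs` above."""
    theta = 1.0 / (base ** (torch.arange(0, dim, 2).float() / dim))
    angles = torch.outer(torch.arange(seq_len).float(), theta)  # [seq_len, dim // 2]
    table = torch.zeros(seq_len, dim)
    table[:, 0::2] = angles.sin()
    table[:, 1::2] = angles.cos()
    return table

torch.manual_seed(0)
seq_len, dim = 3, 4
token_emb = torch.randn(seq_len, dim)  # stand-in for learned token embeddings (assumption)
pos_enc = sincos_table(seq_len, dim)
x = token_emb + pos_enc                # additive: position is mixed into the input once
```

Unlike RoPE, which rotates the query and key inside every attention layer, this encoding is simply added to the input.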

RoPE

Understanding RoPE with complex numbers

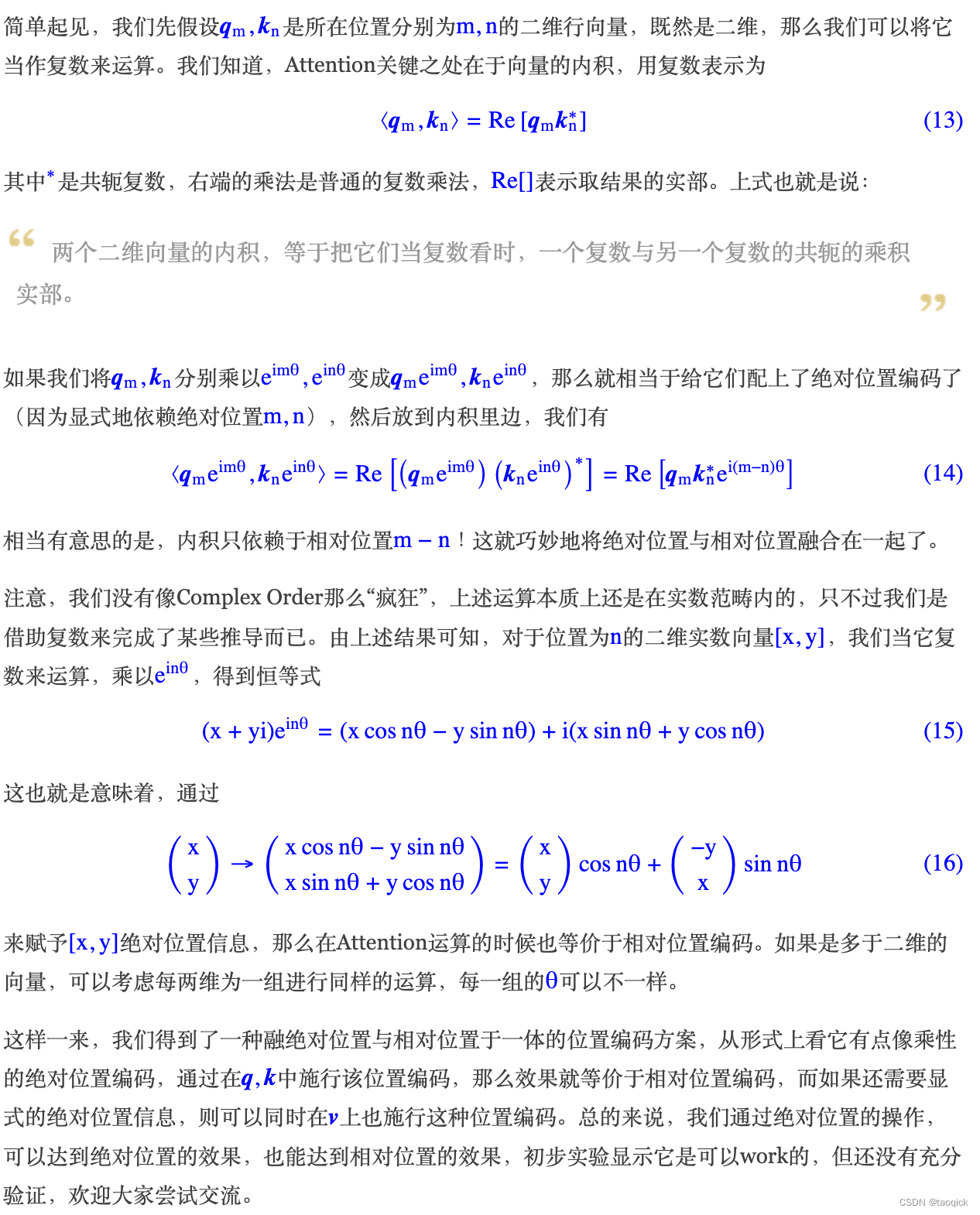

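This is arguably Su Jianlin's signature work. Put simply, RoPE amounts to multiplying by a complex exponential. The inner product of two 2D vectors equals the real part of the product of one vector with the conjugate of the other when both are viewed as complex numbers: $(x_1+y_1i)(x_2-y_2i)=x_1x_2+y_1y_2+(x_2y_1-x_1y_2)i$, whose real part $x_1x_2+y_1y_2$ is exactly the inner product. If we multiply the vector $q_m$ at position m and the vector $k_n$ at position n by $e^{im\theta}$ and $e^{in\theta}$ respectively, they become $q_me^{im\theta}$ and $k_ne^{in\theta}$, and then

$$
\langle q_m e^{im\theta}, k_n e^{in\theta} \rangle = \mathrm{Re}\left[q_m k_n^* e^{i(m-n)\theta}\right]
$$

Here $\mathrm{Re}[\cdot]$ takes the real part and $k_n^*$ is the complex conjugate of $k_n$. The relative position m-n comes out of the conjugation and appears explicitly on the right-hand side. Positional encodings in machine learning are computed with real arithmetic, which is the left-hand side. So RoPE is simply $q_m$ multiplied by $e^{im\theta}$, where m is the position in the sequence and i is the imaginary unit; each pair of dimensions of the d-dimensional embedding uses its own angle $\theta_j$. More precisely, $q_m$ here is the 2D vector formed by one adjacent pair of components (the (2j-1)-th and 2j-th, 1-indexed) of the d-dimensional vector. In code, RoPE always groups the components in pairs and performs one complex multiplication per pair.

In the figure above, $(q_0,q_1,\dots,q_{d-1})^T$ is the token vector at position m.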
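The identity can be checked numerically for a single pair of dimensions. The sketch below uses only Python's standard `cmath` module; the angle, positions, and vector values are arbitrary illustrative choices, and the helper `inner` is a hypothetical name.

```python
import cmath

theta = 0.3                 # arbitrary rotation angle (illustrative)
m, n = 5, 2                 # arbitrary positions (illustrative)
q = complex(0.7, -1.2)      # a 2D query vector viewed as a complex number
k = complex(0.4, 0.9)       # a 2D key vector viewed as a complex number

def inner(a, b):
    """Real inner product of two complex numbers viewed as 2D vectors."""
    return a.real * b.real + a.imag * b.imag

# Left-hand side: rotate q and k by their own positions, then take the real inner product.
lhs = inner(q * cmath.exp(1j * m * theta), k * cmath.exp(1j * n * theta))

# Right-hand side: only the relative position m - n appears.
rhs = (q * k.conjugate() * cmath.exp(1j * (m - n) * theta)).real

# Shifting both positions by the same offset leaves the score unchanged.
shift = 10
lhs_shifted = inner(q * cmath.exp(1j * (m + shift) * theta),
                    k * cmath.exp(1j * (n + shift) * theta))
```

Here `lhs`, `rhs`, and `lhs_shifted` agree up to floating-point error, which is the relative-position property in its smallest form.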

A complex-number implementation:

    import torch
    from typing import Tuple

    def precompute_freqs_cis(dim: int, seq_len: int, freqs: float = 10000.0):
        # Rotation angle for each pair after grouping the vector's elements two by two
        theta = 1.0 / (freqs ** (torch.arange(0, dim, 2).float() / dim))
        # Token position indices t = [0, 1, ..., seq_len - 1]
        t = torch.arange(seq_len, device=theta.device)
        # theta.shape = [seq_len, dim // 2]
        theta = torch.outer(t, theta).float()
        # torch.polar docs: https://pytorch.org/docs/stable/generated/torch.polar.html
        # torch.polar takes abs and angle, which must have the same shape; abs is all ones here,
        # so theta_cis = abs * (cos(angle) + i * sin(angle))
        theta_cis = torch.polar(torch.ones_like(theta), theta)
        return theta_cis

    def apply_rotary_emb(
        xq: torch.Tensor,
        xk: torch.Tensor,
        theta_cis: torch.Tensor,
    ) -> Tuple[torch.Tensor, torch.Tensor]:
        # xq.shape = [batch_size, seq_len, dim]
        # xq_.shape = [batch_size, seq_len, dim // 2, 2]
        xq_ = xq.float().reshape(*xq.shape[:-1], -1, 2)
        xk_ = xk.float().reshape(*xk.shape[:-1], -1, 2)
        # Convert to complex: xq_.shape = [batch_size, seq_len, dim // 2]
        xq_ = torch.view_as_complex(xq_)
        xk_ = torch.view_as_complex(xk_)
        # Apply the rotation, then convert the result back to real numbers
        # xq_out.shape = [batch_size, seq_len, dim]
        xq_out = torch.view_as_real(xq_ * theta_cis).flatten(2)  # flatten from dim 2 onward
        xk_out = torch.view_as_real(xk_ * theta_cis).flatten(2)
        return xq_out.type_as(xq), xk_out.type_as(xk)

    if __name__ == '__main__':
        seq_len, dim = 3, 4
        freqs_cis = precompute_freqs_cis(dim=dim, seq_len=seq_len, freqs=10000.0)
        xq = torch.rand(1, seq_len, dim)
        xk = torch.rand(1, seq_len, dim)
        res = apply_rotary_emb(xq, xk, freqs_cis)
        # res is a tuple (xq_out, xk_out); each tensor has shape [1, seq_len, dim]

    '''
    class Attention(nn.Module):
        def __init__(self, args: ModelArgs):
            super().__init__()
            self.wq = Linear(...)
            self.wk = Linear(...)
            self.wv = Linear(...)
            self.freqs_cis = precompute_freqs_cis(dim, max_seq_len * 2)

        def forward(self, x: torch.Tensor):
            batch_size, seq_len, _ = x.shape
            xq, xk, xv = self.wq(x), self.wk(x), self.wv(x)
            xq = xq.view(batch_size, seq_len, dim)
            xk = xk.view(batch_size, seq_len, dim)
            xv = xv.view(batch_size, seq_len, dim)
            # Apply the rotary position embedding before the attention operation
            xq, xk = apply_rotary_emb(xq, xk, theta_cis=self.freqs_cis[:seq_len])
            # scores.shape = (batch_size, seq_len, seq_len)
            scores = torch.matmul(xq, xk.transpose(1, 2)) / math.sqrt(dim)
            scores = F.softmax(scores.float(), dim=-1)
            output = torch.matmul(scores, xv)  # (batch_size, seq_len, dim)
            # ......
    '''
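The point of rotating both query and key is that the attention score then depends only on the relative offset between their positions. The sketch below checks this with a compact re-implementation of the complex rotation; the helper names `rope_complex` and `score`, the parameter name `base`, and the test positions are illustrative assumptions.

```python
import torch

def rope_complex(x, positions, base=10000.0):
    """Rotate adjacent pairs of x (shape [seq, dim]) by position-dependent angles."""
    dim = x.shape[-1]
    theta = 1.0 / (base ** (torch.arange(0, dim, 2).float() / dim))
    angles = torch.outer(positions.float(), theta)        # [seq, dim // 2]
    cis = torch.polar(torch.ones_like(angles), angles)    # e^{i * angle}
    x_c = torch.view_as_complex(x.float().reshape(*x.shape[:-1], -1, 2))
    return torch.view_as_real(x_c * cis).flatten(-2)      # back to [seq, dim]

torch.manual_seed(0)
q = torch.rand(1, 8)   # one query vector
k = torch.rand(1, 8)   # one key vector

def score(m, n):
    """Dot product of q placed at position m and k placed at position n."""
    qm = rope_complex(q, torch.tensor([m]))
    kn = rope_complex(k, torch.tensor([n]))
    return (qm * kn).sum()

# Same offset m - n = 2 at different absolute positions.
s_near = score(3, 1)
s_far = score(10, 8)
```

`s_near` and `s_far` agree up to float32 rounding, even though the absolute positions differ.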

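Understanding RoPE without complex numbers

$\mathcal{R}_n$ and $\mathcal{R}_{x,y}$ are both d×d square matrices, and both are orthogonal. $x_m$ and $x_n$ are the vectors at positions m and n, each of shape (d, 1), which gives the formulas below. Note that $\mathcal{R}_n$ and $\mathcal{R}_{x,y}$ here apply to a single position n or (x, y); only the matrix-multiplication-free implementation further down operates on the whole sequence: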

$$
\text{RoPE-1D}\left(\mathcal{R}_n\right) =
\begin{pmatrix}
\cos n\theta_0 & -\sin n\theta_0 & 0 & 0 & \cdots & 0 & 0 & 0 & 0 \\
\sin n\theta_0 & \cos n\theta_0 & 0 & 0 & \cdots & 0 & 0 & 0 & 0 \\
0 & 0 & \cos n\theta_1 & -\sin n\theta_1 & \cdots & 0 & 0 & 0 & 0 \\
0 & 0 & \sin n\theta_1 & \cos n\theta_1 & \cdots & 0 & 0 & 0 & 0 \\
\vdots & \vdots & \vdots & \vdots & \ddots & \vdots & \vdots & \vdots & \vdots \\
0 & 0 & 0 & 0 & \cdots & \cos n\theta_{\frac{d}{2}-2} & -\sin n\theta_{\frac{d}{2}-2} & 0 & 0 \\
0 & 0 & 0 & 0 & \cdots & \sin n\theta_{\frac{d}{2}-2} & \cos n\theta_{\frac{d}{2}-2} & 0 & 0 \\
0 & 0 & 0 & 0 & \cdots & 0 & 0 & \cos n\theta_{\frac{d}{2}-1} & -\sin n\theta_{\frac{d}{2}-1} \\
0 & 0 & 0 & 0 & \cdots & 0 & 0 & \sin n\theta_{\frac{d}{2}-1} & \cos n\theta_{\frac{d}{2}-1}
\end{pmatrix}
$$

$$
\text{RoPE-2D}\left(\mathcal{R}_{x,y}\right) =
\begin{pmatrix}
\cos x\theta_0 & -\sin x\theta_0 & 0 & 0 & \cdots & 0 & 0 & 0 & 0 \\
\sin x\theta_0 & \cos x\theta_0 & 0 & 0 & \cdots & 0 & 0 & 0 & 0 \\
0 & 0 & \cos y\theta_1 & -\sin y\theta_1 & \cdots & 0 & 0 & 0 & 0 \\
0 & 0 & \sin y\theta_1 & \cos y\theta_1 & \cdots & 0 & 0 & 0 & 0 \\
\vdots & \vdots & \vdots & \vdots & \ddots & \vdots & \vdots & \vdots & \vdots \\
0 & 0 & 0 & 0 & \cdots & \cos x\theta_{d/2-2} & -\sin x\theta_{d/2-2} & 0 & 0 \\
0 & 0 & 0 & 0 & \cdots & \sin x\theta_{d/2-2} & \cos x\theta_{d/2-2} & 0 & 0 \\
0 & 0 & 0 & 0 & \cdots & 0 & 0 & \cos y\theta_{d/2-1} & -\sin y\theta_{d/2-1} \\
0 & 0 & 0 & 0 & \cdots & 0 & 0 & \sin y\theta_{d/2-1} & \cos y\theta_{d/2-1}
\end{pmatrix}
$$
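A minimal sketch of the 2D variant, assuming the layout shown in the RoPE-2D matrix: pairs with an even index rotate by the x coordinate and pairs with an odd index rotate by the y coordinate. The function name `rope_2d`, the `base=10000.0` default for the angles, and the requirement that d be divisible by 4 are assumptions made for illustration.

```python
import torch

def rope_2d(vec, x, y, base=10000.0):
    """Rotate a d-dim vector at grid position (x, y); even pairs use x, odd pairs use y."""
    d = vec.shape[-1]                                   # assumed divisible by 4
    theta = 1.0 / (base ** (torch.arange(0, d, 2).float() / d))      # theta_0 .. theta_{d/2-1}
    pos = torch.tensor([x, y], dtype=torch.float32).repeat(d // 4)   # x, y, x, y, ...
    angles = pos * theta                                # one angle per adjacent pair
    pairs = torch.view_as_complex(vec.float().reshape(-1, 2))
    rotated = pairs * torch.polar(torch.ones_like(angles), angles)
    return torch.view_as_real(rotated).flatten()

torch.manual_seed(0)
q, k = torch.rand(8), torch.rand(8)

# Same 2D offset (2, 3) at two different absolute grid positions.
s1 = rope_2d(q, 3, 5) @ rope_2d(k, 1, 2)
s2 = rope_2d(q, 13, 25) @ rope_2d(k, 11, 22)
```

`s1` and `s2` match up to float32 rounding, so the score depends only on the offset in x and the offset in y.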

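The formula below is also worth remembering: it shows that RoPE left-multiplies by the rotation matrix only after the $W_q$ and $W_k$ projections. Because $\mathcal{R}_m^\top \mathcal{R}_n = \mathcal{R}_{n-m}$, only the relative position survives:

$$
q_m^\top k_n = (\mathcal{R}_{m} W_q x_m)^\top (\mathcal{R}_{n} W_k x_n) = x_m^\top W_q^\top \mathcal{R}_{n-m} W_k x_n
$$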
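The matrix identities behind this formula can be verified directly by building the block-diagonal rotation matrix. This is a sketch under stated assumptions: `rotation_matrix` is a hypothetical helper, the angles use `base=10000.0`, and the weights and inputs are random placeholders in float64.

```python
import torch

def rotation_matrix(n, d, base=10000.0):
    """Block-diagonal d x d RoPE matrix for position n (one 2x2 rotation per pair)."""
    theta = 1.0 / (base ** (torch.arange(0, d, 2).double() / d))
    blocks = []
    for t in theta:
        c, s = torch.cos(n * t), torch.sin(n * t)
        blocks.append(torch.stack([torch.stack([c, -s]), torch.stack([s, c])]))
    return torch.block_diag(*blocks)

d, m, n = 8, 3, 7
R_m, R_n, R_rel = rotation_matrix(m, d), rotation_matrix(n, d), rotation_matrix(n - m, d)

torch.manual_seed(0)
W_q = torch.rand(d, d, dtype=torch.float64)   # placeholder projection weights
W_k = torch.rand(d, d, dtype=torch.float64)
x_m = torch.rand(d, dtype=torch.float64)      # placeholder token vectors
x_n = torch.rand(d, dtype=torch.float64)

lhs = (R_m @ W_q @ x_m) @ (R_n @ W_k @ x_n)   # rotate after projecting, then dot product
rhs = x_m @ W_q.T @ R_rel @ W_k @ x_n         # a single rotation by the offset n - m
```

Both sides agree, and `R_m.T @ R_m` is the identity, which is the orthogonality mentioned earlier.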

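An implementation that needs no matrix multiplication, where $\otimes$ is element-wise multiplication (indices in this formula are 1-based, so $\theta_1$ here plays the role of $\theta_0$ in the matrices above):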

$$
R_{\Theta, m}^d x =
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ \vdots \\ x_{d-1} \\ x_d
\end{pmatrix}
\otimes
\begin{pmatrix}
\cos m \theta_1 \\ \cos m \theta_1 \\ \cos m \theta_2 \\ \cos m \theta_2 \\ \vdots \\ \cos m \theta_{d/2} \\ \cos m \theta_{d/2}
\end{pmatrix}
+
\begin{pmatrix}
-x_2 \\ x_1 \\ -x_4 \\ x_3 \\ \vdots \\ -x_d \\ x_{d-1}
\end{pmatrix}
\otimes
\begin{pmatrix}
\sin m \theta_1 \\ \sin m \theta_1 \\ \sin m \theta_2 \\ \sin m \theta_2 \\ \vdots \\ \sin m \theta_{d/2} \\ \sin m \theta_{d/2}
\end{pmatrix}
$$
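As a sanity check, the element-wise form can be compared with an explicit 2×2 rotation applied to each pair. The function names `rope_elementwise` and `rope_pairwise_matrix` and the `base=10000.0` default are illustrative assumptions; the code is 0-indexed, so `x[0], x[1]` correspond to $x_1, x_2$ in the formula.

```python
import torch

def rope_elementwise(x, m, base=10000.0):
    """x * cos + (-x2, x1, -x4, x3, ...) * sin, with each angle repeated twice."""
    d = x.shape[-1]
    theta = 1.0 / (base ** (torch.arange(0, d, 2).double() / d))
    angles = (m * theta).repeat_interleave(2)            # m*theta per element, pairs share an angle
    first, second = x[0::2], x[1::2]                     # first / second element of each pair
    x_rot = torch.stack((-second, first), dim=-1).flatten()
    return x * angles.cos() + x_rot * angles.sin()

def rope_pairwise_matrix(x, m, base=10000.0):
    """Reference: multiply each adjacent pair by its own 2x2 rotation matrix."""
    d = x.shape[-1]
    theta = 1.0 / (base ** (torch.arange(0, d, 2).double() / d))
    out = torch.empty_like(x)
    for i, t in enumerate(theta):
        c, s = torch.cos(m * t), torch.sin(m * t)
        block = torch.stack([torch.stack([c, -s]), torch.stack([s, c])])
        out[2 * i: 2 * i + 2] = block @ x[2 * i: 2 * i + 2]
    return out

torch.manual_seed(0)
x = torch.rand(8, dtype=torch.float64)
fast = rope_elementwise(x, m=5)
ref = rope_pairwise_matrix(x, m=5)
```

The two results coincide, and the element-wise version costs O(d) per vector instead of the O(d²) of a dense matrix product.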

An implementation from Qwen2-VL (transformers/models/qwen2_vl/modeling_qwen2_vl.py):

    def rotate_half(x):
        """Rotates half the hidden dims of the input."""
        x1 = x[..., : x.shape[-1] // 2]
        x2 = x[..., x.shape[-1] // 2 :]
        return torch.cat((-x2, x1), dim=-1)

    def apply_multimodal_rotary_pos_emb(q, k, cos, sin, mrope_section, unsqueeze_dim=1):
        # ...
        q_embed = (q * cos) + (rotate_half(q) * sin)
        k_embed = (k * cos) + (rotate_half(k) * sin)
        return q_embed, k_embed

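In the implementation above, the way rotate_half concatenates x looks counterintuitive: it pairs dimension j with dimension j + d/2 rather than pairing adjacent dimensions, because the Qwen code rearranges the layout beforehand. Below is a comparison of the complex version and the non-complex version, both using adjacent pairs: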

    import torch
    from typing import Tuple

    def precompute_theta_cis(dim: int, seq_len: int, freq: float = 10000.0):
        # Rotation angle for each pair after grouping the vector's elements two by two
        theta_vec = 1.0 / (freq ** (torch.arange(0, dim, 2).float() / dim))
        print('theta_vec={}'.format(theta_vec))
        # Token position indices [0, 1, ..., seq_len - 1]
        seq_vec = torch.arange(seq_len)
        # theta_mat.shape = [seq_len, dim // 2]
        theta_mat = torch.outer(seq_vec, theta_vec).float()
        # torch.polar docs: https://pytorch.org/docs/stable/generated/torch.polar.html
        # torch.polar takes abs and angle (same shape); abs is all ones here,
        # so theta_cis = abs * (cos(angle) + i * sin(angle))
        theta_cis = torch.polar(torch.ones_like(theta_mat), theta_mat)
        return theta_cis

    def apply_rotary_emb(
        q: torch.Tensor,
        k: torch.Tensor,
        theta_cis: torch.Tensor,
    ) -> Tuple[torch.Tensor, torch.Tensor]:
        # q.shape = [batch_size, seq_len, dim] -> [batch_size, seq_len, dim // 2, 2]
        q = q.float().reshape(*q.shape[:-1], -1, 2)
        k = k.float().reshape(*k.shape[:-1], -1, 2)
        # Convert to complex: q_c.shape = [batch_size, seq_len, dim // 2]
        q_c = torch.view_as_complex(q)
        k_c = torch.view_as_complex(k)
        # Apply the rotation, then convert back to real numbers
        # xq_out.shape = [batch_size, seq_len, dim]
        xq_out = torch.view_as_real(q_c * theta_cis).flatten(2)  # flatten from dim 2 onward
        xk_out = torch.view_as_real(k_c * theta_cis).flatten(2)
        return xq_out, xk_out

    def precompute_cos_sin(dim, seq_len, freqs=10000.0):
        # Each angle is repeated twice so that cos/sin line up with adjacent pairs
        theta_vec = 1.0 / (freqs ** (torch.arange(0, dim, 2).float().repeat_interleave(2) / dim))
        seq_vec = torch.arange(seq_len)
        theta_mat = torch.outer(seq_vec, theta_vec).float()
        cos, sin = theta_mat.cos(), theta_mat.sin()
        return cos, sin

    def rotate_half(x):
        # Interleaved layout: (x0, x1, x2, x3, ...) -> (-x1, x0, -x3, x2, ...).
        # A plain torch.cat((-x2, x1)) would give (-x1, -x3, x0, x2), which does not
        # line up with the repeat_interleave'd cos/sin, so interleave the two halves.
        x1 = x[..., 0::2]
        x2 = x[..., 1::2]
        return torch.stack((-x2, x1), dim=-1).flatten(-2)

    def apply_multimodal_rotary_pos_emb(q, k, cos, sin):
        # Implementation without complex numbers
        q_embed = (q * cos) + (rotate_half(q) * sin)
        k_embed = (k * cos) + (rotate_half(k) * sin)
        return q_embed, k_embed

    if __name__ == '__main__':
        seq_len, dim = 3, 4
        cos, sin = precompute_cos_sin(dim=dim, seq_len=seq_len, freqs=10000.0)
        xq = torch.rand(1, seq_len, dim)
        xk = torch.rand(1, seq_len, dim)
        res1 = apply_multimodal_rotary_pos_emb(xq, xk, cos, sin)
        print('res1={}'.format(res1))
        theta_cis = precompute_theta_cis(dim=dim, seq_len=seq_len, freq=10000.0)
        res2 = apply_rotary_emb(xq, xk, theta_cis)
        print('res2={}'.format(res2))
        # The two implementations should agree up to float32 rounding
        assert all(torch.allclose(a, b, atol=1e-5) for a, b in zip(res1, res2))

    '''
    Output from one run (the inputs are random, so the values differ between runs):
    theta_vec=tensor([1.0000, 0.0100])
    res1 and res2 both print:
    (tensor([[[ 0.0758, 0.4353, 0.1800, 0.4360],
              [-0.3335, 0.8553, 0.0838, 0.6422],
              [-0.9090, 0.6731, 0.6166, 0.6571]]]), tensor([[[ 0.8717, 0.7018, 0.1076, 0.1226],
              [ 0.0404, 0.7218, 0.1381, 0.1104],
              [-0.4338, 0.8226, 0.5280, 0.3026]]]))
    '''
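The half-split `rotate_half` quoted from Qwen2-VL and the adjacent-pair layout differ only by a fixed permutation of the dimensions. The sketch below illustrates that relationship on a single vector; the function names, the permutation `perm`, and the choice of position 3 are assumptions made for the example, and cos/sin for the half-split layout are assumed to be laid out as the angles concatenated with themselves.

```python
import torch

def rope_interleaved(x, angles):
    """Pairs (x[2j], x[2j+1]); angles has shape [dim // 2]."""
    cos = angles.cos().repeat_interleave(2)
    sin = angles.sin().repeat_interleave(2)
    rot = torch.stack((-x[1::2], x[0::2]), dim=-1).flatten()
    return x * cos + rot * sin

def rope_half_split(x, angles):
    """Pairs (x[j], x[j + dim // 2]), matching a half-split rotate_half."""
    both = torch.cat((angles, angles))        # angle j is used at index j and j + dim // 2
    half = x.shape[-1] // 2
    rot = torch.cat((-x[half:], x[:half]))
    return x * both.cos() + rot * both.sin()

torch.manual_seed(0)
dim = 8
x = torch.rand(dim, dtype=torch.float64)
angles = 3 * (1.0 / (10000.0 ** (torch.arange(0, dim, 2).double() / dim)))  # position 3

# Permutation from interleaved order to half-split order: [0, 2, 4, 6, 1, 3, 5, 7]
perm = torch.cat((torch.arange(0, dim, 2), torch.arange(1, dim, 2)))
out_a = rope_interleaved(x, angles)[perm]     # rotate in interleaved layout, then permute
out_b = rope_half_split(x[perm], angles)      # permute first, then rotate in half-split layout
```

Since `out_a` equals `out_b`, and a dot product is unchanged when the same permutation is applied to both query and key, the two conventions produce the same attention scores as long as the layout is applied consistently.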

EulerFormer

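From the paper EulerFormer: Sequential User Behavior Modeling with Complex Vector Attention

EulerFormer adjusts the semantic rotation angle ΔS between query and key. RoPE works by adding the positional rotation angle ΔP to ΔS. The distribution of ΔS can differ greatly between Transformer layers, so using one shared set of ΔP to fit the ΔS of every layer may not be optimal; the paper therefore adjusts ΔS per layer to find a better way of fusing the two.

Blog posts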

RoPE

The following is reposted from https://kexue.fm/archives/8265:

RoPE-Tie(RoPE for Text-image)

RoPE-Tie-v2

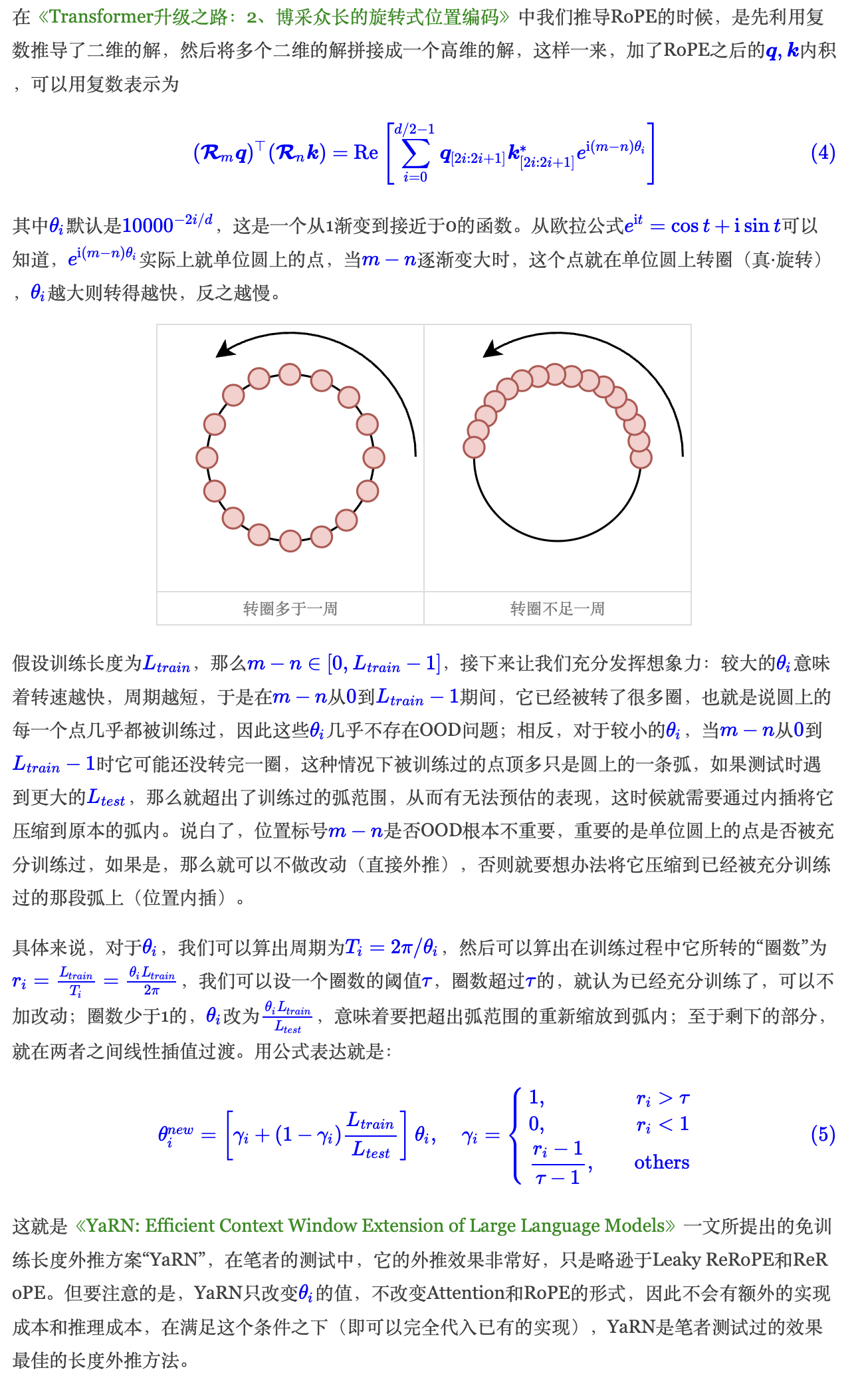

YaRN (Yet another RoPE extensioN)

From https://spaces.ac.cn/archives/9948

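This article introduces RoPE (Rotary Position Embedding), a positional encoding method for Transformer models that is built on complex arithmetic. By multiplying vectors by a rotation factor in complex form, RoPE injects position information while all computation remains real-valued. The code examples show how to implement it in PyTorch and apply it to the input vectors of the attention mechanism, making the model sensitive to sequence position.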
