I posted this question on the NVIDIA developer forum. Below is my exchange with the moderator:

https://forums.developer.nvidia.com/t/can-deepstream-test1-run-on-jetson-nano-2g/179912

Hi,

After compiling deepstream-test1 directly, the program doesn't run on my Jetson Nano 2G. However, after I remove the deep learning inference element nvinfer from the code, the program runs. Is this because the 2 GB of memory on my Nano is too small to run the model, or is there another reason?

What kind of error did you encounter?

When I run `./deepstream-test1-app sample_720p.h264`, the deepstream-test1-app window appears, but only its title bar and border are drawn and no video is displayed inside it. Through the window I just see the Jetson Nano desktop behind it. The program's output is as follows:

```
jetson@jetson-desktop:/opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-test1$ ./deepstream-test1-app sample_720p.h264
Now playing: sample_720p.h264
Using winsys: x11
Opening in BLOCKING MODE
0:00:11.198570435 8436 0x55934fcad0 INFO nvinfer gstnvinfer.cpp:619:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::deserializeEngineAndBackend() <nvdsinfer_context_impl.cpp:1702> [UID = 1]: deserialized trt engine from :/opt/nvidia/deepstream/deepstream-5.1/samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_fp16.engine
INFO: [Implicit Engine Info]: layers num: 3
0 INPUT kFLOAT input_1 3x368x640
1 OUTPUT kFLOAT conv2d_bbox 16x23x40
2 OUTPUT kFLOAT conv2d_cov/Sigmoid 4x23x40
0:00:11.218911904 8436 0x55934fcad0 INFO nvinfer gstnvinfer.cpp:619:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:1806> [UID = 1]: Use deserialized engine model: /opt/nvidia/deepstream/deepstream-5.1/samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_fp16.engine
0:00:11.316350994 8436 0x55934fcad0 INFO nvinfer gstnvinfer_impl.cpp:313:notifyLoadModelStatus:<primary-nvinference-engine> [UID 1]: Load new model:dstest1_pgie_config.txt sucessfully
Running...
NvMMLiteOpen : Block : BlockType = 261
NVMEDIA: Reading vendor.tegra.display-size : status: 6
NvMMLiteBlockCreate : Block : BlockType = 261
ERROR: [TRT]: ../rtSafe/cuda/reformat.cu (925) - Cuda Error in NCHWToNCHHW2: 719 (unspecified launch failure)
ERROR: [TRT]: FAILED_EXECUTION: std::exception
ERROR: Failed to enqueue inference batch
ERROR: Infer context enqueue buffer failed, nvinfer error:NVDSINFER_TENSORRT_ERROR
0:00:12.668284961 8436 0x5593091de0 WARN nvinfer gstnvinfer.cpp:1225:gst_nvinfer_input_queue_loop:<primary-nvinference-engine> error: Failed to queue input batch for inferencing
ERROR: Failed to make stream wait on event, cuda err_no:4, err_str:cudaErrorLaunchFailure
ERROR: Preprocessor transform input data failed., nvinfer error:NVDSINFER_CUDA_ERROR
ERROR from element primary-nvinference-engine: Failed to queue input batch for inferencing
0:00:12.668905003 8436 0x5593091de0 WARN nvinfer gstnvinfer.cpp:1225:gst_nvinfer_input_queue_loop:<primary-nvinference-engine> error: Failed to queue input batch for inferencing
ERROR: Failed to make stream wait on event, cuda err_no:4, err_str:cudaErrorLaunchFailure
ERROR: Preprocessor transform input data failed., nvinfer error:NVDSINFER_CUDA_ERROR
0:00:12.669423588 8436 0x5593091de0 WARN nvinfer gstnvinfer.cpp:1225:gst_nvinfer_input_queue_loop:<primary-nvinference-engine> error: Failed to queue input batch for inferencing
ERROR: Failed to make stream wait on event, cuda err_no:4, err_str:cudaErrorLaunchFailure
ERROR: Preprocessor transform input data failed., nvinfer error:NVDSINFER_CUDA_ERROR
0:00:12.669702021 8436 0x5593091de0 WARN nvinfer gstnvinfer.cpp:1225:gst_nvinfer_input_queue_loop:<primary-nvinference-engine> error: Failed to queue input batch for inferencing
Error details: /dvs/git/dirty/git-master_linux/deepstream/sdk/src/gst-plugins/gst-nvinfer/gstnvinfer.cpp(1225): gst_nvinfer_input_queue_loop (): /GstPipeline:dstest1-pipeline/GstNvInfer:primary-nvinference-engine
Returned, stopping playback
Frame Number = 0 Number of objects = 0 Vehicle Count = 0 Person Count = 0
** (deepstream-test1-app:8436): WARNING **: 14:51:19.402: Use gst_egl_image_allocator_alloc() to allocate from this allocator
0:00:13.099598980 8436 0x5593091c00 WARN nvinfer gstnvinfer.cpp:1984:gst_nvinfer_output_loop:<primary-nvinference-engine> error: Internal data stream error.
0:00:13.099640229 8436 0x5593091c00 WARN nvinfer gstnvinfer.cpp:1984:gst_nvinfer_output_loop:<primary-nvinference-engine> error: streaming stopped, reason error (-5)
Frame Number = 1 Number of objects = 0 Vehicle Count = 0 Person Count = 0
** (deepstream-test1-app:8436): WARNING **: 14:51:19.417: Use gst_egl_image_allocator_alloc() to allocate from this allocator
Frame Number = 2 Number of objects = 0 Vehicle Count = 0 Person Count = 0
** (deepstream-test1-app:8436): WARNING **: 14:51:19.429: Use gst_egl_image_allocator_alloc() to allocate from this allocator
Frame Number = 3 Number of objects = 0 Vehicle Count = 0 Person Count = 0
** (deepstream-test1-app:8436): WARNING **: 14:51:19.442: Use gst_egl_image_allocator_alloc() to allocate from this allocator
```

I just tried deepstream-test1-app on a Nano board; it runs and I can see the video displayed.

Can you provide your platform information?

- **Hardware Platform (Jetson / GPU)**
- **DeepStream Version**
- **JetPack Version (valid for Jetson only)**
- **TensorRT Version**
- **NVIDIA GPU Driver Version (valid for GPU only)**

Thank you so much! My running environment is as follows:

| **• | Hardware Platform: Jetson Nano 2G ** |

|---|---|

| **• | DeepStream Version: DeepStream 5.1 ** |

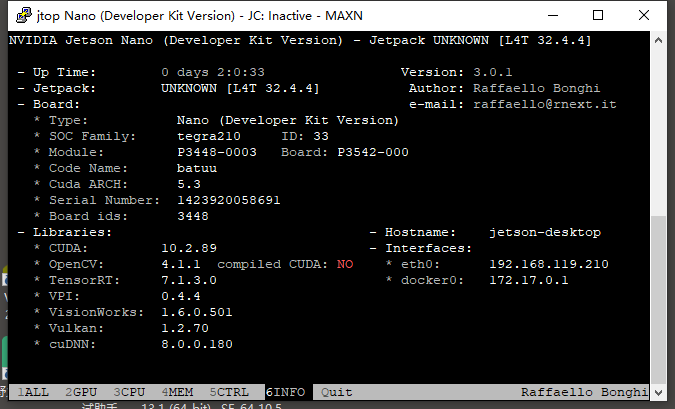

| **• | JetPack Version UNKNOWN [L4T 32.4.4] (this information is from JTOP)** |

| **• | TensorRT Version: 7.1.3.0 ** |

| **• | NVIDIA GPU Driver Version (valid for GPU only)** |

The information displayed in jtop is shown in the figure below:

DeepStream 5.1 is based on JetPack 4.5.1 GA (corresponding to the L4T 32.5.1 release).

Please install DeepStream 5.1 and its dependencies correctly, as described here:

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_Quickstart.html#jetson-setup

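Based on Fiona.Chen's reply, the problem is most likely a software version mismatch on my Jetson Nano: jtop reports L4T 32.4.4, while DeepStream 5.1 is built for L4T 32.5.1. I will download the system image from the URL above and reflash the system. Later I will write up the results in detail in an article to share with everyone!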
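Before reflashing, it can help to confirm the L4T release directly on the board instead of relying on jtop. The script below is a minimal sketch, assuming that the first line of `/etc/nv_tegra_release` has the usual L4T form `# R32 (release), REVISION: 4.4, ...` and that DeepStream 5.1 needs exactly L4T R32.5.1, as stated in the forum reply. The names `REQUIRED_L4T`, `parse_l4t_release`, `version_str` and `is_compatible` are hypothetical helpers written for this illustration, not part of DeepStream or JetPack.

```python
import re
from pathlib import Path

# Assumption taken from the forum reply: DeepStream 5.1 targets
# JetPack 4.5.1 GA, i.e. L4T R32.5.1. Adjust for other DeepStream releases.
REQUIRED_L4T = (32, 5, 1)

# Assumed header format of /etc/nv_tegra_release on L4T, for example:
# "# R32 (release), REVISION: 4.4, GCID: ..., BOARD: t210ref, ..."
_RELEASE_RE = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*(\d+)\.(\d+)")


def parse_l4t_release(text):
    """Return the L4T version as a (major, minor, patch) tuple of ints."""
    match = _RELEASE_RE.search(text)
    if match is None:
        raise ValueError("unrecognised nv_tegra_release format")
    return tuple(int(g) for g in match.groups())


def version_str(version):
    """Format a version tuple such as (32, 5, 1) as 'R32.5.1'."""
    return "R" + ".".join(str(part) for part in version)


def is_compatible(version, required=REQUIRED_L4T):
    """Exact match, because each DeepStream release is tied to one L4T release."""
    return version == required


if __name__ == "__main__":
    release_file = Path("/etc/nv_tegra_release")
    if not release_file.exists():
        print(f"{release_file} not found; this does not look like an L4T (Jetson) system")
    else:
        found = parse_l4t_release(release_file.read_text())
        status = "OK" if is_compatible(found) else "MISMATCH, reflash with the matching JetPack"
        print(f"L4T {version_str(found)} vs required {version_str(REQUIRED_L4T)}: {status}")
```

For the board in this post, a header of `# R32 (release), REVISION: 4.4` parses to `(32, 4, 4)`, which `is_compatible` rejects. The exact-match check is deliberately strict; if a later DeepStream release supports a range of L4T versions, `is_compatible` would need to compare against that range instead.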

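Summary: running the DeepStream test app on a Jetson Nano 2G failed, but the program ran normally once the deep learning model was removed. The exchange with the NVIDIA forum moderator showed that the problem was probably caused by mismatched software versions.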
