Introduction: Google Inception Net first appeared in the ILSVRC 2014 competition, where it took first place.

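Features:

1. It keeps computation and parameter counts under control while achieving very good classification performance, at a depth of 22 layers.

2. It needs only about 1.5 billion floating-point operations and has only about 5 million parameters.

3. It removes the final fully connected layers and replaces them with a global average pooling layer (which reduces each feature map to 1x1), which helps prevent overfitting.

4. The carefully designed Inception Module improves parameter efficiency and goes a step further than NIN by adding branch networks.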
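To make point 3 concrete, here is a minimal sketch of global average pooling in plain Python, together with a rough parameter comparison. The helper names and the 7 x 7 x 1024 feature-map size are assumptions chosen for illustration, not values taken from this article.

```python
def global_average_pool(feature_map):
    """Average each channel of an H x W x C nested list down to one value."""
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    totals = [0.0] * channels
    for row in feature_map:
        for pixel in row:
            for c, value in enumerate(pixel):
                totals[c] += value
    return [t / (height * width) for t in totals]


def dense_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


# Toy 2 x 2 feature map with 3 channels.
fmap = [[[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]],
        [[5.0, 0.0, 2.0], [7.0, 0.0, 2.0]]]
pooled = global_average_pool(fmap)  # one value per channel

# Assumed sizes: a 7 x 7 x 1024 feature map feeding a 1000-class classifier.
flatten_then_dense = dense_params(7 * 7 * 1024, 1000)
pool_then_dense = dense_params(1024, 1000)
print(pooled, flatten_then_dense, pool_then_dense)
```

Under these assumed sizes, pooling first shrinks the classifier from roughly 50 million parameters to roughly 1 million, which is where most of the saving comes from.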

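Additional notes:

A convolutional layer gains expressive power mainly by adding output channels, but this increases computation and the risk of overfitting. Each output channel corresponds to one filter. A filter shares its parameters across all spatial positions and can extract only one kind of feature, so one output channel can perform only one kind of feature processing.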

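About NIN (Network in Network):

1. It consists mainly of cascaded convolutional layers and MLPConv layers.

2. MLPConv in NIN allows information to be combined across output channels.

3. An MLPConv layer is roughly equivalent to an ordinary convolutional layer followed by a 1x1 convolution and a ReLU activation.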
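The cross-channel mixing described above can be sketched in a few lines of plain Python: a 1x1 convolution is just the same small linear layer applied independently at every pixel, followed here by ReLU. The function name, weights, and input values are made up for illustration.

```python
def conv1x1_relu(feature_map, weights, biases):
    """Apply a 1x1 convolution followed by ReLU.

    feature_map: H x W x C_in nested list.
    weights: C_out x C_in nested list; biases: list of length C_out.
    """
    output = []
    for row in feature_map:
        out_row = []
        for pixel in row:
            out_pixel = []
            for w_row, b in zip(weights, biases):
                # Each output channel is a weighted sum over the input channels.
                z = sum(w * x for w, x in zip(w_row, pixel)) + b
                out_pixel.append(max(0.0, z))  # ReLU
            out_row.append(out_pixel)
        output.append(out_row)
    return output


fmap = [[[1.0, 2.0], [3.0, 4.0]]]                  # 1 x 2 image, 2 channels
weights = [[1.0, 1.0], [-1.0, 1.0], [0.5, 0.0]]    # 3 output channels
biases = [0.0, 0.5, -1.0]
mixed = conv1x1_relu(fmap, weights, biases)
print(mixed)
```

The spatial size is unchanged while the channel count goes from 2 to 3, which is the "raise or reduce the dimension" role that 1x1 convolutions play in the Inception Module.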

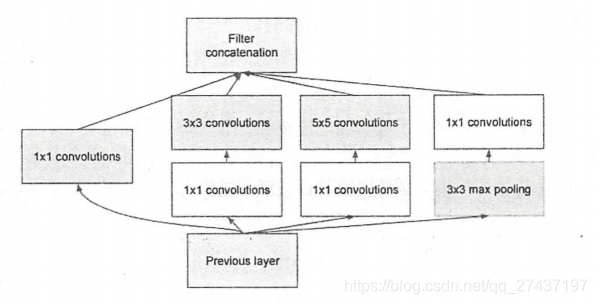

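Basic structure of the Inception Module:

1. A 1x1 convolution organizes information across channels, which improves the network's expressive power, and it can also increase or reduce the number of output channels.

2. All 4 branches use a 1x1 convolution for low-cost cross-channel feature transformation.

3. 1x1 convolutions are very cost-effective: with very little computation they add one more layer of feature transformation and nonlinearity.

4. The 4 branches are merged at the end by a concatenation along the output-channel dimension.

5. Convolutions of 3 different sizes plus 1 max pooling make the network more adaptable to different scales.

6. The Inception Module lets the network grow in depth and width efficiently, improving accuracy without overfitting.

The main goal of Inception Net is to find the optimal sparse structural unit (that is, the Inception Module).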
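A quick back-of-the-envelope check shows why the 1x1 reduction is so cheap. The sketch below counts convolution weights (biases ignored) using the channel numbers of the Mixed_5b block from the Inception V3 listing later in this article: 192 input channels, a 48-channel 1x1 reduction before the 5x5 convolution, and branch outputs of 64, 64, 96, and 32 channels. The helper name is an assumption.

```python
def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in a convolution layer (biases ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels


in_channels = 192

# A 5x5 convolution applied directly to all 192 input channels.
direct_5x5 = conv_weights(5, 5, in_channels, 64)

# The same branch with a 1x1 reduction to 48 channels first.
bottleneck_5x5 = (conv_weights(1, 1, in_channels, 48)
                  + conv_weights(5, 5, 48, 64))

# The branches are concatenated along the channel axis.
branch_channels = [64, 64, 96, 32]
concat_channels = sum(branch_channels)

print(direct_5x5, bottleneck_5x5, concat_channels)
```

With these numbers the reduced branch needs well under a third of the weights of the direct 5x5 convolution, and the concatenated output has 256 channels.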

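The Hebbian principle:

1. The sparse structure is based on the Hebbian principle.

2. The persistence and repetition of a reflex activity lead to a lasting increase in the stability of the connections between neurons.

3. If neurons A and B are close together and A repeatedly and persistently takes part in exciting B, some metabolic change makes A more effective as one of the cells that excite B.

4. Neurons that fire together wire together: stimulation during learning strengthens the synapses between neurons.

5. If the probability distribution of a dataset can be represented by a very large, very sparse neural network, the best way to build that network is layer by layer: cluster the highly correlated nodes of the previous layer and connect each resulting cluster together.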

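How Inception Net applies the Hebbian principle:

1. In image data, neighboring regions are naturally highly correlated, so adjacent pixels are connected together by the convolution operation.

2. With multiple convolution kernels, the outputs at the same spatial position but in different channels are very highly correlated.

3. A 1x1 convolution connects these correlated features together quite naturally.

4. Some larger convolutions can be used where appropriate to increase diversity.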

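The Inception Net network:

Inception Net is 22 layers deep, and besides the final output layer, its intermediate nodes also classify well. It therefore uses auxiliary classifiers: the output of an intermediate layer is used for classification and is added into the final result with a small weight (0.3). In the original paper this weight is applied to the auxiliary loss during training, and the auxiliary branches are discarded at inference. This acts like model ensembling, gives the network extra gradient signal for backpropagation, and provides additional regularization.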
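As a rough illustration of the 0.3 weighting, the sketch below combines a main and an auxiliary cross-entropy loss in plain Python. The function names and the example logits are hypothetical, and this only shows the arithmetic, not how TensorFlow would wire it up.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Cross-entropy loss for one example with an integer class label."""
    return -math.log(softmax(logits)[label])


def combined_loss(main_logits, aux_logits, label, aux_weight=0.3):
    """Main loss plus the auxiliary loss scaled by a small weight."""
    return (cross_entropy(main_logits, label)
            + aux_weight * cross_entropy(aux_logits, label))


# Made-up logits for a 3-class problem whose true class is 0.
loss = combined_loss([2.0, 0.5, -1.0], [1.0, 0.8, 0.1], label=0)
print(loss)
```

Because the auxiliary term is scaled down, the intermediate classifier nudges the early layers with extra gradient without dominating the objective.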

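The Inception Net family:

V1: It first ran on DistBelief, the predecessor of TensorFlow, and only on CPUs. It was trained with asynchronous SGD, with the learning rate decreased by 4% every 8 epochs. It also used techniques such as multi-scale and multi-crop data augmentation, and ensembled 7 models trained on differently sampled data.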
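The "4% every 8 epochs" schedule is a simple staircase decay. A minimal sketch, assuming a hypothetical base learning rate of 0.1 (the article does not state the actual value):

```python
def stepwise_learning_rate(base_lr, epoch, drop=0.04, every=8):
    """Learning rate after dropping by `drop` once per `every` epochs."""
    return base_lr * (1.0 - drop) ** (epoch // every)


# Print the learning rate at a few epochs for an assumed base rate of 0.1.
for epoch in (0, 7, 8, 16, 80):
    print(epoch, stepwise_learning_rate(0.1, epoch))
```

The rate stays constant within each 8-epoch window and is multiplied by 0.96 at each boundary.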

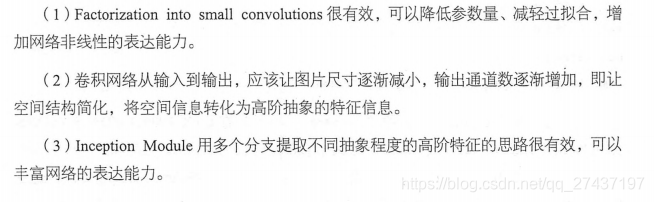

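V2: It borrowed from VGGNet, replacing a large 5x5 convolution with two 3x3 convolutions to reduce the parameter count and lessen overfitting. It also introduced Batch Normalization (BN), a very effective regularization method that speeds up the training of large convolutional networks many times over and also substantially improves classification accuracy after convergence.

With BN in place, the following adjustments are needed: increase the learning rate and speed up learning-rate decay; remove Dropout and reduce L2 regularization; remove LRN; shuffle the training samples more thoroughly; and reduce the photometric distortions applied during data augmentation.

Additional note: when applied to a layer of a neural network, BN standardizes the data within each mini-batch so that the output is normalized toward N(0,1), that is, zero mean and unit variance. This reduces the shift in the distribution of internal activations. The learning rate can then be increased many times over, and reaching the previous accuracy takes only 1/14 of the iterations, so training time is greatly shortened.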
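The per-mini-batch standardization can be sketched for a single feature as follows. This is a training-time-only illustration under simplifying assumptions: it omits the moving averages used at inference, and `gamma` and `beta` stand for the learned scale and shift. The epsilon of 0.001 mirrors the value used in the listing later in this article. The input values are made up.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, epsilon=0.001):
    """Standardize one feature across a mini-batch, then scale and shift."""
    n = len(batch)
    mean = sum(batch) / n
    variance = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(variance + epsilon) + beta
            for x in batch]


activations = [1.0, 2.0, 3.0, 4.0]   # one feature over a batch of 4
normalized = batch_norm(activations)
print(normalized)
```

The output has zero mean and a variance just under 1 (the small epsilon in the denominator keeps the division stable when the batch variance is tiny).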

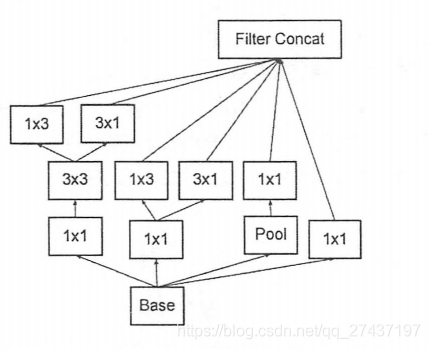

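V3: It introduced the idea of factorization into small convolutions, splitting a larger two-dimensional convolution into two smaller one-dimensional convolutions, for example splitting a 7x7 convolution into a 1x7 convolution and a 7x1 convolution.

On the one hand, this saves a large number of parameters, speeds up computation, and lessens overfitting, while adding one more nonlinearity that extends the model's expressive power. This asymmetric factorization can handle more and richer spatial features and increases feature diversity.

On the other hand, V3 refined the structure of the Inception Module, which now comes in three variants for 35x35, 17x17, and 8x8 feature maps. These modules appear only in the later part of the network, and the front part still consists of ordinary convolutional layers. Besides using branches, these modules also use branches within branches.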
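The savings from factorization are easy to verify by hand. The sketch below compares kernel weights per input/output channel pair and checks that the stacked small kernels cover the same receptive field as the large one. It assumes stride 1 and the same channel count in every layer, and the helper names are made up for illustration.

```python
def stacked_receptive_field(kernels):
    """Receptive field (height, width) of stride-1 convolutions in sequence."""
    height = 1 + sum(kh - 1 for kh, _ in kernels)
    width = 1 + sum(kw - 1 for _, kw in kernels)
    return height, width


def weights_per_channel_pair(kernels):
    """Kernel weights per (input channel, output channel) pair."""
    return sum(kh * kw for kh, kw in kernels)


full_7x7 = [(7, 7)]
factorized_7x7 = [(1, 7), (7, 1)]   # the V3 asymmetric factorization
full_5x5 = [(5, 5)]
two_3x3 = [(3, 3), (3, 3)]          # the V2 replacement for 5x5

for name, kernels in [('7x7', full_7x7), ('1x7 + 7x1', factorized_7x7),
                      ('5x5', full_5x5), ('3x3 + 3x3', two_3x3)]:
    print(name, stacked_receptive_field(kernels),
          weights_per_channel_pair(kernels))
```

Under these assumptions, 1x7 followed by 7x1 uses 14 weights instead of 49 for the same 7x7 receptive field, and two 3x3 convolutions use 18 instead of 25 for a 5x5 receptive field.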

V4: It incorporates ideas from Microsoft's ResNet.

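The following reproduces Inception V3. Several functions and components in contrib.slim greatly reduce the amount of code needed to define Inception Net. Note that tf.contrib exists only in TensorFlow 1.x, so the listing targets that version.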

```python
import tensorflow as tf

# TF-Slim lives under tf.contrib, which is only available in TensorFlow 1.x.
slim = tf.contrib.slim


def trunc_normal(stddev):
    """Truncated-normal initializer with mean 0.0 and the given stddev."""
    return tf.truncated_normal_initializer(0.0, stddev)


def inception_v3_arg_scope(weight_decay=0.00004,
                           stddev=0.1,
                           batch_norm_var_collection='moving_vars'):
    batch_norm_params = {
        'decay': 0.9997,  # decay coefficient for the moving averages
        'epsilon': 0.001,  # small constant that avoids division by zero
        'updates_collections': tf.GraphKeys.UPDATE_OPS,
        'variables_collections': {
            'beta': None,
            'gamma': None,
            'moving_mean': [batch_norm_var_collection],
            'moving_variance': [batch_norm_var_collection],
        }
    }

    # slim.arg_scope automatically assigns default values to function arguments.
    # Here it sets weights_regularizer for slim.conv2d and slim.fully_connected,
    # so the argument does not have to be repeated at every call.
    with slim.arg_scope([slim.conv2d, slim.fully_connected],
                        weights_regularizer=slim.l2_regularizer(weight_decay)):
        with slim.arg_scope(
                [slim.conv2d],
                weights_initializer=trunc_normal(stddev),  # weight initializer
                activation_fn=tf.nn.relu,  # activation function
                normalizer_fn=slim.batch_norm,  # normalizer
                normalizer_params=batch_norm_params) as sc:  # normalizer arguments
            return sc
```

```python
# An Inception Module usually has 4 branches:
# branch 1 is a 1x1 convolution; branch 2 is a 1x1 convolution followed by
# factorized 1xn and nx1 convolutions; branch 3 is similar to branch 2;
# branch 4 uses max pooling or average pooling.
# These 4 levels of feature abstraction and transformation selectively keep
# higher-order features at different levels, enriching the network's
# expressive power.
# inputs is the tensor of input images; scope is the variable scope (or its
# name) under which the network is built.
def inception_v3_base(inputs, scope=None):
    end_points = {}  # stores key nodes for later use
    with tf.variable_scope(scope, 'InceptionV3', [inputs]):
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding='VALID'):
            # 299 x 299 x 3
            net = slim.conv2d(inputs, 32, [3, 3], stride=2, scope='Conv2d_1a_3x3')
            # 149 x 149 x 32
            net = slim.conv2d(net, 32, [3, 3], scope='Conv2d_2a_3x3')
            # 147 x 147 x 32
            net = slim.conv2d(net, 64, [3, 3], padding='SAME', scope='Conv2d_2b_3x3')
            # 147 x 147 x 64
            net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_3a_3x3')
            # 73 x 73 x 64
            net = slim.conv2d(net, 80, [1, 1], scope='Conv2d_3b_1x1')
            # 73 x 73 x 80.
            net = slim.conv2d(net, 192, [3, 3], scope='Conv2d_4a_3x3')
            # 71 x 71 x 192.
            net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_5a_3x3')
            # 35 x 35 x 192.

        # Inception blocks
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1, padding='SAME'):
            # mixed: 35 x 35 x 256.
            # (the 4 branches output 64 + 64 + 96 + 32 = 256 channels)
            with tf.variable_scope('Mixed_5b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope='Conv2d_0b_1x1')
                # Concatenate along the output-channel axis.
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_1: 35 x 35 x 288.
            with tf.variable_scope('Mixed_5c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0b_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv_1_0c_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_2: 35 x 35 x 288.
            with tf.variable_scope('Mixed_5d'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_3: 17 x 17 x 768. (384 + 96 + 288 = 768)
            with tf.variable_scope('Mixed_6a'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 384, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], scope='Conv2d_0b_3x3')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',
                                               scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)

            # mixed4: 17 x 17 x 768.
            # The chained 1x7 and 7x1 convolutions below act like one 7x7
            # convolution, with far fewer parameters and less overfitting,
            # plus one extra activation for more nonlinear transformation.
            with tf.variable_scope('Mixed_6b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 128, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 128, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_5: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_6: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6d'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_7: 17 x 17 x 768.
            with tf.variable_scope('Mixed_6e'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 192, [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 192, [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            # Saved so the auxiliary classifier can branch off from here.
            end_points['Mixed_6e'] = net

            # mixed_8: 8 x 8 x 1280.
            with tf.variable_scope('Mixed_7a'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_0 = slim.conv2d(branch_0, 320, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope='Conv2d_0c_7x1')
                    branch_1 = slim.conv2d(branch_1, 192, [3, 3], stride=2,
                                           padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',
                                               scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)

            # mixed_9: 8 x 8 x 2048.
            with tf.variable_scope('Mixed_7b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope='Conv2d_0b_3x1')], 3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(
                        branch_2, 384, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope='Conv2d_0d_3x1')], 3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(
                        branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # mixed_10: 8 x 8 x 2048.
            with tf.variable_scope('Mixed_7c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope='Conv2d_0c_3x1')], 3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(
                        branch_2, 384, [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope='Conv2d_0d_3x1')], 3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(
                        branch_3, 192, [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

    return net, end_points
```

```python
def inception_v3(inputs,
                 num_classes=1000,  # number of classes to predict
                 is_training=True,  # whether the graph is built for training
                 dropout_keep_prob=0.8,  # fraction of units Dropout keeps in training
                 prediction_fn=slim.softmax,  # turns logits into predictions
                 spatial_squeeze=True,  # squeeze out dimensions of size 1
                 reuse=None,  # whether to reuse the network and its variables
                 scope='InceptionV3'):  # name of the variable scope
    with tf.variable_scope(scope, 'InceptionV3', [inputs, num_classes],
                           reuse=reuse) as scope:
        with slim.arg_scope([slim.batch_norm, slim.dropout],
                            is_training=is_training):
            net, end_points = inception_v3_base(inputs, scope=scope)

            # Auxiliary Head logits
            with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                                stride=1, padding='SAME'):
                aux_logits = end_points['Mixed_6e']
                # The auxiliary logits act as an auxiliary classifier, which
                # helps the final prediction considerably.
                with tf.variable_scope('AuxLogits'):
                    # Add a 5x5 average pooling after Mixed_6e. The size goes
                    # from 17x17x768 to 5x5x768: (17 - 5) / 3 + 1 = 5.
                    aux_logits = slim.avg_pool2d(
                        aux_logits, [5, 5], stride=3, padding='VALID',
                        scope='AvgPool_1a_5x5')
                    aux_logits = slim.conv2d(aux_logits, 128, [1, 1],
                                             scope='Conv2d_1b_1x1')
                    # Shape of feature map before the final layer.
                    aux_logits = slim.conv2d(
                        aux_logits, 768, [5, 5],
                        # truncated normal initializer with stddev 0.01
                        weights_initializer=trunc_normal(0.01),
                        padding='VALID', scope='Conv2d_2a_5x5')
                    aux_logits = slim.conv2d(
                        aux_logits, num_classes, [1, 1], activation_fn=None,
                        normalizer_fn=None, weights_initializer=trunc_normal(0.001),
                        scope='Conv2d_2b_1x1')
                    if spatial_squeeze:
                        aux_logits = tf.squeeze(aux_logits, [1, 2],
                                                name='SpatialSqueeze')
                    end_points['AuxLogits'] = aux_logits

            # Main classification head.
            with tf.variable_scope('Logits'):
                # Apply an 8x8 global average pooling directly to the output
                # of Mixed_7c, the last convolutional block.
                net = slim.avg_pool2d(net, [8, 8], padding='VALID',
                                      scope='AvgPool_1a_8x8')
                # 1 x 1 x 2048
                net = slim.dropout(net, keep_prob=dropout_keep_prob,
                                   scope='Dropout_1b')
                end_points['PreLogits'] = net
                # 2048
                logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                                     normalizer_fn=None, scope='Conv2d_1c_1x1')
                if spatial_squeeze:
                    # Remove the dimensions of size 1 from the output tensor.
                    logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')
                # 1000
            end_points['Logits'] = logits
            end_points['Predictions'] = prediction_fn(logits, scope='Predictions')
    return logits, end_points
```
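The shape comments in the listing can be double-checked with the usual output-size rules for 'VALID' and 'SAME' padding. The sketch below is plain Python with a hypothetical helper name. The kernel sizes, strides, and padding modes are copied from the stem, the two reduction blocks (Mixed_6a and Mixed_7a), and the auxiliary head above.

```python
def conv_output_size(size, kernel, stride=1, padding='VALID'):
    """Spatial output size of a convolution or pooling layer."""
    if padding == 'SAME':
        return -(-size // stride)             # ceil(size / stride)
    if padding == 'VALID':
        return (size - kernel) // stride + 1
    raise ValueError('unknown padding: %r' % padding)


# (kernel, stride, padding) for each stem layer, in order.
stem = [
    (3, 2, 'VALID'),  # Conv2d_1a_3x3
    (3, 1, 'VALID'),  # Conv2d_2a_3x3
    (3, 1, 'SAME'),   # Conv2d_2b_3x3
    (3, 2, 'VALID'),  # MaxPool_3a_3x3
    (1, 1, 'VALID'),  # Conv2d_3b_1x1
    (3, 1, 'VALID'),  # Conv2d_4a_3x3
    (3, 2, 'VALID'),  # MaxPool_5a_3x3
]

size = 299
stem_sizes = []
for kernel, stride, padding in stem:
    size = conv_output_size(size, kernel, stride, padding)
    stem_sizes.append(size)

after_mixed_6a = conv_output_size(35, 3, 2, 'VALID')   # 35 -> 17
after_mixed_7a = conv_output_size(17, 3, 2, 'VALID')   # 17 -> 8
aux_pooled = conv_output_size(17, 5, 3, 'VALID')       # AvgPool_1a_5x5
aux_final = conv_output_size(aux_pooled, 5, 1, 'VALID')  # Conv2d_2a_5x5
print(stem_sizes, after_mixed_6a, after_mixed_7a, aux_pooled, aux_final)
```

The computed sizes match the comments in the listing: 299 shrinks to 35 through the stem, then to 17 and 8 in the reduction blocks, and the auxiliary head ends at 1 x 1.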

```python
from datetime import datetime
import math
import time


def time_tensorflow_run(session, target, info_string):
    # num_batches is a module-level variable defined below, before the call.
    num_steps_burn_in = 10  # warm-up steps excluded from the statistics
    total_duration = 0.0
    total_duration_squared = 0.0
    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mn = total_duration / num_batches  # mean time per batch
    vr = total_duration_squared / num_batches - mn * mn  # variance
    sd = math.sqrt(max(vr, 0.0))  # clamp tiny negative rounding errors
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batches, mn, sd))


batch_size = 32
height, width = 299, 299
# Generate random image data as the input.
inputs = tf.random_uniform((batch_size, height, width, 3))
with slim.arg_scope(inception_v3_arg_scope()):
    logits, end_points = inception_v3(inputs, is_training=False)

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)
num_batches = 100
time_tensorflow_run(sess, logits, "Forward")
```

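With a GTX 1080, CUDA 8, and cuDNN 5.1, predicting one batch takes only 0.145 s.

Inception V3 has only about 25 million parameters and needs only about 5 billion floating-point operations.

This modest computational cost lets Inception V3 be deployed on ordinary servers to provide fast-responding services, or even be ported to mobile phones for real-time image recognition.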

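Summary of network design techniques:

Inception Net debuted at ILSVRC 2014 and won. Its core is the Inception Module, which uses 1x1 convolutions and multi-scale convolutions for efficient feature extraction. The network is 22 layers deep, with only about 5 million parameters and about 1.5 billion floating-point operations. It uses global average pooling to prevent overfitting, later versions introduce BN to speed up training, and Inception V3 improves efficiency by factorizing large convolutions.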
