博主介绍:✌全网粉丝50W+,前互联网大厂软件研发、集结硕博英豪成立软件开发工作室,专注于计算机相关专业项目实战6年之久,累计开发项目作品上万套。凭借丰富的经验与专业实力,已帮助成千上万的学生顺利毕业,选择我们,就是选择放心、选择安心毕业✌

> 🍅想要获取完整文章或者源码,或者代做,拉到文章底部即可与我联系了。🍅

2、大数据、计算机专业选题(Python/Java/大数据/深度学习/机器学习)(建议收藏)✅

1、项目介绍

- 技术栈:Python语言、YOLOv8、ByteTrack算法、YOLOv8测速+测距+车辆计数、目标计数+目标测速

- 这个项目的研究背景:当前交通监控、公共区域安全管理等场景中,传统人工监控方式存在效率低、实时性差的问题,难以精准完成目标(如车辆、行人)的检测、跟踪及测速测距;同时,传统系统对遮挡目标的识别率低,易出现漏检和轨迹碎片化,导致流量统计、速度监测数据不准确,无法为交通调度、安全管控提供可靠数据支撑,这些痛点制约了监控场景的智能化升级。

- 这个项目的研究意义:技术层面,通过YOLOv8提升目标检测精度,结合ByteTrack算法解决遮挡目标漏检问题,实现高效多目标跟踪;功能层面,集成测速、测距、车辆计数功能,满足实时监控数据需求;行业层面,为交通监控、智慧园区等领域提供智能化分析工具,助力提升管理效率与决策准确性,推动监控场景从“人工值守”向“智能分析”转型,具备实际应用价值。

2、项目界面

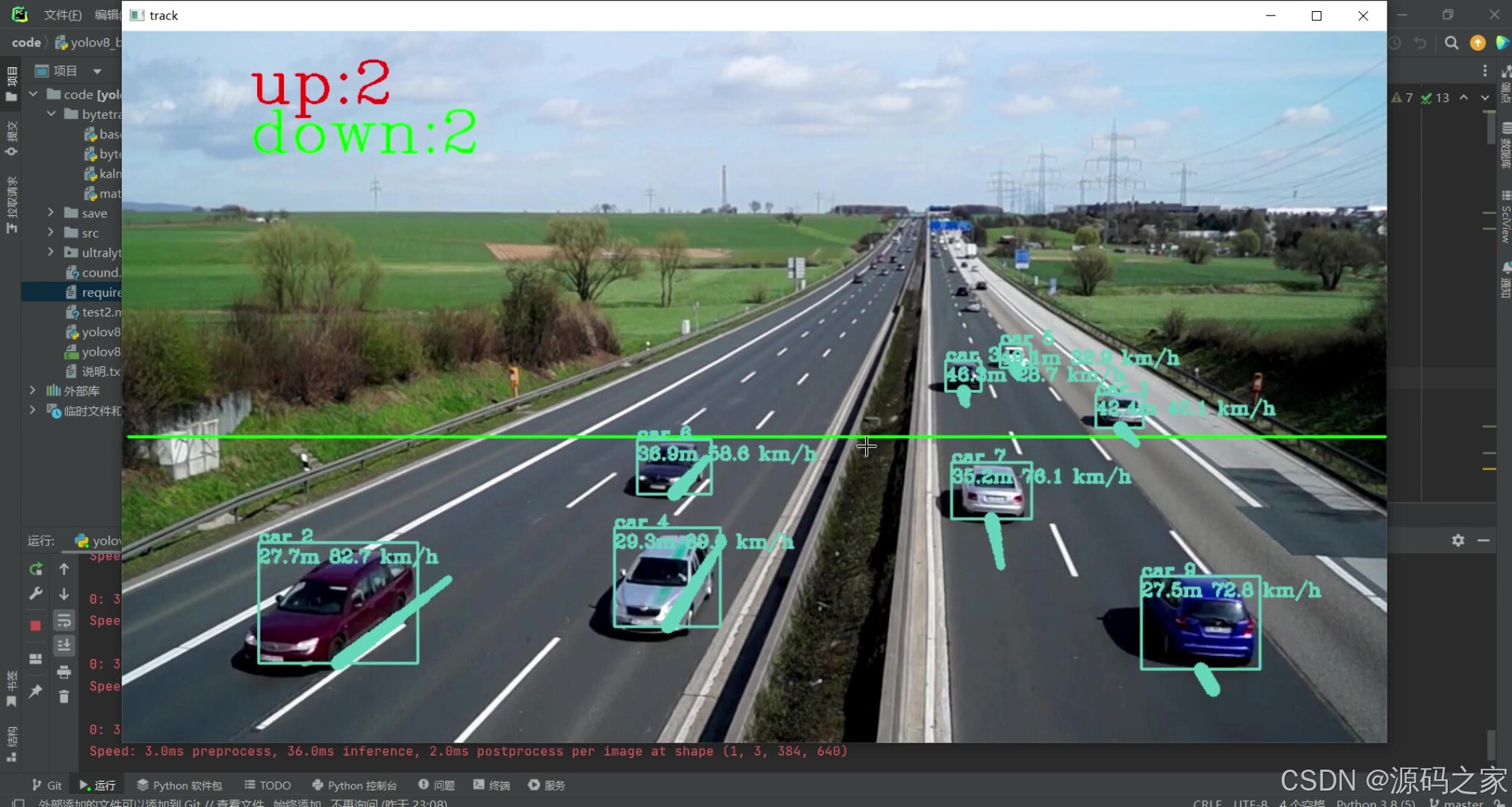

(1)测速测距车辆计数界面1

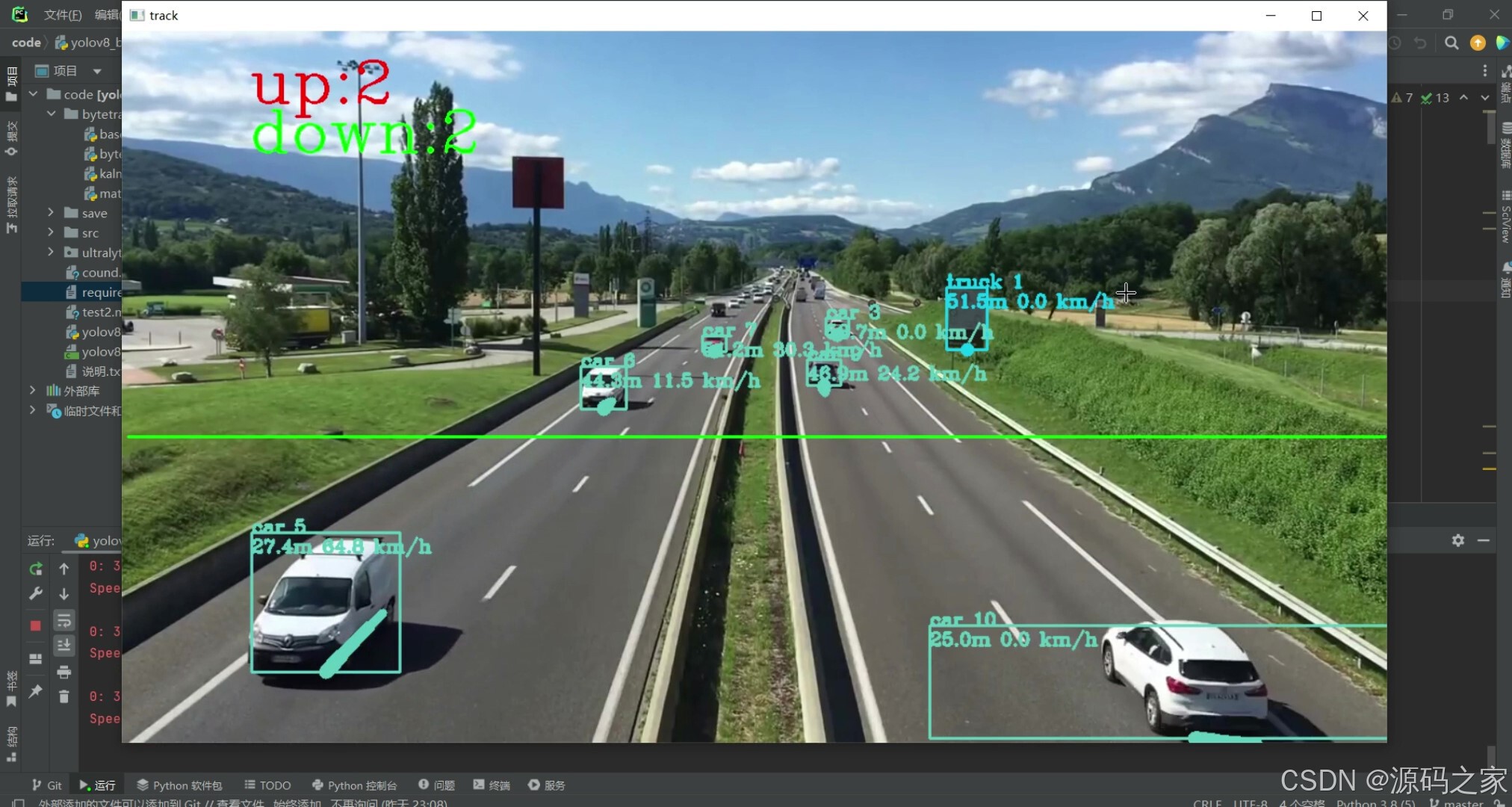

(2)测速测距车辆计数界面2

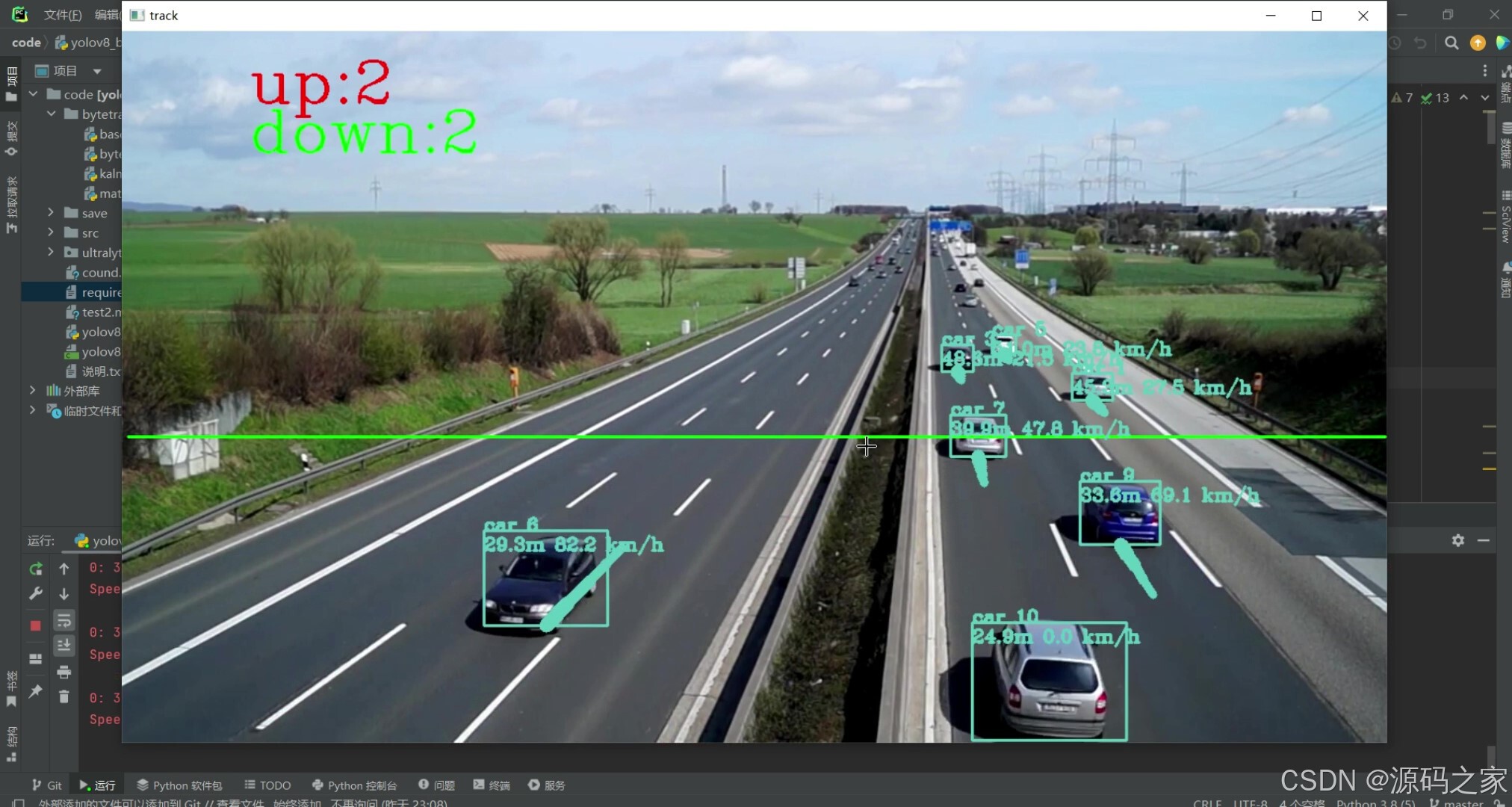

(3)测速测距车辆计数界面3

3、项目说明

本项目是基于Python语言开发的目标检测与跟踪分析系统,融合YOLOv8目标检测算法与ByteTrack多目标跟踪算法,核心实现车辆等目标的测速、测距及计数功能,旨在解决传统监控场景中检测精度低、数据统计不准确的问题。项目首先通过argparse模块解析命令行参数,配置检测模型权重路径、输入输出视频路径、置信度阈值、相机参数(高度、角度、内参)等,为后续功能提供基础配置;接着将相机内参转换为3×3numpy数组,用于后续深度计算。在目标检测环节,加载ultralytics的YOLO模型,对视频每一帧图像进行检测,获取目标检测框、置信度及类别信息,并将结果传递至跟踪模块。跟踪模块初始化BYTETracker对象,基于卡尔曼滤波预测目标下一帧位置,以IoU为相似度指标,通过匈牙利算法完成检测框与轨迹的匹配,同时关联低分检测框以恢复遮挡目标,避免漏检与轨迹碎片化,为每个目标分配唯一跟踪ID。系统还实现轨迹绘制与流量统计功能:为每个目标维护轨迹队列,记录运动轨迹并在图像上绘制;通过设置虚拟计数线,判断目标是否穿过线条,统计进入与离开的目标数量。在距离与速度计算上,结合相机模型、目标图像位置及相机参数计算目标深度,利用连续两帧的深度变化与时间间隔,将速度换算为公里/小时单位。图像可视化环节,在帧图像上叠加检测框、类别、跟踪ID、速度及计数结果,若开启可视化参数则实时展示结果。视频处理流程中,通过cv2.VideoCapture读取输入视频,逐帧调用检测处理方法,最后将处理后的帧写入输出视频文件。整体而言,系统整合检测、跟踪、计算、可视化全流程,适用于交通监控、人群分析等场景,为相关领域提供精准的实时数据支撑,具备较强的智能化与实用性。

4、核心代码

import cv2

import torch

from ultralytics import YOLO

import os

import argparse

from bytetrack_tracker.byte_tracker import BYTETracker

from collections import deque

import numpy as np

import math

parser = argparse.ArgumentParser()

# 检测参数

parser.add_argument('--weights', default=r"yolov8m.pt", type=str, help='weights path')

# 设置检测的视频路径

parser.add_argument('--source', default=r"cound.mp4", type=str, help='img or video(.mp4)path')

# parser.add_argument('--source', default=r"test2.mp4", type=str, help='img or video(.mp4)path')

parser.add_argument('--save', default=r"./save", type=str, help='save img or video path')

parser.add_argument('--vis', default=True, action='store_true', help='visualize image')

parser.add_argument('--conf_thre', type=float, default=0.5, help='conf_thre')

parser.add_argument('--iou_thre', type=float, default=0.5, help='iou_thre')

# 跟踪参数

parser.add_argument('--track_thresh', type=float, default=0.5, help='track_thresh')

parser.add_argument('--track_buffer', type=int, default=70, help='track_buffer')

parser.add_argument('--match_thresh', type=float, default=0.8, help='match_thresh')

# 添加高度(H)、角度(alpha)和相机内参(calib)参数

parser.add_argument('--H', type=float, default=6.5, help='Height of the camera from the ground')

parser.add_argument('--alpha', type=int, default=8, help='Angle of the camera view')

parser.add_argument('--calib', type=str, default='2900,0.0,640; 0.0,2900,360; 0.0,0.0,1.0',

help='Camera intrinsic parameters')

# 解析参数

opt = parser.parse_args()

# 将字符串形式的 calib 转换为 numpy 数组

calib_values = [float(x) for x in opt.calib.replace(';', ',').split(',')]

opt.calib = np.array(calib_values).reshape(3, 3)

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

class Detector(object):

def __init__(self, weight_path, conf_threshold=0.5, iou_threshold=0.5):

self.device = device

self.model = YOLO(weight_path).to(self.device)

self.conf_threshold = conf_threshold

self.iou_threshold = iou_threshold

self.tracker = BYTETracker(track_thresh=opt.track_thresh, track_buffer=opt.track_buffer,

match_thresh=opt.match_thresh)

self.pointlist = [(6, 410), (1278, 410)]

self.trajectories = {}

self.max_trajectory_length = 5

self.id_in = 0

self.id_in_list = []

self.id_out = 0

self.id_out_list = []

@staticmethod

def get_color(idx):

idx = idx * 3

color = ((37 * idx) % 255, (17 * idx) % 255, (29 * idx) % 255)

return color

def draw_measure_line(self, H, calib, u, v, alpha):

alpha = alpha # 角度a

# 相机焦距

fy = calib[1][1]

# 相机光心

u0 = calib[0][2]

v0 = calib[1][2]

pi = math.pi

Q_pie = [u - u0, v - v0]

gamma_pie = math.atan(Q_pie[1] / fy) * 180 / np.pi

beta_pie = alpha + gamma_pie

if beta_pie == 0:

beta_pie = 1e-2

z_in_cam = (H / math.sin(beta_pie / 180 * pi)) * math.cos(gamma_pie * pi / 180)

return z_in_cam

def detect_image(self, img_bgr):

results = self.model(img_bgr, conf=self.conf_threshold, iou=self.iou_threshold)

# Extracting the necessary details from the results

boxes = results[0].boxes.xyxy.cpu().numpy() # xyxy format

confidences = results[0].boxes.conf

class_preds = results[0].boxes.cls

confidences_expanded = confidences.unsqueeze(1)

class_preds_expanded = class_preds.unsqueeze(1)

boxes_tensor = torch.from_numpy(boxes).to(class_preds_expanded.device)

result = torch.cat((boxes_tensor, confidences_expanded, class_preds_expanded), dim=1)

online_targets = self.tracker.update(result.cpu(), [results[0].orig_shape[0], results[0].orig_shape[1]])

cv2.line(img_bgr, self.pointlist[0], self.pointlist[1], (0, 255, 0), 2)

for t in online_targets:

speed_km_per_h = 0

tlwh = t.tlwh

xmin, ymin, xmax, ymax = tlwh[0], tlwh[1], tlwh[0] + tlwh[2], tlwh[1] + tlwh[3]

tid = t.track_id

cls = t.cls.item()

class_pred = int(cls)

color = self.get_color(class_pred + 2)

bottom_center = (int(tlwh[0] + tlwh[2] / 2), int(tlwh[1] + tlwh[3]))

bbox_label = results[0].names[class_pred]

cv2.rectangle(img_bgr, (int(xmin), int(ymin)), (int(xmax), int(ymax)), color, 2)

zc_cam_other = self.draw_measure_line(opt.H, opt.calib, int(tlwh[0] + tlwh[2] / 2), int(tlwh[1] + tlwh[3]),

opt.alpha)

if tid not in self.trajectories:

self.trajectories[tid] = deque(maxlen=self.max_trajectory_length)

trajectory_point = {

'bottom_center': bottom_center,

'zc_cam_other': zc_cam_other

}

self.trajectories[tid].appendleft(trajectory_point)

# 截断轨迹长度

if len(self.trajectories[tid]) > self.max_trajectory_length:

self.trajectories[tid] = self.trajectories[tid][:self.max_trajectory_length]

for i in range(1, len(self.trajectories[tid])):

if self.trajectories[tid][i - 1] is None or self.trajectories[tid][i] is None:

continue

thickness = int(np.sqrt(64 / float(i + 1)) * 2.5)

cv2.line(img_bgr, self.trajectories[tid][i - 1]['bottom_center'],

self.trajectories[tid][i]['bottom_center'], color,

thickness)

# 通过阈值判断是竖线还是横线,若x2和x1之间的差值小于20 判断为竖线 否则为横线

"""

例如:如下垂线上下两点的x保持不变,y存在变化 所以垂线的x2-x1=0

*

*

*

*

如下横线左右两点的y保持不变,x存在变化 所以横线的y2-y1=0

***********

"""

if abs(self.pointlist[1][0] - self.pointlist[0][0]) < 20:

# 通过判断同一个目标上下两帧是否过线 来进行计数

# self.trajectories[tid][i - 1][0]代表上一帧 self.trajectories[tid][i][0]代表当前帧

if ((self.trajectories[tid][i - 1]['bottom_center'][0] <= self.pointlist[0][0]) and

(self.trajectories[tid][i]['bottom_center'][0] > self.pointlist[0][0])) and \

((self.trajectories[tid][i]['bottom_center'][1] > self.pointlist[1][

1]) and # 设置目标的撞线范围 不得超线

(self.trajectories[tid][i]['bottom_center'][1] < self.pointlist[0][1])):

# 如果目标ID已经计数过,则忽略

if tid in self.id_in_list:

continue

# 否则,增加进入计数,并将ID添加到已计数列表中

else:

self.id_in += 1

self.id_in_list.append(tid)

if ((self.trajectories[tid][i - 1]['bottom_center'][0] >= self.pointlist[0][0]) and

(self.trajectories[tid][i]['bottom_center'][0] < self.pointlist[0][0])) and \

((self.trajectories[tid][i]['bottom_center'][1] > self.pointlist[1][1]) and

(self.trajectories[tid][i]['bottom_center'][1] < self.pointlist[0][1])):

if tid in self.id_out_list:

continue

else:

self.id_out += 1

self.id_out_list.append(tid)

else:

if ((self.trajectories[tid][i - 1]['bottom_center'][1] >= self.pointlist[0][1]) and

(self.trajectories[tid][i]['bottom_center'][1] < self.pointlist[0][1])) and \

((self.trajectories[tid][i]['bottom_center'][0] > self.pointlist[0][0]) and

(self.trajectories[tid][i]['bottom_center'][0] < self.pointlist[1][0])):

if tid in self.id_in_list:

continue

else:

self.id_in += 1

self.id_in_list.append(tid)

if ((self.trajectories[tid][i - 1]['bottom_center'][1] <= self.pointlist[0][1]) and

(self.trajectories[tid][i]['bottom_center'][1] > self.pointlist[0][1])) and \

((self.trajectories[tid][i]['bottom_center'][0] > self.pointlist[0][0]) and

(self.trajectories[tid][i]['bottom_center'][0] < self.pointlist[1][0])):

if tid in self.id_out_list:

continue

else:

self.id_out += 1

self.id_out_list.append(tid)

time_interval = 1 / fps

speed_m_per_s = abs(self.trajectories[tid][i]['zc_cam_other'] - self.trajectories[tid][i - 1][

'zc_cam_other']) / time_interval

speed_km_per_h = speed_m_per_s * 3.6 # 转换为公里/小时

# 显示类名和跟踪ID

cv2.putText(img_bgr, f"{bbox_label} {int(tid)}",

(int(tlwh[0]), int(tlwh[1])), cv2.FONT_HERSHEY_COMPLEX, 0.6, color, 2)

# 显示速度,纵坐标比类名和跟踪ID的位置稍低一些

cv2.putText(img_bgr, f"{zc_cam_other:.1f}m {speed_km_per_h:.1f} km/h",

(int(tlwh[0]), int(tlwh[1]) + 20), cv2.FONT_HERSHEY_COMPLEX, 0.6, color, 2)

cv2.putText(img_bgr, str(f"down:{self.id_in}"),

(int(img_bgr.shape[1] * 0.1), int(img_bgr.shape[0] * 0.17)), cv2.FONT_HERSHEY_COMPLEX,

2,

(0, 255, 0), 2)

cv2.putText(img_bgr, str(f"up:{self.id_out}"),

(int(img_bgr.shape[1] * 0.1), int(img_bgr.shape[0] * 0.1)), cv2.FONT_HERSHEY_COMPLEX,

2,

(0, 0, 255), 2)

return img_bgr

# Example usage

if __name__ == '__main__':

model = Detector(weight_path=opt.weights, conf_threshold=opt.conf_thre, iou_threshold=opt.iou_thre)

capture = cv2.VideoCapture(opt.source)

fps = capture.get(cv2.CAP_PROP_FPS)

size = (int(capture.get(cv2.CAP_PROP_FRAME_WIDTH)),

int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT)))

fourcc = cv2.VideoWriter_fourcc('m', 'p', '4', 'v')

outVideo = cv2.VideoWriter(os.path.join(opt.save, os.path.basename(opt.source).split('.')[-2] + "_out.mp4"), fourcc,

fps, size)

while True:

ret, frame = capture.read()

if not ret:

break

img_vis = model.detect_image(frame)

outVideo.write(img_vis)

img_vis = cv2.resize(img_vis, None, fx=1, fy=1, interpolation=cv2.INTER_NEAREST)

cv2.imshow('track', img_vis)

cv2.waitKey(30)

capture.release()

outVideo.release()

🍅✌感兴趣的可以先收藏起来,点赞关注不迷路,想学习更多项目可以查看主页,大家在毕设选题,项目编程以及论文编写等相关问题都可以给我留言咨询,希望可以帮助同学们顺利毕业!🍅✌

5、源码获取方式

🍅由于篇幅限制,获取完整文章或源码、代做项目的,拉到文章底部即可看到个人联系方式。🍅

点赞、收藏、关注,不迷路,下方查看👇🏻获取联系方式👇🏻

✅&spm=1001.2101.3001.5002&articleId=152281749&d=1&t=3&u=2e7e3e6f22af42f9ab300d761b5d74c9)

592

592

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?