Sources

[1] General introduction to floating point error: https://www.cs.ovgu.de/~elkner/ASM/sparc-6.html#:~:text=Since%20fp%20representations%20always%20include,big%20can%20relative%20error%20get?

[2] IEEE floating point representation (for single precision): https://en.wikipedia.org/wiki/Single-precision_floating-point_format

[3] Gemini, with confirmed sources but indirect quote

[4] Simple examples: https://en.wikipedia.org/wiki/Unit_in_the_last_place#:~:text=//%20%CF%80%20with%2020%20decimal,:%200x1.0p%2D51)

[5] Reference sheet: https://www.emmtrix.com/wiki/ULP_Difference_of_Float_Numbers#:~:text=The%20Unit%20in%20the%20Last,the%20nearest%20floating%2Dpoint%20number.

What

Unit in the Last Place, or ULP for short, is a common measure of floating point representation error in relative terms.

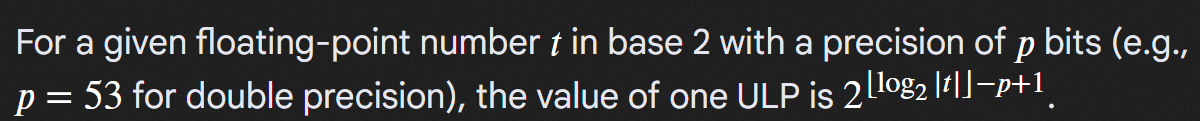

The ULP itself is, for a given exponent, quite literally the unit of the last representable digit, or mathematically [1]:

B^(-(p-1)) * B^e,

where e is the exponent of the particular number being represented, B is the base, and p is the precision, i.e. the number of base-B digits in the significand of the represented value.

In most cases the floating point representation is tied directly to the binary system used in digital logic, so B = 2 and the above definition simplifies to [3]:

ULP(t) = 2^(-(p-1)) * 2^e, with e = floor(log2(|t|)),

where t is the numerical value of the representation.
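As a quick sanity check of the binary form, here is a minimal Python sketch, assuming IEEE double precision (p = 53) and a normal, nonzero, finite input. The helper name `ulp_binary` is made up for illustration; `math.ulp` (Python 3.9+) is used only as a cross-check.

```python
import math

P_DOUBLE = 53  # assumed precision: 52 stored bits + 1 implied bit


def ulp_binary(t: float, p: int = P_DOUBLE) -> float:
    """ULP of a normal, nonzero, finite t, computed as 2^(e - (p - 1))."""
    # math.frexp returns (m, exp) with t = m * 2**exp and 0.5 <= |m| < 1,
    # so the exponent under the 1.M normalization is exp - 1.
    _, exp = math.frexp(t)
    e = exp - 1
    return math.ldexp(1.0, e - (p - 1))


if __name__ == "__main__":
    for t in (1.0, 3.0, 1e10, 0.1):
        # The two columns should agree for normal doubles.
        print(t, ulp_binary(t), math.ulp(t))
```

Subnormals, zero, infinities and NaN are deliberately left out of this sketch; they need separate handling.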

Mantissa vs. Significand

Note that for double precision the S-E-M bit widths are 1-11-52. This raises a point of terminology: although mantissa is often used interchangeably with significand, here we distinguish the two:

significand = 1.M [2]

That is, the mantissa M is the fractional part of the significand. This tracks with the IEEE standard, since normal values have an implied leading 1 in the significand and the bit pattern only records the mantissa. For double precision, the 52 stored mantissa bits plus the implied bit give a precision of p = 53.
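To make the S-E-M split concrete, the following Python sketch pulls the three fields out of a double and rebuilds the significand as 1.M. It assumes the 1-11-52 layout described above and only handles normal values; the function names are illustrative, not from any of the sources.

```python
import struct


def decode_double(x: float):
    """Return the raw (sign, exponent, mantissa) bit fields of a double."""
    bits = struct.unpack("<Q", struct.pack("<d", x))[0]
    sign = bits >> 63                  # 1 bit
    exponent = (bits >> 52) & 0x7FF    # 11 bits, still biased by 1023
    mantissa = bits & ((1 << 52) - 1)  # 52 stored fraction bits
    return sign, exponent, mantissa


def significand(x: float) -> float:
    """Significand 1.M of a normal double (implied leading 1 restored)."""
    _, exponent, mantissa = decode_double(x)
    if exponent in (0, 0x7FF):
        raise ValueError("only normal values carry an implied leading 1")
    return 1 + mantissa / 2 ** 52


if __name__ == "__main__":
    print(decode_double(1.5))  # sign 0, biased exponent 1023, top mantissa bit set
    print(significand(6.0))    # 6.0 = 1.5 * 2^2, so the significand is 1.5
```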

It's worth pointing out that mantissa historically denotes the fractional part of a logarithm [3], so the modern computer science usage is really a misnomer: the IEEE mantissa represents the "negative" part (the digits below the leading one) relative to the current E. When describing the precision of a floating point number, use only significand.

Why

As mentioned, we use ULP as a measure for relative error for fp[1]:

Every fp representation has an error associated with it. It is attempting to represent some true value, z, with an fp representation, f. The absolute error then is:

| f - z |

A measure of error that does not depend on the size of the number being represented is called relative error. Relative error is defined as:

| f - z | / z
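A small worked example may help here. The sketch below takes z = 1/10 as the true value and the Python float `0.1` as its fp representation f, and uses `fractions.Fraction` so that both errors are computed exactly. The choice of 1/10 is mine, purely for illustration.

```python
from fractions import Fraction


def abs_error(f: float, z: Fraction) -> Fraction:
    """Exact absolute error | f - z | of a float f against a rational z."""
    return abs(Fraction(f) - z)  # Fraction(f) converts the double exactly


def rel_error(f: float, z: Fraction) -> Fraction:
    """Exact relative error | f - z | / | z |, for nonzero z."""
    return abs_error(f, z) / abs(z)


if __name__ == "__main__":
    z = Fraction(1, 10)  # true value, not representable in binary fp
    f = 0.1              # nearest double to 1/10
    print(float(abs_error(f, z)))
    print(float(rel_error(f, z)))
```

For a correctly rounded normal double, the relative error stays at or below 2^-53, which the example satisfies.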

In practice we can't compute with true real numbers, so z above is really a "golden" value held in a higher precision. Since in digital logic it only makes sense to measure a low precision fp against a higher one, I will use ULP loss interchangeably with ULP error, and I propose a practical ULP loss to be:

| upgrade_cast(f) - z | / ULP(z)

and we follow the definition to compute ULP loss as:
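One possible way to sketch this in Python, under assumptions that are mine rather than from the sources: the low precision format is float32 (p = 24), the golden value z is a double, `upgrade_cast` is the exact float32-to-double widening, and ULP(z) is taken as the float32 ULP at the magnitude of z. All helper names are hypothetical.

```python
import math
import struct

P32 = 24  # assumed low precision: float32, 23 stored bits + 1 implied bit


def to_float32(x: float) -> float:
    """Round a double to float32, then widen it back to a double.

    The widening step plays the role of upgrade_cast(f) and is exact.
    """
    return struct.unpack("<f", struct.pack("<f", x))[0]


def ulp32(z: float) -> float:
    """float32 ULP at the magnitude of z (normal float32 range only)."""
    _, exp = math.frexp(z)  # z = m * 2**exp with 0.5 <= |m| < 1
    return math.ldexp(1.0, (exp - 1) - (P32 - 1))


def ulp_loss(f: float, z: float) -> float:
    """| upgrade_cast(f) - z | / ULP(z), with f already widened to double."""
    return abs(f - z) / ulp32(z)


if __name__ == "__main__":
    z = math.pi        # golden value in double precision
    f = to_float32(z)  # low precision representation
    print(ulp_loss(f, z))
```

With round-to-nearest in `to_float32`, the printed loss should not exceed 0.5; a value exactly one float32 step away from z gives a loss of 1.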

Obviously, for a true real number the ULP can be arbitrarily small, hence the ULP error against real values can be arbitrarily large. At the other end, the most accurate fp representation, with proper rounding, can resolve any value in the representable range to at most 0.5 ULP loss.

When f and z are both fp values, the ULP loss is quantized to integer units. Because we can't possibly specify a rounding rule per datum, a loss of 1 ULP is good enough.
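When both values are doubles, the quantized loss can be counted as the number of representable steps between them. The sketch below uses the common trick of reinterpreting the bit patterns as ordered integers; it is my own illustration of that general idea, not a transcription of the guide in [5], and it does not handle NaN.

```python
import math
import struct


def ordered_bits(x: float) -> int:
    """Map a double to an integer that increases monotonically with x."""
    bits = struct.unpack("<q", struct.pack("<d", x))[0]  # signed 64-bit view
    # Doubles are sign-magnitude: for negative values, negate the magnitude
    # so that integer order matches float order (and -0.0 maps to 0).
    return bits if bits >= 0 else -(bits & 0x7FFFFFFFFFFFFFFF)


def ulp_distance(a: float, b: float) -> int:
    """Number of representable doubles stepped over going from a to b."""
    return abs(ordered_bits(a) - ordered_bits(b))


if __name__ == "__main__":
    print(ulp_distance(1.0, math.nextafter(1.0, 2.0)))  # adjacent doubles
    print(ulp_distance(0.1 + 0.2, 0.3))                 # the classic off-by-one-ULP sum
```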

How

Simple examples are given in [4], and a C implementation guide is given in [5].

Since version 3.9, Python provides this directly as math.ulp: https://docs.python.org/3/library/math.html#math.ulp
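A few calls show how `math.ulp` behaves on Python floats (IEEE doubles on common platforms). The wrapper `show_ulps` is just a hypothetical helper for this note.

```python
import math
import sys


def show_ulps(values):
    """Return (value, ulp) pairs for an iterable of floats."""
    return [(v, math.ulp(v)) for v in values]


if __name__ == "__main__":
    for value, ulp in show_ulps([1.0, -1.0, 1e16, 0.0]):
        print(value, ulp)
    # At 1.0 the ULP equals the machine epsilon.
    print(math.ulp(1.0) == sys.float_info.epsilon)
```

Note that the sign is ignored, and for 0.0 the result is the smallest positive subnormal double rather than zero.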

More

Read [1] for how fp produces error during computations.
