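1 Introduction

A probabilistic neural network (PNN) is a neural network that classifies new samples using Bayes' theorem and the minimum-risk Bayes decision rule. It trains quickly and is not prone to converging to local optima. A conventional PNN has three drawbacks, however. First, it uses the same smoothing factor throughout, which tends to lower the recognition rate and cause misclassification. Second, the smoothing factor strongly affects the classification result and is hard to determine. Third, the number of pattern-layer neurons equals the number of training samples, so a very large training set makes the network structure complex. This post uses the Grey Wolf Optimizer (GWO) to select the PNN's smoothing-factor vector and to optimize the PNN's network structure.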
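To make the role of the smoothing factor concrete, here is a minimal sketch of PNN classification with a Gaussian (Parzen) kernel and one smoothing factor per class. The function name `pnn_predict_sketch`, its arguments, and the per-class layout of `sigma` are assumptions for illustration and are not taken from the original post. Equal class priors and equal misclassification costs are also assumed.

```matlab
function labels = pnn_predict_sketch(Xtrain, ytrain, Xtest, sigma)
% Hypothetical helper (not from the original post): classify each row of
% Xtest with a Gaussian-kernel PNN that uses one smoothing factor per class.
%   Xtrain : n-by-d training samples
%   ytrain : n-by-1 numeric class labels
%   Xtest  : m-by-d samples to classify
%   sigma  : 1-by-K smoothing factors, ordered like unique(ytrain)
classes = unique(ytrain);
K = numel(classes);
m = size(Xtest, 1);
d = size(Xtrain, 2);
scores = zeros(m, K);
for k = 1:K
    % Pattern layer: one unit per training sample of class k.
    Xk = Xtrain(ytrain == classes(k), :);
    for t = 1:m
        diffs = Xk - repmat(Xtest(t, :), size(Xk, 1), 1);
        d2 = sum(diffs.^2, 2);   % squared Euclidean distances
        % Summation layer: mean kernel response, scaled by sigma^d.
        % The factor (2*pi)^(d/2) is shared by all classes and is omitted.
        scores(t, k) = mean(exp(-d2 / (2 * sigma(k)^2))) / sigma(k)^d;
    end
end
% Output layer: pick the class with the largest estimated density.
[~, idx] = max(scores, [], 2);
labels = classes(idx);
end
```

A small `sigma(k)` makes class `k` respond only to samples very close to its training points, while a large value smooths the estimate. This is why the choice of smoothing factors matters so much for accuracy.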

2 Partial Code

    % Grey Wolf Optimizer
    function [Alpha_score,Alpha_pos,Convergence_curve]=GWO(SearchAgents_no,Max_iter,lb,ub,dim,fobj)

    % Initialize alpha, beta, and delta positions and scores
    Alpha_pos=zeros(1,dim);
    Alpha_score=inf; % change this to -inf for maximization problems

    Beta_pos=zeros(1,dim);
    Beta_score=inf; % change this to -inf for maximization problems

    Delta_pos=zeros(1,dim);
    Delta_score=inf; % change this to -inf for maximization problems

    % Initialize the positions of search agents
    Positions=initialization(SearchAgents_no,dim,ub,lb);

    Convergence_curve=zeros(1,Max_iter);

    l=0; % Loop counter

    % Main loop
    while l<Max_iter
        for i=1:size(Positions,1)

            % Return search agents that go beyond the boundaries of the search space
            Flag4ub=Positions(i,:)>ub;
            Flag4lb=Positions(i,:)<lb;
            Positions(i,:)=(Positions(i,:).*(~(Flag4ub+Flag4lb)))+ub.*Flag4ub+lb.*Flag4lb;

            % Calculate objective function for each search agent
            fitness=fobj(Positions(i,:));

            % Update Alpha, Beta, and Delta
            if fitness<Alpha_score
                Alpha_score=fitness; % Update alpha
                Alpha_pos=Positions(i,:);
            end

            if fitness>Alpha_score && fitness<Beta_score
                Beta_score=fitness; % Update beta
                Beta_pos=Positions(i,:);
            end

            if fitness>Alpha_score && fitness>Beta_score && fitness<Delta_score
                Delta_score=fitness; % Update delta
                Delta_pos=Positions(i,:);
            end
        end

        a=2-l*((2)/Max_iter); % a decreases linearly from 2 to 0

        % Update the position of search agents including omegas
        for i=1:size(Positions,1)
            for j=1:size(Positions,2)

                r1=rand(); % r1 is a random number in [0,1]
                r2=rand(); % r2 is a random number in [0,1]

                A1=2*a*r1-a; % Equation (3.3)
                C1=2*r2; % Equation (3.4)

                D_alpha=abs(C1*Alpha_pos(j)-Positions(i,j)); % Equation (3.5)-part 1
                X1=Alpha_pos(j)-A1*D_alpha; % Equation (3.6)-part 1

                r1=rand();
                r2=rand();

                A2=2*a*r1-a; % Equation (3.3)
                C2=2*r2; % Equation (3.4)

                D_beta=abs(C2*Beta_pos(j)-Positions(i,j)); % Equation (3.5)-part 2
                X2=Beta_pos(j)-A2*D_beta; % Equation (3.6)-part 2

                r1=rand();
                r2=rand();

                A3=2*a*r1-a; % Equation (3.3)
                C3=2*r2; % Equation (3.4)

                D_delta=abs(C3*Delta_pos(j)-Positions(i,j)); % Equation (3.5)-part 3
                X3=Delta_pos(j)-A3*D_delta; % Equation (3.6)-part 3

                Positions(i,j)=(X1+X2+X3)/3; % Equation (3.7)

            end
        end

        l=l+1;
        Convergence_curve(l)=Alpha_score;
    end
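The GWO function calls `initialization`, which is not included in this partial listing. A minimal sketch of such a helper might look like the following. It assumes the helper only needs to draw uniformly random positions within `[lb, ub]`, and that the bounds are either scalars or 1-by-`dim` row vectors. The body is an assumption, not the author's code.

```matlab
function Positions = initialization(SearchAgents_no, dim, ub, lb)
% Assumed helper (body not shown in the post): draw each search agent
% uniformly at random inside the box [lb, ub].
%   SearchAgents_no : number of wolves (rows of Positions)
%   dim             : number of decision variables (columns of Positions)
%   ub, lb          : scalar bounds, or 1-by-dim row vectors of bounds
if isscalar(ub)
    ub = repmat(ub, 1, dim);   % same upper bound for every variable
end
if isscalar(lb)
    lb = repmat(lb, 1, dim);   % same lower bound for every variable
end
span = repmat(ub - lb, SearchAgents_no, 1);
Positions = rand(SearchAgents_no, dim) .* span + repmat(lb, SearchAgents_no, 1);
end
```

With this helper on the path, a call such as `[best_score, best_pos, curve] = GWO(30, 200, -10, 10, 5, @(x) sum(x.^2));` would minimize a toy sphere function. For the PNN case, `fobj` would presumably map a candidate smoothing-factor vector to a classification error on held-out data, but the post does not show that objective.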

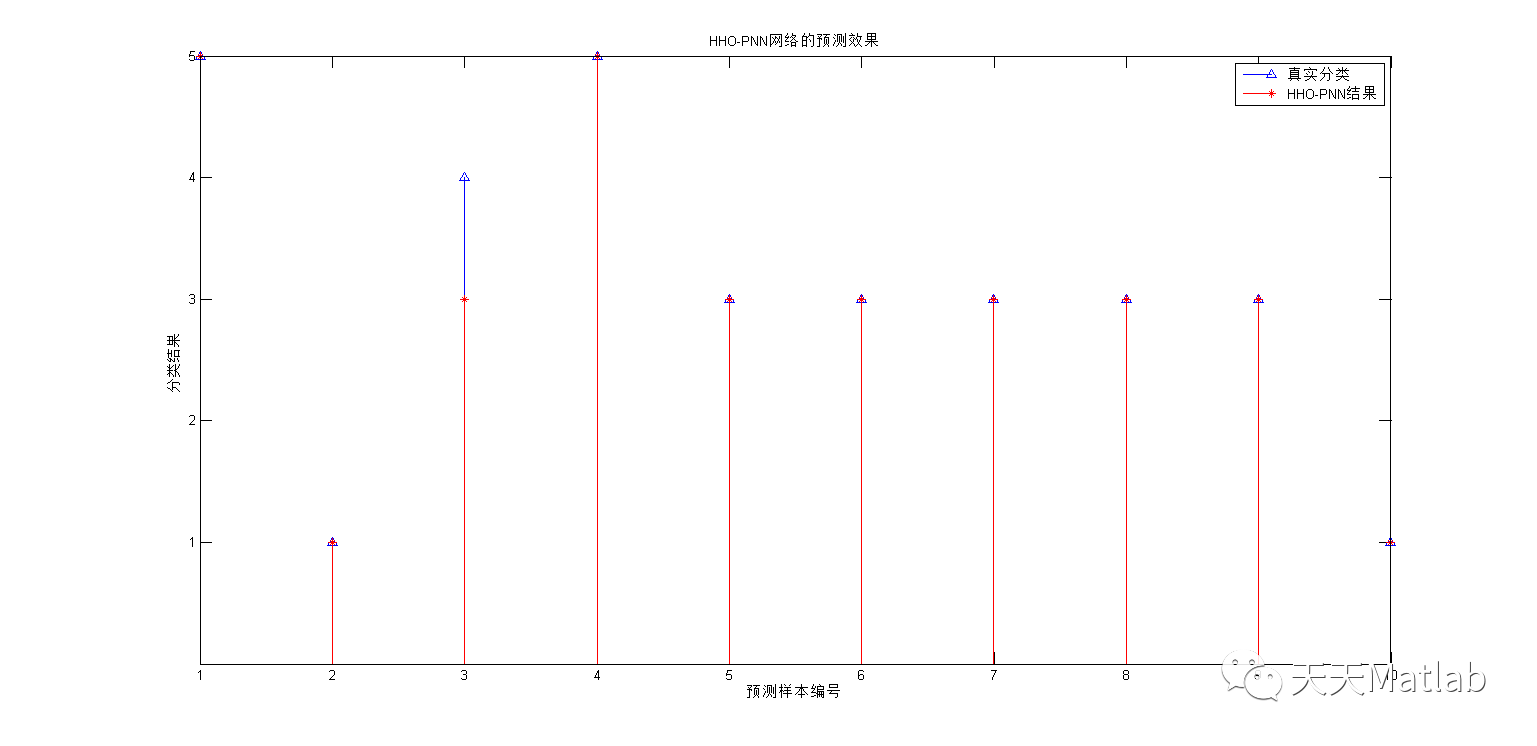

3 Simulation Results



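This post presents a method that uses the Grey Wolf Optimizer (GWO) to optimize a probabilistic neural network (PNN), addressing the difficulty of choosing the smoothing factor and the complexity of the network structure in conventional PNNs. The GWO algorithm adjusts the smoothing factors dynamically, which improves the PNN's accuracy and efficiency on text classification tasks.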
