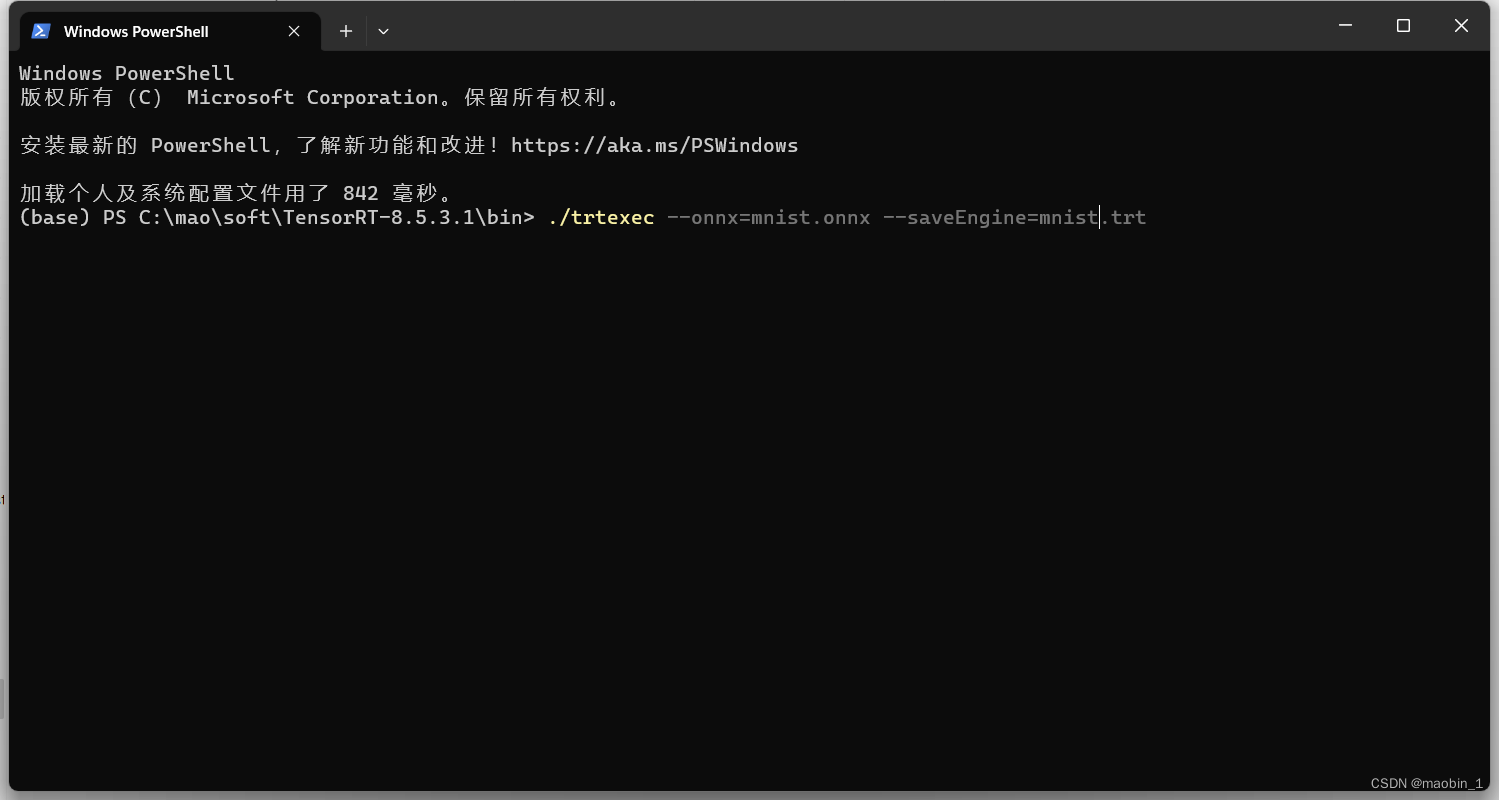

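1. Use the trtexec.exe tool that ships with TensorRT to convert the ONNX model bundled with TensorRT (mnist.onnx) into a TRT engine file. The command is: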

```shell
./trtexec --onnx=mnist.onnx --saveEngine=mnist.trt
```
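If you want the Python script to regenerate mnist.trt when it is missing, the same trtexec call can be wrapped with subprocess. The sketch below is only an illustration: the helper names trtexec_command and build_engine_if_missing are hypothetical, it assumes trtexec is reachable on PATH, and --fp16 is an optional trtexec flag that the original command does not use.

```python
import shutil
import subprocess
from pathlib import Path


def trtexec_command(onnx_path, engine_path, trtexec="trtexec", fp16=False):
    """Hypothetical helper: build the argument list for the trtexec conversion."""
    cmd = [trtexec, f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        # Optional: let TensorRT also use FP16 kernels (not in the original command)
        cmd.append("--fp16")
    return cmd


def build_engine_if_missing(onnx_path="mnist.onnx", engine_path="mnist.trt", trtexec="trtexec"):
    """Hypothetical helper: run trtexec only if the engine file does not exist yet."""
    if Path(engine_path).exists():
        return False
    exe = shutil.which(trtexec)  # on Windows this also finds trtexec.exe
    if exe is None:
        raise FileNotFoundError(f"{trtexec} was not found on PATH")
    # check=True raises CalledProcessError if the conversion fails
    subprocess.run(trtexec_command(onnx_path, engine_path, exe), check=True)
    return True
```

Note that a serialized engine is tied to the GPU and TensorRT version it was built with, so regenerating it on the target machine is usually the safer choice.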

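2. Import the required libraries. The matching tensorrt Python package is included in the TensorRT directory; install the GPU build of torch. Reference: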

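```python
import numpy as np
import pycuda.autoinit  # creates a CUDA context on the default GPU
import pycuda.driver as cuda
import tensorrt as trt
import torchvision.transforms as transforms
from PIL import Image

# Logger used by TensorRT to report warnings and errors
TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

# Load the MNIST TensorRT engine from file. The Runtime interface deserializes
# the engine; it can deserialize from any in-memory buffer (here, the file contents).
with open('mnist.trt', 'rb') as f, trt.Runtime(TRT_LOGGER) as runtime:
    engine = runtime.deserialize_cuda_engine(f.read())

# Create an execution context used to run inference with the engine
context = engine.create_execution_context()

# Create the input and output buffers
input_shape = (1, 1, 28, 28)
output_shape = (1, 10)

# Page-locked host memory, as required by the async copies below
input_host = cuda.pagelocked_empty(input_shape, dtype=np.float32)
output_host = cuda.pagelocked_empty(output_shape, dtype=np.float32)
print(input_shape)

input_device = cuda.mem_alloc(input_host.nbytes)
output_device = cuda.mem_alloc(output_host.nbytes)

# Device pointers in binding order (binding 0 = input, binding 1 = output)
bindings = [int(input_device), int(output_device)]

# Prepare the input: grayscale, resize to 28x28, scale pixels to [0, 1]
image = Image.open("9.png").convert('L')
image = transforms.Resize((28, 28))(image)
input_data = transforms.ToTensor()(image)
input_data = input_data.numpy().reshape(input_shape).astype(np.float32)
# Copy into the page-locked buffer instead of rebinding input_host
np.copyto(input_host, input_data)

# Run inference: host -> device copy, execute, device -> host copy
stream = cuda.Stream()
cuda.memcpy_htod_async(input_device, input_host, stream)
# TensorRT 8.x API; TensorRT 10 replaces it with set_tensor_address() + execute_async_v3()
context.execute_async_v2(bindings=bindings, stream_handle=stream.handle)
cuda.memcpy_dtoh_async(output_host, output_device, stream)

# Wait until all work queued on the stream has finished
stream.synchronize()

# Print the 10 raw scores and the predicted digit
print(output_host)
print(np.argmax(output_host))
```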
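The preprocessing and postprocessing steps can also be written in plain NumPy, which makes it easy to check what the engine actually receives. This is a sketch with hypothetical helper names (preprocess, softmax): it assumes the image has already been resized to 28x28, and the invert option is an assumption for images with a dark digit on a light background (TensorRT's own sampleOnnxMNIST applies 1.0 - pixel/255 to its sample images). Treating the 10 outputs as unnormalized scores is also an assumption; softmax does not change the argmax, so the predicted digit is the same either way.

```python
import numpy as np


def preprocess(gray, invert=False):
    """Mimic transforms.ToTensor() on an 8-bit 28x28 grayscale image:
    uint8 HxW -> float32 array of shape (1, 1, 28, 28) with values in [0, 1]."""
    x = np.asarray(gray, dtype=np.float32) / 255.0
    if invert:
        # Assumption: flip polarity for a dark digit on a light background
        x = 1.0 - x
    return np.ascontiguousarray(x.reshape(1, 1, 28, 28))


def softmax(logits):
    """Numerically stable softmax over the last axis (turns scores into probabilities)."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    fake = np.zeros((28, 28), dtype=np.uint8)  # synthetic image, not real MNIST data
    fake[10:18, 12:16] = 255
    x = preprocess(fake)
    print(x.shape, x.dtype, x.nbytes)  # (1, 1, 28, 28) float32 3136
    scores = np.array([[0.1, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 3.0]], dtype=np.float32)
    print(int(np.argmax(softmax(scores))))  # 9
```

The 3136 bytes (1 x 1 x 28 x 28 float32 values at 4 bytes each) is the same size that input_host.nbytes passes to cuda.mem_alloc in the script above.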

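This article shows how to use TensorRT's trtexec tool to convert an ONNX model (mnist.onnx) into a TRT engine (mnist.trt), then import the relevant libraries in Python (TensorRT, NumPy and PyCUDA), load the engine, and run inference for MNIST handwritten-digit recognition. It creates the input and output buffers, prepares the input image, runs inference and reads back the result, illustrating GPU-accelerated inference with TensorRT.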

