Tools in MCP (A Step-by-Step Walkthrough: Fetching Weather Information)

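1. Introduction to Tools

An MCP server can provide three kinds of capability: tools (Tool), resources (Resource), and prompts (Prompt).

A tool gives the large model the ability to perform some action, such as fetching data from a given URL or carrying out an operation. On the server side, a tool is defined with the @mcp.tool() decorator, where mcp is the server instance (if the instance is named app, the decorator is @app.tool()). The function must carry a detailed description of what it does, so that the model can later decide when to call it. Example: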

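@app.tool()
def plus_tool(a: float, b: float) -> float:
    '''
    Compute the sum of two floating-point numbers.

    :param a: the first floating-point number
    :param b: the second floating-point number
    :return: the sum of the two numbers
    '''
    return a + b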
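To see why the docstring and type hints matter, the sketch below uses only the standard library to assemble the kind of metadata a model eventually sees for a tool: a name, a description, and a parameter schema. The helper describe_tool and the mapping PY_TO_JSON are hypothetical names written for this illustration; this is not how FastMCP is implemented internally.

```python
import inspect

# Hypothetical mapping from Python annotations to JSON Schema type names.
PY_TO_JSON = {float: "number", int: "integer", str: "string", bool: "boolean"}


def describe_tool(func):
    """Build a tool description from a function's signature and docstring."""
    signature = inspect.signature(func)
    properties = {
        name: {"type": PY_TO_JSON.get(param.annotation, "string")}
        for name, param in signature.parameters.items()
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            # Simplification: every parameter is treated as required.
            "required": list(properties),
        },
    }


def plus_tool(a: float, b: float) -> float:
    """Return the sum of two floating-point numbers."""
    return a + b


tool_info = describe_tool(plus_tool)
print(tool_info["name"])         # plus_tool
print(tool_info["description"])  # Return the sum of two floating-point numbers.
```

A function without a docstring would end up with an empty description here, which leaves the model with little to go on when choosing a tool.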

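Below, we use fetching the weather as an example to walk through how MCP tools are used.

2. Hands-On: Fetching the Weather Forecast

In the project, create a new folder named Tool_mcp, then create two .py files in it: the server, weather_search_server.py, and the client, weather_search_client.py.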

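2.1 Server

Basic version

Querying the weather requires calling an external API. Here we use the API offered by the widely used weatherapi site, shown below. Clicking View Docs opens the documentation.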

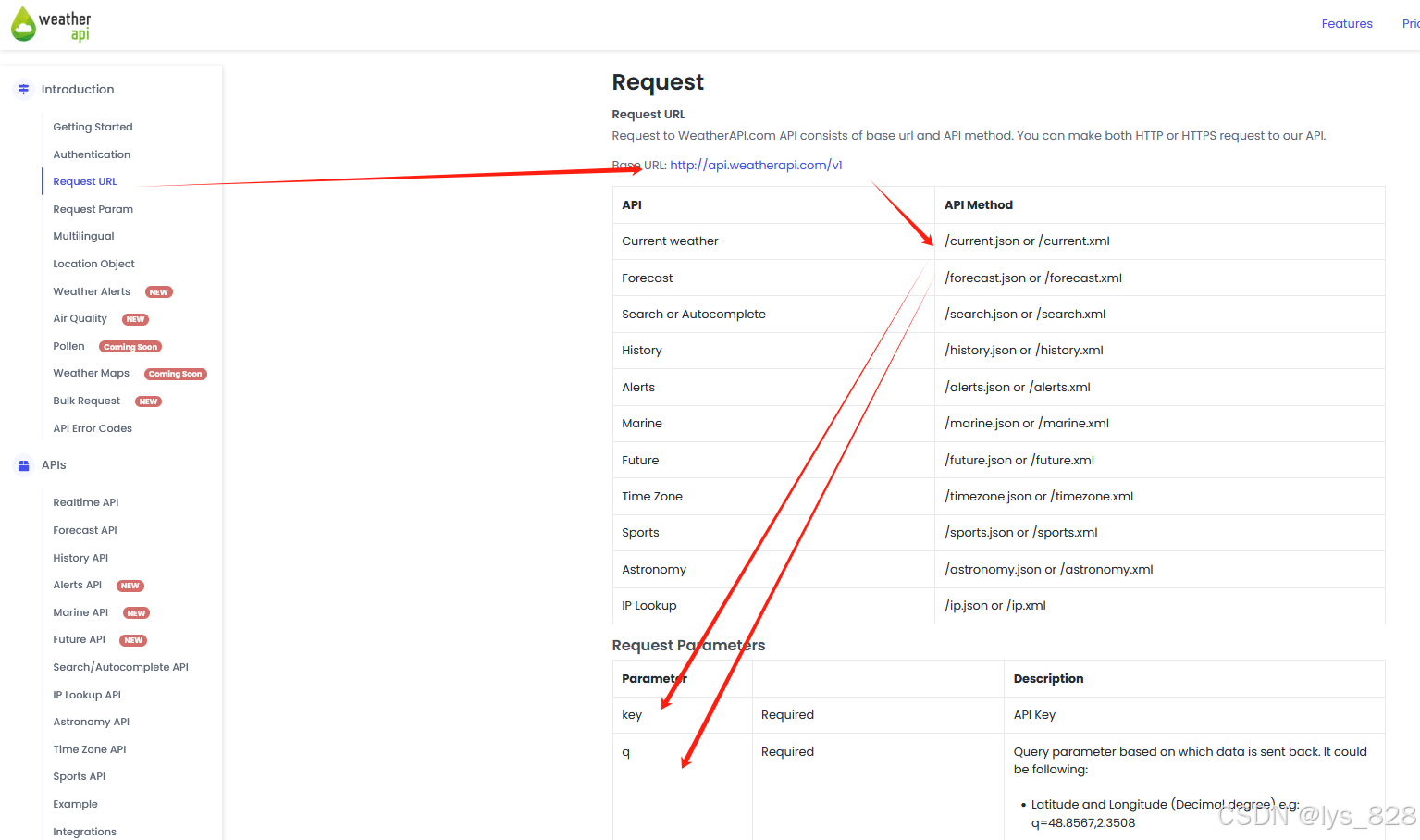

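Next, consult the API documentation.

From it we can determine the endpoint for the current weather and the parameters it requires: http://api.weatherapi.com/v1/current.json. key is the API key needed to call the site's API, which you can get for free after registering (there is a trial period, long enough for learning purposes); q is the place or region to query.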
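As a quick check of how the two parameters fit together, the sketch below builds the full request URL with the standard library. build_weather_url is a hypothetical helper and YOUR_API_KEY is a placeholder, not a real key.

```python
from urllib.parse import urlencode

WEATHER_BASE_URL = "http://api.weatherapi.com/v1/current.json"


def build_weather_url(api_key: str, city: str) -> str:
    """Return the request URL with `key` and `q` as query parameters."""
    query = urlencode({"key": api_key, "q": city})
    return f"{WEATHER_BASE_URL}?{query}"


url = build_weather_url("YOUR_API_KEY", "Shenzhen")
print(url)
# http://api.weatherapi.com/v1/current.json?key=YOUR_API_KEY&q=Shenzhen
```

In the server code the same encoding is done by passing a params dict to the HTTP library, so this helper is only for inspecting the result.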

The complete server code is as follows (version 1: fetching the API data with the requests library):

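import sys

import requests
from mcp.server.fastmcp import FastMCP

weather_api_key = "eba0c4c0ad97xxxxx1632250206"  # replace with your own key
weather_base_url = "http://api.weatherapi.com/v1/current.json"

app = FastMCP()


@app.tool()
def get_weather(city: str):
    '''
    Get the current weather for a city.

    :param city: the city name, written in pinyin
    :return: the weather data returned by the API
    '''
    params = {
        "key": weather_api_key,
        "q": city,
    }
    result = requests.get(weather_base_url, params=params)
    # With the stdio transport, stdout carries the protocol messages,
    # so debug output is written to stderr instead.
    print(result.json(), file=sys.stderr)
    # Return JSON-compatible data so that the client can parse it
    return result.json()


if __name__ == "__main__":
    app.run(transport='stdio')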

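2.2 Client

The client code then needs only one change: the name of the server file that is run at the end, along with a query that suits the tool.

client = MCPClient('weather_search_server.py')
asyncio.run(client.run("Please look up the weather in Shenzhen"))

At this point, the code in weather_search_client.py is as follows (only the lines at the end that run the client have changed):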

import asyncio
import json
from contextlib import AsyncExitStack

from mcp import ClientSession
from mcp.client.stdio import StdioServerParameters, stdio_client
from openai import OpenAI


class MCPClient:
    def __init__(self, server_path: str):
        self.server_path = server_path
        self.deepseek = OpenAI(
            api_key="sk-e2bec0xxxxxfaad44b849",
            base_url="https://api.deepseek.com"
        )
        self.exit_stack = AsyncExitStack()

    async def run(self, query: str):
        # 1. Build the parameters for connecting to the server
        server_parameters = StdioServerParameters(
            command="python",
            args=[self.server_path]
        )
        # 2-3. Open the read/write streams and the session through the async exit stack
        read_stream, write_stream = await self.exit_stack.enter_async_context(
            stdio_client(server=server_parameters))
        session = await self.exit_stack.enter_async_context(
            ClientSession(read_stream=read_stream, write_stream=write_stream))
        # 4. Initialize the connection
        await session.initialize()
        # 5. List the tools the server offers
        response = await session.list_tools()
        # 6. Wrap each tool as a Function Calling object
        tools = []
        for tool in response.tools:
            name = tool.name
            description = tool.description
            input_schema = tool.inputSchema
            tools.append({
                "type": "function",
                "function": {
                    "name": name,
                    "description": description,
                    # The OpenAI-compatible format expects the schema under "parameters"
                    "parameters": input_schema,
                }
            })
        # 7. Send the message to the model and let it choose a tool
        #    (the model only selects the tool; it does not call it itself)
        # role:
        #   1. user: a message from the user to the model
        #   2. assistant: a message from the model to the user
        #   3. system: the system prompt for the model
        #   4. tool: the information returned after a function has run
        messages = [{
            "role": "user",
            "content": query
        }]
        deepseek_response = self.deepseek.chat.completions.create(
            messages=messages,
            model="deepseek-chat",
            tools=tools
        )
        choice = deepseek_response.choices[0]
        # finish_reason == 'tool_calls' means the model has selected a tool
        if choice.finish_reason == 'tool_calls':
            # Add the model's tool-selection message back to messages,
            # so that its later reply can be more precise
            messages.append(choice.message.model_dump())
            # Get the selected tools
            tool_calls = choice.message.tool_calls
            # Call each tool in turn
            for tool_call in tool_calls:
                tool_call_id = tool_call.id
                function = tool_call.function
                function_name = function.name
                function_arguments = json.loads(function.arguments)
                result = await session.call_tool(name=function_name, arguments=function_arguments)
                content = result.content[0].text
                messages.append({
                    "role": "tool",
                    "content": content,
                    "tool_call_id": tool_call_id
                })
            # Send the messages to the model again so it can produce the final reply
            response = self.deepseek.chat.completions.create(
                model="deepseek-chat",
                messages=messages
            )
            print(f"AI:{response.choices[0].message.content}")
        else:
            print('Unexpected reply: the model did not select a tool.')

    async def aclose(self):
        await self.exit_stack.aclose()


async def main():
    client = MCPClient('weather_search_server.py')
    try:
        await client.run("Please look up the weather in Shenzhen")
    finally:
        # Always close the resources, whether or not the code above raised
        await client.aclose()


if __name__ == '__main__':
    asyncio.run(main())
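Step 6 is where the MCP tool metadata is reshaped for the model. Below is a minimal sketch of that conversion as a standalone function, assuming an OpenAI-compatible chat API, which expects the schema under the key parameters. The name to_function_calling is hypothetical, and the sample schema is written by hand for illustration rather than taken from a running server.

```python
def to_function_calling(name: str, description: str, input_schema: dict) -> dict:
    """Wrap MCP tool metadata in the function-calling tool format."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": input_schema,
        },
    }


# Hand-written schema for illustration, shaped like a one-argument tool.
sample_schema = {
    "type": "object",
    "properties": {"city": {"type": "string"}},
    "required": ["city"],
}
tool_spec = to_function_calling(
    "get_weather", "Get the current weather for a city", sample_schema
)
print(tool_spec["function"]["name"])  # get_weather
```

Keeping the conversion in one small function makes it easy to check the shape of the payload before it is sent to the model.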

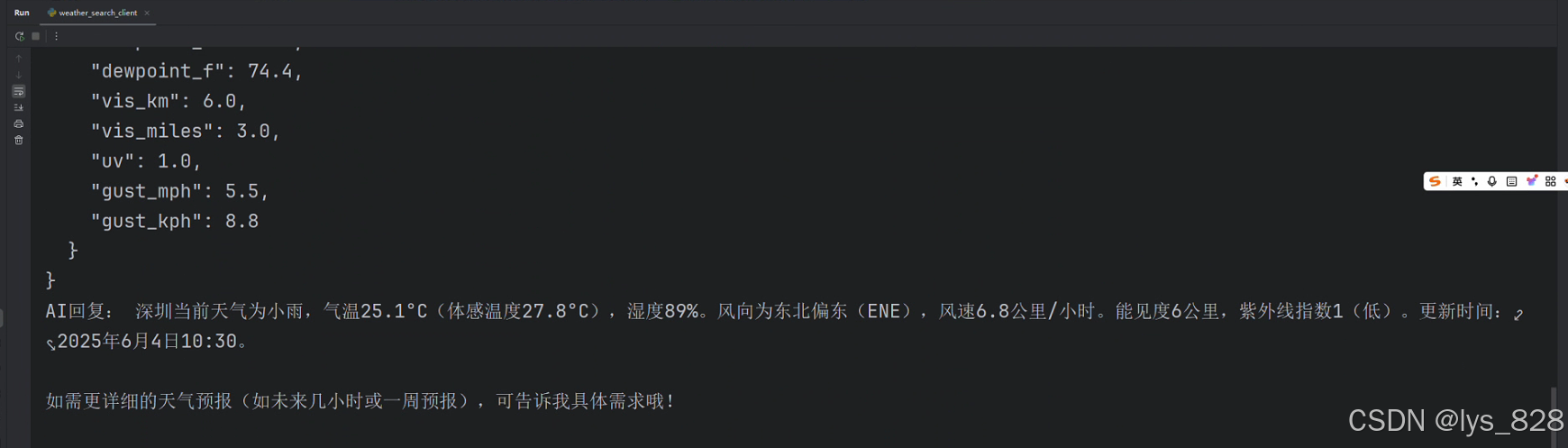

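Running the code produces the output shown in the screenshot. The first part of the output is the weather data printed by the server, exactly as fetched and without any processing; that data is handed straight to the model. Because the model is called twice, the second call returns the final answer directly.

Tool development with context management

In the first MCP project we already took context management into account in order to avoid resource leaks. The data-fetching code in the tool can be improved in the same way: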
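The two-call flow is easier to follow when the message list is written out. The sketch below builds, by hand, the list that would be sent on the second call. The id call_0 and the tool output are made-up placeholder values, not real API output.

```python
import json

messages = [{"role": "user", "content": "Please look up the weather in Shenzhen"}]

# After the first call: the assistant message that records the chosen tool.
messages.append({
    "role": "assistant",
    "content": "",
    "tool_calls": [{
        "id": "call_0",  # placeholder id
        "type": "function",
        "function": {
            "name": "get_weather",
            "arguments": json.dumps({"city": "Shenzhen"}),
        },
    }],
})

# After the tool has run: its output, linked back through tool_call_id.
messages.append({
    "role": "tool",
    "tool_call_id": "call_0",
    "content": json.dumps({"current": {"temp_c": 0.0}}),  # placeholder data
})

print([message["role"] for message in messages])
# ['user', 'assistant', 'tool']
```

The tool message has to carry the same id as the tool call it answers, which is how the model matches each result to its request.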

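# Inside get_weather(); needs `import sys`, because stdout is reserved for stdio protocol messages
with requests.get(weather_base_url, params=params) as result:
    print(result.json(), file=sys.stderr)
    return result.json()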

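Besides requests, the httpx library can also be used to fetch the data. It is introduced here as supplementary material, and exception handling can be added at the same time.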

import sys

import httpx

# Inside get_weather()
with httpx.Client() as client:
    try:
        response = client.get(weather_base_url, params=params)
        print(response.json(), file=sys.stderr)
        return response.json()
    except Exception as err:
        print(f"API request failed: {err}", file=sys.stderr)
        return {"error": f"API request failed: {err}"}

You can also make the tool asynchronous and use the AsyncExitStack class introduced earlier:

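@app.tool()
async def get_weather(city: str):
    '''
    Get the current weather for a city.

    :param city: the city name, written in pinyin
    :return: the weather data returned by the API, or an error message
    '''
    params = {
        "key": weather_api_key,
        "q": city,
    }
    exit_stack = AsyncExitStack()
    client = await exit_stack.enter_async_context(httpx.AsyncClient())
    try:
        response = await client.get(weather_base_url, params=params)
        # stdout is reserved for stdio protocol messages, so log to stderr
        print(response.json(), file=sys.stderr)
        return response.json()
    except Exception as err:
        print(f"API request failed: {err}", file=sys.stderr)
        return {"error": f"API request failed: {err}"}
    finally:
        # Close the stack; otherwise the AsyncClient is never released
        await exit_stack.aclose()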
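One detail worth checking with AsyncExitStack: a resource entered through enter_async_context is released only when the stack itself is closed. The standard-library sketch below demonstrates this with a stand-in resource. FakeClient, fetch, and events are hypothetical names used in place of a real HTTP client.

```python
import asyncio
from contextlib import AsyncExitStack

events = []


class FakeClient:
    """Stand-in for an async HTTP client; records when it opens and closes."""

    async def __aenter__(self):
        events.append("open")
        return self

    async def __aexit__(self, exc_type, exc, tb):
        events.append("close")
        return False

    async def get(self, url):
        events.append(f"get {url}")
        return {"ok": True}


async def fetch(url):
    # `async with` guarantees the stack, and everything on it, is closed.
    async with AsyncExitStack() as stack:
        client = await stack.enter_async_context(FakeClient())
        return await client.get(url)


result = asyncio.run(fetch("http://example.invalid"))
print(events)  # ['open', 'get http://example.invalid', 'close']
```

If the stack were created without async with and never closed with aclose(), the "close" event would not appear, which is the leak the context manager is meant to prevent.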

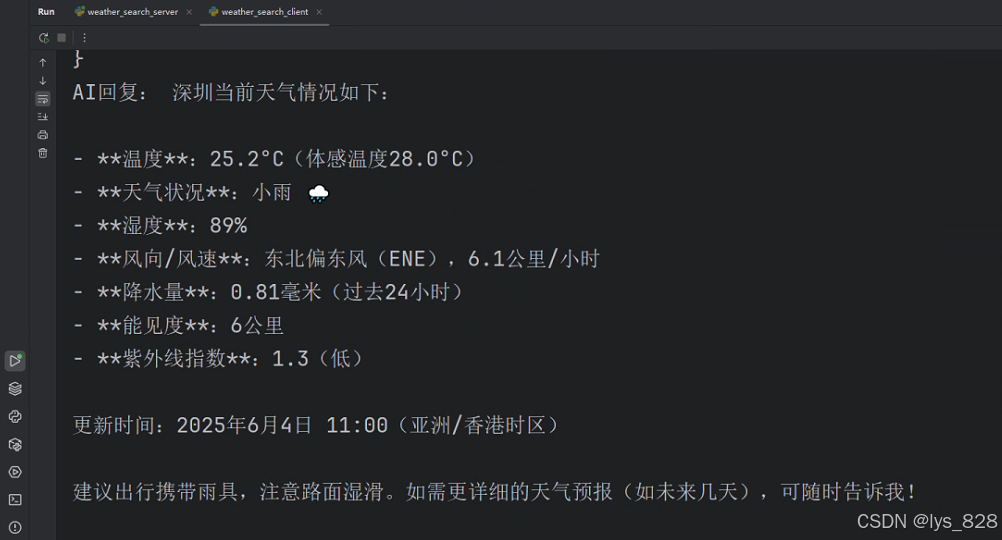

Running the client at this point produces the output shown in the screenshot.

3. Full server code

Finally, the complete server code is as follows:

"""
-------------------------------------------------------------------------------
@Project : MCP projects
File : weather_search_server.py
Time : 2025-06-04 9:50
author : musen
Email : xianl828@163.com
-------------------------------------------------------------------------------
"""
import sys
from contextlib import AsyncExitStack

import httpx
import requests
from mcp.server.fastmcp import FastMCP

weather_api_key = "eba0c4c0axxxxx32250206"  # the key from your own registered account
weather_base_url = "http://api.weatherapi.com/v1/current.json"

app = FastMCP()


@app.tool()
async def get_weather(city: str):
    '''
    Get the current weather for a city.

    :param city: the city name, written in pinyin
    :return: the weather data returned by the API, or an error message
    '''
    params = {
        "key": weather_api_key,
        "q": city,
    }
    exit_stack = AsyncExitStack()
    client = await exit_stack.enter_async_context(httpx.AsyncClient())
    try:
        response = await client.get(weather_base_url, params=params)
        # stdout is reserved for stdio protocol messages, so log to stderr
        print(response.json(), file=sys.stderr)
        return response.json()
    except Exception as err:
        print(f"API request failed: {err}", file=sys.stderr)
        return {"error": f"API request failed: {err}"}
    finally:
        # Close the stack; otherwise the AsyncClient is never released
        await exit_stack.aclose()

    # Alternative 1: httpx.AsyncClient as an async context manager
    # async with httpx.AsyncClient() as client:
    #     try:
    #         response = await client.get(weather_base_url, params=params)
    #         return response.json()
    #     except Exception as err:
    #         print(f"API request failed: {err}", file=sys.stderr)
    #         return {"error": f"API request failed: {err}"}

    # Alternative 2: the synchronous requests version
    # with requests.get(weather_base_url, params=params) as result:
    #     print(result.json(), file=sys.stderr)
    #     return result.json()


if __name__ == "__main__":
    app.run(transport='stdio')

4. Full client code

Finally, the complete client code is as follows:

"""
-------------------------------------------------------------------------------
@Project : MCP projects
File : client_stdio.py
Time : 2025-06-03 9:40
author : musen
Email : xianl828@163.com
-------------------------------------------------------------------------------
"""
import asyncio
import json
from contextlib import AsyncExitStack

from openai import OpenAI
from mcp.client.stdio import StdioServerParameters, stdio_client
from mcp import ClientSession


# stdio: the client starts a new child process that runs the server script
class MCPClient:
    def __init__(self, server_path):
        self.server_path = server_path
        self.deepseek = OpenAI(
            api_key="sk-5dxxxxxe4575ff9",
            base_url="https://api.deepseek.com",
        )
        self.exit_stack = AsyncExitStack()

    # Version 1: nested `async with` blocks
    async def _run(self, query: str):
        # 1. Build the parameters for connecting to the server
        server_parameters = StdioServerParameters(
            command="python",
            args=[self.server_path]
        )
        # 2. Open the read and write streams
        async with stdio_client(server=server_parameters) as (read_stream, write_stream):
            # 3. Create the session through which client and server communicate
            async with ClientSession(read_stream=read_stream, write_stream=write_stream) as session:
                # 4. Initialize the connection
                await session.initialize()
                # 5. List the tools the server offers
                response = await session.list_tools()
                # 6. Wrap each tool as a Function Calling object
                tools = []
                for tool in response.tools:
                    name = tool.name
                    description = tool.description
                    input_schema = tool.inputSchema
                    tools.append({
                        "type": "function",
                        "function": {
                            "name": name,
                            "description": description,
                            # The OpenAI-compatible format expects the schema under "parameters"
                            "parameters": input_schema,
                        }
                    })
                # 7. Send the message to the model and let it choose a tool
                #    (the model only selects the tool; it does not call it itself)
                # role:
                #   1. user: a message from the user to the model
                #   2. assistant: a message from the model to the user
                #   3. system: the system prompt for the model
                #   4. tool: the information returned after a function has run
                messages = [{
                    "role": "user",
                    "content": query
                }]
                deepseek_response = self.deepseek.chat.completions.create(
                    messages=messages,
                    model="deepseek-chat",
                    tools=tools
                )
                choice = deepseek_response.choices[0]
                # finish_reason == 'tool_calls' means the model has selected a tool
                if choice.finish_reason == 'tool_calls':
                    # Add the model's tool-selection message back to messages,
                    # so that its later reply can be more precise
                    messages.append(choice.message.model_dump())
                    # Get the selected tools
                    tool_calls = choice.message.tool_calls
                    # Call each tool in turn
                    for tool_call in tool_calls:
                        tool_call_id = tool_call.id
                        function = tool_call.function
                        function_name = function.name
                        function_arguments = json.loads(function.arguments)
                        result = await session.call_tool(name=function_name, arguments=function_arguments)
                        content = result.content[0].text
                        messages.append({
                            "role": "tool",
                            "content": content,
                            "tool_call_id": tool_call_id
                        })
                    # Send the messages to the model again so it can produce the final reply
                    response = self.deepseek.chat.completions.create(
                        model="deepseek-chat",
                        messages=messages
                    )
                    print(f"AI:{response.choices[0].message.content}")
                else:
                    print('Unexpected reply: the model did not select a tool.')

    # Version 2: the same flow, managed with AsyncExitStack
    async def run(self, query: str):
        # 1. Build the parameters for connecting to the server
        server_parameters = StdioServerParameters(
            command="python",
            args=[self.server_path]
        )
        # 2-3. Open the read/write streams and the session through the async exit stack
        read_stream, write_stream = await self.exit_stack.enter_async_context(
            stdio_client(server=server_parameters))
        session = await self.exit_stack.enter_async_context(
            ClientSession(read_stream=read_stream, write_stream=write_stream))
        # 4. Initialize the connection
        await session.initialize()
        # 5. List the tools the server offers
        response = await session.list_tools()
        # 6. Wrap each tool as a Function Calling object
        tools = []
        for tool in response.tools:
            name = tool.name
            description = tool.description
            input_schema = tool.inputSchema
            tools.append({
                "type": "function",
                "function": {
                    "name": name,
                    "description": description,
                    # The OpenAI-compatible format expects the schema under "parameters"
                    "parameters": input_schema,
                }
            })
        # 7. Send the message to the model and let it choose a tool
        #    (the model only selects the tool; it does not call it itself)
        # role:
        #   1. user: a message from the user to the model
        #   2. assistant: a message from the model to the user
        #   3. system: the system prompt for the model
        #   4. tool: the information returned after a function has run
        messages = [{
            "role": "user",
            "content": query
        }]
        deepseek_response = self.deepseek.chat.completions.create(
            messages=messages,
            model="deepseek-chat",
            tools=tools
        )
        choice = deepseek_response.choices[0]
        # finish_reason == 'tool_calls' means the model has selected a tool
        if choice.finish_reason == 'tool_calls':
            # Add the model's tool-selection message back to messages,
            # so that its later reply can be more precise
            messages.append(choice.message.model_dump())
            # Get the selected tools
            tool_calls = choice.message.tool_calls
            # Call each tool in turn
            for tool_call in tool_calls:
                tool_call_id = tool_call.id
                function = tool_call.function
                function_name = function.name
                function_arguments = json.loads(function.arguments)
                result = await session.call_tool(name=function_name, arguments=function_arguments)
                content = result.content[0].text
                messages.append({
                    "role": "tool",
                    "content": content,
                    "tool_call_id": tool_call_id
                })
            # Send the messages to the model again so it can produce the final reply
            response = self.deepseek.chat.completions.create(
                model="deepseek-chat",
                messages=messages
            )
            print(f"AI:{response.choices[0].message.content}")
        else:
            print('Unexpected reply: the model did not select a tool.')

    async def aclose(self):
        await self.exit_stack.aclose()


async def main():
    client = MCPClient('weather_search_server.py')
    try:
        await client.run("Please look up the weather in Shenzhen")
    finally:
        # Always close the resources, whether or not the code above raised
        await client.aclose()


if __name__ == '__main__':
    asyncio.run(main())

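That completes a detailed walkthrough of developing a Tool in MCP. Note that the fetched data is not processed at all here; it is handed straight to the model. A later improvement would be to extract only the relevant fields before passing them on, which makes the reply more efficient and focused and reduces token consumption.

That's a wrap! ✿✿ヽ(°▽°)ノ✿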
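As a rough idea of what that field extraction could look like, here is a small sketch. The function summarize_weather is hypothetical, and the field names (location.name, location.localtime, current.temp_c, current.condition.text, current.humidity) are assumptions about the response layout that should be checked against the API documentation. The sample dict is made up, not real API output.

```python
def summarize_weather(data: dict) -> dict:
    """Keep only a few fields from a current-weather response."""
    location = data.get("location", {})
    current = data.get("current", {})
    return {
        "city": location.get("name"),
        "local_time": location.get("localtime"),
        "temp_c": current.get("temp_c"),
        "condition": current.get("condition", {}).get("text"),
        "humidity": current.get("humidity"),
    }


# Made-up sample shaped like the assumed response, not real API output.
sample = {
    "location": {"name": "Shenzhen", "localtime": "2025-06-04 09:50"},
    "current": {
        "temp_c": 28.0,
        "condition": {"text": "Sunny"},
        "humidity": 70,
        "wind_kph": 10.0,
    },
}
summary = summarize_weather(sample)
print(summary)
```

The tool could return a summary like this instead of the full response, so the model receives only the fields it needs.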
