Background

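A while ago, a project needed a server that could receive collected data at high frequency, so I chose gRPC to build it. Below is a rough walkthrough of the core steps, with the source code included; feel free to download and use it.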

Results

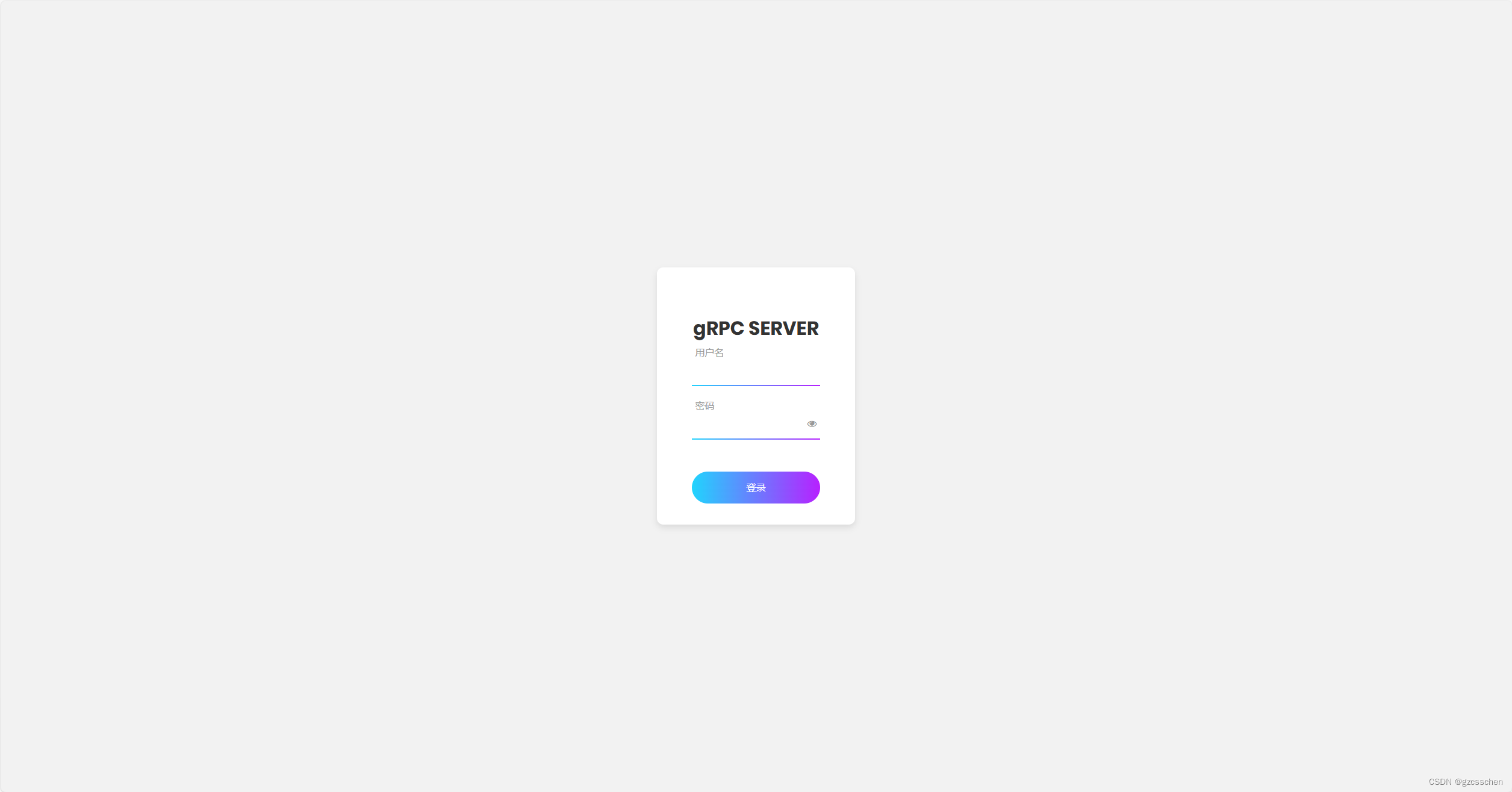

Server login page

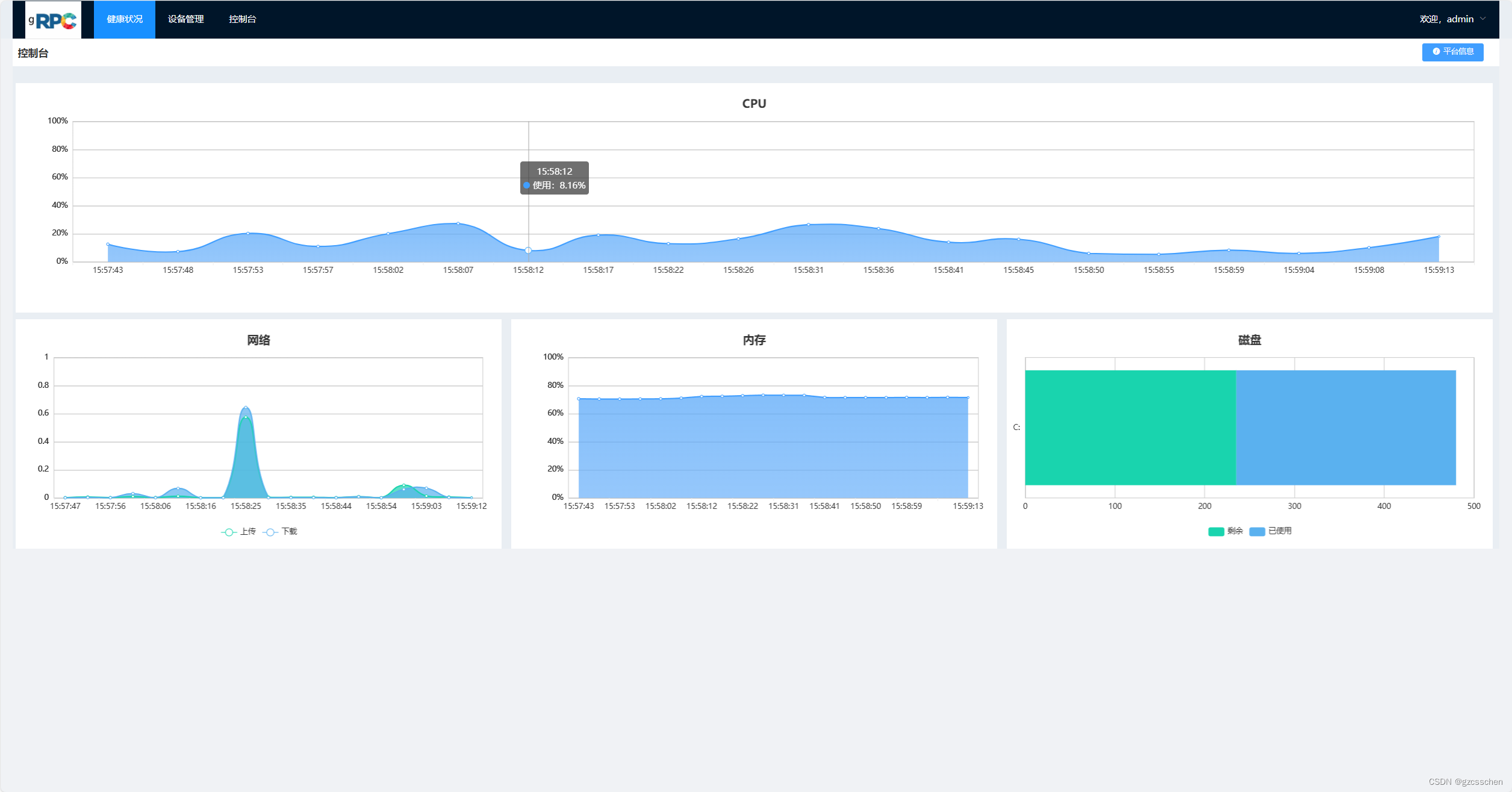

Server monitoring

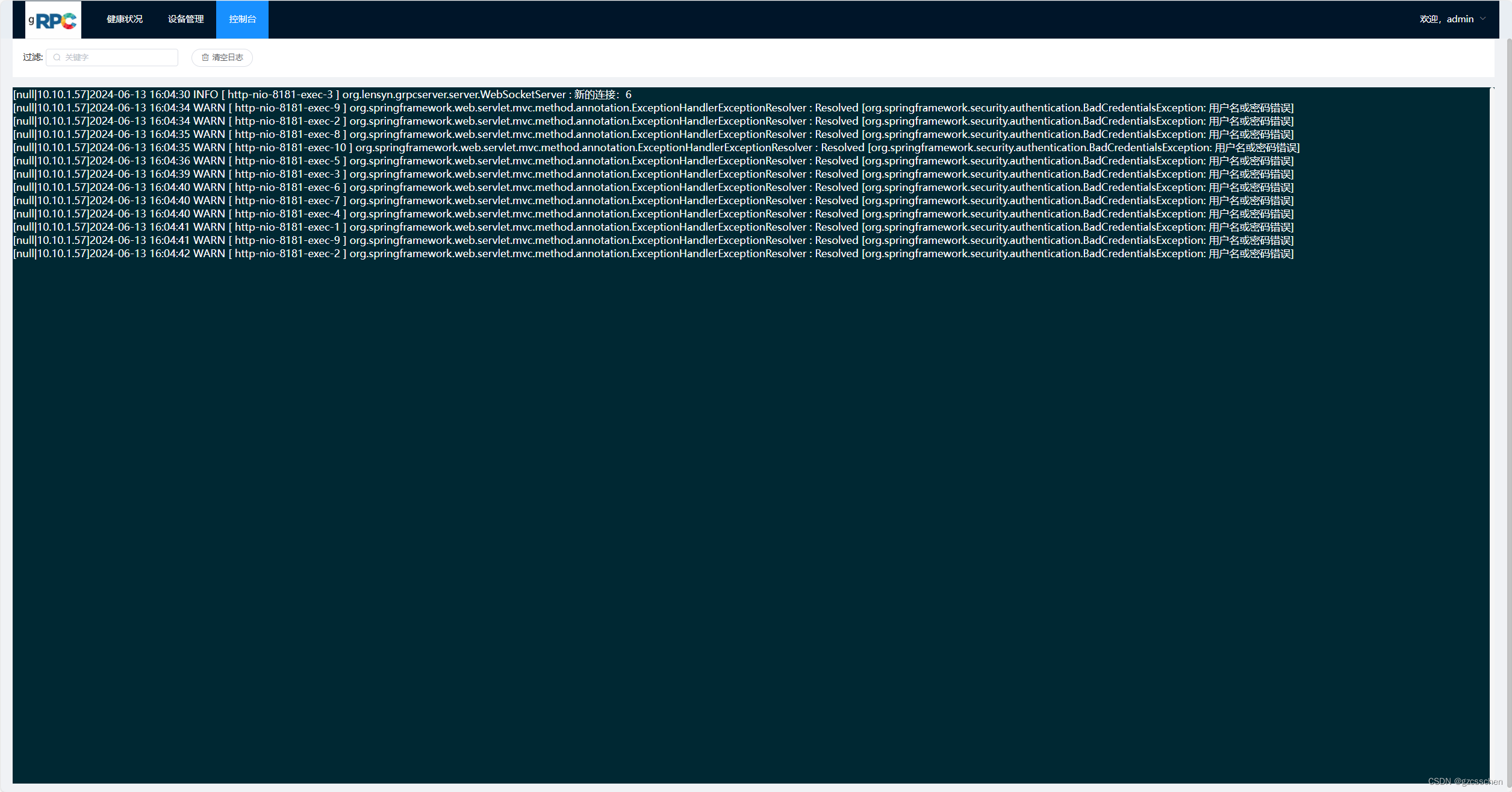

gRPC service log monitoring

1. Maven pom file

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.10</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>org.lensyn</groupId>

<artifactId>grpc-server</artifactId>

<version>1.0.0</version>

<name>grpc-server</name>

<description>grpc-server</description>

<properties>

<java.version>11</java.version>

<spring-boot.version>2.7.10</spring-boot.version>

<spring-data.version>2.7.10</spring-data.version>

<spring.version>5.3.26</spring.version>

<slf4j.version>1.7.32</slf4j.version>

<log4j.version>2.17.1</log4j.version>

<logback.version>1.2.10</logback.version>

<guava.version>31.1-jre</guava.version>

<caffeine.version>2.6.1</caffeine.version>

<commons-lang3.version>3.12.0</commons-lang3.version>

<commons-codec.version>1.15</commons-codec.version>

<commons-io.version>2.11.0</commons-io.version>

<commons-logging.version>1.2</commons-logging.version>

<commons-csv.version>1.4</commons-csv.version>

<jackson.version>2.13.4</jackson.version>

<jackson-databind.version>2.13.4.2</jackson-databind.version>

<gson.version>2.9.0</gson.version>

<freemarker.version>2.3.30</freemarker.version>

<protobuf.version>3.21.9</protobuf.version>

<grpc.version>1.42.1</grpc.version>

<lombok.version>1.18.18</lombok.version>

<netty.version>4.1.91.Final</netty.version>

<netty-tcnative.version>2.0.51.Final</netty-tcnative.version>

<!-- IMPORTANT: If you change the version of the kafka client, make sure to synchronize our overwritten implementation of the

org.apache.kafka.common.network.NetworkReceive class in the application module. It addresses the issue https://issues.apache.org/jira/browse/KAFKA-4090.

Here is the source to track https://github.com/apache/kafka/tree/trunk/clients/src/main/java/org/apache/kafka/common/network -->

<kafka.version>3.2.0</kafka.version>

<google.common.protos.version>2.1.0</google.common.protos.version> <!-- required by io.grpc:grpc-protobuf:1.38.0-->

<commons-beanutils.version>1.9.4</commons-beanutils.version>

<commons-collections.version>3.2.2</commons-collections.version>

<protobuf-dynamic.version>1.0.4TB</protobuf-dynamic.version>

<!-- BLACKBOX TEST SCOPE -->

<springfox-swagger.version>3.0.4</springfox-swagger.version>

<swagger-annotations.version>1.6.3</swagger-annotations.version>

<postgresql.driver.version>42.5.0</postgresql.driver.version>

<docker.image.prefix>repo.lensyn.com</docker.image.prefix>

<docker.repo>repo.lensyn.com</docker.repo>

<docker.name>grpc-server</docker.name>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<version>${spring-boot.version}</version>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>com.vaadin.external.google</groupId>

<artifactId>android-json</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.postgresql</groupId>

<artifactId>postgresql</artifactId>

<version>${postgresql.driver.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<scope>runtime</scope>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-security</artifactId>

</dependency>

<!--websocket -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-websocket</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-commons</artifactId>

<version>${spring-data.version}</version>

</dependency>

<dependency>

<groupId>com.google.code.gson</groupId>

<artifactId>gson</artifactId>

<version>${gson.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>log4j-over-slf4j</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jul-to-slf4j</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>${log4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>${log4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-to-slf4j</artifactId>

<version>${log4j.version}</version>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-core</artifactId>

<version>${logback.version}</version>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<version>${logback.version}</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>${guava.version}</version>

</dependency>

<dependency>

<groupId>com.google.protobuf</groupId>

<artifactId>protobuf-java</artifactId>

<version>${protobuf.version}</version>

</dependency>

<dependency>

<groupId>com.google.protobuf</groupId>

<artifactId>protobuf-java-util</artifactId>

<version>${protobuf.version}</version>

</dependency>

<dependency>

<groupId>org.thingsboard</groupId>

<artifactId>protobuf-dynamic</artifactId>

<version>${protobuf-dynamic.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/com.google.protobuf/protoc -->

<dependency>

<groupId>com.google.protobuf</groupId>

<artifactId>protoc</artifactId>

<version>3.21.9</version>

<type>pom</type>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-netty-shaded</artifactId>

<version>${grpc.version}</version>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-protobuf</artifactId>

<version>${grpc.version}</version>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-stub</artifactId>

<version>${grpc.version}</version>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-alts</artifactId>

<version>${grpc.version}</version>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-auth</artifactId>

<version>${grpc.version}</version>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-api</artifactId>

<version>${grpc.version}</version>

</dependency>

<dependency>

<groupId>commons-beanutils</groupId>

<artifactId>commons-beanutils</artifactId>

<version>${commons-beanutils.version}</version>

</dependency>

<dependency>

<groupId>commons-collections</groupId>

<artifactId>commons-collections</artifactId>

<version>${commons-collections.version}</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>${commons-lang3.version}</version>

</dependency>

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>${commons-io.version}</version>

</dependency>

<dependency>

<groupId>commons-codec</groupId>

<artifactId>commons-codec</artifactId>

<version>${commons-codec.version}</version>

</dependency>

<dependency>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

<version>${commons-logging.version}</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-csv</artifactId>

<version>${commons-csv.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-all</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-tcnative-boringssl-static</artifactId>

<version>${netty-tcnative.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-tcnative-classes</artifactId>

<version>${netty-tcnative.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-buffer</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-codec</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-codec-http</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-codec-http2</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-codec-mqtt</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-codec-socks</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-common</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-handler</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-handler-proxy</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-resolver</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-transport</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency> <!-- brought by com.microsoft.azure:azure-servicebus -->

<groupId>io.netty</groupId>

<artifactId>netty-transport-native-epoll</artifactId>

<version>${netty.version}</version>

<classifier>linux-x86_64</classifier>

</dependency>

<dependency> <!-- brought by com.microsoft.azure:azure-servicebus -->

<groupId>io.netty</groupId>

<artifactId>netty-transport-native-kqueue</artifactId>

<version>${netty.version}</version>

<classifier>osx-x86_64</classifier>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-transport-native-unix-common</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>${kafka.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>${lombok.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>io.swagger</groupId>

<artifactId>swagger-annotations</artifactId>

<version>${swagger-annotations.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-jpa</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

</dependency>

<!-- https://mvnrepository.com/artifact/org.xerial/sqlite-jdbc -->

<dependency>

<groupId>org.xerial</groupId>

<artifactId>sqlite-jdbc</artifactId>

<version>3.36.0.3</version>

</dependency>

<dependency>

<groupId>com.github.gwenn</groupId>

<artifactId>sqlite-dialect</artifactId>

<version>0.1.2</version>

</dependency>

<!-- https://mvnrepository.com/artifact/com.alibaba/fastjson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>2.0.49</version>

</dependency>

<!-- online API documentation -->

<!-- https://mvnrepository.com/artifact/io.springfox/springfox-swagger-ui -->

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger-ui</artifactId>

<version>3.0.0</version>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger2</artifactId>

<version>3.0.0</version>

</dependency>

<!-- read system information -->

<dependency>

<groupId>com.github.oshi</groupId>

<artifactId>oshi-core</artifactId>

<version>6.2.2</version>

</dependency>

<!-- MyBatis pagination plugin -->

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>1.4.6</version>

</dependency>

<!-- JWT implementation -->

<dependency>

<groupId>org.bitbucket.b_c</groupId>

<artifactId>jose4j</artifactId>

<version>0.9.3</version>

</dependency>

<dependency>

<groupId>org.jetbrains</groupId>

<artifactId>annotations</artifactId>

<version>24.1.0</version>

</dependency>

</dependencies>

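<build>

<!-- os-maven-plugin defines ${os.detected.classifier}, which the protoc and protoc-gen-grpc-java coordinates below rely on -->

<extensions>

<extension>

<groupId>kr.motd.maven</groupId>

<artifactId>os-maven-plugin</artifactId>

<version>1.7.1</version>

</extension>

</extensions>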

<finalName>${project.artifactId}</finalName>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.1</version>

<configuration>

<source>11</source>

<target>11</target>

</configuration>

</plugin>

<plugin>

<groupId>pl.project13.maven</groupId>

<artifactId>git-commit-id-plugin</artifactId>

<version>3.0.1</version>

<configuration>

<offline>true</offline>

<failOnNoGitDirectory>false</failOnNoGitDirectory>

<dateFormat>yyyyMMdd</dateFormat>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.22.2</version>

<configuration>

<skipTests>true</skipTests>

</configuration>

</plugin>

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>exec-maven-plugin</artifactId>

<version>3.0.0</version>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-dependency-plugin</artifactId>

<executions>

<execution>

<id>copy-protoc</id>

<phase>generate-sources</phase>

<goals>

<goal>copy</goal>

</goals>

<configuration>

<artifactItems>

<artifactItem>

<groupId>com.google.protobuf</groupId>

<artifactId>protoc</artifactId>

<version>${protobuf.version}</version>

<classifier>${os.detected.classifier}</classifier>

<type>exe</type>

<overWrite>true</overWrite>

<outputDirectory>${project.build.directory}</outputDirectory>

</artifactItem>

</artifactItems>

</configuration>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.xolstice.maven.plugins</groupId>

<artifactId>protobuf-maven-plugin</artifactId>

<version>0.6.1</version>

<configuration>

<!--

The version of protoc must match protobuf-java. If you don't depend on

protobuf-java directly, you will be transitively depending on the

protobuf-java version that grpc depends on.

-->

<protocArtifact>com.google.protobuf:protoc:${protobuf.version}:exe:${os.detected.classifier}</protocArtifact>

<pluginId>grpc-java</pluginId>

<pluginArtifact>io.grpc:protoc-gen-grpc-java:${grpc.version}:exe:${os.detected.classifier}</pluginArtifact>

<protoSourceRoot>${project.basedir}/src/main/java/org/lensyn/grpcserver/proto</protoSourceRoot>

<!-- default value: target path of the Java files generated from the .proto files -->

</configuration>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>compile-custom</goal>

<goal>test-compile</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<fork>true</fork>

<includeSystemScope>true</includeSystemScope>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-resources-plugin</artifactId>

<executions>

<execution>

<id>copy-docker-config</id>

<phase>process-resources</phase>

<goals>

<goal>copy-resources</goal>

</goals>

<configuration>

<outputDirectory>${project.build.directory}</outputDirectory>

<resources>

<resource>

<directory>docker</directory>

<includes>

<include>Dockerfile</include>

<include>Dockerfile-arm64</include>

</includes>

<filtering>true</filtering>

</resource>

<resource>

<directory>src/main/resources/</directory>

<includes>

<include>logback-spring-local.xml</include>

</includes>

<filtering>false</filtering>

</resource>

<resource>

<directory>src/main/resources/sql</directory>

<filtering>false</filtering>

</resource>

</resources>

</configuration>

</execution>

</executions>

</plugin>

<!--<plugin>

<groupId>com.spotify</groupId>

<artifactId>dockerfile-maven-plugin</artifactId>

<version>1.4.13</version>

<executions>

<execution>

<id>default</id>

<goals>

<goal>build</goal>

<goal>push</goal>

</goals>

</execution>

</executions>

<configuration>

<repository>${docker.image.prefix}/${project.artifactId}</repository>

<tag>${project.version}</tag>

<buildArgs>

<JAR_FILE>${project.build.finalName}.jar</JAR_FILE>

</buildArgs>

</configuration>

</plugin>-->

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>exec-maven-plugin</artifactId>

<version>3.1.1</version>

<executions>

<!--<execution>

<id>push-amd-images</id>

<phase>install</phase>

<goals>

<goal>exec</goal>

</goals>

<configuration>

<executable>docker</executable>

<workingDirectory>${project.build.directory}</workingDirectory>

<arguments>

<argument>buildx</argument>

<argument>build</argument>

<argument>-fDockerfile</argument>

<argument>-t</argument>

<argument>${docker.repo}/${docker.name}:${project.version}</argument>

<argument>--platform=linux/amd64</argument>

<argument>-o</argument>

<argument>type=docker</argument>

<argument>.</argument>

</arguments>

</configuration>

</execution>-->

<execution>

<id>push-arm-images</id>

<phase>install</phase>

<goals>

<goal>exec</goal>

</goals>

<configuration>

<executable>docker</executable>

<workingDirectory>${project.build.directory}</workingDirectory>

<arguments>

<argument>buildx</argument>

<argument>build</argument>

<argument>-fDockerfile-arm64</argument>

<argument>-t</argument>

<argument>${docker.repo}/${docker.name}-arm64:${project.version}</argument>

<argument>--platform=linux/arm64</argument>

<argument>-o</argument>

<argument>type=docker</argument>

<argument>.</argument>

</arguments>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

<resources>

<resource>

<directory>src/main/resources</directory>

</resource>

<resource>

<directory>src/main/java</directory>

<includes>

<include>**/*.xml</include>

</includes>

</resource>

</resources>

</build>

<distributionManagement>

<repository>

<id>thingsboard-repo-deploy</id>

<name>ThingsBoard Repo Deployment</name>

<url>https://repo.thingsboard.io/artifactory/libs-release-public</url>

</repository>

</distributionManagement>

<repositories>

<repository>

<id>central</id>

<url>https://repo1.maven.org/maven2/</url>

</repository>

<repository>

<id>spring-snapshots</id>

<name>Spring Snapshots</name>

<url>https://repo.spring.io/snapshot</url>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

<repository>

<id>spring-milestones</id>

<name>Spring Milestones</name>

<url>https://repo.spring.io/milestone</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>typesafe</id>

<name>Typesafe Repository</name>

<url>https://repo.typesafe.com/typesafe/releases/</url>

</repository>

<repository>

<id>sonatype</id>

<url>https://oss.sonatype.org/content/groups/public</url>

</repository>

</repositories>

<profiles>

<profile>

<id>default</id>

<activation>

<activeByDefault>true</activeByDefault>

</activation>

</profile>

<!-- download sources under target/dependencies -->

<!-- mvn package -Pdownload-dependencies -Dclassifier=sources dependency:copy-dependencies -->

<profile>

<id>download-dependencies</id>

<properties>

<downloadSources>true</downloadSources>

<downloadJavadocs>true</downloadJavadocs>

</properties>

</profile>

</profiles>

</project>
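The protoc and protoc-gen-grpc-java coordinates in the pom end with `${os.detected.classifier}`. That property is not built into Maven; it is normally supplied by the os-maven-plugin (`kr.motd.maven:os-maven-plugin`) registered as a build extension, and it resolves to values such as `linux-x86_64` or `osx-aarch_64`. The following is a simplified, hypothetical Java sketch of that mapping from the JVM's `os.name` and `os.arch` properties, handy for a rough check of which protoc binary a build machine will ask for. It is not the plugin's real implementation and only covers common platforms.

```java
import java.util.Locale;

// Simplified, hypothetical sketch of how a protoc classifier such as "linux-x86_64"
// can be derived from os.name / os.arch. It is NOT os-maven-plugin's real code and
// only knows the common platforms that protoc binaries are published for.
final class ProtocClassifier {

    private ProtocClassifier() {
    }

    static String normalizeOs(String osName) {
        String n = osName.toLowerCase(Locale.ROOT);
        if (n.startsWith("linux")) {
            return "linux";
        }
        if (n.startsWith("mac") || n.startsWith("osx")) {
            return "osx";
        }
        if (n.startsWith("windows")) {
            return "windows";
        }
        return "unknown";
    }

    static String normalizeArch(String osArch) {
        switch (osArch.toLowerCase(Locale.ROOT)) {
            case "amd64":
            case "x86_64":
            case "x64":
                return "x86_64";
            case "aarch64":
            case "arm64":
                return "aarch_64";
            default:
                return "unknown";
        }
    }

    // Joins both parts into "<os>-<arch>", e.g. "linux-x86_64" or "osx-aarch_64".
    static String classifier(String osName, String osArch) {
        return normalizeOs(osName) + "-" + normalizeArch(osArch);
    }

    public static void main(String[] args) {
        // Prints the classifier for the JVM running this program.
        System.out.println(classifier(System.getProperty("os.name"), System.getProperty("os.arch")));
    }
}
```

On an x86-64 Linux build host, for example, `com.google.protobuf:protoc:${protobuf.version}:exe:${os.detected.classifier}` is expected to resolve to the `linux-x86_64` protoc executable.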

2. Core server code

package org.lensyn.grpcserver.server;

import com.google.common.util.concurrent.FutureCallback;

import com.google.common.util.concurrent.Futures;

import com.google.common.util.concurrent.ListenableFuture;

import com.google.common.util.concurrent.SettableFuture;

import io.grpc.Server;

import io.grpc.netty.shaded.io.grpc.netty.NettyServerBuilder;

import io.grpc.stub.StreamObserver;

import lombok.extern.slf4j.Slf4j;

import org.apache.nifi.processors.grpc.gen.edge.v1.RequestMsg;

import org.apache.nifi.processors.grpc.gen.edge.v1.ResponseMsg;

import org.lensyn.grpcserver.common.executors.ThingsBoardThreadFactory;

import org.lensyn.grpcserver.common.util.ResourceUtils;

import org.lensyn.grpcserver.data.entity.Device;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.stereotype.Service;

import javax.annotation.PostConstruct;

import javax.annotation.PreDestroy;

import java.io.IOException;

import java.io.InputStream;

import java.util.*;

import java.util.concurrent.*;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

@Service

@Slf4j

@ConditionalOnProperty(prefix = "edges", value = "enabled", havingValue = "true")

public class GrpcServer extends org.apache.nifi.processors.grpc.gen.edge.v1.EdgeRpcServiceGrpc.EdgeRpcServiceImplBase {

private final ConcurrentMap<UUID, GrpcSession> sessions = new ConcurrentHashMap<>();

private final ConcurrentMap<UUID, Lock> sessionNewEventsLocks = new ConcurrentHashMap<>();

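// Different sessions update this map under different per-session locks, so the map itself must be thread-safe

private final Map<Long, Boolean> sessionNewEvents = new ConcurrentHashMap<>();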

private final ConcurrentMap<Long, ScheduledFuture<?>> sessionEdgeEventChecks = new ConcurrentHashMap<>();

private ScheduledFuture clientConfigCheckTask;

//private final ConcurrentMap<UUID, Consumer<FromEdgeSyncResponse>> localSyncEdgeRequests = new ConcurrentHashMap<>();

@Value("${edges.rpc.port}")

private int rpcPort;

@Value("${edges.rpc.ssl.enabled}")

private boolean sslEnabled;

@Value("${edges.rpc.ssl.cert}")

private String certFileResource;

@Value("${edges.rpc.ssl.private_key}")

private String privateKeyResource;

@Value("${edges.state.persistToTelemetry:false}")

private boolean persistToTelemetry;

@Value("${edges.rpc.client_max_keep_alive_time_sec:1}")

private int clientMaxKeepAliveTimeSec;

@Value("${edges.rpc.max_inbound_message_size:4194304}")

private int maxInboundMessageSize;

@Value("${edges.rpc.keep_alive_time_sec:10}")

private int keepAliveTimeSec;

@Value("${edges.rpc.keep_alive_timeout_sec:5}")

private int keepAliveTimeoutSec;

@Value("${edges.scheduler_pool_size}")

private int schedulerPoolSize;

@Value("${edges.send_scheduler_pool_size}")

private int sendSchedulerPoolSize;

@Autowired

private GrpcContextComponent ctx;

private Server server;

private ScheduledExecutorService edgeEventProcessingExecutorService;

private ScheduledExecutorService sendDownlinkExecutorService;

private ScheduledExecutorService executorService;

@PostConstruct

public void init() {

log.info("Initializing Edge RPC service!");

NettyServerBuilder builder = NettyServerBuilder.forPort(rpcPort)

.permitKeepAliveTime(clientMaxKeepAliveTimeSec, TimeUnit.SECONDS)

.keepAliveTime(keepAliveTimeSec, TimeUnit.SECONDS)

.keepAliveTimeout(keepAliveTimeoutSec, TimeUnit.SECONDS)

.permitKeepAliveWithoutCalls(true)

.maxInboundMessageSize(maxInboundMessageSize)

.addService(this);

if (sslEnabled) {

// try-with-resources closes both streams; useTransportSecurity() reads them while building the SSL context

try (InputStream certFileIs = ResourceUtils.getInputStream(this, certFileResource);

InputStream privateKeyFileIs = ResourceUtils.getInputStream(this, privateKeyResource)) {

builder.useTransportSecurity(certFileIs, privateKeyFileIs);

} catch (Exception e) {

log.error("Unable to set up SSL context. Reason: " + e.getMessage(), e);

throw new RuntimeException("Unable to set up SSL context!", e);

}

}

// Create the executors before start(): handleMsgs() can be called as soon as the server is listening,

// and it hands sendDownlinkExecutorService to every new GrpcSession

this.edgeEventProcessingExecutorService = Executors.newScheduledThreadPool(schedulerPoolSize, ThingsBoardThreadFactory.forName("edge-event-check-scheduler"));

this.sendDownlinkExecutorService = Executors.newScheduledThreadPool(sendSchedulerPoolSize, ThingsBoardThreadFactory.forName("edge-send-scheduler"));

this.executorService = Executors.newSingleThreadScheduledExecutor(ThingsBoardThreadFactory.forName("edge-service"));

server = builder.build();

log.info("Going to start Edge RPC server using port: {}", rpcPort);

try {

server.start();

} catch (IOException e) {

log.error("Failed to start Edge RPC server!", e);

throw new RuntimeException("Failed to start Edge RPC server!", e);

}

log.info("Edge RPC service initialized!");

}

@PreDestroy

public void destroy() {

log.info("Shutting down the GrpcServer service");

if (server != null) {

server.shutdownNow();

}

for (Map.Entry<Long, ScheduledFuture<?>> entry : sessionEdgeEventChecks.entrySet()) {

Long clientId = entry.getKey();

ScheduledFuture<?> sessionEdgeEventCheck = entry.getValue();

if (sessionEdgeEventCheck != null && !sessionEdgeEventCheck.isCancelled() && !sessionEdgeEventCheck.isDone()) {

sessionEdgeEventCheck.cancel(true);

sessionEdgeEventChecks.remove(clientId);

}

}

if (edgeEventProcessingExecutorService != null) {

edgeEventProcessingExecutorService.shutdownNow();

}

if (sendDownlinkExecutorService != null) {

sendDownlinkExecutorService.shutdownNow();

}

if (executorService != null) {

executorService.shutdownNow();

}

}

@Override

public StreamObserver<RequestMsg> handleMsgs(StreamObserver<ResponseMsg> outputStream) {

return new GrpcSession(ctx, outputStream, this::onEdgeConnect, this::onEdgeDisconnect, sendDownlinkExecutorService, this.maxInboundMessageSize).getInputStream();

}

private void onEdgeConnect(Long clientId, GrpcSession grpcSession) {

sessions.put(grpcSession.getSessionId(), grpcSession);

final Lock newEventLock = sessionNewEventsLocks.computeIfAbsent(grpcSession.getSessionId(), id -> new ReentrantLock());

newEventLock.lock();

try {

sessionNewEvents.put(clientId, true);

} finally {

newEventLock.unlock();

}

cancelScheduleEdgeEventsCheck(clientId);

scheduleEdgeEventsCheck(grpcSession);

}

private ListenableFuture<Boolean> clientConfigCheck(GrpcSession session) {

// A SettableFuture can only be completed once, so set the result exactly once, after the lookup

SettableFuture<Boolean> result = SettableFuture.create();

try {

// Reload the client configuration from the database

Optional<Device> optional = ctx.getClientService().findClientByRoutingKey(session.getDevice().getRoutingKey());

optional.ifPresent(session::setDevice);

result.set(true);

} catch (Exception e) {

log.warn("[{}] Failed to refresh client configuration", session.getDevice().getId(), e);

result.set(false);

}

return result;

}

private void scheduleEdgeEventsCheck(GrpcSession session) {

Long clientId = session.getDevice().getId();

UUID sessionId = session.getSessionId();

if (sessions.containsKey(sessionId)) {

ScheduledFuture<?> edgeEventCheckTask = edgeEventProcessingExecutorService.schedule(() -> {

try {

final Lock newEventLock = sessionNewEventsLocks.computeIfAbsent(sessionId, id -> new ReentrantLock());

newEventLock.lock();

try {

/*if (Boolean.TRUE.equals(sessionNewEvents.get(clientId))) {

log.trace("[{}][{}] Set session new events flag to false", "tenantId", session.getClient().getId());

sessionNewEvents.put(clientId, false);

*//*Futures.addCallback(session.processEdgeEvents(), new FutureCallback<>() {

@Override

public void onSuccess(Boolean newEventsAdded) {

if (Boolean.TRUE.equals(newEventsAdded)) {

sessionNewEvents.put(clientId, true);

}

scheduleEdgeEventsCheck(session);

}

@Override

public void onFailure(Throwable t) {

log.warn("[{}] Failed to process edge events for edge [{}]!", "tenantId", session.getClient().getId(), t);

scheduleEdgeEventsCheck(session);

}

}, ctx.getGrpcCallbackExecutorService());*//*

} else {

scheduleEdgeEventsCheck(session);

}*/

// Check whether the client's configuration in the database has been updated

ListenableFuture<Boolean> clientconfigCheck = clientConfigCheck(session);

Futures.addCallback(clientconfigCheck,new FutureCallback<>() {

@Override

public void onSuccess(Boolean result) {

scheduleEdgeEventsCheck(session);

}

@Override

public void onFailure(Throwable t) {

scheduleEdgeEventsCheck(session);

}

}, ctx.getGrpcCallbackExecutorService());

} finally {

newEventLock.unlock();

}

} catch (Exception e) {

log.warn("[{}] Failed to process edge events for edge [{}]!", "tenantId", session.getDevice().getId(), e);

}

}, ctx.getEdgeEventStorageSettings().getNoRecordsSleepInterval(), TimeUnit.MILLISECONDS);

sessionEdgeEventChecks.put(clientId, edgeEventCheckTask);

log.trace("[{}] Check edge event scheduled for edge [{}]", "tenantId", clientId);

} else {

log.debug("[{}] Session was removed and edge event check schedule must not be started [{}]",

"tenantId", clientId);

}

}

private void cancelScheduleEdgeEventsCheck(Long clientId) {

log.trace("[{}] cancelling edge event check for edge", clientId);

if (sessionEdgeEventChecks.containsKey(clientId)) {

ScheduledFuture<?> sessionEdgeEventCheck = sessionEdgeEventChecks.get(clientId);

if (sessionEdgeEventCheck != null && !sessionEdgeEventCheck.isCancelled() && !sessionEdgeEventCheck.isDone()) {

sessionEdgeEventCheck.cancel(true);

sessionEdgeEventChecks.remove(clientId);

}

}

}

private void onEdgeDisconnect(Long clientId, UUID sessionId) {

log.info("[{}][{}] edge disconnected!", clientId, sessionId);

// remove() returns the session only if it was still registered under this id

GrpcSession toRemove = sessions.remove(sessionId);

if (toRemove != null) {

final Lock newEventLock = sessionNewEventsLocks.computeIfAbsent(sessionId, id -> new ReentrantLock());

newEventLock.lock();

try {

sessionNewEvents.remove(clientId);

} finally {

newEventLock.unlock();

}

} else {

log.debug("[{}] edge session [{}] is not available anymore, nothing to remove. most probably this session is already outdated!", clientId, sessionId);

}

cancelScheduleEdgeEventsCheck(clientId);

}

}
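`scheduleEdgeEventsCheck` does not use a fixed-rate timer: each run schedules one delayed task, the task's callback schedules the next one, and `cancelScheduleEdgeEventsCheck` cancels the pending handle when a client connects again or disconnects. The JDK-only sketch below isolates that pattern so it runs without gRPC, Guava or Spring. The class and method names (`SessionCheckLoop`, `connect`, `disconnect`) are hypothetical, and the `check` callback stands in for `clientConfigCheck`.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical, JDK-only sketch of the "run a check, then schedule the next one" loop
// built by scheduleEdgeEventsCheck / cancelScheduleEdgeEventsCheck in GrpcServer.
final class SessionCheckLoop {

    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    // Live sessions: a loop keeps re-scheduling itself only while its session is registered.
    private final Map<UUID, Boolean> sessions = new ConcurrentHashMap<>();
    // Handle of the pending one-shot task per session, kept so it can be cancelled.
    private final Map<UUID, ScheduledFuture<?>> pending = new ConcurrentHashMap<>();
    private final long intervalMs;
    private final Runnable check;

    public SessionCheckLoop(long intervalMs, Runnable check) {
        this.intervalMs = intervalMs;
        this.check = check;
    }

    // Counterpart of onEdgeConnect: register the session and start its loop.
    public void connect(UUID sessionId) {
        sessions.put(sessionId, Boolean.TRUE);
        cancel(sessionId);
        schedule(sessionId);
    }

    // Counterpart of onEdgeDisconnect: forget the session and cancel its pending run.
    public void disconnect(UUID sessionId) {
        sessions.remove(sessionId);
        cancel(sessionId);
    }

    public boolean isActive(UUID sessionId) {
        return sessions.containsKey(sessionId);
    }

    public void shutdown() {
        sessions.clear();
        scheduler.shutdownNow();
    }

    private void schedule(UUID sessionId) {
        if (!sessions.containsKey(sessionId) || scheduler.isShutdown()) {
            return; // session gone or service stopping: the loop ends here
        }
        ScheduledFuture<?> task;
        try {
            task = scheduler.schedule(() -> {
                try {
                    check.run();
                } catch (RuntimeException e) {
                    // A failing check must not stop the loop (GrpcServer also re-schedules in onFailure).
                } finally {
                    schedule(sessionId);
                }
            }, intervalMs, TimeUnit.MILLISECONDS);
        } catch (RejectedExecutionException e) {
            return; // the scheduler was shut down between the check above and this call
        }
        pending.put(sessionId, task);
        // If disconnect() ran meanwhile, drop the task that was just scheduled.
        if (!sessions.containsKey(sessionId)) {
            task.cancel(false);
            pending.remove(sessionId, task);
        }
    }

    private void cancel(UUID sessionId) {
        ScheduledFuture<?> task = pending.remove(sessionId);
        if (task != null) {
            task.cancel(false);
        }
    }
}
```

A self-rescheduling one-shot task makes stopping trivial: once the session is gone, the next run is simply never scheduled. The sketch keys pending tasks by session id rather than by client id as GrpcServer does, so a reconnect under a new session cannot overwrite the handle of a loop that is still running; treat that as a design suggestion, not a required change.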

3. GrpcSession connection management

/**

* Copyright © 2016-2023 The Thingsboard Authors

* <p>

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

* <p>

* http://www.apache.org/licenses/LICENSE-2.0

* <p>

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.lensyn.grpcserver.server;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

import com.google.common.util.concurrent.FutureCallback;

import com.google.common.util.concurrent.Futures;

import com.google.common.util.concurrent.ListenableFuture;

import com.google.common.util.concurrent.SettableFuture;

import io.grpc.stub.StreamObserver;

import lombok.Data;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.StringUtils;

import org.apache.commons.lang3.time.StopWatch;

import org.apache.nifi.processors.grpc.gen.edge.v1.*;

import org.checkerframework.checker.nullness.qual.Nullable;

import org.lensyn.grpcserver.common.util.EdgeUtils;

import org.lensyn.grpcserver.common.util.SpringContextUtil;

import org.lensyn.grpcserver.data.entity.Device;

import org.lensyn.grpcserver.data.page.PageLink;

import org.lensyn.grpcserver.kafka.ProducerService;

import org.springframework.context.ApplicationContext;

import org.springframework.data.util.Pair;

import org.springframework.util.CollectionUtils;

import java.io.Closeable;

import java.util.*;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.ReentrantLock;

import java.util.function.BiConsumer;

@Slf4j

@Data

public final class GrpcSession implements Closeable {

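// Serializes writes to this session's outputStream (StreamObserver is not thread-safe); one lock per session, so different clients do not block each other.

private final ReentrantLock downlinkMsgLock = new ReentrantLock();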

private static final int MAX_DOWNLINK_ATTEMPTS = 10; // max number of attempts to send a downlink message while the edge is connected

private static final String QUEUE_START_TS_ATTR_KEY = "queueStartTs";

private static final String QUEUE_START_SEQ_ID_ATTR_KEY = "queueStartSeqId";

private final UUID sessionId;

private final BiConsumer<Long, GrpcSession> sessionOpenListener;

private final BiConsumer<Long, UUID> sessionCloseListener;

private final GrpcSessionState sessionState = new GrpcSessionState();

private GrpcContextComponent ctx;

private Device device;

private StreamObserver<RequestMsg> inputStream;

private StreamObserver<ResponseMsg> outputStream;

private boolean connected;

private boolean syncCompleted;

private Long newStartTs;

private Long previousStartTs;

private Long newStartSeqId;

private Long previousStartSeqId;

private Long seqIdEnd;

private EdgeVersion edgeVersion;

private int maxInboundMessageSize;

private int clientMaxInboundMessageSize;

private ScheduledExecutorService sendDownlinkExecutorService;

private ApplicationContext appCtx;

private ProducerService producerService;

private static final String PROTOCOL_NODB_DATA_PREFIX = "PROTOCOL_NODB_DATA_"; // key prefix for uploaded data collected from non-database sources

private static final String PROTOCOL_BATCH_DATA_PREFIX = "PROTOCOL_BATCH_DATA_"; // key prefix for uploaded data collected from databases

// Scratch check: strips the batch-data prefix from a sample key and prints "JX_WA_JSJ".

public static void main(String[] args) {

String sampleKey = "PROTOCOL_BATCH_DATA_JX_WA_JSJ";

System.out.println(sampleKey.substring(PROTOCOL_BATCH_DATA_PREFIX.length()));

}

GrpcSession(GrpcContextComponent ctx, StreamObserver<ResponseMsg> outputStream, BiConsumer<Long, GrpcSession> sessionOpenListener,

BiConsumer<Long, UUID> sessionCloseListener, ScheduledExecutorService sendDownlinkExecutorService, int maxInboundMessageSize) {

this.sessionId = UUID.randomUUID();

this.ctx = ctx;

this.outputStream = outputStream;

this.sessionOpenListener = sessionOpenListener;

this.sessionCloseListener = sessionCloseListener;

this.sendDownlinkExecutorService = sendDownlinkExecutorService;

this.maxInboundMessageSize = maxInboundMessageSize;

initInputStream();

appCtx = SpringContextUtil.getAppContext();

producerService = appCtx.getBean(ProducerService.class);

}

private void initInputStream() {

this.inputStream = new StreamObserver<>() {

@Override

public void onNext(RequestMsg requestMsg) {

//log.info("grpc session ID {} " , sessionId );

if (!connected && requestMsg.getMsgType().equals(RequestMsgType.CONNECT_RPC_MESSAGE)) {

ConnectResponseMsg responseMsg = processConnect(requestMsg.getConnectRequestMsg());

outputStream.onNext(ResponseMsg.newBuilder()

.setConnectResponseMsg(responseMsg)

.build());

if (ConnectResponseCode.ACCEPTED != responseMsg.getResponseCode()) {

outputStream.onError(new RuntimeException(responseMsg.getErrorMsg()));

} else {

if (requestMsg.getConnectRequestMsg().hasMaxInboundMessageSize()) {

log.debug("[{}] Client max inbound message size: {}", sessionId, requestMsg.getConnectRequestMsg().getMaxInboundMessageSize());

clientMaxInboundMessageSize = requestMsg.getConnectRequestMsg().getMaxInboundMessageSize();

}

log.debug("[{}][{}] Client connected successfully", sessionId, requestMsg.getConnectRequestMsg().getEdgeRoutingKey());

connected = true;

}

}

if (connected) {

if (requestMsg.getMsgType().equals(RequestMsgType.SYNC_REQUEST_RPC_MESSAGE)) {

if (requestMsg.hasSyncRequestMsg()) {

boolean fullSync = false;

if (requestMsg.getSyncRequestMsg().hasFullSync()) {

fullSync = requestMsg.getSyncRequestMsg().getFullSync();

}

startSyncProcess(fullSync);

} else {

syncCompleted = true;

}

}

if (requestMsg.getMsgType().equals(RequestMsgType.UPLINK_RPC_MESSAGE)) {

if (requestMsg.hasUplinkMsg()) {

// An uplink request carries no ConnectRequestMsg, so log the routing key of the connected device instead.

log.debug("[{}][{}] Client onUplinkMsg : {}", sessionId, device.getRoutingKey(), requestMsg);

onUplinkMsg(ctx, requestMsg.getUplinkMsg());

}

if (requestMsg.hasDownlinkResponseMsg()) {

log.debug("[{}][{}] Client onDownlinkResponse : {}", sessionId, device.getRoutingKey(), requestMsg);

onDownlinkResponse(ctx, requestMsg.getDownlinkResponseMsg());

}

}

}

}

@Override

public void onError(Throwable t) {

log.error("[{}] Stream was terminated due to error:", sessionId, t);

closeSession();

}

@Override

public void onCompleted() {

log.info("[{}] Stream was closed and completed successfully!", sessionId);

closeSession();

}

private void closeSession() {

connected = false;

if (device != null) {

try {

sessionCloseListener.accept(device.getId(), sessionId);

} catch (Exception e) {

log.error("[{}][{}] Failed to notify the session close listener", device.getId(), sessionId, e);

}

}

try {

outputStream.onCompleted();

} catch (Exception ignored) {

}

}

};

}

private ConnectResponseMsg processConnect(ConnectRequestMsg request) {

//log.trace("[{}] processConnect [{}]", this.sessionId, request);

Optional<Device> optional = ctx.getClientService().findClientByRoutingKey(request.getEdgeRoutingKey());

if (optional.isPresent()) {

device = optional.get();

try {

if (device.getSecret().equals(request.getEdgeSecret())) {

sessionOpenListener.accept(device.getId(), this);

this.edgeVersion = request.getEdgeVersion();

//processSaveEdgeVersionAsAttribute(request.getEdgeVersion().name());

return ConnectResponseMsg.newBuilder()

.setResponseCode(ConnectResponseCode.ACCEPTED)

.setErrorMsg("")

//.setConfiguration(ctx.getEdgeMsgConstructor().constructEdgeConfiguration(edge))

.setMaxInboundMessageSize(maxInboundMessageSize)

.build();

}

return ConnectResponseMsg.newBuilder()

.setResponseCode(ConnectResponseCode.BAD_CREDENTIALS)

.setErrorMsg("Failed to validate the edge!")

.setConfiguration(EdgeConfiguration.getDefaultInstance()).build();

} catch (Exception e) {

log.error("[{}] Failed to process edge connection!", request.getEdgeRoutingKey(), e);

return ConnectResponseMsg.newBuilder()

.setResponseCode(ConnectResponseCode.SERVER_UNAVAILABLE)

.setErrorMsg("Failed to process edge connection!")

.setConfiguration(EdgeConfiguration.getDefaultInstance()).build();

}

}

return ConnectResponseMsg.newBuilder()

.setResponseCode(ConnectResponseCode.BAD_CREDENTIALS)

.setErrorMsg("Failed to find the edge! Routing key: " + request.getEdgeRoutingKey())

.setConfiguration(EdgeConfiguration.getDefaultInstance()).build();

}

public void startSyncProcess(boolean fullSync) {

log.trace("[{}][{}] Starting edge sync process", device.getId(), this.sessionId);

syncCompleted = false;

interruptGeneralProcessingOnSync();

doSync(new GrpcSyncCursor(ctx, device, fullSync));

}

private void doSync(GrpcSyncCursor cursor) {

if (cursor.hasNext()) {

EdgeEventFetcher next = cursor.getNext();

log.info("[{}][{}] starting sync process, cursor current idx = {}, class = {}",

device.getId(), sessionId, cursor.getCurrentIdx(), next.getClass().getSimpleName());

ListenableFuture<Pair<Long, Long>> future = startProcessingEdgeEvents(next);

Futures.addCallback(future, new FutureCallback<>() {

@Override

public void onSuccess(@Nullable Pair<Long, Long> result) {

doSync(cursor);

}

@Override

public void onFailure(Throwable t) {

log.error("[{}][{}] Exception during sync process", device.getId(), sessionId, t);

}

}, ctx.getGrpcCallbackExecutorService());

} else {

DownlinkMsg syncCompleteDownlinkMsg = DownlinkMsg.newBuilder()

.setDownlinkMsgId(EdgeUtils.nextPositiveInt())

.setSyncCompletedMsg(SyncCompletedMsg.newBuilder().build())

.build();

Futures.addCallback(sendDownlinkMsgsPack(Collections.singletonList(syncCompleteDownlinkMsg)), new FutureCallback<>() {

@Override

public void onSuccess(Boolean isInterrupted) {

syncCompleted = true;

//ctx.getClusterService().onEdgeEventUpdate(edge.getTenantId(), edge.getId());

}

@Override

public void onFailure(Throwable t) {

log.error("[{}][{}] Exception while sending the sync completed message", device.getId(), sessionId, t);

}

}, ctx.getGrpcCallbackExecutorService());

}

}

private ListenableFuture<Boolean> sendDownlinkMsgsPack(List<DownlinkMsg> downlinkMsgsPack) {

interruptPreviousSendDownlinkMsgsTask();

sessionState.setSendDownlinkMsgsFuture(SettableFuture.create());

sessionState.getPendingMsgsMap().clear();

downlinkMsgsPack.forEach(msg -> sessionState.getPendingMsgsMap().put(msg.getDownlinkMsgId(), msg));

scheduleDownlinkMsgsPackSend(1);

return sessionState.getSendDownlinkMsgsFuture();

}

private void scheduleDownlinkMsgsPackSend(int attempt) {

Runnable sendDownlinkMsgsTask = () -> {

try {

if (isConnected() && sessionState.getPendingMsgsMap().values().size() > 0) {

List<DownlinkMsg> copy = new ArrayList<>(sessionState.getPendingMsgsMap().values());

if (attempt > 1) {

log.warn("[{}][{}] Failed to deliver the batch: {}, attempt: {}", this.device.getId(), this.sessionId, copy, attempt);

}

log.trace("[{}][{}][{}] downlink msg(s) are going to be sent.", this.device.getId(), this.sessionId, copy.size());

for (DownlinkMsg downlinkMsg : copy) {

if (this.clientMaxInboundMessageSize != 0 && downlinkMsg.getSerializedSize() > this.clientMaxInboundMessageSize) {

log.error("[{}][{}] Downlink msg size [{}] exceeds client max inbound message size [{}]. Skipping this message. " +

"Please increase the value of the CLOUD_RPC_MAX_INBOUND_MESSAGE_SIZE env variable on the edge and restart it. " +

"Message {}", this.device.getId(), this.sessionId, downlinkMsg.getSerializedSize(),

this.clientMaxInboundMessageSize, downlinkMsg);

sessionState.getPendingMsgsMap().remove(downlinkMsg.getDownlinkMsgId());

} else {

sendDownlinkMsg(ResponseMsg.newBuilder()

.setDownlinkMsg(downlinkMsg)

.build());

}

}

if (attempt < MAX_DOWNLINK_ATTEMPTS) {

scheduleDownlinkMsgsPackSend(attempt + 1);

} else {

log.warn("[{}][{}] Failed to deliver the batch after {} attempts. The remaining messages will be discarded: {}",

this.device.getId(), this.sessionId, MAX_DOWNLINK_ATTEMPTS, copy);

stopCurrentSendDownlinkMsgsTask(false);

}

} else {

stopCurrentSendDownlinkMsgsTask(false);

}

} catch (Exception e) {

log.warn("[{}][{}] Failed to send downlink msgs. Error msg {}", this.device.getId(), this.sessionId, e.getMessage(), e);

stopCurrentSendDownlinkMsgsTask(true);

}

};

if (attempt == 1) {

sendDownlinkExecutorService.submit(sendDownlinkMsgsTask);

} else {

sessionState.setScheduledSendDownlinkTask(

sendDownlinkExecutorService.schedule(

sendDownlinkMsgsTask,

ctx.getEventStorageSettings().getSleepIntervalBetweenBatches(),

TimeUnit.MILLISECONDS)

);

}

}

private void onUplinkMsg(GrpcContextComponent ctx, UplinkMsg uplinkMsg) {

log.info("processUplinkMsg begin");

StopWatch stopWatch = new StopWatch();

stopWatch.start();

ListenableFuture<List<Void>> future = processUplinkMsg(ctx, uplinkMsg);

Futures.addCallback(future, new FutureCallback<>() {

@Override

public void onSuccess(@Nullable List<Void> result) {

UplinkResponseMsg uplinkResponseMsg = UplinkResponseMsg.newBuilder()

.setUplinkMsgId(uplinkMsg.getUplinkMsgId())

.setSuccess(true).build();

sendDownlinkMsg(ResponseMsg.newBuilder()

.setUplinkResponseMsg(uplinkResponseMsg)

.build());

stopWatch.stop();

log.info("sessionID:{} end processUplinkMsg {}, time cost: {} ms", sessionId, "success", stopWatch.getTime(TimeUnit.MILLISECONDS));

}

@Override

public void onFailure(Throwable t) {

String errorMsg = EdgeUtils.createErrorMsgFromRootCauseAndStackTrace(t);

UplinkResponseMsg uplinkResponseMsg = UplinkResponseMsg.newBuilder()

.setUplinkMsgId(uplinkMsg.getUplinkMsgId())

.setSuccess(false).setErrorMsg(errorMsg).build();

sendDownlinkMsg(ResponseMsg.newBuilder()

.setUplinkResponseMsg(uplinkResponseMsg)

.build());

stopWatch.stop();

log.info("sessionID:{} end processUplinkMsg {}, time cost: {} ms", sessionId, "failure", stopWatch.getTime(TimeUnit.MILLISECONDS));

}

}, ctx.getGrpcCallbackExecutorService());

}

private ListenableFuture<List<Void>> processUplinkMsg(GrpcContextComponent ctx, UplinkMsg uplinkMsg) {

List<ListenableFuture<Void>> result = new ArrayList<>();

log.info("sessionID:{} client key {}, monitor topic setting {}, batch topic setting {}",

sessionId, this.device.getRoutingKey(), this.device.getMonitorTopic(), this.device.getBatchTopic());

if (StringUtils.isEmpty(this.device.getMonitorTopic()) && StringUtils.isEmpty(this.device.getBatchTopic())) {

log.error("sessionID:{} client topic settings must not both be empty", sessionId);

return Futures.immediateFailedFuture(new RuntimeException("Client topic settings must not both be empty"));

}

try {

if (uplinkMsg.getEntityDataCount() > 0) {

for (EntityDataProto entityData : uplinkMsg.getEntityDataList()) {

Object object = JSON.parse(entityData.getJsonData());

// Not a JSON array: a single JSON object

if (object instanceof JSONObject) {

try {

JSONObject jsonObject = (JSONObject) object;

Iterator<String> keys = jsonObject.keySet().iterator();

String firstKey = keys.next();

log.info("sessionID:{} received primary KEY: {}", sessionId, firstKey);

// Real-time computer monitoring data (sent to the monitor topic)

if (StringUtils.isNotEmpty(firstKey) && firstKey.startsWith(PROTOCOL_NODB_DATA_PREFIX)) {

Object firstKeyValueObj = JSON.parse(String.valueOf(jsonObject.get(firstKey)));

if (firstKeyValueObj instanceof JSONObject) {

List<String> batchMsgs = new ArrayList<>();

batchMsgs.add(increaseMsg(firstKey,firstKeyValueObj, PROTOCOL_NODB_DATA_PREFIX));

log.info("sessionID:{} starting processUplinkMsg...KEYFLAG {}, message size {} send to topic {} firstdata example {}",

sessionId, firstKey, batchMsgs.size(), this.device.getMonitorTopic(),

batchMsgs.isEmpty() ? "" : batchMsgs.get(0));

sendBatchMsgKafka(this.device.getMonitorTopic(), batchMsgs, firstKey);

} else if (firstKeyValueObj instanceof JSONArray) {

JSONArray jsonArray = (JSONArray) firstKeyValueObj;

List<String> batchMsgs = new ArrayList<>();

for (int i = 0; i < jsonArray.size(); i++) {

batchMsgs.add(increaseMsg(firstKey,jsonArray.getJSONObject(i), PROTOCOL_NODB_DATA_PREFIX));

}

log.info("sessionID:{} starting processUplinkMsg...KEYFLAG {}, message size {} send to topic {} firstdata example {}",

sessionId, firstKey, batchMsgs.size(), this.device.getMonitorTopic(),

batchMsgs.isEmpty() ? "" : batchMsgs.get(0));

sendBatchMsgKafka(this.device.getMonitorTopic(), batchMsgs, firstKey);

}

} else {

Object firstKeyValueObj = JSON.parse(String.valueOf(jsonObject.get(firstKey)));

if (firstKeyValueObj instanceof JSONObject) {

List<String> batchMsgs = new ArrayList<>();

//batchMsgs.add(increaseMsg(firstKey,firstKeyValueObj,PROTOCOL_BATCH_DATA_PREFIX));

batchMsgs.add(JSON.toJSONString(firstKeyValueObj));

log.info("sessionID:{} starting processUplinkMsg...KEYFLAG {}, message size {} send to topic {} firstdata example {}",

sessionId, firstKey, batchMsgs.size(), this.device.getBatchTopic(),

batchMsgs.isEmpty() ? "" : batchMsgs.get(0));

sendBatchMsgKafka(this.device.getBatchTopic(), batchMsgs, firstKey);

} else if (firstKeyValueObj instanceof JSONArray) {

JSONArray jsonArray = (JSONArray) firstKeyValueObj;

List<String> batchMsgs = new ArrayList<>();

for (int i = 0; i < jsonArray.size(); i++) {

//batchMsgs.add(increaseMsg(firstKey,jsonArray.getJSONObject(i),PROTOCOL_BATCH_DATA_PREFIX));

batchMsgs.add(JSON.toJSONString(jsonArray.getJSONObject(i)));

}

log.info("sessionID:{} starting processUplinkMsg...KEYFLAG {}, message size {} send to topic {} firstdata example {}",

sessionId, firstKey, batchMsgs.size(), this.device.getBatchTopic(),

batchMsgs.isEmpty() ? "" : batchMsgs.get(0));

sendBatchMsgKafka(this.device.getBatchTopic(), batchMsgs, firstKey);

}

}

} catch (Exception e) {

log.error("sessionID:{} failed to process uplink JSON object", sessionId, e);

return Futures.immediateFailedFuture(e);

}

} else if (object instanceof JSONArray) {

try {

JSONArray jsonArray = (JSONArray) object;

List<String> batchMsgs = new ArrayList<>();

for (int i = 0; i < jsonArray.size(); i++) {

batchMsgs.add(jsonArray.getJSONObject(i).toString());

}

sendBatchMsgKafka(this.device.getBatchTopic(), batchMsgs, "null");

} catch (Exception e) {

log.error("sessionID:{} failed to process uplink JSON array", sessionId, e);

return Futures.immediateFailedFuture(e);

}

}

}

} else {

return Futures.immediateFailedFuture(new RuntimeException("Message is empty: no entity data"));

}

} catch (Exception e) {

log.error("[{}][{}] Can't process uplink msg [{}]", this.device.getId(), this.sessionId, uplinkMsg, e);

return Futures.immediateFailedFuture(e);

}

return Futures.allAsList(result);

}

private String increaseMsg(String firstKey, Object data, String protocolPrefix){

String firstKeySub = firstKey.substring(protocolPrefix.length(), firstKey.length());

Map<String,Object> newJsonObject = new HashMap<String,Object>();

newJsonObject.put(firstKeySub, data);

return JSON.toJSONString(newJsonObject);

}

private void sendBatchMsgKafka(String topicName, List<String> batchMsgs, String keyFlag) {

if (!CollectionUtils.isEmpty(batchMsgs)) {

batchMsgs.forEach(msg -> {

producerService.sendMessage(topicName, msg);

//log.info("keyFlag [{}] session ID {} grpc server sent collection data to kafka topic [{}] data [{}]", keyFlag, sessionId, topicName, msg);

});

}

}

private void sendDownlinkMsg(ResponseMsg downlinkMsg) {

//log.trace("[{}][{}] Sending downlink msg [{}]", this.client.getId(), this.sessionId, JSON.toJSONString(downlinkMsg));

if (isConnected()) {

downlinkMsgLock.lock();

try {

outputStream.onNext(downlinkMsg);

} catch (Exception e) {

log.error("[{}][{}] Failed to send downlink message [{}]", this.device.getId(), this.sessionId, downlinkMsg, e);

connected = false;

sessionCloseListener.accept(device.getId(), sessionId);

} finally {

downlinkMsgLock.unlock();

}

//log.trace("[{}][{}] Response msg successfully sent [{}]", this.client.getId(), this.sessionId, JSON.toJSONString(downlinkMsg));

}

}

private void onDownlinkResponse(GrpcContextComponent ctx, DownlinkResponseMsg msg) {

try {

if (msg.getSuccess()) {

sessionState.getPendingMsgsMap().remove(msg.getDownlinkMsgId());

log.debug("[{}][{}] Msg has been processed successfully! Msg Id: [{}], Msg: {}", this.device.getId(), this.sessionId, msg.getDownlinkMsgId(), msg);

} else {

log.error("[{}][{}] Msg processing failed! Msg Id: [{}], Error msg: {}", this.device.getId(), this.sessionId, msg.getDownlinkMsgId(), msg.getErrorMsg());

}

if (sessionState.getPendingMsgsMap().isEmpty()) {

log.debug("[{}][{}] Pending msgs map is empty. Stopping current iteration", this.device.getId(), this.sessionId);

stopCurrentSendDownlinkMsgsTask(false);

}

} catch (Exception e) {

log.error("[{}][{}] Can't process downlink response message [{}]", this.device.getId(), this.sessionId, msg, e);

}

}

private ListenableFuture<Pair<Long, Long>> startProcessingEdgeEvents(EdgeEventFetcher fetcher) {

// Edge-event paging (processEdgeEvents below) is disabled in this project, so complete the future right away.

// A SettableFuture that is never set would never fire the callback in doSync(), stalling the sync

// so that SyncCompletedMsg is never sent.

return Futures.immediateFuture(null);

}

/*private void processEdgeEvents(EdgeEventFetcher fetcher, PageLink pageLink, SettableFuture<Pair<Long, Long>> result) {

try {

PageData<EdgeEvent> pageData = fetcher.fetchEdgeEvents(edge.getTenantId(), edge, pageLink);

if (isConnected() && !pageData.getData().isEmpty()) {

log.trace("[{}][{}][{}] event(s) are going to be processed.", this.tenantId, this.sessionId, pageData.getData().size());

List<DownlinkMsg> downlinkMsgsPack = convertToDownlinkMsgsPack(pageData.getData());

Futures.addCallback(sendDownlinkMsgsPack(downlinkMsgsPack), new FutureCallback<>() {

@Override

public void onSuccess(@Nullable Boolean isInterrupted) {

if (Boolean.TRUE.equals(isInterrupted)) {

log.debug("[{}][{}][{}] Send downlink messages task was interrupted", tenantId, edge.getId(), sessionId);

result.set(null);

} else {

if (isConnected() && pageData.hasNext()) {

processEdgeEvents(fetcher, pageLink.nextPageLink(), result);

} else {

EdgeEvent latestEdgeEvent = pageData.getData().get(pageData.getData().size() - 1);

UUID idOffset = latestEdgeEvent.getUuidId();

if (idOffset != null) {

Long newStartTs = Uuids.unixTimestamp(idOffset);

long newStartSeqId = latestEdgeEvent.getSeqId();

result.set(Pair.of(newStartTs, newStartSeqId));

} else {

result.set(null);

}

}

}

}

@Override

public void onFailure(Throwable t) {

log.error("[{}] Failed to send downlink msgs pack", sessionId, t);

result.setException(t);

}

}, ctx.getGrpcCallbackExecutorService());

} else {

log.trace("[{}] no event(s) found. Stop processing edge events", this.sessionId);

result.set(null);

}

} catch (Exception e) {

log.error("[{}] Failed to fetch edge events", this.sessionId, e);

result.setException(e);

}

}*/

@Override

public void close() {

Long deviceId = device != null ? device.getId() : null; // device is null if the client never connected

log.debug("[{}][{}] Closing session", deviceId, sessionId);

connected = false;

try {

outputStream.onCompleted();

} catch (Exception e) {

log.debug("[{}][{}] Failed to close output stream: {}", deviceId, sessionId, e.getMessage());

}

}

private void interruptPreviousSendDownlinkMsgsTask() {

if ((sessionState.getSendDownlinkMsgsFuture() != null && !sessionState.getSendDownlinkMsgsFuture().isDone())

|| (sessionState.getScheduledSendDownlinkTask() != null && !sessionState.getScheduledSendDownlinkTask().isCancelled())) {

log.debug("[{}][{}] Previous send downlink future was not properly completed, stopping it now!", this.device.getId(), this.sessionId);

stopCurrentSendDownlinkMsgsTask(true);

}

}

private void interruptGeneralProcessingOnSync() {

log.debug("[{}][{}] Sync process started. General processing interrupted!", device.getId(), sessionId);

stopCurrentSendDownlinkMsgsTask(true);

}

public void stopCurrentSendDownlinkMsgsTask(Boolean isInterrupted) {

if (sessionState.getSendDownlinkMsgsFuture() != null && !sessionState.getSendDownlinkMsgsFuture().isDone()) {

sessionState.getSendDownlinkMsgsFuture().set(isInterrupted);

}

if (sessionState.getScheduledSendDownlinkTask() != null) {

sessionState.getScheduledSendDownlinkTask().cancel(true);

}

}

}
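`scheduleDownlinkMsgsPackSend` and `onDownlinkResponse` together give at-least-once delivery for a downlink pack: each message sits in a pending map keyed by `downlinkMsgId`, every attempt resends whatever is still pending, a successful `DownlinkResponseMsg` removes the entry, and after `MAX_DOWNLINK_ATTEMPTS` sends the rest is given up. A simplified single-threaded model of that loop, without gRPC or the scheduler (`PendingBatch` and its methods are hypothetical names for illustration):

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the pending-map retry loop in GrpcSession.
class PendingBatch {
    private final Map<Integer, String> pending = new LinkedHashMap<>(); // downlinkMsgId -> payload
    private final int maxAttempts;
    private int attempt;

    PendingBatch(Map<Integer, String> msgs, int maxAttempts) {
        this.pending.putAll(msgs);
        this.maxAttempts = maxAttempts;
    }

    // Returns the messages to (re)send on the next attempt, or an empty list when
    // everything was acked or the attempt budget is used up (remaining messages are dropped).
    List<String> nextAttempt() {
        if (pending.isEmpty() || attempt >= maxAttempts) {
            pending.clear();
            return List.of();
        }
        attempt++;
        return new ArrayList<>(pending.values());
    }

    // Called for each successful response; a failed response keeps the entry for the next attempt.
    void ack(int downlinkMsgId) {
        pending.remove(downlinkMsgId);
    }

    boolean isDone() { return pending.isEmpty(); }

    int attempts() { return attempt; }
}
```

In the real class each `nextAttempt()` roughly corresponds to one run of `sendDownlinkMsgsTask`, spaced `getSleepIntervalBetweenBatches()` milliseconds apart, and `ack` corresponds to the `msg.getSuccess()` branch of `onDownlinkResponse`.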

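`processUplinkMsg` routes each entity by the first key of its JSON object: keys starting with `PROTOCOL_NODB_DATA_` go to the device's monitor topic, each element re-wrapped by `increaseMsg` under the key with the prefix stripped, while other keys go to the batch topic with the element unchanged; a JSON array value becomes one Kafka message per element. The following JDK-only sketch reproduces that decision, assuming the JSON has already been parsed into `Map`/`List` values (the project uses fastjson for this step); `UplinkRouter` and `Routed` are hypothetical names, and the sketch returns payloads instead of sending them to Kafka.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

class UplinkRouter {
    static final String NODB_PREFIX = "PROTOCOL_NODB_DATA_";

    // Hypothetical value type: the target topic and the payloads to publish there.
    static final class Routed {
        final String topic;
        final List<Object> payloads;

        Routed(String topic, List<Object> payloads) {
            this.topic = topic;
            this.payloads = payloads;
        }
    }

    // firstKey/value: the first entry of the uplink JSON object, already parsed into Map/List.
    static Routed route(String firstKey, Object value, String monitorTopic, String batchTopic) {
        boolean monitorData = firstKey.startsWith(NODB_PREFIX);
        String topic = monitorData ? monitorTopic : batchTopic;
        // An array value becomes one message per element; any other value becomes one message.
        List<?> elements = value instanceof List ? (List<?>) value : Collections.singletonList(value);
        List<Object> payloads = new ArrayList<>();
        for (Object element : elements) {
            // Monitor data is re-wrapped as {"<key without prefix>": element}, like increaseMsg().
            payloads.add(monitorData
                    ? Collections.singletonMap(firstKey.substring(NODB_PREFIX.length()), element)
                    : element);
        }
        return new Routed(topic, payloads);
    }
}
```

Unlike the listing, which silently skips a first-key value that is neither a JSON object nor an array, this sketch treats any non-list value as a single message.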
Source code download link:

Download the source code
