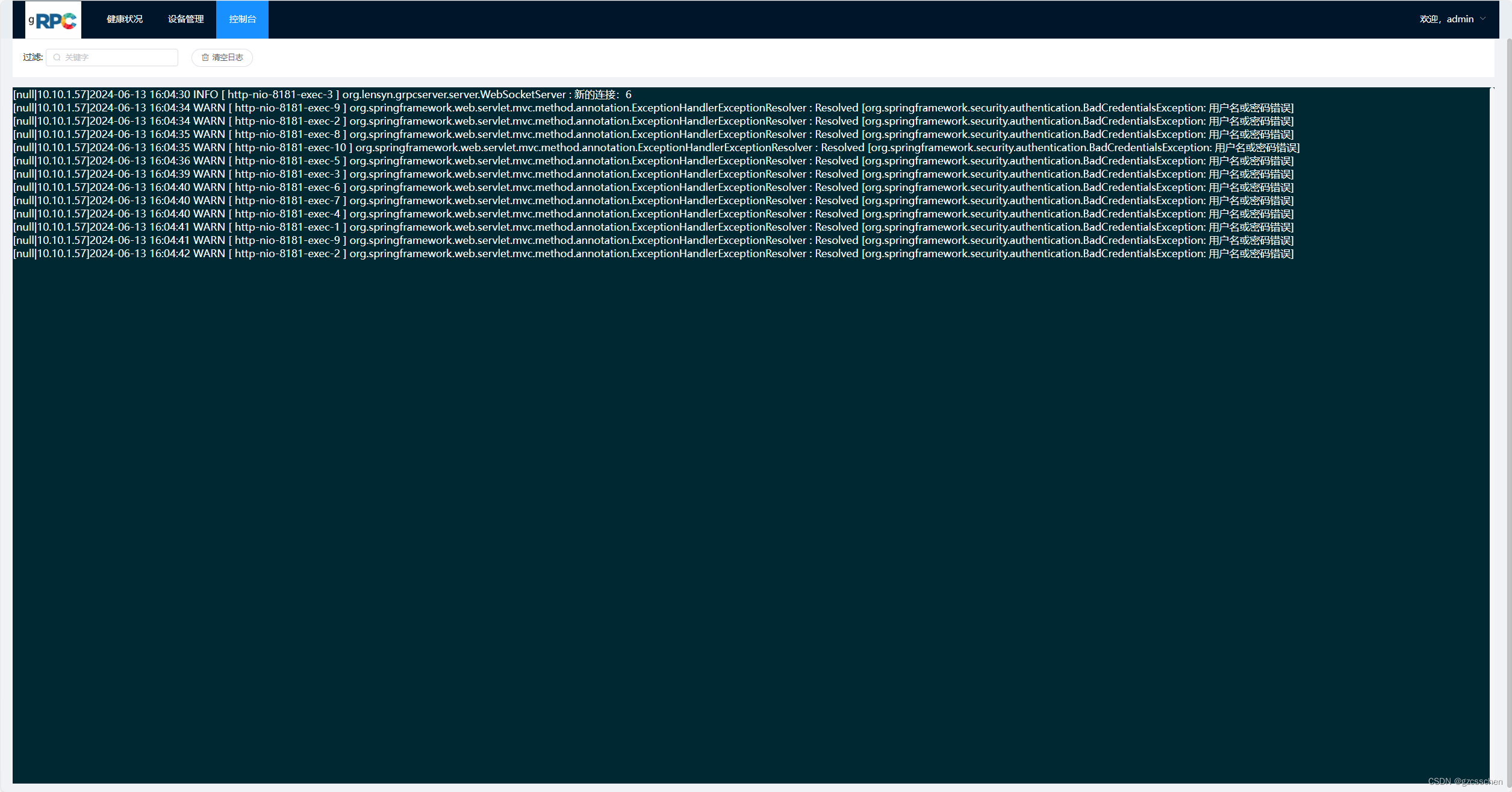

实现效果展示

Approach

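The service may be deployed on several nodes, and the front-end page has to show the logs of all of them. So each node sends its logs to Kafka. The Kafka consumer then pushes them to the page over WebSocket, where they are displayed as a scrolling stream.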
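The following is a stdlib-only simulation of that data flow, intended only to illustrate the shape of the pipeline. It assumes a `LinkedBlockingQueue` can stand in for the Kafka topic and a plain `List` can stand in for the page that receives WebSocket pushes. The class and method names are hypothetical. Several producer threads ("nodes") publish tagged lines, and one consumer collects all of them into a single stream.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical simulation: the queue plays the Kafka topic, the list plays the page.
class LogAggregationSimulation {

    static List<String> run(int nodes, int linesPerNode) {
        BlockingQueue<String> topic = new LinkedBlockingQueue<>();
        // Each "node" runs on its own thread and publishes lines tagged with its name.
        for (int n = 0; n < nodes; n++) {
            String node = "node-" + n;
            new Thread(() -> {
                for (int i = 0; i < linesPerNode; i++) {
                    topic.add("[" + node + "] line " + i);
                }
            }).start();
        }
        // A single consumer takes every line from the shared topic and "pushes" it to the page.
        List<String> page = new ArrayList<>();
        try {
            for (int i = 0; i < nodes * linesPerNode; i++) {
                page.add(topic.take()); // blocks until some node has published a line
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while consuming", e);
        }
        return page;
    }
}
```

Calling `run(3, 5)` yields 15 lines. The interleaving across nodes varies between runs, but each node's own lines keep their order, because a single thread's additions to a FIFO queue are delivered in sequence.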

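For a monolithic (single-node) application, the appender's append method can push the log data straight to the WebSocket. There is then no need to aggregate it through Kafka.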
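In the single-node case, the appender would call a broadcaster directly. Below is a minimal stdlib sketch of such a broadcaster, assuming each WebSocket session can be modelled as a `Consumer<String>`. A real version would wrap `Session.getBasicRemote().sendText(...)`. `LogBroadcaster` and its methods are hypothetical names, not part of the original project.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

// Hypothetical stand-in for WebSocketServer.SESSIONS when no Kafka hop is used.
class LogBroadcaster {
    // session id -> sink that delivers one text frame to that page
    private final Map<String, Consumer<String>> sessions = new ConcurrentHashMap<>();

    void register(String id, Consumer<String> sink) {
        sessions.put(id, sink);
    }

    int sessionCount() {
        return sessions.size();
    }

    // Sends one log line to every session. A session that throws is dropped,
    // so one dead page cannot stop delivery to the others.
    // Removing entries during ConcurrentHashMap.forEach is safe.
    void broadcast(String line) {
        sessions.forEach((id, sink) -> {
            try {
                sink.accept(line);
            } catch (RuntimeException e) {
                sessions.remove(id);
            }
        });
    }
}
```

One design point: if `broadcast` is called from `append`, it runs on the thread that logged the event. A slow client therefore slows down logging, which is one more reason the Kafka hop can be useful even on a single node.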

Implementation

Appender code

```java
package org.lensyn.grpcserver.log;

import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.core.Layout;
import ch.qos.logback.core.UnsynchronizedAppenderBase;
import lombok.Getter;
import lombok.Setter;
import org.lensyn.grpcserver.common.util.DateTimeUtils;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.util.concurrent.ListenableFuture;

import java.net.InetAddress;
import java.net.UnknownHostException;

/**
 * Logback appender that forwards log events to the Kafka topic "test-topic".
 * It is created and registered by hand in KafkaConsumer, not by Spring,
 * so the KafkaTemplate is passed in through the Lombok-generated setter.
 */
@Getter
@Setter
public class MyLogbackAppender extends UnsynchronizedAppenderBase<ILoggingEvent> {

    private static final String TOPIC = "test-topic";
    private static final String TIME_PATTERN = "yyyy-MM-dd HH:mm:ss";

    private Layout<ILoggingEvent> layout;
    private InetAddress addr;
    private String grpcServerName;
    private KafkaTemplate<String, String> kafkaTemplate;

    // Custom configuration property (not used by this version)
    private String printString;

    @Override
    public void start() {
        // Sanity checks go here, e.g. warn when no layout is configured
        if (layout == null) {
            addWarn("Layout was not defined");
        }
        // Initialise resources (DB or Redis connections, etc.) before marking the appender started
        try {
            addr = InetAddress.getLocalHost();
        } catch (UnknownHostException e) {
            addError("Cannot resolve the local host address", e);
            return; // stay stopped rather than crash the application
        }
        grpcServerName = System.getenv("GRPC_SERVER_NAME");
        super.start();
    }

    @Override
    public void stop() {
        // Release resources here (DB connections, Redis thread pools, ...)
        System.out.println("logback stop() called");
        if (!isStarted()) {
            return;
        }
        super.stop();
    }

    @Override
    protected void append(ILoggingEvent event) {
        if (event == null || !isStarted() || kafkaTemplate == null) {
            return;
        }
        String loggerName = event.getLoggerName();
        // Skip Kafka's own loggers and this appender: sending to Kafka emits log events,
        // which would otherwise be sent to Kafka again in an endless loop
        if (loggerName.contains("kafka") || loggerName.contains("MyLogbackAppender")) {
            return;
        }
        // Raw message: event.getFormattedMessage(); layout-formatted: layout.doLayout(event)
        String prefix = "[" + grpcServerName + "|" + addr.getHostAddress() + "]"
                + DateTimeUtils.convertTimestamp2Date(event.getTimeStamp(), TIME_PATTERN)
                + " " + event.getLevel().levelStr
                + " [ " + event.getThreadName() + " ] " + loggerName;

        // Spring Kafka 2.x returns ListenableFuture; 3.x returns CompletableFuture (use whenComplete there)
        ListenableFuture<SendResult<String, String>> future =
                kafkaTemplate.send(TOPIC, prefix + " : " + event.getFormattedMessage());
        future.addCallback(
                success -> { /* delivered */ },
                // Report via logback's status system, not a logger, to avoid feeding this appender again
                failure -> addError("Failed to send log event to Kafka", failure));
    }
}
```

Key points

Extend `UnsynchronizedAppenderBase<ILoggingEvent>` and implement the `append` method. Logback calls it for every log event routed to the appender.
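The two decisions `append` makes can be tested without Logback or Kafka. The first is which events to skip, and the second is how the line is formatted. Below is a stdlib sketch of both. It assumes `java.time` in place of the project's `DateTimeUtils`, and the class name `LogLineFormat` is hypothetical. The substring guard is broad: any logger whose name contains "kafka" is never shipped, including `org.lensyn.grpcserver.kafka.KafkaConsumer` itself.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Hypothetical mirror of MyLogbackAppender's filtering and formatting rules.
class LogLineFormat {
    private static final DateTimeFormatter TS = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // True for Kafka client loggers and the appender itself: shipping their
    // events would make each Kafka send trigger another send.
    static boolean shouldSkip(String loggerName) {
        return loggerName.contains("kafka") || loggerName.contains("MyLogbackAppender");
    }

    // Builds "[server|ip]timestamp LEVEL [ thread ] logger : message".
    static String format(String server, String ip, long epochMillis, ZoneId zone,
                         String level, String thread, String logger, String message) {
        String ts = TS.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
        return "[" + server + "|" + ip + "]" + ts + " " + level
                + " [ " + thread + " ] " + logger + " : " + message;
    }
}
```

For example, `format("grpc-1", "10.0.0.5", 0L, ZoneOffset.UTC, "INFO", "main", "com.example.Foo", "started")` produces `[grpc-1|10.0.0.5]1970-01-01 00:00:00 INFO [ main ] com.example.Foo : started`.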

Kafka consumer: starting the listener and registering the custom appender

```java
package org.lensyn.grpcserver.kafka;

import ch.qos.logback.classic.Logger;
import ch.qos.logback.classic.LoggerContext;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.lensyn.grpcserver.log.MyLogbackAppender;
import org.lensyn.grpcserver.server.WebSocketServer;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.context.event.ApplicationReadyEvent;
import org.springframework.context.event.EventListener;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.config.KafkaListenerEndpointRegistry;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.listener.MessageListenerContainer;
import org.springframework.stereotype.Component;

import java.io.IOException;

@Component
public class KafkaConsumer {

    @Value("${spring.kafka.consumer.auto-startup}")
    private boolean autoStartup;

    @Autowired
    private KafkaListenerEndpointRegistry kafkaListenerEndpointRegistry;

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    // Not started automatically; initialLogConsumer() starts it when autoStartup is true.
    // All nodes share groupId "test-group": if several nodes enable this listener,
    // Kafka splits the partitions among them and each record reaches only one node.
    @KafkaListener(id = "logConsumer", topics = "test-topic", groupId = "test-group", autoStartup = "false")
    public void onMessage(ConsumerRecord<String, String> record) {
        String msg = record.value();
        // Push the line to every connected page; one broken session must not stop the rest
        WebSocketServer.SESSIONS.forEach((id, session) -> {
            if (!session.isOpen()) {
                return;
            }
            try {
                session.getBasicRemote().sendText(msg);
            } catch (IOException e) {
                // The client has probably disconnected; skip it and keep serving the others
            }
        });
    }

    /**
     * Starts the Kafka log consumer if enabled in configuration,
     * then registers the custom appender on the root logger.
     */
    @EventListener(ApplicationReadyEvent.class)
    public void initialLogConsumer() {
        if (autoStartup) {
            MessageListenerContainer container = kafkaListenerEndpointRegistry.getListenerContainer("logConsumer");
            // Start the listener container if it is not running yet
            if (!container.isRunning()) {
                container.start();
            }
            // Resume it in case it was paused
            container.resume();
        }

        // Register the custom appender
        LoggerContext lc = (LoggerContext) LoggerFactory.getILoggerFactory();
        // Use the root logger: loggers are hierarchical, so descendant loggers
        // inherit its appenders unless their additivity is switched off
        Logger rootLogger = lc.getLogger(org.slf4j.Logger.ROOT_LOGGER_NAME);
        MyLogbackAppender myAppender = new MyLogbackAppender();
        myAppender.setContext(lc);
        myAppender.setName("MyLogbackAppender");
        // Set the template before start() so append() never runs without it
        myAppender.setKafkaTemplate(kafkaTemplate);
        myAppender.start();
        rootLogger.addAppender(myAppender);
    }
}
```
