Purified and Unified Steganographic Network

School of Computer Science, Fudan University
{gbli20, lisheng, zcluo21, zxqian, zhangxinpeng}@fudan.edu.cn
Equal contribution. Corresponding authors.

Abstract

Steganography is the art of hiding secret data in cover media for covert communication. In recent years, an increasing number of deep neural network (DNN)-based steganographic schemes have been proposed that train steganographic networks for secret embedding and recovery, with promising results. Compared with handcrafted steganographic tools, steganographic networks tend to be large. This raises the question of how to transmit these networks to the sender and receiver imperceptibly and effectively to facilitate covert communication. To address this issue, we propose in this paper a Purified and Unified Steganographic Network (PUSNet). It performs an ordinary machine learning task as a purified network, which can be triggered into steganographic networks for secret embedding or recovery using different keys. We formulate the construction of the PUSNet as a sparse weight filling problem so that it can flexibly switch between the purified and steganographic networks. We further instantiate our PUSNet as an image denoising network with two steganographic networks concealed for secret image embedding and recovery. Comprehensive experiments demonstrate that our PUSNet achieves good performance on secret image embedding, secret image recovery, and image denoising within a single architecture, and that it can imperceptibly carry the steganographic networks in a purified network. Code is available at https://github.com/albblgb/PUSNet

1 Introduction

Steganography aims to conceal secret data into a cover media, e.g., image[15], video [23] or text [21], which is one of the main techniques for covert communication through public channels. To conceal the presence of the covert communication, the stego media (i.e., the media with hidden data) is required to be indistinguishable from the cover media. Early steganographic approaches

隐写术旨在将秘密数据隐藏到封面媒体中, 例如图像 [15]、视频或文本,这是通过公共渠道进行秘密交流的主要技术之一。为了隐藏隐蔽通信的存在,stego 介质(即具有隐藏数据的介质)必须与覆盖介质无法区分。早期隐写方法[5, 32, 35]

隐写术旨在将秘密数据隐藏到覆盖媒体中, 例如图像 [15]、视频 [23] 或文本 [21],这是通过公共渠道进行秘密交流的主要技术之一。为了隐藏隐蔽通信的存在,stego 介质(即具有隐藏数据的介质)必须与覆盖介质无法区分。早期隐写法 [5, 32, 35]

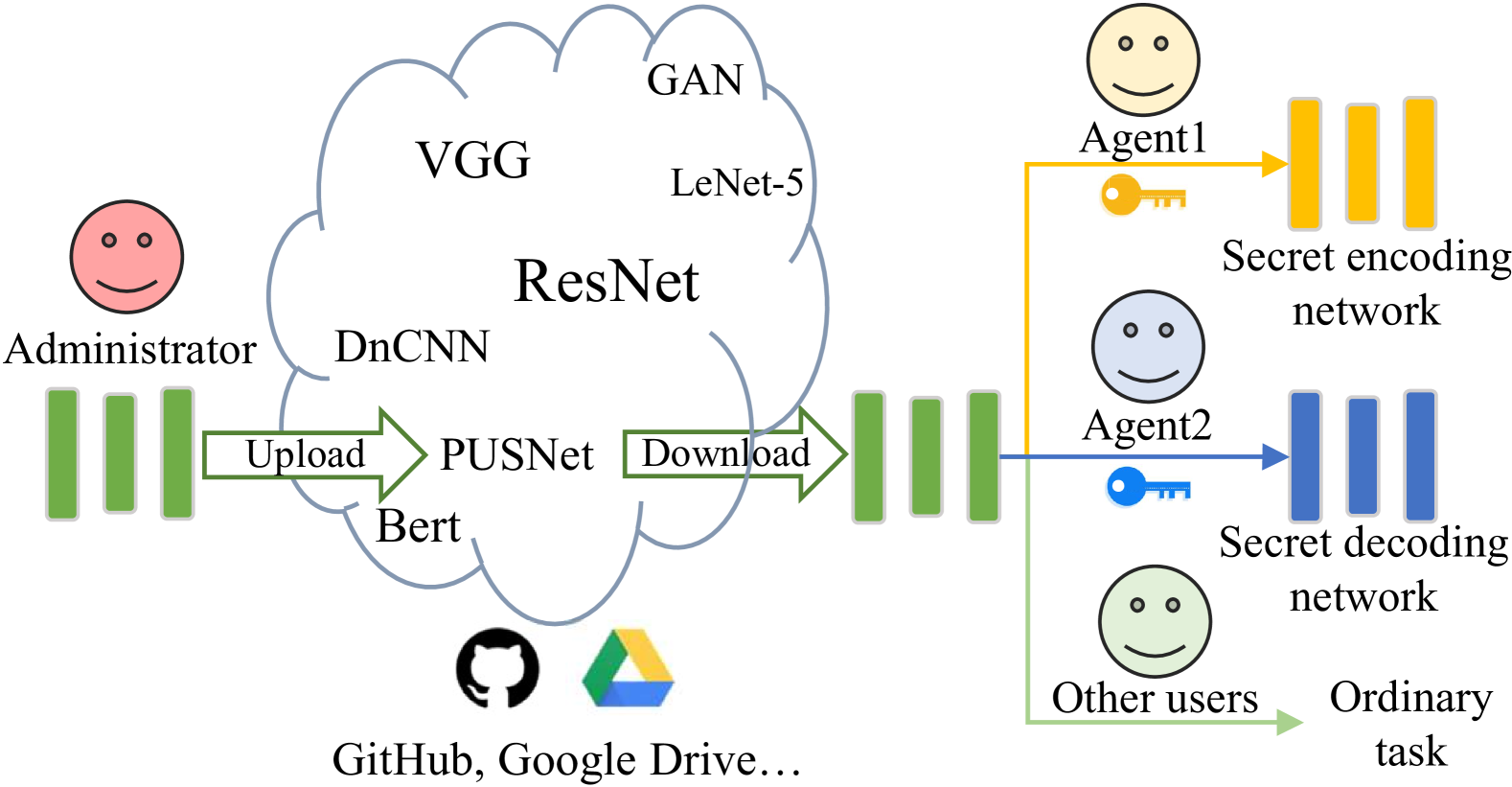

Figure 1:Administrator covertly transmits the secret steganographic networks to agents using the proposed PUSNet.

图 1: 管理员使用建议的 PUSNet 将秘密隐写网络秘密传输给代理。

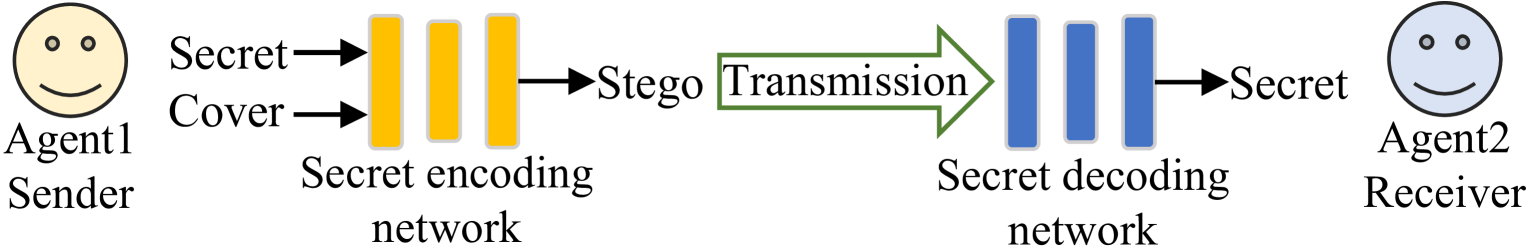

Figure 2:The agents (i.e., sender and receiver) perform the covert communication task using the received steganographic networks.

图 2: 代理(即发送者和接收者)使用接收到的隐写网络执行隐蔽通信任务。

are conducted under a handcrafted and adaptive coding strategy to minimize the distortion caused by data embedding.

在手工制作的自适应编码策略下进行,以尽量减少数据嵌入造成的失真。

In recent years, an increasing number of deep neural network (DNN)-based steganographic schemes [38, 3, 34, 14, 31] have been proposed to improve steganographic performance.

A DNN-based steganographic scheme usually contains two main components: a secret encoding (embedding) network and a secret decoding (recovery) network. The encoding network takes the cover media and the secret data as inputs and generates the stego media, while the decoding network retrieves the secrets from the stego media. The two networks are learnt jointly to optimize steganographic performance and have been shown to outperform handcrafted steganographic tools.

Regardless of the steganographic scheme, the steganographic tools must be transmitted to the sender and receiver for secret embedding and recovery. This is not a trivial problem, especially for DNN-based schemes, which significantly increase the size of the steganographic tools. A typical encoding or decoding network occupies over 100 MB of storage, far more than a handcrafted steganographic tool. This raises the question of how to covertly and effectively transmit DNN-based steganographic tools to the sender and receiver for covert communication.

A promising solution to this issue is DNN model steganography, which can embed a secret DNN model into a benign DNN model without being noticed. Research on DNN model steganography is still in its infancy. Salem et al. [26] propose to establish a single DNN for both ordinary and secret tasks using multi-task learning. This scheme cannot prevent unauthorized recovery of the secret DNN model from the stego DNN model (i.e., the model with a hidden secret DNN model): anyone who can access the stego DNN model is able to perform both the ordinary and secret tasks. To deal with this issue, Li et al. [18] propose to embed a steganographic network into a benign DNN model according to some side information to form a stego DNN model. Only authorized people who own the side information can restore the steganographic network from the stego DNN model. Unfortunately, this scheme is tailored to concealing a secret decoding network, and it remains unclear how a secret encoding network could be imperceptibly and securely embedded into a benign DNN model. Moreover, it requires transmitting the side information to the receiver to recover the secret decoding network, which is inconvenient in real-world applications.

In this paper, we tackle the problem of DNN model steganography with a Purified and Unified Steganographic Network (PUSNet). As shown in Fig. 1, our PUSNet is a purified network (i.e., a benign DNN model) that performs an ordinary machine learning task and can be uploaded by the administrator to a public DNN model repository. Agents (i.e., the sender or receiver) can download the PUSNet and trigger it into a secret encoding or decoding network using the keys they possess, with a different key for each network. Other users (those without a key) can also download the PUSNet for the ordinary machine learning task. The sender and receiver then carry out covert communication using the restored secret steganographic networks, as depicted in Fig. 2. Our PUSNet thus imperceptibly conceals the secret encoding and decoding networks in a purified network, so there is no need for secure and complicated ways of sharing the steganographic networks between the administrator and the agents.

To flexibly switch the function of the PUSNet between an ordinary machine learning task and the secret embedding or recovery task, we formulate the construction of the PUSNet as a sparse weight filling problem. In particular, we regard the purified network as a sparse network and the steganographic networks as its dense versions. A key is used to generate a set of weights that fill the sparse weights in the purified network, triggering a secret encoding or decoding network. As an instantiation, we design a sparse image denoising network as the purified network that conceals two steganographic networks: a secret image encoding network and a secret image decoding network. Extensive experiments demonstrate the advantages of our PUSNet for steganographic tasks. Our main contributions are summarized below.

- 1) We propose a PUSNet that is able to conceal both the secret encoding and decoding networks in a single purified network.
- 2) We design a novel key-based sparse weight filling strategy to construct the PUSNet, which effectively prevents unauthorized recovery of the steganographic networks without using side information.
- 3) We instantiate our PUSNet as a sparse image denoising network with two steganographic networks concealed for secret image embedding and recovery, which demonstrates the ability of our PUSNet to covertly transmit steganographic networks.

2 Related Works

DNN-based Steganography. Most existing DNN-based steganographic schemes rely on an encoder-decoder structure for data embedding and extraction. Hayes et al. [10] pioneer this line of research, in which the secrets are embedded into a cover image or extracted from a stego-image using an end-to-end learnable DNN (i.e., a secret encoding or decoding network). Zhu et al. [38] insert adaptive noise layers between the secret encoding and decoding networks to improve robustness. Baluja et al. [2, 3] propose to embed a secret color image into another color image for large-capacity data embedding, where an extra network converts the secret image into feature maps before data embedding. Zhang et al. [34] propose a universal network that transforms the secret image into imperceptible high-frequency components, which can be directly combined with any cover image to form a stego-image. Researchers have also devoted efforts to the design of invertible steganographic networks [20, 14, 31, 9]. Jing et al. propose HiNet [14, 9] to conceal the secrets in the discrete wavelet transform domain of a cover image using invertible neural networks (INN). Lu et al. [20] increase the number of channels in the secret branch of the INN to improve capacity. Xu et al. [31] introduce a conditional normalizing flow to maintain the distribution of the high-frequency component of the secret image.

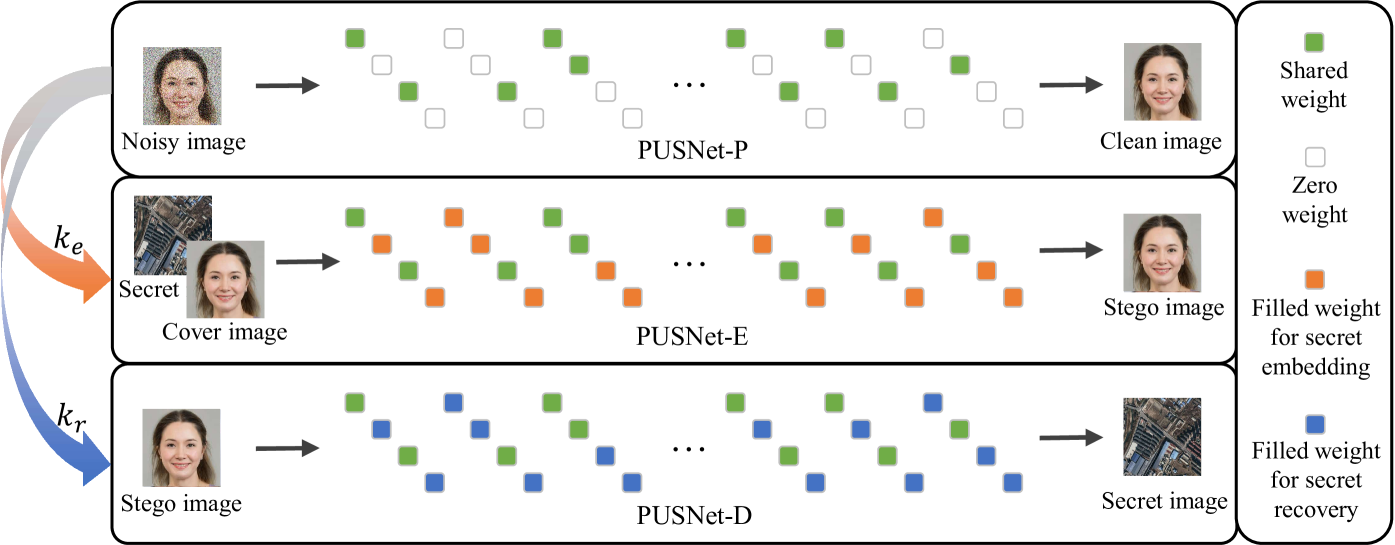

Figure 3: An overview of our proposed method.

DNN Model Steganography. DNN model steganography aims to imperceptibly conceal a secret DNN model in a benign DNN model. The secret DNN models perform secret machine learning tasks and must be transmitted covertly, while the benign/stego DNN models are released to the public for ordinary machine learning tasks. A few attempts at DNN model steganography have been made in the literature. A straightforward strategy is to use multi-task learning to train a single stego DNN model for both the ordinary and secret tasks [26]. Such a strategy cannot prevent unauthorized model extraction, because anyone can use the stego DNN model for either the ordinary or the secret task. Li et al. [18] pioneer the embedding of a steganographic network into a benign DNN model with the capability of preventing unauthorized model recovery. In this scheme, a subset of the neurons in the benign DNN model is carefully selected and replaced with those of the secret decoding network, while the remaining neurons are learnt to construct a stego DNN model applicable to an ordinary machine learning task. The locations of the secret decoding network's neurons in the stego DNN model are recorded as side information for model recovery. This scheme is tailored to embedding a secret decoding network and is difficult to adopt for the covert communication of secret encoding networks. Besides, relying on side information makes model recovery inconvenient in real-world applications.

3 The Proposed Method

In this section, we detail how our PUSNet is established. We first formulate the construction of the PUSNet as a sparse weight filling problem. Then, we introduce the loss function and training strategy for optimizing the PUSNet. Finally, we present the architecture used to instantiate our PUSNet.

3.1 Sparse Weight Filling

Our PUSNet can operate in three modes, serving an ordinary machine learning task, a secret embedding task, and a secret recovery task. In the following, we denote our PUSNet as PUSNet-P, PUSNet-E, and PUSNet-D when it works as the purified network, the secret encoding network, and the secret decoding network, respectively.

Fig. 3 gives an overview of how the PUSNet works in the different modes. In particular, PUSNet-P is a sparse network, and PUSNet-E and PUSNet-D are its dense versions. To switch the purified network to a steganographic network, we fill the sparse weights in PUSNet-P (i.e., the positions zeroed out by the mask) with new weights generated from a key.

We denote PUSNet-P as $\mathbb{N}[\mathbf{W}\odot\mathbf{M}](\cdot)$, where $\mathbb{N}[\cdot]$ and $\mathbf{W}$ denote the architecture and weights of the network, respectively, $\odot$ represents the element-wise product, and $\mathbf{M}$ is a binary mask of the same size as $\mathbf{W}$. We take image denoising as the ordinary machine learning task of PUSNet-P. Given a noisy image $\mathbf{x}_{no}$ and its clean version $\mathbf{x}_{cl}$, PUSNet-P is formulated as

$$\mathbb{N}[\mathbf{W}\odot\mathbf{M}](\mathbf{x}_{no}) \rightarrow \mathbf{x}_{cl}. \qquad (1)$$

To switch PUSNet-P into PUSNet-E, the sender fills the sparse weights in PUSNet-P as follows:

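$$\mathbb{N}[\mathbf{W}\odot\mathbf{M} + \mathbf{W}_e\odot\bar{\mathbf{M}}](\mathbf{x}_{co}, \mathbf{x}_{se}) \rightarrow \mathbf{x}_{st}, \qquad (2)$$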

where $\bar{\mathbf{M}}$ is the binary complement of $\mathbf{M}$; $\mathbf{x}_{co}$, $\mathbf{x}_{se}$, and $\mathbf{x}_{st}$ denote the cover, secret, and stego-image, respectively; and $\mathbf{W}_e$ is a set of random weights initialized by

$$\mathbf{W}_e = \mathcal{I}(\mathbb{N}[\cdot], k_e), \qquad (3)$$

where $\mathcal{I}(\cdot)$ is a seed-based (i.e., key-based) weight initialization algorithm and $k_e$ is the key that triggers the secret encoding network. In our implementation, we use the Xavier algorithm [8] to initialize the filling weights.
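
A minimal sketch of Eq. (3) follows. It assumes that $\mathcal{I}$ seeds a pseudo-random generator with the key and then draws Xavier (Glorot) uniform weights for each Conv kernel. The helper name `xavier_uniform_from_key`, the kernel shape, and the integer keys are hypothetical. In PyTorch one would plausibly call `torch.manual_seed(key)` followed by `torch.nn.init.xavier_uniform_`, but the exact seeding scheme is not stated here.

```python
import math
import random


def xavier_uniform_from_key(shape, key):
    """Key-seeded Xavier (Glorot) uniform weights for one Conv kernel (hypothetical helper).

    shape: (out_channels, in_channels, kernel_h, kernel_w); key: integer seed.
    Returns a flat list of out_channels * in_channels * kernel_h * kernel_w values.
    """
    out_ch, in_ch, kh, kw = shape
    receptive = kh * kw
    fan_in, fan_out = in_ch * receptive, out_ch * receptive
    bound = math.sqrt(6.0 / (fan_in + fan_out))  # Glorot uniform limit
    rng = random.Random(key)                     # the key acts as the PRNG seed
    return [rng.uniform(-bound, bound) for _ in range(out_ch * in_ch * receptive)]


k_e, k_r = 1234, 5678                            # hypothetical keys
W_e = xavier_uniform_from_key((4, 3, 3, 3), k_e)
W_e_again = xavier_uniform_from_key((4, 3, 3, 3), k_e)
W_r = xavier_uniform_from_key((4, 3, 3, 3), k_r)
```

The key fully determines the pseudo-random stream. The same key therefore always regenerates identical filling weights, while a different key yields an unrelated set.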

Likewise, the receiver obtains PUSNet-D by filling the sparse weights in PUSNet-P as follows:

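$$\mathbb{N}[\mathbf{W}\odot\mathbf{M} + \mathbf{W}_r\odot\bar{\mathbf{M}}](\mathbf{x}_{st}) \rightarrow \mathbf{x}_{se}, \qquad (4)$$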

where $\mathbf{W}_r$ is a set of random weights initialized by

$$\mathbf{W}_r = \mathcal{I}(\mathbb{N}[\cdot], k_r), \qquad (5)$$

where $k_r$ is the key that triggers the secret decoding network.
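
The three modes in Eqs. (1), (2), and (4) differ only in the values written into the positions where $\mathbf{M}$ is zero. The sketch below illustrates this on a flat list of weights. The function names, the toy weights and mask, and the uniform stand-in for Xavier initialization are illustrative assumptions, not the authors' code.

```python
import random


def purified(w, mask):
    """Eq. (1): the weights of PUSNet-P, i.e. W ⊙ M."""
    return [wi * m for wi, m in zip(w, mask)]


def trigger(w, mask, key, bound=0.1):
    """Eqs. (2)/(4): W ⊙ M + W_k ⊙ (1 - M), with W_k generated from `key`."""
    rng = random.Random(key)
    w_k = [rng.uniform(-bound, bound) for _ in w]  # stand-in for key-seeded Xavier init
    return [wi * m + fi * (1 - m) for wi, fi, m in zip(w, w_k, mask)]


W = [0.5, -0.2, 0.7, 0.8, -0.4, 0.1]  # hypothetical trained weights
M = [1, 1, 0, 1, 0, 0]                # hypothetical binary mask

w_p = purified(W, M)                  # released publicly: zeros where M == 0
w_e = trigger(w_p, M, key=1234)       # PUSNet-E with hypothetical key k_e
w_d = trigger(w_p, M, key=5678)       # PUSNet-D with hypothetical key k_r
```

Only the sparse weights $\mathbf{W}\odot\mathbf{M}$ are distributed. A holder of $k_e$ or $k_r$ regenerates the missing entries locally, which is why no side information needs to be transmitted.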

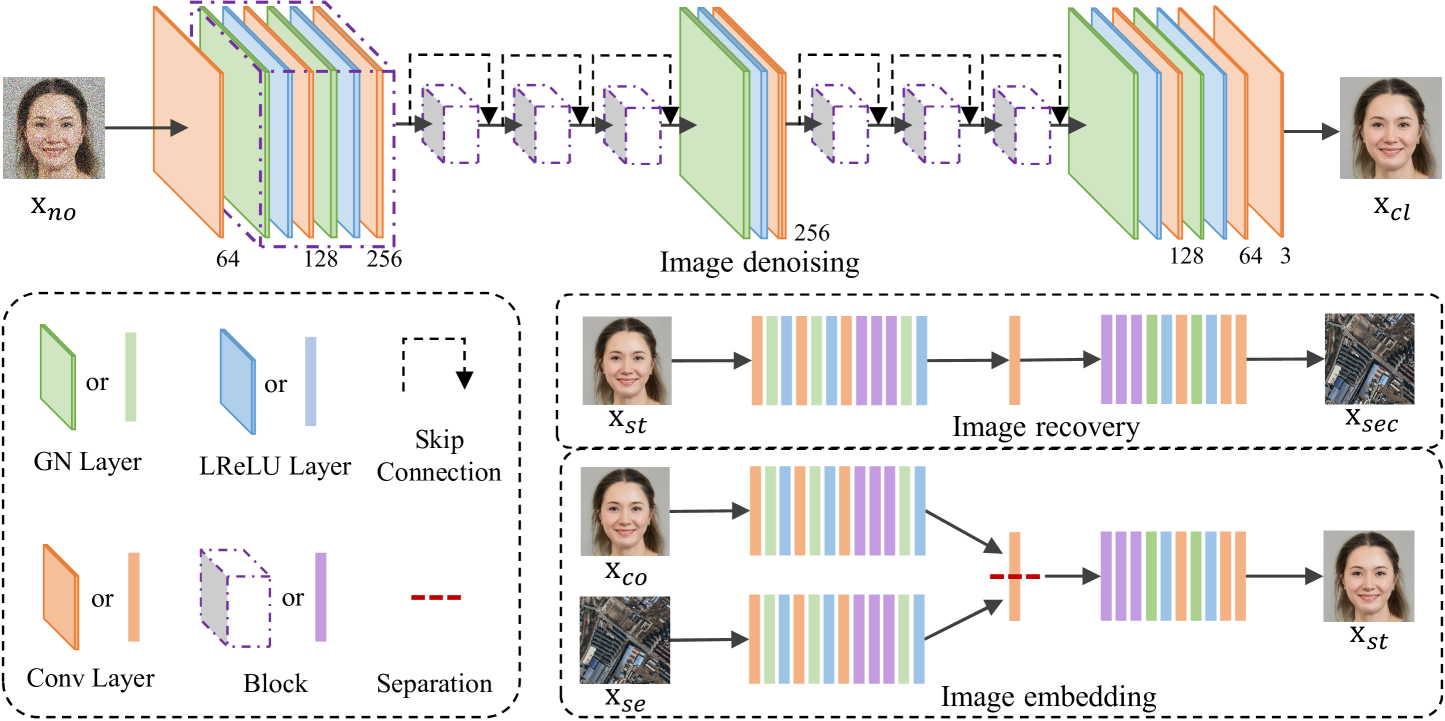

Figure 4: The architecture of the PUSNet.

3.2 Loss Function

To effectively train the PUSNet, we design three loss terms: an embedding loss, a recovery loss, and a purified loss. We explain each of them in detail below.

Embedding loss. The embedding loss trains PUSNet-E, which embeds a secret image $\mathbf{x}_{se}$ into a cover image $\mathbf{x}_{co}$ to generate a stego-image $\mathbf{x}_{st}$. The stego-image should be difficult to distinguish from the cover image. To this end, we compute the embedding loss as

$$\mathcal{L}_{emb} = \sum_{n=1}^{N} \ell_2(\mathbf{x}_{st}^{n}, \mathbf{x}_{co}^{n}), \qquad (6)$$

where $\mathbf{x}_{co}^{n}$ and $\mathbf{x}_{st}^{n}$ are the $n$-th cover image in the training set and its corresponding stego-image, $N$ is the number of training samples, and $\ell_2$ is the L2 norm used to measure the distortion between the cover and stego-image.

Recovery loss. The recovery loss trains PUSNet-D, which recovers the secret image $\mathbf{x}_{se}$ from the stego-image $\mathbf{x}_{st}$. The recovered secret image should be close to $\mathbf{x}_{se}$. Therefore, the recovery loss is given by

$$\mathcal{L}_{rec} = \sum_{n=1}^{N} \ell_2(\mathbf{x}_{sec}^{n}, \mathbf{x}_{se}^{n}), \qquad (7)$$

where $\mathbf{x}_{se}^{n}$ and $\mathbf{x}_{sec}^{n}$ refer to the $n$-th secret image in the training set and its recovered version.

Purified loss. The purified loss trains PUSNet-P, which performs image denoising to restore a clean image from a noisy one. Given a noisy input image $\mathbf{x}_{no}$, PUSNet-P is expected to output a restored image close to the clean version $\mathbf{x}_{cl}$. The purified (denoising) loss is given by

$$\mathcal{L}_{den} = \sum_{n=1}^{N} \ell_2(\mathbf{x}_{d}^{n}, \mathbf{x}_{cl}^{n}), \qquad (8)$$

where $\mathbf{x}_{d}^{n}$ and $\mathbf{x}_{cl}^{n}$ refer to the $n$-th restored image and the corresponding ground-truth clean image in the training set.
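
Eqs. (6) to (8) share the same form: a sum over training samples of the $\ell_2$ distance between an output and its target. A minimal sketch on flattened toy images follows. It reads $\ell_2$ as the Euclidean distance. Practical implementations often use a (mean) squared error instead, so the exact reduction is an assumption. The helper names and toy values are hypothetical.

```python
import math


def l2(a, b):
    """Euclidean (L2) distance between two flattened images."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def summed_l2(outputs, targets):
    """Sum over samples of l2(output_n, target_n), the common form of Eqs. (6)-(8)."""
    return sum(l2(o, t) for o, t in zip(outputs, targets))


# Toy batch of N = 2 flattened images (values are hypothetical).
x_co = [[0.0, 0.0], [1.0, 1.0]]   # cover images
x_st = [[3.0, 4.0], [1.0, 1.0]]   # stego-images produced by PUSNet-E
L_emb = summed_l2(x_st, x_co)     # Eq. (6): 5.0 + 0.0
# L_rec = summed_l2(x_sec, x_se) and L_den = summed_l2(x_d, x_cl) follow the same pattern.
```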

During training, we only update the weights of the sparse PUSNet-P (denoted as $\mathbf{W}_s$), which are shared among PUSNet-P, PUSNet-E, and PUSNet-D. The green nodes in Fig. 3 illustrate $\mathbf{W}_s$. In other words, only a subset of the weights in PUSNet (i.e., $\mathbf{W}_s$) is updated to optimize the performance of PUSNet-P, PUSNet-E, and PUSNet-D on their respective tasks. Let $\alpha$ be the learning rate; we update $\mathbf{W}_s$ by gradient descent:

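$$\mathbf{W}_s \leftarrow \mathbf{W}_s - \alpha\left(\lambda_e \nabla_{\mathbf{W}_s}\mathcal{L}_{emb} + \lambda_r \nabla_{\mathbf{W}_s}\mathcal{L}_{rec} + \lambda_d \nabla_{\mathbf{W}_s}\mathcal{L}_{den}\right), \qquad (9)$$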

where $\lambda_e$, $\lambda_r$, and $\lambda_d$ are hyper-parameters that balance the contributions of the gradients computed from PUSNet-E, PUSNet-D, and PUSNet-P, respectively.
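
The sketch below shows the masked update in Eq. (9). The three task gradients are weighted by $\lambda_e$, $\lambda_r$, and $\lambda_d$ and applied only where $\mathbf{M}=1$, so the key-generated filling weights are never changed. Plain gradient descent mirrors Eq. (9); the experiments use Adam instead. The function name and toy gradients are hypothetical. The λ values are the ones reported in Section 4.1.

```python
def masked_step(w, mask, grads, lambdas, lr):
    """One step of Eq. (9) on the shared weights W_s (positions where mask == 1).

    grads:   (grad_emb, grad_rec, grad_den), each aligned with `w`.
    lambdas: (lambda_e, lambda_r, lambda_d).
    Positions with mask == 0 hold key-generated filling weights and are not updated.
    """
    updated = []
    for i, (wi, m) in enumerate(zip(w, mask)):
        if m:
            g = sum(lam * grad[i] for lam, grad in zip(lambdas, grads))
            updated.append(wi - lr * g)
        else:
            updated.append(wi)
    return updated


w = [1.0, 2.0, 3.0]
mask = [1, 0, 1]
grads = ([1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [4.0, 4.0, 4.0])  # hypothetical gradients
new_w = masked_step(w, mask, grads, lambdas=(1.0, 0.75, 0.25), lr=0.1)
```

With these values, each masked position receives a combined gradient of 1.0·1 + 0.75·2 + 0.25·4 = 3.5. Each such position therefore moves by 0.35, while the middle entry stays at 2.0.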

3.3 Network Architecture

Similar to classic denoising DNN models [36, 22, 37], our PUSNet is built by stacking convolutional (Conv), normalization, and activation layers. Fig. 4 depicts the architecture of our PUSNet, which consists of 19 Conv layers. Each Conv layer except the first and the last is preceded by a group normalization (GN) [30] layer and a Leaky Rectified Linear Unit (LReLU) [11]. We adopt skip connections [12] from the fourth to the sixteenth Conv layers and, following the suggestion in [13], place them between the Conv and GN layers.
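
The layer ordering described above can be written down as a simple plan. This sketch records only the order of the GN, LReLU, and Conv layers for the 19 Conv layers. Channel widths, kernel sizes, GN group counts, and the skip connections between the fourth and sixteenth Conv layers are not given in this paragraph, so they are deliberately left out. The function name is hypothetical.

```python
def pusnet_layer_plan(num_convs=19):
    """Order of layers in the PUSNet backbone as described in the text.

    GN and LReLU precede every Conv layer except the first and the last one.
    Skip connections (Conv 4 to Conv 16) and layer hyper-parameters are omitted.
    """
    plan = []
    for i in range(1, num_convs + 1):
        if 1 < i < num_convs:
            plan += ["GN", "LReLU"]
        plan.append(f"Conv{i}")
    return plan


plan = pusnet_layer_plan()
print(plan[:4], plan[-3:])  # ['Conv1', 'GN', 'LReLU', 'Conv2'] ['LReLU', 'Conv18', 'Conv19']
```

In a PyTorch model, each entry would map to `nn.GroupNorm`, `nn.LeakyReLU`, or `nn.Conv2d`, with the hyper-parameters taken from Fig. 4.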

Taking a single image as input, the above architecture outputs a predicted image of the same size, which suits the image denoising and secret image recovery tasks. Since secret image embedding requires two input images (i.e., $\mathbf{x}_{co}$ and $\mathbf{x}_{se}$), we propose the following adaptive strategy to make the PUSNet suitable for secret encoding. The basic idea is to duplicate the first half of the network into two identical sub-networks that process $\mathbf{x}_{co}$ and $\mathbf{x}_{se}$ separately, yielding two feature maps from the cover and secret images. These two feature maps are then concatenated and fed into the second half of the network to generate the stego-image $\mathbf{x}_{st}$, as shown in the lower part of Fig. 4. In our implementation, we use the layers before the tenth Conv layer of the PUSNet to extract the two feature maps from $\mathbf{x}_{co}$ and $\mathbf{x}_{se}$. We then split the filters of the tenth Conv layer into two halves, each of which convolves the features of either $\mathbf{x}_{co}$ or $\mathbf{x}_{se}$. As a result, the convolved features can be directly concatenated and fed into the rest of the PUSNet to generate $\mathbf{x}_{st}$. This strategy enables the PUSNet to process multiple images without introducing additional parameters, which improves its flexibility across different tasks.
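
Splitting the tenth Conv layer's filters keeps the downstream shapes unchanged. To see why, consider a 1×1 convolution at a single pixel, which reduces to a matrix-vector product over channels. The sketch applies the first half of the filters to the cover features and the second half to the secret features, then concatenates the results. The 1×1 kernel, the assignment of halves to branches, and all values are illustrative assumptions.

```python
def conv1x1(filters, feat):
    """1x1 convolution at one pixel: each filter is a weight vector over input channels."""
    return [sum(w * f for w, f in zip(filt, feat)) for filt in filters]


def dual_branch_conv(filters, feat_cover, feat_secret):
    """Apply the first half of the filters to the cover branch and the second half
    to the secret branch, then concatenate along the channel axis."""
    half = len(filters) // 2
    out_cover = conv1x1(filters[:half], feat_cover)
    out_secret = conv1x1(filters[half:], feat_secret)
    return out_cover + out_secret  # list concatenation = channel concatenation


filters = [[1, 0], [0, 1], [1, 1], [2, 0]]  # 4 output channels, 2 input channels
out = dual_branch_conv(filters, feat_cover=[3, 4], feat_secret=[5, 6])
print(out)  # [3, 4, 11, 10]
```

The output has the same number of channels (4) as the single-image path `conv1x1(filters, feat)`. The layers after the tenth Conv layer can therefore be reused without modification.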

Table 1: Performance comparison on different datasets. “↑”: the larger the better; “↓”: the smaller the better.

Cover/Stego-image pair:

| Methods | DIV2K PSNR (dB)↑ | DIV2K SSIM↑ | DIV2K APD↓ | DIV2K RMSE↓ | COCO PSNR (dB)↑ | COCO SSIM↑ | COCO APD↓ | COCO RMSE↓ | ImageNet PSNR (dB)↑ | ImageNet SSIM↑ | ImageNet APD↓ | ImageNet RMSE↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HiDDeN [38] | 28.19 | 0.9287 | 8.01 | 11.00 | 29.16 | 0.9318 | 6.91 | 9.60 | 28.87 | 0.9234 | 7.43 | 10.21 |
| Baluja [3] | 28.42 | 0.9347 | 7.92 | 10.64 | 29.32 | 0.9374 | 7.04 | 9.36 | 28.82 | 0.9303 | 7.68 | 10.21 |
| UDH [34] | 37.58 | 0.9629 | 2.38 | 3.40 | 38.01 | 0.9033 | 6.12 | 9.55 | 37.89 | 0.9559 | 2.30 | 3.29 |
| HiNet [14] | 44.86 | 0.9922 | 1.00 | 1.53 | 46.47 | 0.9925 | 0.81 | 1.30 | 46.88 | 0.9920 | 0.81 | 1.26 |
| PUSNet-E | 38.15 | 0.9792 | 2.30 | 3.33 | 39.09 | 0.9772 | 2.01 | 2.96 | 38.94 | 0.9756 | 2.21 | 3.06 |

Secret/Recovered image pair:

| Methods | DIV2K PSNR (dB)↑ | DIV2K SSIM↑ | DIV2K APD↓ | DIV2K RMSE↓ | COCO PSNR (dB)↑ | COCO SSIM↑ | COCO APD↓ | COCO RMSE↓ | ImageNet PSNR (dB)↑ | ImageNet SSIM↑ | ImageNet APD↓ | ImageNet RMSE↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HiDDeN [38] | 28.42 | 0.8695 | 7.62 | 9.94 | 28.81 | 0.8576 | 7.20 | 9.54 | 28.23 | 0.8435 | 7.83 | 10.47 |
| Baluja [3] | 28.53 | 0.9036 | 7.53 | 10.66 | 29.13 | 0.9091 | 6.61 | 9.80 | 27.63 | 0.8909 | 8.33 | 12.26 |
| UDH [34] | 30.52 | 0.9120 | 5.62 | 7.92 | 30.52 | 0.9120 | 5.62 | 7.92 | 29.63 | 0.8916 | 6.67 | 10.33 |
| HiNet [14] | 28.66 | 0.8507 | 7.25 | 9.68 | 28.08 | 0.8181 | 7.80 | 10.49 | 27.94 | 0.8159 | 8.03 | 10.83 |
| PUSNet-D | 26.88 | 0.8363 | 8.75 | 11.95 | 26.96 | 0.8211 | 8.71 | 12.14 | 26.28 | 0.8028 | 9.58 | 13.43 |

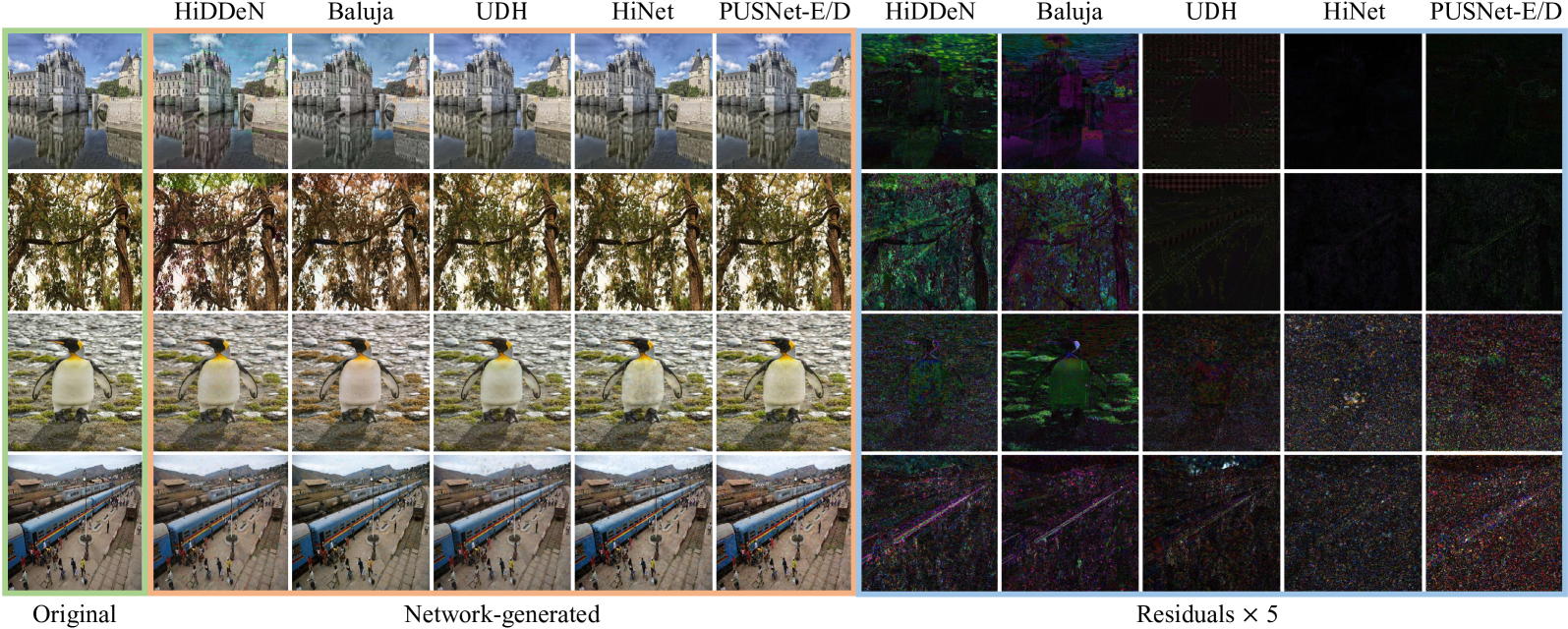

Figure 5: Examples of the stego and recovered images generated by different schemes, with a green border on the original images, an orange border on the generated images, and a blue border on the ×5 magnified residuals between them. The first two rows show cover/stego image pairs, and the last two rows show secret/recovered image pairs.

3.4 Sparse Mask Generation

As mentioned before, the purified network (i.e., PUSNet-P) has to be sparse so that it can be triggered into the secret encoding or decoding network (i.e., PUSNet-E or PUSNet-D). Next, we explain how we initialize the sparse network PUSNet-P based on the architecture given in the previous section. Randomly generating the sparse mask (i.e., $\mathbf{M}$) may be suboptimal, because it does not maintain the denoising performance of the purified network well.

Fortunately, researchers have proposed several approaches for pruning networks at initialization [17, 28, 27, 7], which have been shown to be effective for initializing sparse networks. In our implementation, we adopt a magnitude-based pruning method [7] to generate the sparse mask. Given a sparse ratio $\mathcal{S}$ and the total number $\mathcal{N}$ of weights in the PUSNet, we generate the sparse mask $\mathbf{M}$ as follows.

- 1) Initialize all the weights in the PUSNet using a random seed, and sort them in descending order.
- 2) Compute $\mathbf{M}$ by

  $$\mathbf{M} \leftarrow \mathbb{1}(\mathbf{W}_0 > t), \qquad (10)$$

  where $\mathbb{1}(\cdot)$ is the indicator function, $\mathbf{W}_0$ denotes the initialized weights, and $t$ is a threshold equal to the $p$-th largest weight in $\mathbf{W}_0$, with $p = \lfloor \mathcal{S}\cdot\mathcal{N} \rfloor$ and $\lfloor\cdot\rfloor$ the floor operation.
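
A minimal sketch of the two steps follows. It assumes Gaussian initialization for $\mathbf{W}_0$, since the initializer is not specified here. It follows Eq. (10) literally, comparing raw values rather than absolute values. The function name and arguments are hypothetical.

```python
import math
import random


def generate_mask(num_weights, sparse_ratio, seed):
    """Eq. (10): M = 1(W0 > t), where t is the p-th largest initial weight."""
    rng = random.Random(seed)
    w0 = [rng.gauss(0.0, 1.0) for _ in range(num_weights)]  # step 1: seeded init (assumed Gaussian)
    p = math.floor(sparse_ratio * num_weights)               # p = floor(S * N)
    if p < 1:
        raise ValueError("sparse_ratio * num_weights must be at least 1")
    t = sorted(w0, reverse=True)[p - 1]                      # p-th largest value after the descending sort
    mask = [1 if w > t else 0 for w in w0]                   # step 2: indicator function
    return mask, w0, t


mask, w0, t = generate_mask(num_weights=1000, sparse_ratio=0.9, seed=0)
```

The inequality in Eq. (10) is strict, so exactly $p-1$ entries of $\mathbf{M}$ equal 1 when all initial values are distinct (here 899 of 1000). A magnitude-ranked variant would sort `abs(w)` instead.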

4 Experiments

4.1 Implementation Details

Training. Our PUSNet is trained on the DIV2K training dataset [1], which consists of 800 high-resolution images. We randomly crop 256 × 256 patches from the dataset and apply horizontal and vertical flipping for data augmentation. The mini-batch size is set to 8. Half of the patches in each mini-batch are randomly selected as cover images, and the remaining patches serve as secret images. We add Gaussian noise to the patches to generate noisy images for training. The PUSNet is trained for 3,000 iterations using the Adam optimizer [16] with default parameters, a fixed weight decay of $1\times10^{-5}$, and an initial learning rate of $1\times10^{-4}$. The learning rate is halved every 500 iterations. The hyper-parameters $\lambda_e$, $\lambda_r$, and $\lambda_d$ are set to 1.0, 0.75, and 0.25, respectively. Unless stated otherwise, we set the sparse ratio to $\mathcal{S}=0.9$.
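
The optimization schedule above can be sketched as a step decay that halves the learning rate every 500 iterations. A random split then assigns 4 of each mini-batch's 8 patches to covers and 4 to secrets. The function names and placeholder patches are hypothetical. In PyTorch, the same schedule plausibly corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=500, gamma=0.5)`, assuming the scheduler is stepped once per iteration.

```python
import random


def learning_rate(iteration, base_lr=1e-4, step=500, gamma=0.5):
    """Step decay: start at base_lr and multiply by gamma every `step` iterations."""
    return base_lr * gamma ** (iteration // step)


def split_batch(patches, rng):
    """Randomly use half of a mini-batch as cover images and the rest as secret images."""
    order = list(range(len(patches)))
    rng.shuffle(order)
    half = len(patches) // 2
    covers = [patches[i] for i in order[:half]]
    secrets = [patches[i] for i in order[half:]]
    return covers, secrets


rng = random.Random(0)
batch = [f"patch{i}" for i in range(8)]  # placeholders for 256x256 crops
covers, secrets = split_batch(batch, rng)
```

Over 3,000 iterations the rate is halved five times, ending at $1\times10^{-4}/32 \approx 3.1\times10^{-6}$ for the last 500 iterations.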

Evaluation. We evaluate the performance of our PUSNet on three test sets: the DIV2K test dataset, 1,000 images randomly selected from the ImageNet test dataset [25], and 1,000 images randomly selected from the COCO dataset [19]. All test images are resized to 512 × 512 pixels before being fed into the network. We adopt four metrics to measure the visual quality of the images: Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM) [29], Averaged Pixel-wise Discrepancy (APD), and Root Mean Square Error (RMSE). We adopt two steganalysis tools, StegExpose [4] and SiaStegNet [33], to evaluate the undetectability of the stego-images generated by PUSNet-E. We also employ three DNN model steganalysis strategies, which aim to detect the existence of secret DNN models (e.g., the steganographic networks) in a purified DNN model. The network-generated images are quantized before evaluation. All our experiments are conducted on Ubuntu 18.04 with four NVIDIA RTX 3090 Ti GPUs.
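
For reference, PSNR, RMSE, and APD can be computed on flattened 8-bit images as shown below, after network outputs are quantized by rounding and clipping to [0, 255]. APD is taken here to be the mean absolute pixel difference, and the quantization rule is an assumption. SSIM is omitted because it requires windowed statistics. All function names are hypothetical.

```python
import math


def quantize(img):
    """Round network outputs and clip them to the 8-bit range [0, 255]."""
    return [min(255, max(0, round(v))) for v in img]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def rmse(a, b):
    return math.sqrt(mse(a, b))


def apd(a, b):
    """Averaged pixel-wise discrepancy, taken here as the mean absolute difference."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def psnr(a, b, peak=255.0):
    err = mse(a, b)
    return float("inf") if err == 0 else 10.0 * math.log10(peak ** 2 / err)


ref = [0, 0, 0, 0]
out = quantize([1.6, 2.2, 2.0, 1.9])   # -> [2, 2, 2, 2]
```

PSNR and RMSE are tied by $\mathrm{PSNR} = 20\log_{10}(255/\mathrm{RMSE})$. For example, an RMSE of 20 corresponds to about 22.11 dB.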

Table 2: Comparison of the denoising performance of PUSNet-P and PUSNet-C on different datasets.

| Image pairs | DIV2K PSNR (dB)↑ | DIV2K SSIM↑ | DIV2K APD↓ | DIV2K RMSE↓ | COCO PSNR (dB)↑ | COCO SSIM↑ | COCO APD↓ | COCO RMSE↓ | ImageNet PSNR (dB)↑ | ImageNet SSIM↑ | ImageNet APD↓ | ImageNet RMSE↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $\mathbf{x}_{no}$ / $\mathbf{x}_{cl}$ | 22.11 | 0.4432 | 15.95 | 19.99 | 22.11 | 0.3907 | 15.96 | 20.00 | 21.11 | 0.3902 | 15.96 | 20.00 |
| PUSNet-P($\mathbf{x}_{no}$) / $\mathbf{x}_{cl}$ | 32.25 | 0.9080 | 4.57 | 6.37 | 32.95 | 0.8926 | 4.29 | 5.89 | 32.96 | 0.8922 | 4.36 | 5.94 |
| PUSNet-C($\mathbf{x}_{no}$) / $\mathbf{x}_{cl}$ | 33.03 | 0.9236 | 4.11 | 5.84 | 33.68 | 0.9074 | 3.92 | 5.54 | 33.66 | 0.9073 | 4.00 | 5.52 |

4.2 Visual Quality

We evaluate the visual quality of the stego-images and the recovered secret images (hereafter, recovered images) produced by our PUSNet. For better assessment, we compare our PUSNet against several state-of-the-art (SOTA) DNN-based steganographic techniques, including Baluja [3], HiDDeN [38], UDH [34], and HiNet [14]. For fairness, we retrain these models on the DIV2K training dataset and evaluate them under the same settings as ours. As given in Table 1, our PUSNet outperforms Baluja [3] and HiDDeN [38] in all four metrics in terms of the visual quality of the stego-images. Specifically, PUSNet-E achieves a PSNR gain of at least 9.73 dB, 9.77 dB, and 10.07 dB over these two methods on DIV2K, COCO, and ImageNet, respectively. Although PUSNet-D does not perform as well as PUSNet-E, its recovered images still reach a PSNR above 26 dB, which is acceptable for revealing sufficient content.
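
The quoted gains are the smallest PSNR margins of PUSNet-E over HiDDeN and Baluja in Table 1 (cover/stego-image pairs). They can be checked directly from the table values:

```python
# PSNR (dB) of cover/stego-image pairs, copied from Table 1.
psnr_table = {
    "DIV2K":    {"HiDDeN": 28.19, "Baluja": 28.42, "PUSNet-E": 38.15},
    "COCO":     {"HiDDeN": 29.16, "Baluja": 29.32, "PUSNet-E": 39.09},
    "ImageNet": {"HiDDeN": 28.87, "Baluja": 28.82, "PUSNet-E": 38.94},
}


def min_gain(row):
    """Smallest PSNR margin of PUSNet-E over HiDDeN and Baluja."""
    return min(row["PUSNet-E"] - row[m] for m in ("HiDDeN", "Baluja"))


gains = {name: round(min_gain(row), 2) for name, row in psnr_table.items()}
print(gains)  # {'DIV2K': 9.73, 'COCO': 9.77, 'ImageNet': 10.07}
```

The binding comparison is Baluja on DIV2K and COCO and HiDDeN on ImageNet.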

Fig. 5 illustrates the stego and recovered images produced by the different schemes. To highlight the differences within the cover/stego and secret/recovered image pairs, we magnify their residuals by a factor of 5. Our PUSNet-E and PUSNet-D generate stego and recovered images of high visual quality. With PUSNet-E, the residual between the stego-image and the cover image is barely visible, ranking second best among all the schemes. With PUSNet-D, we observe noticeable noise in the residual between the secret and recovered images, but the details of the image content remain discernible in the recovered image. Overall, our PUSNet can serve as a steganographic tool for covert communication.
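
The sketch below shows one common way to produce such magnified residual maps. The absolute difference between two 8-bit images is multiplied by 5 and clipped to the valid range. Whether Fig. 5 uses absolute differences and clipping is an assumption, and the function name is hypothetical.

```python
def magnified_residual(original, generated, factor=5):
    """|generated - original| scaled by `factor` and clipped to the 8-bit maximum."""
    return [min(255, abs(g - o) * factor) for o, g in zip(original, generated)]


print(magnified_residual([10, 20, 0], [12, 16, 100]))  # [10, 20, 255]
```

Magnification makes small residuals (a few gray levels) visible, while residuals above 51 gray levels saturate at 255.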

4.3 Undetectability of the Stego-images

Next, we evaluate the undetectability of the stego-images generated by our PUSNet-E. We carry out the evaluation with two popular, publicly available image steganalysis tools: StegExpose [4] and SiaStegNet [33]. The former is a traditional steganalysis tool that combines a set of statistical methods, while the latter is a DNN-based steganalysis tool.

We follow the protocol of [14] when applying StegExpose. In particular, we apply PUSNet-E to all the cover images in the three testing datasets to generate stego-images, which are then fed into StegExpose for evaluation. By varying the detection threshold of StegExpose, we obtain the receiver operating characteristic (ROC) curve shown in Fig. 6(a). The area under this ROC curve (AUC) is 0.58, which is very close to random guessing (AUC = 0.5). This demonstrates that our stego-images are highly undetectable by StegExpose.
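
The threshold sweep behind an ROC curve and its AUC can be sketched as below. StegExpose's internals are not described here, so the sketch assumes each image receives one scalar detector score (higher means "more likely stego") and that an image is flagged when its score is at or above the threshold; the function names are hypothetical.

```python
def roc_points(scores, labels):
    """ROC curve as (fpr, tpr) lists, obtained by sweeping the detection
    threshold over every distinct score. Labels: 0 = cover, 1 = stego."""
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need both cover (0) and stego (1) samples")
    fpr, tpr = [0.0], [0.0]
    for threshold in sorted(set(scores), reverse=True):
        flagged = [y for s, y in zip(scores, labels) if s >= threshold]
        tpr.append(sum(flagged) / positives)                   # stego images caught
        fpr.append((len(flagged) - sum(flagged)) / negatives)  # covers wrongly flagged
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve via the trapezoidal rule."""
    return sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2
               for k in range(1, len(fpr)))


scores = [0.2, 0.4, 0.35, 0.8, 0.1, 0.9]  # hypothetical detector outputs
labels = [0, 0, 1, 1, 0, 1]
print(auc(*roc_points(scores, labels)))  # about 0.889 (8 of 9 stego/cover pairs ranked correctly)
```

A detector that assigns the same score to every image yields the single point (1, 1) and an AUC of exactly 0.5, which is the random-guessing baseline the text compares against.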

Figure 6: The undetectability of the stego-images generated by PUSNet-E against (a) StegExpose and (b) SiaStegNet.

To evaluate with SiaStegNet, we follow the protocol given in [9] and train SiaStegNet with different numbers of cover/stego-image pairs, to investigate how many image pairs are needed before SiaStegNet can detect the stego-images. Fig. 6(b) plots the detection accuracy of SiaStegNet as the number of training image pairs varies. To accurately detect the existence of secret data, the adversary needs to collect at least 100 labeled cover/stego-image pairs, which could be challenging in real-world applications. Since there is always a trade-off between payload and undetectability [39, 40], the sender could also reduce the payload of secret information in a stego-image to improve undetectability.

Table 3: Steganographic performance (mean±std) of PUSNet-ER and PUSNet-DR.

| Image pairs | DIV2K PSNR(dB)↑ | DIV2K APD↓ | COCO PSNR(dB)↑ | COCO APD↓ | ImageNet PSNR(dB)↑ | ImageNet APD↓ |
|---|---|---|---|---|---|---|
| PUSNet-ER(𝐱se, 𝐱co) / 𝐱co | 8.81±1.74 | 83.06±17.79 | 8.01±2.07 | 92.19±22.33 | 7.74±1.89 | 95.45±20.54 |
| PUSNet-DR(𝐱st) / 𝐱se | 6.52±0.78 | 107.06±11.07 | 6.25±0.97 | 107.01±14.03 | 6.73±0.83 | 100.30±10.78 |

4.4 Undetectability of the DNN model

Since we aim to imperceptibly conceal the steganographic networks in a purified network, it is necessary to analyze whether the existence of secret DNN models can be detected in a purified model transmitted through public channels. We term this task DNN model steganalysis. Unfortunately, almost all existing steganalysis tools are designed for media (image/video/text) steganalysis. In this section, we empirically adopt several strategies for DNN model steganalysis. We assume that the adversary possesses PUSNet-C, which is trained only for image denoising on the DIV2K training dataset.

Figure 7: Visual comparisons of images denoised by PUSNet-P and PUSNet-C. From left to right: the original clean images, the noisy images, the images denoised by PUSNet-P, and the images denoised by PUSNet-C.

PUSNet-C serves as a clean purified model that contains no secret networks. Its counterpart is PUSNet-P, from which PUSNet-E and PUSNet-D can be triggered. We conduct the DNN model steganalysis from the following three aspects.

Performance reduction. In this strategy, we measure the performance reduction of PUSNet-P relative to PUSNet-C on the image denoising task. To avoid being noticed, PUSNet-P should have denoising ability similar to that of PUSNet-C. Table 2 reports the visual quality of the denoised/clean image pairs for different models, where PUSNet-P(𝐱no) and PUSNet-C(𝐱no) denote the versions of the noisy image 𝐱no denoised by PUSNet-P and PUSNet-C, respectively. Both PUSNet-P and PUSNet-C have good denoising ability and significantly improve the visual quality of the images. Compared with PUSNet-C, the performance reduction of PUSNet-P is negligible, with a PSNR decrease of less than 0.8 dB on the COCO dataset. Figure 7 visualizes some examples of images denoised by PUSNet-P and PUSNet-C, where the differences between the outputs of the two models are hardly visible.

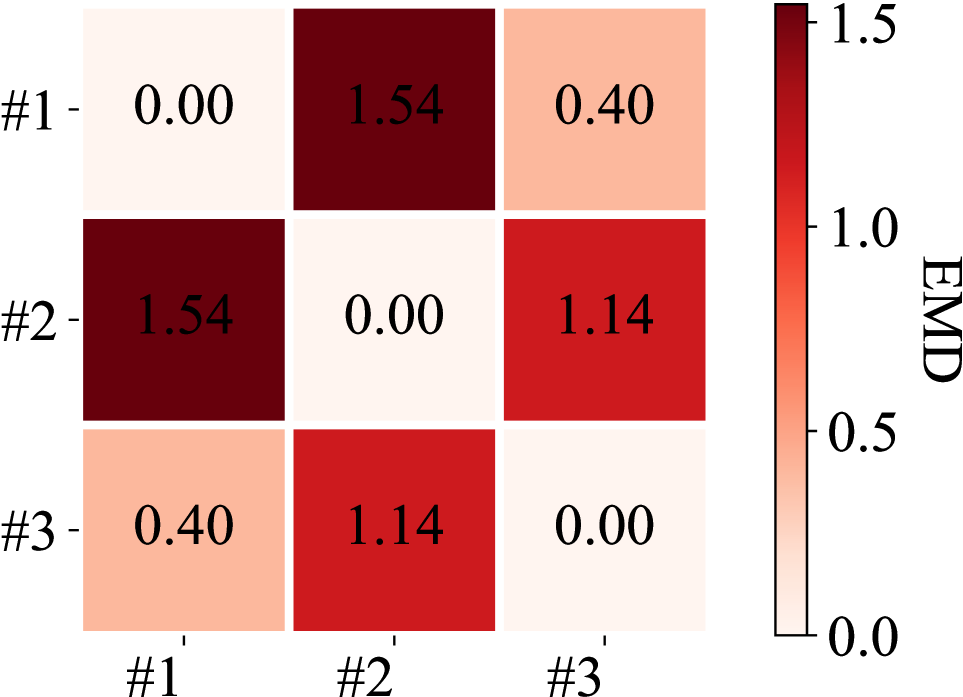

Weight distribution. In this strategy, we measure the distance between the weight distributions of PUSNet-P and PUSNet-C, which we believe could be useful for DNN model steganalysis. We adopt the Earth Mover’s Distance (EMD) [24] as the distance measure. We train two versions of PUSNet-C, PUSNet-C1 and PUSNet-C2, with slightly different strategies: the weight decays of their optimizers are set to 1×10⁻⁵ and 0, respectively. We consider PUSNet-P secure if the EMDs between PUSNet-P and PUSNet-C1 / PUSNet-C2 are less than the EMD between PUSNet-C1 and PUSNet-C2. Fig. 8 plots the pairwise EMDs among the different model pairs, computed using POT [6]. The weight distributions of PUSNet-P and PUSNet-C1 are similar, as evidenced by a low EMD. Moreover, the EMD between PUSNet-P and PUSNet-C2 does not exceed the EMD between PUSNet-C1 and PUSNet-C2. Therefore, it is difficult to determine the existence of the secret models in PUSNet-P by computing the distance between the weight distributions of PUSNet-P and PUSNet-C.
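
The comparison above can be sketched without any dependencies for the 1-D case. The paper computes EMDs with the POT library [6]; the plain-Python function below is a swapped-in equivalent for two 1-D empirical distributions with uniform sample weights (the Wasserstein-1 distance, i.e., the integral of the absolute difference between the two empirical CDFs). Treating each model as one flattened list of all its weights, and the weight values in the example, are assumptions for illustration.

```python
def emd_1d(u, v):
    """Earth Mover's (Wasserstein-1) distance between two 1-D samples with
    uniform weights: the integral of |F_u(x) - F_v(x)| over x."""
    if not u or not v:
        raise ValueError("both samples must be non-empty")
    u, v = sorted(u), sorted(v)
    points = sorted(set(u) | set(v))
    total, i, j = 0.0, 0, 0
    for left, right in zip(points, points[1:]):
        # Advance each pointer past all samples <= left, giving F_u(left), F_v(left).
        while i < len(u) and u[i] <= left:
            i += 1
        while j < len(v) and v[j] <= left:
            j += 1
        total += abs(i / len(u) - j / len(v)) * (right - left)
    return total


def passes_emd_check(p, c1, c2):
    """Criterion from the text: P counts as secure if its EMD to each clean
    model is less than the EMD between the two clean models."""
    reference = emd_1d(c1, c2)
    return emd_1d(p, c1) < reference and emd_1d(p, c2) < reference


# Hypothetical flattened weights (illustrative numbers, not from the paper).
c1 = [-0.10, -0.02, 0.00, 0.03, 0.09]
c2 = [-0.12, -0.03, 0.01, 0.04, 0.11]
p = [-0.11, -0.02, 0.00, 0.04, 0.10]
print(emd_1d(p, c1), emd_1d(p, c2), emd_1d(c1, c2), passes_emd_check(p, c1, c2))
```

The check is relative by design: two clean models trained with slightly different settings already differ, so that difference serves as the noise floor against which PUSNet-P is judged.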

Figure 8: The pairwise EMDs among PUSNet-C1 (#1), PUSNet-C2 (#2), and PUSNet-P (#3).

Analysis of steganographic network leakage. One may also wonder whether the secret steganographic networks could leak if an adversary launches sparse weight filling on PUSNet-P with a randomly guessed key. In what follows, we evaluate whether PUSNet-E and PUSNet-D can be leaked from PUSNet-P under such an attack. We launch this sparse weight filling attack 1000 times to see whether PUSNet-E and PUSNet-D can be successfully triggered from PUSNet-P. Table 3 reports the PSNR and APD of the stego and recovered images generated by the randomly triggered networks, where PUSNet-ER and PUSNet-DR denote the randomly triggered secret encoding and decoding networks, respectively. Both the stego and recovered images have poor visual quality on all three datasets, with a PSNR below 9 dB and an APD above 80. This indicates that it is difficult for an attacker to trigger the secret encoding and decoding networks with a random key.
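
Why a guessed key fails can be illustrated with a toy model of key-controlled sparse weight filling. Everything in this sketch is hypothetical and is not the authors' implementation: the function name, the flat weight list, marking sparse locations by zeros, seeding Python's `random.Random` with the key, and drawing fills from a Gaussian with an assumed scale. It only shows that the filled values are a deterministic function of the key, so a wrong key produces unrelated weights at the sparse locations.

```python
import random


def fill_sparse_weights(purified, sparse_mask, key, scale=0.05):
    """Hypothetical sketch: return a copy of `purified` whose sparse (masked)
    positions are filled with pseudo-random weights from a PRNG seeded by `key`.
    Non-sparse positions, shared with the purified network, are kept unchanged."""
    if len(purified) != len(sparse_mask):
        raise ValueError("weights and mask must have the same length")
    rng = random.Random(key)  # same key -> same sequence of filled weights
    filled = list(purified)
    for idx, is_sparse in enumerate(sparse_mask):
        if is_sparse:
            filled[idx] = rng.gauss(0.0, scale)
    return filled


purified = [0.31, 0.0, -0.22, 0.0, 0.0, 0.12]  # zeros mark the sparse locations (assumed)
mask = [w == 0.0 for w in purified]
receiver_view = fill_sparse_weights(purified, mask, key=20240617)  # correct key
attacker_view = fill_sparse_weights(purified, mask, key=12345)     # a wrong guess
print(receiver_view == fill_sparse_weights(purified, mask, key=20240617))  # True
print(receiver_view == attacker_view)  # False
```

In a real network the fill would apply to every layer's sparse positions, and the space of possible keys is large, which is why repeated random guessing is unlikely to reproduce the exact weights that the steganographic networks were trained with.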

Table 4: Performance comparisons on hiding steganographic networks. ↘: performance reduction on the task.

| Tasks | Li et al. [18] PSNR(dB) | Li et al. [18] SSIM | PUSNet PSNR(dB) | PUSNet SSIM |
|---|---|---|---|---|
| Secret embedding | - | - | 39.09 | 0.9772 |
| Secret recovery | 28.52 | 0.8487 | 26.96 | 0.8211 |
| Image denoising ↘ | 1.24 | 0.0219 | 0.73 | 0.0148 |

4.5 Comparison against the SOTA

In this section, we compare PUSNet against the SOTA method proposed in [18]. Since the SOTA method is tailored for hiding a secret decoding network, we take only the decoding network of a popular DNN-based steganographic scheme (i.e., HiDDeN) as the secret DNN model for evaluation. Using the SOTA method, we embed it into a benign DNN model that has the same architecture as the secret DNN model, forming a stego DNN model. Table 4 gives the performance of the secret embedding and recovery tasks using the secret DNN model extracted from the stego DNN model or triggered from our purified network, where “-” means not applicable and the secret recovery task is evaluated on the COCO dataset [19]. The secret recovery performance of the decoding network triggered from PUSNet (i.e., PUSNet-D) is slightly lower than that of the SOTA method. However, PUSNet causes less performance degradation on the image denoising task of the benign DNN model. We would also like to point out that our method can conceal both the secret encoding and decoding networks in a single DNN model, which makes it more useful than the SOTA method in real-world applications.

5 Conclusion

In this paper, we propose PUSNet to tackle the problem of covertly communicating steganographic networks. PUSNet conceals secret encoding and decoding networks in a purified network that performs an ordinary machine learning task without being noticed. The hidden steganographic networks can be triggered from the purified network using a specific key held by the sender or receiver. To enable flexible switching between the purified and steganographic networks, we construct PUSNet in a sparse weight filling manner: switching is achieved by filling key-controlled, randomly generated weights into the sparse weight locations of the purified network. We instantiate PUSNet as a sparse image denoising network together with a secret image encoding network and a secret image decoding network. Extensive experiments demonstrate the advantages of our proposed method for the covert communication of steganographic networks.