A simple implementation of magFilter

- Typically needed when the texture is smaller than the area it is drawn into (magnification)

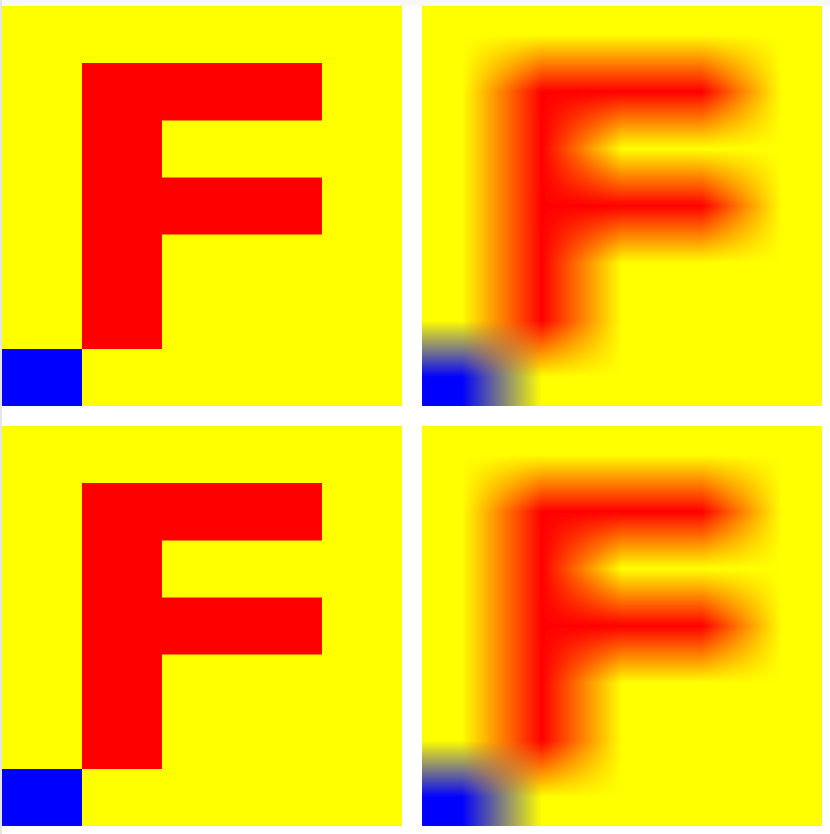

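magFilter: the first row shows nearest-neighbor (nearest) and bilinear interpolation (linear) as provided by WebGPU's built-in sampler; the second row shows the same two filters implemented in a custom shader, for comparison.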

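Concepts

- Nearest neighbor (nearest)

As the name suggests, it picks the single texel that the UV coordinate maps to.

- Linear interpolation (linear)

It is called bilinear interpolation here because a texture is two-dimensional: the colors are first interpolated linearly along x, and the two results are then interpolated once more along y.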

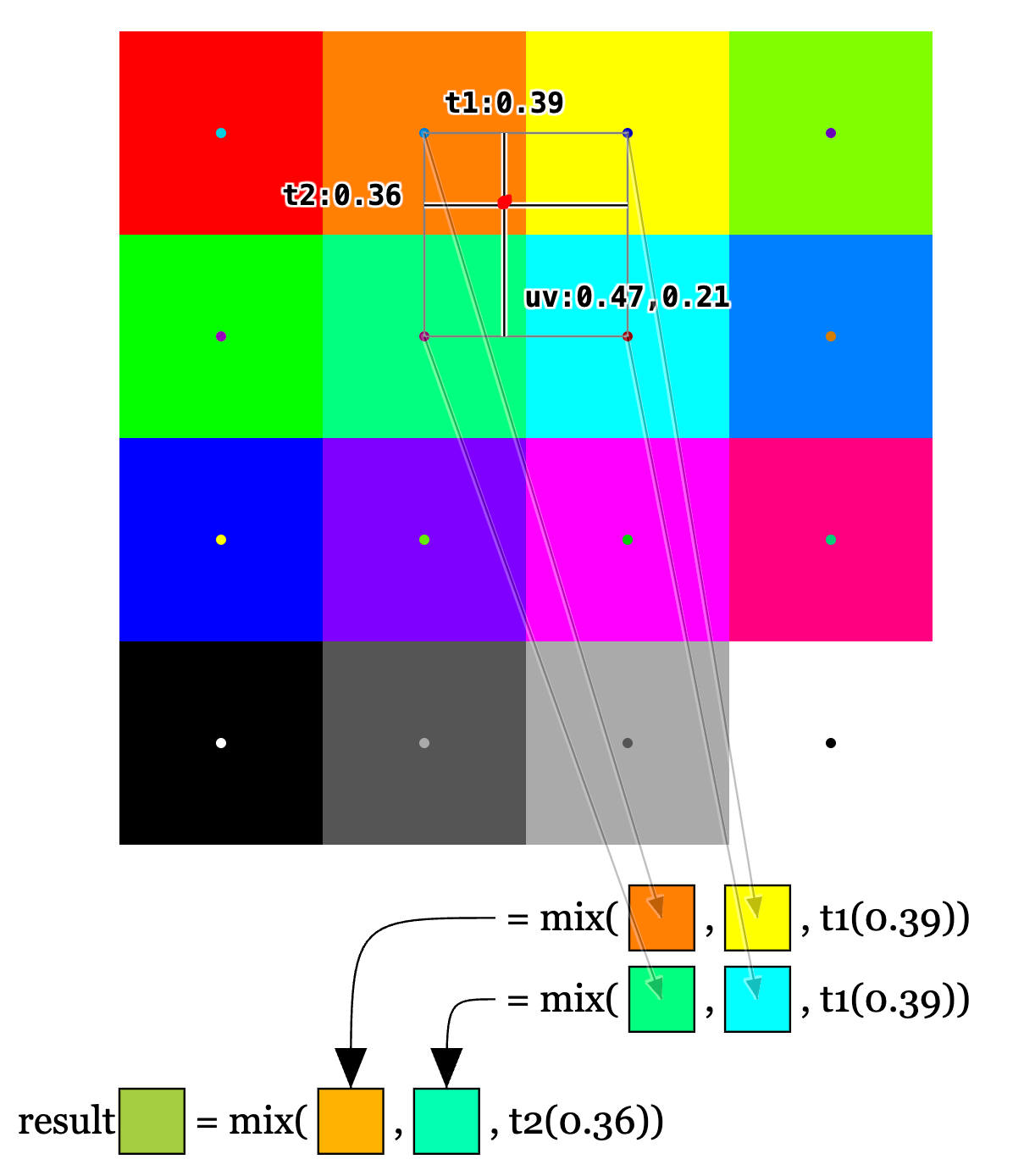

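For example, take two variables t1 and t2, with uv as the texture coordinate. t1 is the horizontal position of the sample point between the center of the top-left selected texel and the center of its right-hand neighbor: 0 means it lies horizontally on the left texel's center, and 1 means it lies on the right texel's center. t2 works the same way in the vertical direction.

t1 is used to blend the top two texels into an intermediate color. The blend is a linear interpolation between the two values: when t1 is 0 we get only the first color, and when t1 is 1 we get only the second. Values between 0 and 1 give a proportional mix; for example, 0.3 means 70% of the first color and 30% of the second. A second intermediate color is computed the same way from the bottom two texels. Finally, t2 blends the two intermediate colors into the final color.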
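The two-step blend described above can be sketched on the CPU. This is a minimal illustration, not the GPU's actual implementation; the names `RGBA`, `mix`, and `bilinear` are made up for this example.

```typescript
type RGBA = [number, number, number, number];

// Linear interpolation between two colors: t = 0 gives a, t = 1 gives b.
function mix(a: RGBA, b: RGBA, t: number): RGBA {
  return a.map((v, i) => v + (b[i] - v) * t) as RGBA;
}

// Blend the top pair and the bottom pair with t1, then blend both results with t2.
function bilinear(
  topLeft: RGBA, topRight: RGBA,
  bottomLeft: RGBA, bottomRight: RGBA,
  t1: number, t2: number,
): RGBA {
  const top = mix(topLeft, topRight, t1);
  const bottom = mix(bottomLeft, bottomRight, t1);
  return mix(top, bottom, t2);
}

const red: RGBA = [255, 0, 0, 255];
const yellow: RGBA = [255, 255, 0, 255];

// t1 = 0.3 keeps 70% of the first color and takes 30% of the second.
const blended = mix(red, yellow, 0.3);
console.log(blended);
```

At the four corners (t1 and t2 each 0 or 1) `bilinear` returns one of its four inputs unchanged, which is a quick sanity check for any implementation.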

Data preparation

- Canvas: 800 x 800 pixels

- Texture: 5 x 7 texels

// Vertex data

const VBO = new Float32Array([

0.0, 0.0,

1.0, 0.0,

0.0, 1.0,

0.0, 1.0,

1.0, 0.0,

1.0, 1.0

]);

const b = [0, 0, 255, 255]; // blue

const _ = [255, 255, 0, 255]; // yellow

const r = [255, 0, 0, 255]; // red

// Texture data (a flipped "F")

const textureData = new Uint8Array([

b, _, _, _, _,

_, r, _, _, _,

_, r, _, _, _,

_, r, r, r, _,

_, r, _, _, _,

_, r, r, r, _,

_, _, _, _, _,

].flat());

const u_size = 5, v_size = 7, BYTES_PER_TEXEL = 4 * textureData.BYTES_PER_ELEMENT;
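To make the memory layout concrete, here is a small sketch of how a texel at (x, y) is located in the flattened byte array. It rebuilds the same 5 x 7 data under different, more verbose names; `texelAt` and `bytesPerRow` are illustrative helpers, not part of the WebGPU API.

```typescript
type Texel = [number, number, number, number];
const blue: Texel = [0, 0, 255, 255];
const yellow: Texel = [255, 255, 0, 255];
const red: Texel = [255, 0, 0, 255];

// Same 5 x 7 pattern as the texture data above, one inner array per row.
const rows: Texel[][] = [
  [blue, yellow, yellow, yellow, yellow],
  [yellow, red, yellow, yellow, yellow],
  [yellow, red, yellow, yellow, yellow],
  [yellow, red, red, red, yellow],
  [yellow, red, yellow, yellow, yellow],
  [yellow, red, red, red, yellow],
  [yellow, yellow, yellow, yellow, yellow],
];

const width = 5, height = 7, bytesPerTexel = 4;
const data = new Uint8Array(rows.flat(2));
const bytesPerRow = width * bytesPerTexel; // the value passed to writeTexture

// Rows are stored one after another, so texel (x, y) starts at this byte offset.
function texelAt(x: number, y: number): number[] {
  const offset = y * bytesPerRow + x * bytesPerTexel;
  return Array.from(data.subarray(offset, offset + bytesPerTexel));
}

console.log(data.length, bytesPerRow, texelAt(0, 0), texelAt(1, 1));
```

The total size is width x height x 4 bytes, and `bytesPerRow` is what lets the upload step through the array one row at a time.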

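Utility functions

- Used to create the canvas

/**

* Create a canvas

* @param query CSS selector string for the canvas, or a canvas element

* @param width visual width of the canvas

* @param height visual height of the canvas

* @returns the canvas configuration

*/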

export async function createCanvas(query: string | HTMLCanvasElement | null, width = 800, height = 600) {

const adapter = await navigator.gpu.requestAdapter({ /* powerPreference: 'high-performance' */ });

console.log(adapter)

const f16Supported = adapter.features.has('shader-f16');

const format = navigator.gpu.getPreferredCanvasFormat();

const alphaMode = 'premultiplied'; // 'opaque' (the default) ignores the alpha channel; 'premultiplied' treats color values as already multiplied by alpha

const device = await adapter.requestDevice({

requiredFeatures: f16Supported ? ['shader-f16'] : []

});

const dpr = window.devicePixelRatio || 2;

let canvas: HTMLCanvasElement | null = typeof query === 'string' ? document.querySelector<HTMLCanvasElement>(query) : query;

if (!canvas) {

canvas = document.createElement('canvas');

}

canvas.width = width * dpr;

canvas.height = height * dpr;

const context = canvas.getContext('webgpu');

context.configure({

device,

format,

alphaMode,

usage: GPUTextureUsage.RENDER_ATTACHMENT | GPUTextureUsage.COPY_SRC

});

return {

context,

adapter,

device,

format,

alphaMode,

dpr,

size: [width, height]

};

}

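Implementing a simple version of magFilter sampling in a shader

Original code

const sampler = device.createSampler({ magFilter: 'nearest' }); // default: nearest neighbor

// const sampler = device.createSampler({ magFilter: 'linear' }); // alternative: bilinear interpolation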

struct VsOutput {

@builtin(position) pos: vec4f,

@location(0) texcoord: vec2f

}

@vertex fn main(@location(0) pos: vec2f) -> VsOutput {

var output: VsOutput;

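let xy = pos * 2; // the source vertices form a unit square with its bottom-left corner at (0, 0); this scales it 2x into a square with side length 2

output.pos = vec4f(xy - 1, 0.0, 1.0); // shift the square left and down by 1 unit in NDC so its bottom-left corner is at (-1, -1) and its top-right corner at (1, 1)

output.texcoord = xy / 2; // map the drawn-area coordinates to uv by scaling them back into [0, 1]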

return output;

}

@group(0) @binding(0) var Sampler: sampler;

@group(0) @binding(1) var Texture: texture_2d<f32>;

@fragment fn main(@location(0) texcoord: vec2f) -> @location(0) vec4f {

return textureSample(Texture, Sampler, texcoord);

}
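The vertex shader's arithmetic can be checked on the CPU with a few lines. This is only a sketch of the same mapping; `mapVertex` is a hypothetical helper name, not something from the original code.

```typescript
type Vec2 = [number, number];

// Mirrors the vertex shader: scale the unit square by 2, shift it into NDC,
// and scale back to [0, 1] for the texture coordinate.
function mapVertex(pos: Vec2): { ndc: Vec2; uv: Vec2 } {
  const xy: Vec2 = [pos[0] * 2, pos[1] * 2];
  return {
    ndc: [xy[0] - 1, xy[1] - 1],
    uv: [xy[0] / 2, xy[1] / 2],
  };
}

const bottomLeft = mapVertex([0, 0]); // ndc (-1, -1), uv (0, 0)
const topRight = mapVertex([1, 1]);   // ndc (1, 1),   uv (1, 1)
console.log(bottomLeft, topRight);
```

Under this mapping v = 0 sits at the bottom edge of the canvas, so the first row of texture data is drawn at the bottom, which is presumably why the texture data is stored as a flipped "F".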

Custom implementation

// Linear interpolation (lerp): a simple illustration of what the built-in mix does

fn lerp(x: vec4f, y: vec4f, a: f32) -> vec4f {

return x + (y - x) * a;

}

fn textureBilinear(texture: texture_2d<f32>, uv: vec2f) -> vec4f {

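// 1. Get the texture's width and height

let texSize = vec2f(textureDimensions(texture));

// 2. Map uv to continuous texel coordinates; subtracting 0.5 measures from texel centers

// let texPos = vec2f(uv.x * texSize.x, uv.y * texSize.y) - 0.5;

let texPos = uv * texSize - 0.5; // shorter equivalent of the line above; the shorthand requires operands of the same type

// 3. Take the integer texel index and keep the fractional part as the blend weights

let p0 = vec2<i32>(floor(texPos));

let frac = texPos - vec2f(p0);

// 4. Clamp the indices to the texture bounds to mimic the 'clamp-to-edge' address mode

let maxPos = vec2<i32>(texSize) - 1;

let lo = clamp(p0, vec2<i32>(0), maxPos);

let hi = clamp(p0 + 1, vec2<i32>(0), maxPos);

let c00 = textureLoad(texture, lo, 0);

let c01 = textureLoad(texture, vec2<i32>(hi.x, lo.y), 0);

let c10 = textureLoad(texture, vec2<i32>(lo.x, hi.y), 0);

let c11 = textureLoad(texture, hi, 0);

let c = mix(mix(c00, c01, frac.x), mix(c10, c11, frac.x), frac.y);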

return c;

}
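To see which texels and weights a given uv produces, the index arithmetic of `textureBilinear` can be mirrored on the CPU. The sketch below assumes out-of-range indices are clamped to the texture edge (as with the 'clamp-to-edge' address mode); `bilinearFootprint` is a hypothetical name used only here.

```typescript
interface Footprint {
  x0: number; x1: number; // left and right texel columns
  y0: number; y1: number; // first and second texel rows
  fx: number; fy: number; // blend weights along x and y
}

function bilinearFootprint(u: number, v: number, width: number, height: number): Footprint {
  // Continuous texel coordinates measured from texel centers.
  const x = u * width - 0.5;
  const y = v * height - 0.5;
  const ix = Math.floor(x);
  const iy = Math.floor(y);
  // Assumption: indices outside the texture are clamped to the nearest edge texel.
  const clamp = (i: number, max: number) => Math.min(Math.max(i, 0), max);
  return {
    x0: clamp(ix, width - 1), x1: clamp(ix + 1, width - 1),
    y0: clamp(iy, height - 1), y1: clamp(iy + 1, height - 1),
    fx: x - ix, fy: y - iy,
  };
}

// The center of the 5 x 7 texture lands exactly on texel (2, 3), so both weights are 0.
const center = bilinearFootprint(0.5, 0.5, 5, 7);
// At uv = (0, 0) the unclamped index would be -1; clamping reuses the edge texel.
const corner = bilinearFootprint(0, 0, 5, 7);
console.log(center, corner);
```

Without the clamp, samples within half a texel of the border would read outside the texture, which is where a hand-written filter is most likely to differ from the built-in sampler.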

fn textureNearest(texture: texture_2d<f32>, uv: vec2f) -> vec4f {

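// 1. Get the texture's width and height

let texSize = textureDimensions(texture);

// 2. Map uv to the integer texel index by dropping the fractional part

let p0 = min(vec2u(uv * vec2f(texSize)), texSize - 1); // range [0, texSize - 1]; the min keeps uv == 1.0 in bounds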

return textureLoad(texture, p0, 0);

}
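The nearest-neighbor lookup reduces to a floor and a bounds check. Here is a minimal CPU sketch of the same index computation, assuming uv lies in [0, 1]; `nearestIndex` is an illustrative name.

```typescript
// Returns the [column, row] of the texel that contains the given uv.
function nearestIndex(u: number, v: number, width: number, height: number): [number, number] {
  // Each texel covers 1/width x 1/height of uv space, so scaling and flooring picks it.
  // The min guards the single case uv == 1.0, which would otherwise index one past the end.
  const x = Math.min(Math.floor(u * width), width - 1);
  const y = Math.min(Math.floor(v * height), height - 1);
  return [x, y];
}

const first = nearestIndex(0, 0, 5, 7);      // first texel
const middle = nearestIndex(0.5, 0.5, 5, 7); // texel containing the center
const last = nearestIndex(1, 1, 5, 7);       // clamped to the last texel
console.log(first, middle, last);
```

Unlike the bilinear case there is no 0.5 offset here: every uv inside a texel's square maps to that texel, which produces the blocky look of the nearest filter.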

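Full code

- There is a lot of repeated code; the aim is that each function can be copied and run with few changes

- What to focus on

The fragment module of each pipeline, where the sampling is emulated. The same logic could also be written in JS, but that is usually far slower than a shader, because shader code runs in parallel: fragments are typically processed together in groups of 4 pixels (2 x 2), and many such groups run concurrently.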

import { createCanvas } from '@/utils';

// Vertex data

const VBO = new Float32Array([

0.0, 0.0,

1.0, 0.0,

0.0, 1.0,

0.0, 1.0,

1.0, 0.0,

1.0, 1.0

]);

const b = [0, 0, 255, 255]; // blue

const _ = [255, 255, 0, 255]; // yellow

const r = [255, 0, 0, 255]; // red

const textureData = new Uint8Array([

b, _, _, _, _,

_, r, _, _, _,

_, r, _, _, _,

_, r, r, r, _,

_, r, _, _, _,

_, r, r, r, _,

_, _, _, _, _,

].flat());

const u_size = 5, v_size = 7, BYTES_PER_TEXEL = 4 * textureData.BYTES_PER_ELEMENT;

/** Load and display the original texture with the default nearest filter (texture < viewport) */

async function createMagNearestF(page: HTMLElement) {

const { context, device, adapter, format } = await createCanvas(null, 400, 400);

// Color texture of 5 * 7 texels (pixels)

const texture = device.createTexture({

size: [u_size, v_size],

format: 'rgba8unorm',

usage: GPUTextureUsage.TEXTURE_BINDING | GPUTextureUsage.COPY_DST

});

device.queue.writeTexture(

{ texture },

textureData,

{ bytesPerRow: u_size * BYTES_PER_TEXEL }, // texels per row * bytes per texel (4 bytes for rgba)

{ width: u_size, height: v_size }, // width and height of the region written into the texture

);

// Sampler

const sampler = device.createSampler({

addressModeU: 'clamp-to-edge',

addressModeV: 'clamp-to-edge',

// magFilter: 'linear'

});

// Vertex data

const vbo = device.createBuffer({

size: VBO.byteLength,

usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST,

mappedAtCreation: true

});

const gpuBuffer = vbo.getMappedRange();

new Float32Array(gpuBuffer).set(VBO);

vbo.unmap();

const bindGroupLayout = device.createBindGroupLayout({

entries: [

{

binding: 0,

visibility: GPUShaderStage.VERTEX | GPUShaderStage.FRAGMENT,

sampler: { type: 'filtering' }

},

{

binding: 1,

visibility: GPUShaderStage.FRAGMENT,

texture: { sampleType: 'float' }

}

]

});

const bindGroup = device.createBindGroup({

layout: bindGroupLayout,

entries: [

{

binding: 0,

resource: sampler

},

{

binding: 1,

resource: texture.createView()

}

]

});

const pipeline = device.createRenderPipeline({

layout: device.createPipelineLayout({

bindGroupLayouts: [bindGroupLayout]

}),

vertex: {

module: device.createShaderModule({

code: /** wgsl */`

struct VsOutput {

@builtin(position) pos: vec4f,

@location(0) texcoord: vec2f

}

@vertex fn main(@location(0) pos: vec2f) -> VsOutput {

var output: VsOutput;

let xy = pos * 2;

output.pos = vec4f(xy - 1, 0.0, 1.0);

// output.texcoord = vec2f(xy.x, 1 - xy.y);

output.texcoord = xy / 2;

return output;

}

`

}),

buffers: [

{

arrayStride: VBO.BYTES_PER_ELEMENT * 2,

attributes: [

{ shaderLocation: 0, offset: 0, format: 'float32x2' }

]

}

]

},

fragment: {

module: device.createShaderModule({

code: /** wgsl */`

@group(0) @binding(0) var Sampler: sampler;

@group(0) @binding(1) var Texture: texture_2d<f32>;

@fragment fn main(@location(0) texcoord: vec2f) -> @location(0) vec4f {

return textureSample(Texture, Sampler, texcoord);

}

`

}),

targets: [{ format }], // navigator.gpu.getPreferredCanvasFormat() == bgra8unorm

},

primitive: {

topology: 'triangle-list'

},

});

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass({

colorAttachments: [

{

view: context.getCurrentTexture().createView(),

loadOp: 'clear',

storeOp: 'store'

}

]

});

pass.setPipeline(pipeline);

pass.setVertexBuffer(0, vbo);

pass.setBindGroup(0, bindGroup);

pass.draw(6);

pass.end();

device.queue.submit([encoder.finish()]);

page.append(context.canvas as HTMLCanvasElement);

}

/** Load and display the texture with the built-in magFilter 'linear' (texture < viewport) */

async function createMagLinearF(page: HTMLElement) {

const { context, device, adapter, format } = await createCanvas(null, 400, 400);

// Color texture of 5 * 7 texels (pixels)

const texture = device.createTexture({

size: [u_size, v_size],

format: 'rgba8unorm',

usage: GPUTextureUsage.TEXTURE_BINDING | GPUTextureUsage.COPY_DST

});

device.queue.writeTexture(

{ texture },

textureData,

{ bytesPerRow: u_size * BYTES_PER_TEXEL }, // texels per row * bytes per texel (4 bytes for rgba)

{ width: u_size, height: v_size }, // width and height of the region written into the texture

);

// Sampler

const sampler = device.createSampler({

addressModeU: 'clamp-to-edge',

addressModeV: 'clamp-to-edge',

magFilter: 'linear'

});

// Vertex data

const vbo = device.createBuffer({

size: VBO.byteLength,

usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST,

mappedAtCreation: true

});

const gpuBuffer = vbo.getMappedRange();

new Float32Array(gpuBuffer).set(VBO);

vbo.unmap();

const bindGroupLayout = device.createBindGroupLayout({

entries: [

{

binding: 0,

visibility: GPUShaderStage.VERTEX | GPUShaderStage.FRAGMENT,

sampler: { type: 'filtering' }

},

{

binding: 1,

visibility: GPUShaderStage.FRAGMENT,

texture: { sampleType: 'float' }

}

]

});

const bindGroup = device.createBindGroup({

layout: bindGroupLayout,

entries: [

{

binding: 0,

resource: sampler

},

{

binding: 1,

resource: texture.createView()

}

]

});

const pipeline = device.createRenderPipeline({

layout: device.createPipelineLayout({

bindGroupLayouts: [bindGroupLayout]

}),

vertex: {

module: device.createShaderModule({

code: /** wgsl */`

struct VsOutput {

@builtin(position) pos: vec4f,

@location(0) texcoord: vec2f

}

@vertex fn main(@location(0) pos: vec2f) -> VsOutput {

var output: VsOutput;

let xy = pos * 2;

output.pos = vec4f(xy - 1, 0.0, 1.0);

// output.texcoord = vec2f(xy.x, 1 - xy.y);

output.texcoord = xy / 2;

return output;

}

`

}),

buffers: [

{

arrayStride: VBO.BYTES_PER_ELEMENT * 2,

attributes: [

{ shaderLocation: 0, offset: 0, format: 'float32x2' }

]

}

]

},

fragment: {

module: device.createShaderModule({

code: /** wgsl */`

@group(0) @binding(0) var Sampler: sampler;

@group(0) @binding(1) var Texture: texture_2d<f32>;

@fragment fn main(@location(0) texcoord: vec2f) -> @location(0) vec4f {

return textureSample(Texture, Sampler, texcoord);

}

`

}),

targets: [{ format }], // navigator.gpu.getPreferredCanvasFormat() == bgra8unorm

},

primitive: {

topology: 'triangle-list'

},

});

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass({

colorAttachments: [

{

view: context.getCurrentTexture().createView(),

loadOp: 'clear',

storeOp: 'store'

}

]

});

pass.setPipeline(pipeline);

pass.setVertexBuffer(0, vbo);

pass.setBindGroup(0, bindGroup);

pass.draw(6);

pass.end();

device.queue.submit([encoder.finish()]);

page.append(context.canvas as HTMLCanvasElement);

}

/** Load and display the texture with a hand-written magFilter 'linear' (texture < viewport) */

async function createMagLinearManualF(page: HTMLElement) {

const { context, device, adapter, format } = await createCanvas(null, 400, 400);

// Color texture of 5 * 7 texels (pixels)

const texture = device.createTexture({

size: [u_size, v_size],

format: 'rgba8unorm',

usage: GPUTextureUsage.TEXTURE_BINDING | GPUTextureUsage.COPY_DST

});

device.queue.writeTexture(

{ texture },

textureData,

{ bytesPerRow: u_size * BYTES_PER_TEXEL }, // texels per row * bytes per texel (4 bytes for rgba)

{ width: u_size, height: v_size }, // width and height of the region written into the texture

);

// Sampler

const sampler = device.createSampler({

addressModeU: 'clamp-to-edge',

addressModeV: 'clamp-to-edge',

// magFilter: 'linear'

});

// Vertex data

const vbo = device.createBuffer({

size: VBO.byteLength,

usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST,

mappedAtCreation: true

});

const gpuBuffer = vbo.getMappedRange();

new Float32Array(gpuBuffer).set(VBO);

vbo.unmap();

const bindGroupLayout = device.createBindGroupLayout({

entries: [

{

binding: 0,

visibility: GPUShaderStage.VERTEX | GPUShaderStage.FRAGMENT,

sampler: { type: 'filtering' }

},

{

binding: 1,

visibility: GPUShaderStage.FRAGMENT,

texture: { sampleType: 'float' }

}

]

});

const bindGroup = device.createBindGroup({

layout: bindGroupLayout,

entries: [

{

binding: 0,

resource: sampler

},

{

binding: 1,

resource: texture.createView()

}

]

});

const pipeline = device.createRenderPipeline({

layout: device.createPipelineLayout({

bindGroupLayouts: [bindGroupLayout]

}),

vertex: {

module: device.createShaderModule({

code: /** wgsl */`

struct VsOutput {

@builtin(position) pos: vec4f,

@location(0) texcoord: vec2f

}

@vertex fn main(@location(0) pos: vec2f) -> VsOutput {

var output: VsOutput;

let xy = pos * 2;

output.pos = vec4f(xy - 1, 0.0, 1.0);

// output.texcoord = vec2f(xy.x, 1 - xy.y);

output.texcoord = xy / 2;

return output;

}

`

}),

buffers: [

{

arrayStride: VBO.BYTES_PER_ELEMENT * 2,

attributes: [

{ shaderLocation: 0, offset: 0, format: 'float32x2' }

]

}

]

},

fragment: {

module: device.createShaderModule({

code: /** wgsl */`

// @group(0) @binding(0) var Sampler: sampler;

@group(0) @binding(1) var Texture: texture_2d<f32>;

fn textureBilinear(texture: texture_2d<f32>, uv: vec2f) -> vec4f {

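// 1. Get the texture's width and height

let texSize = vec2f(textureDimensions(texture));

// 2. Map uv to continuous texel coordinates; subtracting 0.5 measures from texel centers

// let texPos = vec2f(uv.x * texSize.x, uv.y * texSize.y) - 0.5;

let texPos = uv * texSize - 0.5; // shorter equivalent of the line above; the shorthand requires operands of the same type

// 3. Take the integer texel index and keep the fractional part as the blend weights

let p0 = vec2<i32>(floor(texPos));

let frac = texPos - vec2f(p0);

// 4. Clamp the indices to the texture bounds to mimic the 'clamp-to-edge' address mode

let maxPos = vec2<i32>(texSize) - 1;

let lo = clamp(p0, vec2<i32>(0), maxPos);

let hi = clamp(p0 + 1, vec2<i32>(0), maxPos);

let c00 = textureLoad(texture, lo, 0);

let c01 = textureLoad(texture, vec2<i32>(hi.x, lo.y), 0);

let c10 = textureLoad(texture, vec2<i32>(lo.x, hi.y), 0);

let c11 = textureLoad(texture, hi, 0);

let c = mix(mix(c00, c01, frac.x), mix(c10, c11, frac.x), frac.y);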

return c;

}

@fragment fn main(@location(0) texcoord: vec2f) -> @location(0) vec4f {

// return textureSample(Texture, Sampler, texcoord);

return textureBilinear(Texture, texcoord);

}

`

}),

targets: [{ format }], // navigator.gpu.getPreferredCanvasFormat() == bgra8unorm

},

primitive: {

topology: 'triangle-list'

},

});

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass({

colorAttachments: [

{

view: context.getCurrentTexture().createView(),

loadOp: 'clear',

storeOp: 'store'

}

]

});

pass.setPipeline(pipeline);

pass.setVertexBuffer(0, vbo);

pass.setBindGroup(0, bindGroup);

pass.draw(6);

pass.end();

device.queue.submit([encoder.finish()]);

page.append(context.canvas as HTMLCanvasElement);

}

/** Load and display the texture with a hand-written magFilter 'nearest' (texture < viewport) */

async function createMagNearestManualF(page: HTMLElement) {

const { context, device, adapter, format } = await createCanvas(null, 400, 400);

// Color texture of 5 * 7 texels (pixels)

const texture = device.createTexture({

size: [u_size, v_size],

format: 'rgba8unorm',

usage: GPUTextureUsage.TEXTURE_BINDING | GPUTextureUsage.COPY_DST

});

device.queue.writeTexture(

{ texture },

textureData,

{ bytesPerRow: u_size * BYTES_PER_TEXEL }, // texels per row * bytes per texel (4 bytes for rgba)

{ width: u_size, height: v_size }, // width and height of the region written into the texture

);

// Sampler

const sampler = device.createSampler({

addressModeU: 'clamp-to-edge',

addressModeV: 'clamp-to-edge',

// magFilter: 'linear'

});

// Vertex data

const vbo = device.createBuffer({

size: VBO.byteLength,

usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST,

mappedAtCreation: true

});

const gpuBuffer = vbo.getMappedRange();

new Float32Array(gpuBuffer).set(VBO);

vbo.unmap();

const bindGroupLayout = device.createBindGroupLayout({

entries: [

{

binding: 0,

visibility: GPUShaderStage.VERTEX | GPUShaderStage.FRAGMENT,

sampler: { type: 'filtering' }

},

{

binding: 1,

visibility: GPUShaderStage.FRAGMENT,

texture: { sampleType: 'float' }

}

]

});

const bindGroup = device.createBindGroup({

layout: bindGroupLayout,

entries: [

{

binding: 0,

resource: sampler

},

{

binding: 1,

resource: texture.createView()

}

]

});

const pipeline = device.createRenderPipeline({

layout: device.createPipelineLayout({

bindGroupLayouts: [bindGroupLayout]

}),

vertex: {

module: device.createShaderModule({

code: /** wgsl */`

struct VsOutput {

@builtin(position) pos: vec4f,

@location(0) texcoord: vec2f

}

@vertex fn main(@location(0) pos: vec2f) -> VsOutput {

var output: VsOutput;

let xy = pos * 2;

output.pos = vec4f(xy - 1, 0.0, 1.0);

// output.texcoord = vec2f(xy.x, 1 - xy.y);

output.texcoord = xy / 2;

return output;

}

`

}),

buffers: [

{

arrayStride: VBO.BYTES_PER_ELEMENT * 2,

attributes: [

{ shaderLocation: 0, offset: 0, format: 'float32x2' }

]

}

]

},

fragment: {

module: device.createShaderModule({

code: /** wgsl */`

// @group(0) @binding(0) var Sampler: sampler;

@group(0) @binding(1) var Texture: texture_2d<f32>;

fn textureNearest(texture: texture_2d<f32>, uv: vec2f) -> vec4f {

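// 1. Get the texture's width and height

let texSize = textureDimensions(texture);

// 2. Map uv to the integer texel index by dropping the fractional part

let p0 = min(vec2u(uv * vec2f(texSize)), texSize - 1); // range [0, texSize - 1]; the min keeps uv == 1.0 in bounds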

return textureLoad(texture, p0, 0);

}

@fragment fn main(@location(0) texcoord: vec2f) -> @location(0) vec4f {

// return textureSample(Texture, Sampler, texcoord);

return textureNearest(Texture, texcoord);

}

`

}),

targets: [{ format }], // navigator.gpu.getPreferredCanvasFormat() == bgra8unorm

},

primitive: {

topology: 'triangle-list'

},

});

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass({

colorAttachments: [

{

view: context.getCurrentTexture().createView(),

loadOp: 'clear',

storeOp: 'store'

}

]

});

pass.setPipeline(pipeline);

pass.setVertexBuffer(0, vbo);

pass.setBindGroup(0, bindGroup);

pass.draw(6);

pass.end();

device.queue.submit([encoder.finish()]);

page.append(context.canvas as HTMLCanvasElement);

}

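export async function init(page: HTMLDivElement) {

// Await each demo in turn so the canvases are appended in a fixed order

await createMagNearestF(page);

await createMagLinearF(page);

await createMagNearestManualF(page);

await createMagLinearManualF(page);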

}
