The Naive Bayesian classifier is based on Bayes' theorem with independence assumptions between predictors. A Naive Bayesian model is easy to build, with no complicated iterative parameter estimation, which makes it particularly useful for very large datasets. Despite its simplicity, the Naive Bayesian classifier often performs surprisingly well and is widely used; on some problems it is competitive with more sophisticated classification methods.

Algorithm

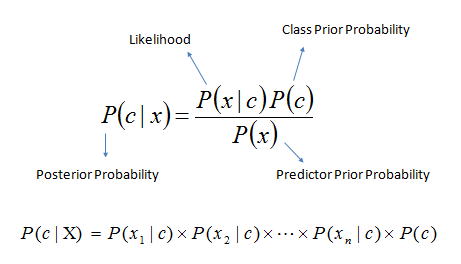

Bayes' theorem provides a way of calculating the posterior probability, P(c|x), from P(c), P(x), and P(x|c). The Naive Bayes classifier assumes that the effect of the value of a predictor (x) on a given class (c) is independent of the values of the other predictors. This assumption is called class conditional independence.
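For readers who cannot see the figure, the relationship can be written out in the notation of this section. This is a sketch: the index i and the predictor count n are notation introduced here, not taken from the source.

```latex
P(c \mid x) = \frac{P(x \mid c)\, P(c)}{P(x)}, \qquad
P(c \mid x_1, \dots, x_n) \propto P(c) \prod_{i=1}^{n} P(x_i \mid c)
```

The product on the right is where class conditional independence is used: the joint likelihood P(x_1, ..., x_n | c) is replaced by a product of per-predictor likelihoods.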


Example:

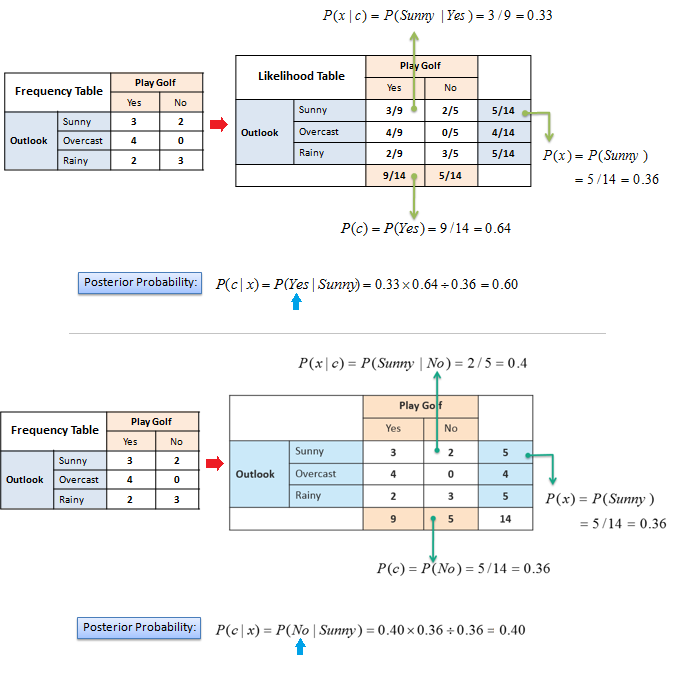

The posterior probability can be calculated by first constructing a frequency table for each attribute against the target, then transforming the frequency tables into likelihood tables, and finally using the Naive Bayesian equation to calculate the posterior probability for each class. The class with the highest posterior probability is the predicted outcome.
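The three steps above (frequency tables, likelihoods, posterior) can be sketched in a few lines of Python. The data below is a small hypothetical table invented for illustration, not the dataset shown in the figure, and the function names `fit` and `posterior` are likewise assumptions of this sketch.

```python
from collections import Counter, defaultdict

# Hypothetical toy data (not from the source): (outlook, windy, play)
rows = [
    ("Sunny", "False", "No"),
    ("Sunny", "True", "No"),
    ("Overcast", "False", "Yes"),
    ("Rainy", "False", "Yes"),
    ("Rainy", "True", "No"),
    ("Overcast", "True", "Yes"),
    ("Sunny", "False", "Yes"),
    ("Rainy", "False", "Yes"),
]

def fit(rows):
    """Count classes and build one frequency table per attribute."""
    class_counts = Counter(row[-1] for row in rows)
    # freq[i][(value, cls)] = rows where attribute i == value and class == cls
    freq = defaultdict(Counter)
    for *features, cls in rows:
        for i, value in enumerate(features):
            freq[i][(value, cls)] += 1
    return class_counts, freq

def posterior(class_counts, freq, x):
    """Return normalized P(class | x) under the naive independence assumption."""
    total = sum(class_counts.values())
    scores = {}
    for cls, n_cls in class_counts.items():
        score = n_cls / total  # prior P(c)
        for i, value in enumerate(x):
            score *= freq[i][(value, cls)] / n_cls  # likelihood P(x_i | c)
        scores[cls] = score
    evidence = sum(scores.values())  # zero if every class has a zero likelihood
    return {cls: s / evidence for cls, s in scores.items()}

class_counts, freq = fit(rows)
post = posterior(class_counts, freq, ("Sunny", "True"))
prediction = max(post, key=post.get)
print(post, prediction)  # No: 20/23, Yes: 3/23, so the prediction is "No"
```

No smoothing is applied in this sketch, so an attribute value that never occurs with a class gives that class a posterior of exactly zero.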


The zero-frequency problem

When an attribute value (e.g. Outlook=Overcast) does not occur with every class value (e.g. Play Golf=no), its estimated conditional probability is zero, which forces the whole posterior for that class to zero. To avoid this, add 1 to the count for every attribute value-class combination (the Laplace estimator).
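A minimal sketch of the Laplace estimator follows. The counts are hypothetical and chosen only so that Overcast never occurs with the "no" class; the function name and the `alpha` parameter are assumptions of this example. Note that adding 1 to every count also adds the number of distinct attribute values to the denominator, so the smoothed probabilities still sum to 1.

```python
def laplace_likelihood(count, class_total, n_values, alpha=1):
    """Estimate P(value | class) with add-alpha smoothing (alpha=1 is Laplace)."""
    return (count + alpha) / (class_total + alpha * n_values)

# Hypothetical Outlook counts for the class Play Golf = no
counts_no = {"Sunny": 3, "Overcast": 0, "Rainy": 2}
class_total = sum(counts_no.values())  # 5 rows in this class
n_values = len(counts_no)              # 3 distinct Outlook values

smoothed = {
    value: laplace_likelihood(count, class_total, n_values)
    for value, count in counts_no.items()
}
print(smoothed)  # Sunny 4/8, Overcast 1/8, Rainy 3/8
```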

Numerical Predictors

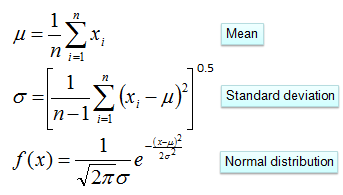

Numerical variables need to be transformed into categorical counterparts (binning) before their frequency tables can be constructed. Alternatively, the distribution of the numerical variable can be used to estimate the likelihood directly. A common practice is to assume that each numerical variable is normally distributed within each class.

The probability density function of the normal distribution is defined by two parameters: the mean and the standard deviation.
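As a sketch of how the normal assumption is used, the density at an observed value stands in for the likelihood P(x|c). The readings below are hypothetical numbers for a single class, and the use of the sample standard deviation (n - 1 in the denominator) is an assumption of this example.

```python
import math
from statistics import mean, stdev

def gaussian_pdf(x, mu, sigma):
    """Normal density with mean mu and standard deviation sigma, evaluated at x."""
    coeff = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

# Hypothetical readings of one numerical predictor within one class
values = [70, 75, 80, 85, 90]
mu = mean(values)      # 80
sigma = stdev(values)  # sample standard deviation, sqrt(62.5)

# Density at a new observation, used in place of a frequency-based likelihood
density = gaussian_pdf(74, mu, sigma)
print(mu, sigma, density)
```

The result is a density rather than a probability, so it can exceed 1 for small standard deviations; only its relative size across classes matters when comparing posteriors.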


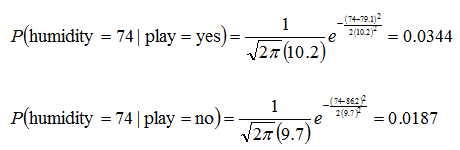

Example:


Predictors Contribution

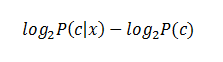

Kononenko's information gain, expressed as a sum of the information contributed by each attribute, can help explain how the values of the predictors influence the class probability.
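One way to see where such a sum comes from is to rewrite the naive Bayes posterior with Bayes' theorem applied to each predictor and then take logarithms. The derivation below is a sketch under the class conditional independence assumption; the exact form shown in the figure may differ, and the base-2 logarithm (information in bits) is an assumption made here.

```latex
P(x_i \mid c) = \frac{P(c \mid x_i)\, P(x_i)}{P(c)}
\;\Longrightarrow\;
P(c \mid x_1, \dots, x_n) \propto P(c) \prod_{i=1}^{n} \frac{P(c \mid x_i)}{P(c)}

-\log_2 P(c \mid x_1, \dots, x_n)
  = -\log_2 P(c) - \sum_{i=1}^{n} \bigl[ \log_2 P(c \mid x_i) - \log_2 P(c) \bigr] + \text{const}
```

Each bracketed term is the information contributed by one attribute value: it is positive when observing x_i makes class c more likely than its prior and negative when it makes c less likely. The constant comes from normalization and is the same for every class.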


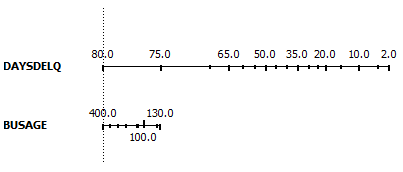

The contribution of predictors can also be visualized with nomograms. A nomogram plots the log odds ratio for each value of each predictor. The length of each line corresponds to the span of the odds ratios, which suggests the importance of the corresponding predictor; the plot also shows the impact of individual predictor values.
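The quantities a nomogram displays can be sketched numerically for a two-class problem. In this example the log odds ratio of a value is taken to be log(P(value|c) / P(value|not c)) with Laplace smoothing; that definition, the function names, and all counts are assumptions made for illustration and are not taken from the figure.

```python
import math

def log_odds_ratios(counts_pos, counts_neg, alpha=1):
    """Smoothed log(P(value | c) / P(value | not c)) for each predictor value."""
    values = sorted(set(counts_pos) | set(counts_neg))
    n_pos = sum(counts_pos.values())
    n_neg = sum(counts_neg.values())
    k = len(values)
    ratios = {}
    for v in values:
        p_pos = (counts_pos.get(v, 0) + alpha) / (n_pos + alpha * k)
        p_neg = (counts_neg.get(v, 0) + alpha) / (n_neg + alpha * k)
        ratios[v] = math.log(p_pos / p_neg)
    return ratios

def span(ratios):
    """Length of the predictor's line in the nomogram: max minus min log odds ratio."""
    return max(ratios.values()) - min(ratios.values())

# Hypothetical value counts per class for two predictors
outlook = log_odds_ratios(
    {"Sunny": 2, "Overcast": 4, "Rainy": 3},  # class c
    {"Sunny": 3, "Overcast": 0, "Rainy": 2},  # class not c
)
windy = log_odds_ratios({"False": 6, "True": 3}, {"False": 2, "True": 3})

print(outlook, span(outlook))
print(windy, span(windy))
```

With these made-up counts the span for Outlook is larger than the span for Windy, which a nomogram would draw as a longer line and which suggests Outlook is the more influential predictor.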


Reference: http://www.saedsayad.com/naive_bayesian.htm
