I. Analyzing the passenger data

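- PassengerId: unique identifier of each passenger

- Survived: the label, whether the passenger survived (1) or not (0)

- Pclass: ticket class (1 = first, 2 = second, 3 = third)

- Name: name

- Sex: sex

- Age: age

- SibSp: number of siblings/spouses aboard

- Parch: number of parents/children aboard

- Ticket: ticket number

- Fare: ticket fare

- Cabin: cabin number. This column has a large number of missing values and can be dropped

- Embarked: port of embarkation (C = Cherbourg, Q = Queenstown, S = Southampton)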
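A quick way to check which columns are mostly missing (such as Cabin) is to count the missing values per column. The sketch below uses a tiny made-up DataFrame, not the real train.csv, and the "more than half missing" drop threshold is an assumption for illustration; on the real data the same isnull().sum() call applies to the loaded frame.

```python
import numpy as np
import pandas as pd

# Hypothetical toy rows standing in for train.csv (values are made up)
df = pd.DataFrame({
    "PassengerId": [1, 2, 3, 4],
    "Age": [22.0, np.nan, 26.0, 35.0],
    "Cabin": [np.nan, "C85", np.nan, np.nan],
    "Embarked": ["S", "C", "S", np.nan],
})

# Number of missing values in each column
missing = df.isnull().sum()
print(missing)

# Drop columns where more than half of the values are missing (assumed threshold)
to_drop = missing[missing > len(df) / 2].index.tolist()
df = df.drop(columns=to_drop)
print(df.columns.tolist())  # → ['PassengerId', 'Age', 'Embarked']
```

Age and Embarked are only partly missing, so they are better filled than dropped, which is what the preprocessing below does.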

II. Data preprocessing

1. Import the required packages

import pandas as pa

import numpy as np

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier

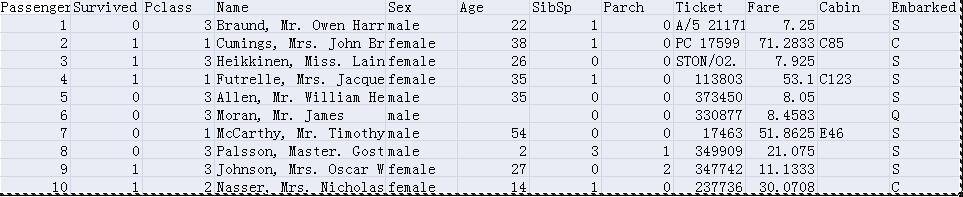

from sklearn.cross_validation import KFold2.观察数据的前几行

filename = "train.csv"
titanic = pa.read_csv(filename)
titanic.head()

Output:

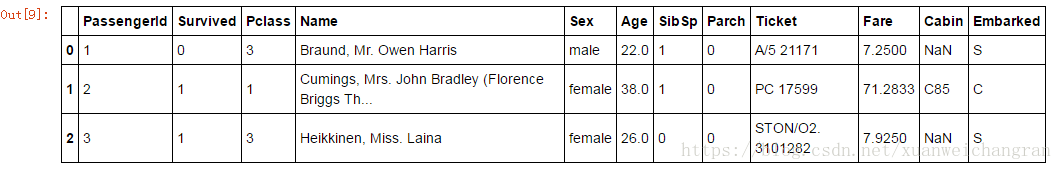

3. Look at simple summary statistics of the data

print(titanic.describe())

Output:

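| | PassengerId | Survived | Pclass | Age | SibSp | Parch | Fare |
|---|---|---|---|---|---|---|---|
| count | 891.000000 | 891.000000 | 891.000000 | 714.000000 | 891.000000 | 891.000000 | 891.000000 |
| mean | 446.000000 | 0.383838 | 2.308642 | 29.699118 | 0.523008 | 0.381594 | 32.204208 |
| std | 257.353842 | 0.486592 | 0.836071 | 14.526497 | 1.102743 | 0.806057 | 49.693429 |
| min | 1.000000 | 0.000000 | 1.000000 | 0.420000 | 0.000000 | 0.000000 | 0.000000 |
| 25% | 223.500000 | 0.000000 | 2.000000 | NaN | 0.000000 | 0.000000 | 7.910400 |
| 50% | 446.000000 | 0.000000 | 3.000000 | NaN | 0.000000 | 0.000000 | 14.454200 |
| 75% | 668.500000 | 1.000000 | 3.000000 | NaN | 1.000000 | 0.000000 | 31.000000 |
| max | 891.000000 | 1.000000 | 3.000000 | 80.000000 | 8.000000 | 6.000000 | 512.329200 |

- The Age column has only 714 non-missing values, while every other column has 891, so the missing ages need to be filled in. The NaN quartiles for Age are another symptom of those missing values in the pandas version used here.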
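Filling with the median rather than the mean keeps a few extreme ages from pulling the fill value up. A minimal sketch on made-up ages (one missing value, one large value), showing the non-missing count before and after fillna:

```python
import numpy as np
import pandas as pd

# Made-up ages: one missing value and one large value
age = pd.Series([22.0, 38.0, np.nan, 35.0, 80.0])

print(age.count())   # → 4 (count() ignores NaN)
print(age.mean())    # → 43.75, pulled up by the 80
print(age.median())  # → 36.5

# Replace every missing entry with the median of the observed values
filled = age.fillna(age.median())
print(filled.tolist())  # → [22.0, 38.0, 36.5, 35.0, 80.0]
print(filled.count())   # → 5
```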

titanic["Age"] = titanic["Age"].fillna(titanic["Age"].median())
print(titanic.describe())

Output:

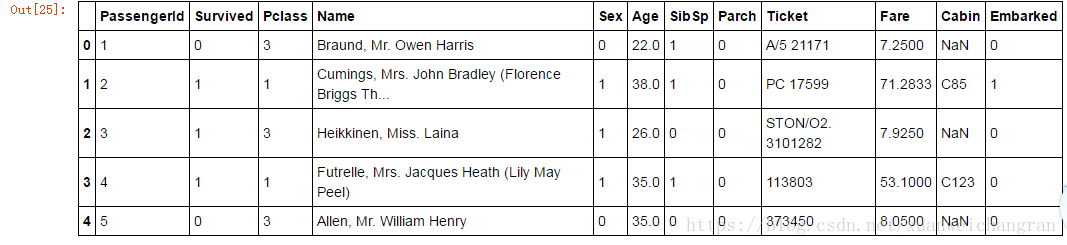

| | PassengerId | Survived | Pclass | Age | SibSp | Parch | Fare |
|---|---|---|---|---|---|---|---|
| count | 891.000000 | 891.000000 | 891.000000 | 891.000000 | 891.000000 | 891.000000 | 891.000000 |
| mean | 446.000000 | 0.383838 | 2.308642 | 29.361582 | 0.523008 | 0.381594 | 32.204208 |
| std | 257.353842 | 0.486592 | 0.836071 | 13.019697 | 1.102743 | 0.806057 | 49.693429 |
| min | 1.000000 | 0.000000 | 1.000000 | 0.420000 | 0.000000 | 0.000000 | 0.000000 |
| 25% | 223.500000 | 0.000000 | 2.000000 | 22.000000 | 0.000000 | 0.000000 | 7.910400 |
| 50% | 446.000000 | 0.000000 | 3.000000 | 28.000000 | 0.000000 | 0.000000 | 14.454200 |
| 75% | 668.500000 | 1.000000 | 3.000000 | 35.000000 | 1.000000 | 0.000000 | 31.000000 |
| max | 891.000000 | 1.000000 | 3.000000 | 80.000000 | 8.000000 | 6.000000 | 512.329200 |

- Convert string values to int/float values

1) First, check which distinct strings each column contains:

print(titanic["Sex"].unique())
print(titanic["Embarked"].unique())

Output:

['male' 'female']
['S' 'C' 'Q' nan]

2) Then replace each string with a corresponding integer code:

titanic["Sex"] = titanic["Sex"].map({"male": 0, "female": 1})
titanic["Embarked"] = titanic["Embarked"].map({"S": 0, "C": 1, "Q": 2})  # the missing value stays NaN
titanic.head()

Result: the strings were replaced successfully.
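The unique() output shows that Embarked also contains a missing value (nan), which an integer mapping leaves as NaN. The classification code in section III fills it with "S" first. A small sketch, on made-up rows, of choosing that fill value programmatically as the most frequent port and then encoding both columns with Series.map:

```python
import numpy as np
import pandas as pd

# Made-up rows; Embarked has one missing value, like the real column
df = pd.DataFrame({
    "Sex": ["male", "female", "female", "male", "male"],
    "Embarked": ["S", "C", np.nan, "S", "Q"],
})

# Most frequent port among the observed values (mode() ignores NaN)
most_common = df["Embarked"].mode()[0]
df["Embarked"] = df["Embarked"].fillna(most_common)

# Encode categories as integers; any string not in the dict would become NaN
df["Sex"] = df["Sex"].map({"male": 0, "female": 1})
df["Embarked"] = df["Embarked"].map({"S": 0, "C": 1, "Q": 2})
print(df)
```

Filling before encoding keeps the column free of NaN, which matters for models that cannot handle missing inputs.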

III. Classification

def data_proprocess(filename="train.csv"):
    import pandas as pa
    titanic = pa.read_csv(filename)
    # Fill missing ages with the median age
    titanic["Age"] = titanic["Age"].fillna(titanic["Age"].median())
    # test.csv also has a missing Fare value; fill it the same way
    titanic["Fare"] = titanic["Fare"].fillna(titanic["Fare"].median())
    # Fill the missing port of embarkation with "S" (Southampton), the most common port
    titanic["Embarked"] = titanic["Embarked"].fillna("S")
    # Encode the string columns as integers
    titanic["Sex"] = titanic["Sex"].map({"male": 0, "female": 1})
    titanic["Embarked"] = titanic["Embarked"].map({"S": 0, "C": 1, "Q": 2})
    return titanic

def classify_LinearRegression(titanic):
    import numpy as np
    from sklearn.model_selection import KFold
    from sklearn.linear_model import LinearRegression
    predictors = ["Pclass", "Sex", "Age", "SibSp", "Parch", "Fare", "Embarked"]  # features
    alg = LinearRegression()  # linear regression
    kf = KFold(n_splits=3)  # 3-fold cross-validation, unshuffled
    predictions = []
    for train, test in kf.split(titanic):
        train_predictors = titanic[predictors].iloc[train, :]
        train_target = titanic["Survived"].iloc[train]
        alg.fit(train_predictors, train_target)
        test_predictions = alg.predict(titanic[predictors].iloc[test, :])
        predictions.append(test_predictions)
    # Unshuffled folds come in row order, so the concatenation lines up with the rows
    predictions = np.concatenate(predictions, axis=0)
    # Threshold the regression output at 0.5 to get class labels
    predictions[predictions > 0.5] = 1
    predictions[predictions <= 0.5] = 0
    # Accuracy = fraction of predictions that match the true label
    accuracy = np.mean(predictions == titanic["Survived"].values)
    return accuracy

def classify_LogisticRegression(titanic):
    from sklearn.model_selection import cross_val_score
    from sklearn.linear_model import LogisticRegression
    predictors = ["Pclass", "Sex", "Age", "SibSp", "Parch", "Fare", "Embarked"]  # features
    # liblinear was the default solver before scikit-learn 0.22; set it explicitly for comparable results
    alg = LogisticRegression(solver="liblinear", random_state=1)
    scores = cross_val_score(alg, titanic[predictors], titanic["Survived"], cv=3)
    return scores.mean()

print("LinearRegression Classification result is :")
print(classify_LinearRegression(data_proprocess()))
print("LogisticRegression Classification result is :")
print(classify_LogisticRegression(data_proprocess()))

Output:

LinearRegression Classification result is :

0.261503928171

LogisticRegression Classification result is :

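0.787878787879

From these results, logistic regression appears to be the more accurate classifier for this problem. Be careful with the linear-regression figure, though: 0.2615 comes from computing accuracy as sum(predictions[predictions == titanic['Survived']])/len(predictions), which adds up the predicted values at the positions where prediction and label agree. Correctly predicted deaths contribute 0 to that sum, so it counts only correctly predicted survivors (0.2615 × 891 ≈ 233 passengers). The actual accuracy is the fraction of matching positions, np.mean(predictions == titanic['Survived'].values), so the linear-regression score should be recomputed before comparing the two models.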
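A toy comparison, on made-up arrays, of the sum-based formula that produced the 0.2615 above and the usual accuracy (fraction of matching positions):

```python
import numpy as np

# Made-up thresholded predictions and true labels
predictions = np.array([1, 0, 0, 1, 0, 1])
labels = np.array([1, 0, 1, 1, 0, 0])

# Sum-based formula: adds the *predicted values* where they match the labels,
# so correct 0 predictions add nothing and only correct 1s are counted
buggy = sum(predictions[predictions == labels]) / len(predictions)

# Accuracy: share of positions where prediction equals label
correct = np.mean(predictions == labels)

print(buggy)    # 2 correct survivors / 6 ≈ 0.333
print(correct)  # 4 matches / 6 ≈ 0.667
```

Four of the six predictions are right, but the sum-based version only sees the two correct 1s.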

IV. Using a random forest to improve accuracy and submitting the results to Kaggle

def classify_RandomForestClassifier(train_data, test_data):
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import cross_val_score
    import pandas as pa
    import numpy as np
    predictors = ["Pclass", "Sex", "Age", "SibSp", "Parch", "Fare", "Embarked"]
    clf = RandomForestClassifier(n_estimators=10, max_depth=None, min_samples_split=2, random_state=0)
    # Estimate accuracy with 3-fold cross-validation on the training set
    scores = cross_val_score(clf, train_data[predictors], train_data["Survived"], cv=3)
    # Refit on the full training set, then predict the test set
    clf.fit(train_data[predictors], train_data["Survived"])
    predict_result = clf.predict(test_data[predictors])
    # Kaggle expects exactly two columns: PassengerId and Survived
    result = pa.DataFrame({"PassengerId": test_data["PassengerId"].to_numpy(),
                           "Survived": predict_result.astype(np.int32)})
    result.to_csv("logistic_regression_predictions.csv", index=False)
    return scores.mean()

print("train")
titanic_train = data_proprocess("train.csv")
print("test")
titanic_test = data_proprocess("test.csv")
print(classify_RandomForestClassifier(titanic_train, titanic_test))
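The random-forest step scores the model with cross-validation, refits it on all training rows, and writes a two-column PassengerId/Survived file for Kaggle. A minimal sketch of that same flow on synthetic data so it runs without train.csv/test.csv; the three-feature subset, the labeling rule, and the PassengerId values are all made up for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic stand-ins for the preprocessed train/test frames (NOT real Titanic data)
rng = np.random.default_rng(0)
n = 60
train = pd.DataFrame({
    "PassengerId": np.arange(1, n + 1),
    "Pclass": rng.integers(1, 4, n),
    "Sex": rng.integers(0, 2, n),
    "Age": rng.uniform(1, 70, n),
})
# Hypothetical labeling rule so the forest has a pattern to learn
train["Survived"] = (train["Sex"] == 1).astype(int)

test = train.drop(columns="Survived").head(10).copy()
test["PassengerId"] = np.arange(892, 902)

predictors = ["Pclass", "Sex", "Age"]  # assumed subset of the article's features
clf = RandomForestClassifier(n_estimators=10, random_state=0)

# Cross-validated accuracy estimate on the training data
scores = cross_val_score(clf, train[predictors], train["Survived"], cv=3)

# Refit on every training row, then predict the test rows
clf.fit(train[predictors], train["Survived"])
submission = pd.DataFrame({
    "PassengerId": test["PassengerId"].to_numpy(),
    "Survived": clf.predict(test[predictors]).astype(np.int32),
})

# to_csv without a path returns the CSV text instead of writing a file
csv_text = submission.to_csv(index=False)
print(scores.mean())
print(csv_text.splitlines()[0])
```

Returning the text instead of writing a file keeps the sketch side-effect free; passing a filename, as the article does, writes the file Kaggle accepts.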

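This article presents a survival prediction model based on the Titanic dataset. The data is preprocessed, including filling missing values and encoding categorical variables, and linear regression, logistic regression, and random forest are then applied to the classification task. The results indicate that logistic regression and random forest give better prediction accuracy.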
