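Purpose: a data collection component gathers memory, CPU, I/O, and other usage metrics from each server and emails users when a threshold is reached; probes check whether online services are healthy and email users when a service fails.

Notes: 1. This article is a hands-on guide and assumes basic Linux and Docker knowledge.

2. The deployment runs on intranet servers, and the intranet Docker image registry is x.x.x.x:5000, so the images must first be pushed to that private registry. If your server can reach Docker Hub, drop the x.x.x.x:5000 prefix from the image names.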
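Each image used in this guide needs the same pull, tag, and push sequence. As a minimal sketch (the function names `mirror_image` and `mirror_all` and the `DRY_RUN` switch are illustrative assumptions, not part of the original setup), the sequence could be wrapped in a small helper that only prints the commands by default so they can be reviewed first:

```shell
# Mirror a public image into a private registry: pull, tag, push.
# With DRY_RUN=1 (the default in this sketch) the commands are only printed.
mirror_image() (
  registry="$1"   # e.g. x.x.x.x:5000
  image="$2"      # e.g. prom/prometheus
  run=""
  if [ "${DRY_RUN:-1}" = "1" ]; then
    run="echo"
  fi
  $run docker pull "$image" &&
  $run docker tag "$image" "$registry/$image" &&
  $run docker push "$registry/$image"
)

# Handle every image named in this guide.
mirror_all() (
  for image in prom/prometheus grafana/grafana prom/node-exporter \
               prom/blackbox-exporter prom/alertmanager; do
    mirror_image "$1" "$image" || exit 1
  done
)
```

Running `mirror_all x.x.x.x:5000` prints the commands; `DRY_RUN=0 mirror_all x.x.x.x:5000` would execute them on a machine that can reach both Docker Hub and the registry.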

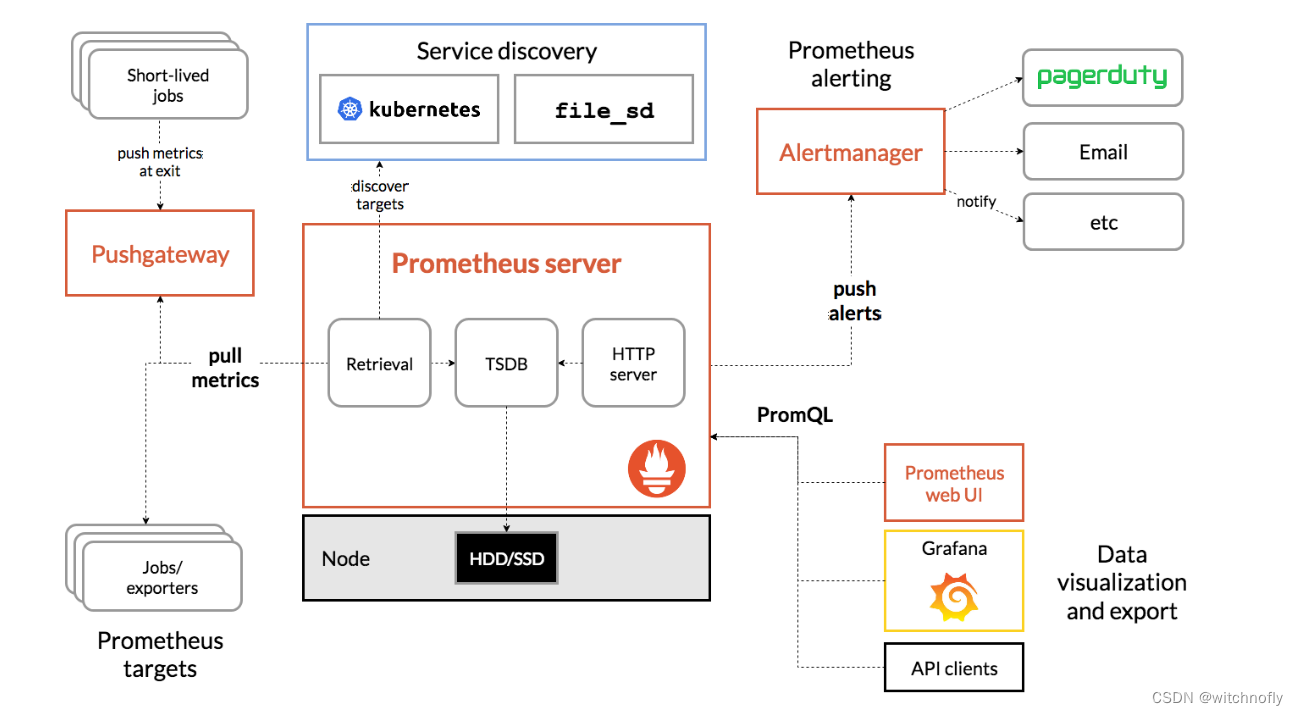

附官网prometheus架构图,方便理解:

本次部署采用docker部署,基本架构:

| 服务器 | 部署项目 | 用途 |

| x.x.x.192:9090 | prometheus server | 用于收集和存储时间序列数据 |

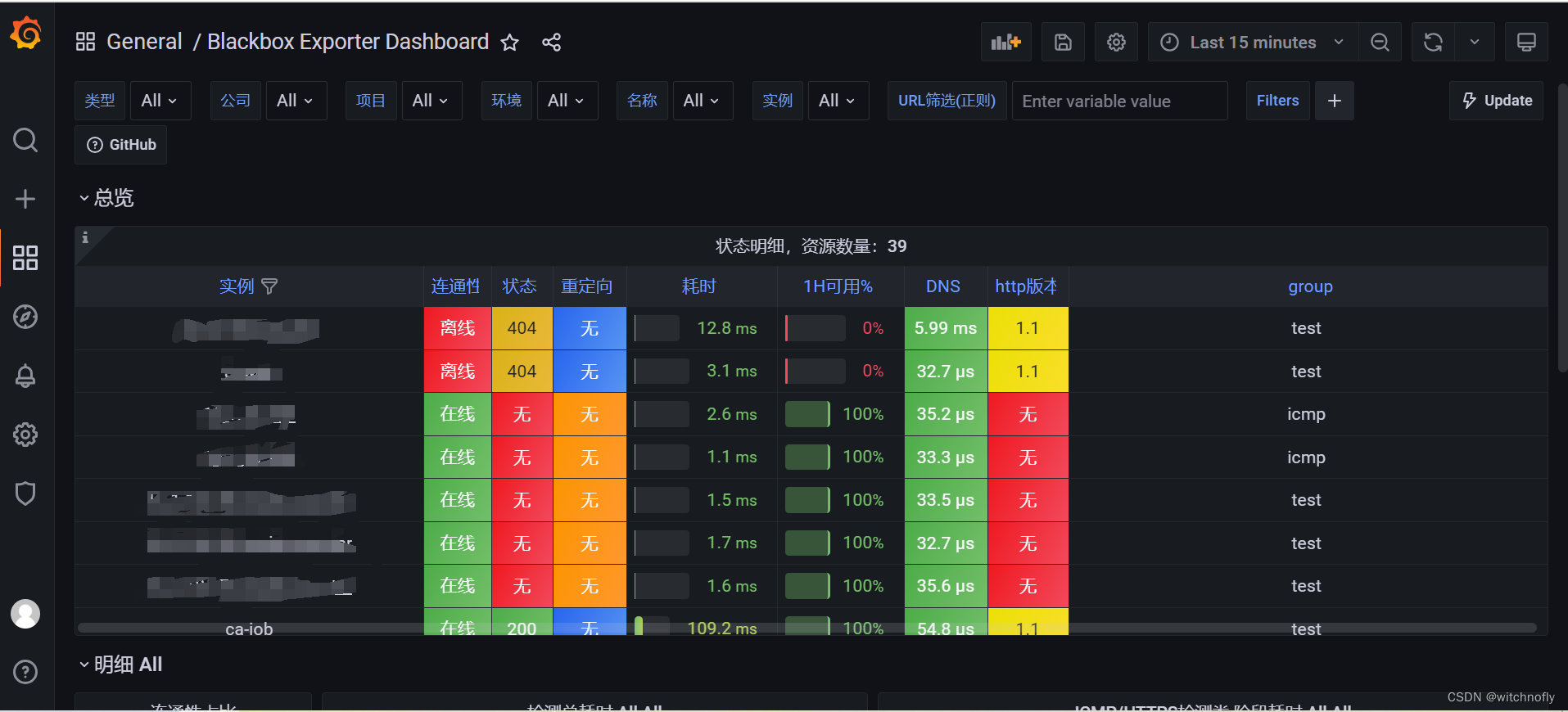

| x.x.x.192:3000 | grafana | 监控仪表盘,可视化监控数据 |

| x.x.x.192:9115 | blackbox-exporter | 对HTTP.HTTPS,TCP,ICMP,DNZ的探测 |

| x.x.x.192:9093 | alertmanager | 发出报警,常见的接收方式有:电子邮件,微信,钉钉, slack等。 |

| x.x.x.104:9200等目标服务器 | node-exporter | 监控服务器CPU、内存、磁盘、I/O等信息 |

| x.x.x.104:18080等目标服务器 | cadvisor | Docker容器监控 |
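Once the components in the table are running, a quick way to confirm that each one is reachable is to request a known endpoint. The sketch below is only an illustration: the helper names `component_urls` and `check_components` are hypothetical, and the ports are the ones from the table. `/-/healthy` (Prometheus, Alertmanager), `/api/health` (Grafana), and `/metrics` (the exporters and cadvisor) are endpoints those projects expose.

```shell
# Print "name url" pairs for the components in the architecture table.
# $1 = monitoring server (x.x.x.192 in the table), $2 = one target server.
component_urls() {
  printf 'prometheus http://%s:9090/-/healthy\n' "$1"
  printf 'grafana http://%s:3000/api/health\n' "$1"
  printf 'blackbox-exporter http://%s:9115/metrics\n' "$1"
  printf 'alertmanager http://%s:9093/-/healthy\n' "$1"
  printf 'node-exporter http://%s:9200/metrics\n' "$2"
  printf 'cadvisor http://%s:18080/metrics\n' "$2"
}

# Request each URL and report OK or FAIL (needs curl and network access).
check_components() {
  component_urls "$1" "$2" | while read -r name url; do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "OK   $name"
    else
      echo "FAIL $name ($url)"
    fi
  done
}
```

Calling `check_components x.x.x.192 x.x.x.104` from a machine inside the network gives one status line per component.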

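1 prometheus server deployment

    # The servers sit behind a gateway and cannot reach the internet, so pull the image locally and push it to the intranet registry.
    docker pull prom/prometheus
    docker tag prom/prometheus x.x.x.x:5000/prom/prometheus
    docker push x.x.x.x:5000/prom/prometheus

    # Start a temporary Prometheus container first so that its default files can be copied out.
    docker run -it -d --name=prometheus x.x.x.x:5000/prom/prometheus

    # Copy /prometheus and the default config out of the container to the server for data persistence.
    docker cp prometheus:/prometheus ./data/
    docker cp prometheus:/etc/prometheus/prometheus.yml ./
    chmod 777 -R data
    chmod 777 prometheus.yml

    # Write the startup script (its contents are listed after these commands).
    vi run.sh

    # Run the script.
    chmod 755 run.sh && ./run.sh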
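After run.sh starts the container, Prometheus needs a moment before it answers requests. A small retry helper makes that easy to script. The sketch below is generic, and the name `wait_until` is an illustrative choice rather than anything from the original setup; Prometheus exposes a `/-/ready` endpoint that succeeds once it can serve traffic.

```shell
# Retry a command until it succeeds or the attempts run out.
# usage: wait_until <attempts> <delay-seconds> <command> [args...]
wait_until() (
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Run the command quietly; stop as soon as it succeeds.
    if "$@" >/dev/null 2>&1; then
      exit 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  exit 1
)

# Example (run where the server is reachable):
#   wait_until 30 2 curl -fsS http://x.x.x.192:9090/-/ready && echo "Prometheus is ready"
```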

#!/bin/bash

DIR="$( cd "$( dirname "$0" )" && pwd )"

NAME="prometheus"

IMAGE="x.x.x.x:5000/prom/prometheus"

docker pull $IMAGE

docker rm -f $NAME

docker run -d -p 9090:9090 \

--restart=always \

-v $DIR/prometheus.yml:/etc/prometheus/prometheus.yml \

-v /etc/localtime:/etc/localtime \

-v $DIR/targets:/etc/prometheus/targets \

-v $DIR/data:/prometheus \

--name $NAME \

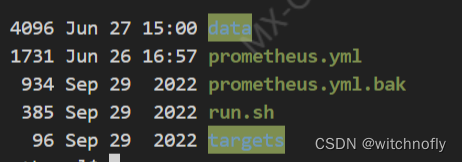

$IMAGE目录截图

data目录存储数据

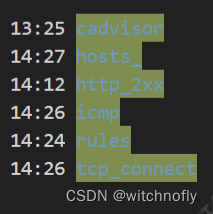

targets目录是配置文件:
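The files under targets use Prometheus's file-based service discovery format: a YAML list of entries, each with `targets` and optional `labels`. As a rough sketch (the helper names `init_targets_dir` and `write_target_entry` are made up for illustration; the subdirectory names are the ones that the prometheus.yml in this article reads from), the layout and a single entry could be generated like this:

```shell
# Create the subdirectories that prometheus.yml reads target and rule files from.
init_targets_dir() (
  base="$1"
  for sub in hosts_ http_2xx icmp tcp_connect rules; do
    mkdir -p "$base/$sub" || exit 1
  done
)

# Append one file_sd entry (a target plus instance/group labels) to a file.
# usage: write_target_entry <file> <target> <instance-label> <group-label>
write_target_entry() {
  cat >> "$1" <<EOF
- targets:
    - '$2'
  labels:
    instance: '$3'
    group: '$4'
EOF
}
```

For example, `init_targets_dir ./targets` followed by `write_target_entry ./targets/icmp/icmp.yml x.x.x.192 x.x.x.192 test` produces an entry in the same shape as the hand-written files in this article.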

prometheus.yml configuration:

    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

    alerting:
      alertmanagers:
        - static_configs:
            - targets:
                - x.x.x.192:9093

    # Alert rules (these drive the email alerts)
    rule_files:
      - targets/rules/*.yml

    scrape_configs:
      # Resource monitoring
      - job_name: 'prometheus'
        file_sd_configs:
          - files:
              - targets/hosts_/*.yml
            refresh_interval: 5m

      # HTTP monitoring
      - job_name: 'http_status'
        metrics_path: /probe
        params:
          module: [http_2xx]
        file_sd_configs:
          - files:
              - targets/http_2xx/*.yml
            refresh_interval: 5m
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: x.x.x.192:9115

      # Server connectivity monitoring
      - job_name: 'ping_status'
        metrics_path: /probe
        params:
          module: [icmp]
        file_sd_configs:
          - files:
              - targets/icmp/*.yml
            refresh_interval: 5m
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: x.x.x.192:9115

      # Port monitoring
      - job_name: 'port_status'
        metrics_path: /probe
        params:
          module: [tcp_connect]
        file_sd_configs:
          - files:
              - targets/tcp_connect/*.yml
            refresh_interval: 5m
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: x.x.x.192:9115

Resource monitoring configuration:

    # Configure resource monitoring
    mkdir -p ./targets/hosts_
    vi ./targets/hosts_/host.yml

targets/hosts_/host.yml configuration:

    - targets:
        - 'localhost:9090'
        - 'x.x.x.1:9200'
        - 'x.x.x.2:9200'
        - ...

Port monitoring configuration:

    # Configure port connectivity checks
    mkdir -p ./targets/tcp_connect
    vi ./targets/tcp_connect/tcp_connect.yml

targets/tcp_connect/tcp_connect.yml:

    - targets:
        - 'x.x.x.104:9200'
      labels:
        instance: 'x.x.x.104 node exporter'
        group: 'test'
    - targets:
        - 'x.x.x.105:9200'
      labels:
        instance: 'x.x.x.105 node exporter'
        group: 'test'
    ...

HTTP monitoring configuration:

    # Configure HTTP checks
    mkdir -p ./targets/http_2xx
    vi ./targets/http_2xx/http_2xx.yml

targets/http_2xx/http_2xx.yml:

    - targets:
        - 'http://xxx/jobqueue/swagger-ui.html'
      labels:
        instance: 'jobqueue'
        group: 'test'
    - targets:
        - 'http://xxx/jobexecutor/swagger-ui.html'
      labels:
        instance: 'jobexecutor'
        group: 'test'
    ...

Host connectivity configuration:

    # Configure host connectivity (ICMP) checks
    mkdir -p ./targets/icmp
    vi ./targets/icmp/icmp.yml

targets/icmp/icmp.yml:

    - targets:
        - 'x.x.x.192'
      labels:
        instance: 'x.x.x.192'
        group: 'test'
    - targets:
        - 'x.x.x.143'
      labels:
        instance: 'x.x.x.143'
        group: 'test'
    ...

Alert rule configuration:

    # Configure alert rules
    mkdir -p ./targets/rules
    vi ./targets/rules/node-up.yml

targets/rules/node-up.yml:

    groups:
      - name: Host
        rules:
          - alert: HostDown
            expr: up == 0
            for: 1m
            labels:
              severity: high
            annotations:
              summary: "{{$labels.instance}}: server is down"
              description: "{{$labels.instance}}: target has been unreachable for more than 1 minute"
          - alert: HighCpuUsage
            expr: 100 * (1 - avg(irate(node_cpu_seconds_total{mode="idle"}[2m])) by(instance)) > 90
            for: 1m
            labels:
              severity: middle
            annotations:
              summary: "{{$labels.instance}}: High CPU Usage Detected"
              description: "{{$labels.instance}}: CPU usage is {{$value}}, above 90%"
          - alert: HighMemoryUsage
            expr: (node_memory_MemTotal_bytes - node_memory_MemAvailable_bytes) / node_memory_MemTotal_bytes * 100 > 90
            for: 1m
            labels:
              severity: high
            annotations:
              summary: "{{$labels.instance}}: High Memory Usage Detected"
              description: "{{$labels.instance}}: Memory Usage is {{ $value }}, above 90%"
          - alert: HighDiskUsage
            expr: 100 * (node_filesystem_size_bytes{fstype=~"xfs|ext4"} - node_filesystem_avail_bytes) / node_filesystem_size_bytes > 90
            for: 1m
            labels:
              severity: middle
            annotations:
              summary: "{{$labels.instance}}: High Disk Usage Detected"
              description: "{{$labels.instance}}, mountpoint {{$labels.mountpoint}}: Disk Usage is {{ $value }}, above 90%"
          - alert: HighDiskIO
            expr: avg(irate(node_disk_io_time_seconds_total[1m])) by(instance) * 100 > 60
            for: 1m
            labels:
              severity: high
            annotations:
              summary: "{{$labels.instance}}: disk I/O utilization is too high"
              description: "{{$labels.instance}}: disk I/O utilization is above 60% (current value: {{$value}})"
          - alert: HighNetworkTraffic
            expr: ((sum(rate (node_network_receive_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr*|lo*'}[5m])) by (instance)) / 100) > 102400
            for: 1m
            labels:
              severity: high
            annotations:
              summary: "{{$labels.instance}}: inbound network traffic is too high"
              description: "{{$labels.instance}}: inbound network traffic is above the threshold (current value: {{$value}})"
          - alert: HttpStatusNot200
            expr: probe_http_status_code{job="http_status"} != 200
            for: 1m
            labels:
              severity: high
            annotations:
              summary: "{{$labels.instance}}: non-200 HTTP status code"
              description: "{{$labels.instance}}: the probe returned a non-200 HTTP status code"
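The last rule depends on `probe_http_status_code`, which blackbox-exporter returns when Prometheus calls its `/probe` endpoint with `module` and `target` query parameters; that is what the `relabel_configs` in prometheus.yml arrange. When a probe alert fires unexpectedly, requesting the same URL by hand shows the raw probe result. A small sketch, with the helper name `probe_url` as an assumption:

```shell
# Build the URL that Prometheus effectively requests for a blackbox probe.
# usage: probe_url <exporter host:port> <module> <target>
# Note: targets containing '&' or '#' would need URL-encoding first.
probe_url() {
  printf 'http://%s/probe?module=%s&target=%s\n' "$1" "$2" "$3"
}

# Example (run where the exporter is reachable):
#   curl -s "$(probe_url x.x.x.192:9115 http_2xx http://xxx/jobqueue/swagger-ui.html)" | grep '^probe_'
```

The `probe_success` and `probe_http_status_code` lines in the response are usually enough to tell whether the target or the probe module is at fault.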

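cadvisor

    # Configure cadvisor targets
    mkdir -p ./targets/cadvisor
    vi ./targets/cadvisor/cadvisor.yml

targets/cadvisor/cadvisor.yml:

    - targets:
        - 'x.x.x.x:18080'

Note that the prometheus.yml shown earlier has no scrape job that reads targets/cadvisor/*.yml; these targets are only scraped once such a job (or an extra files entry under an existing job) is added.

2 Grafana deployment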

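    # The servers sit behind a gateway and cannot reach the internet, so pull the image locally and push it to the intranet registry.
    docker pull grafana/grafana
    docker tag grafana/grafana x.x.x.x:5000/grafana/grafana
    docker push x.x.x.x:5000/grafana/grafana

    # Start a temporary Grafana container first so that its default files can be copied out.
    docker run -it -d --name=grafana x.x.x.x:5000/grafana/grafana

    # Copy /var/lib/grafana out of the container to the server for data persistence.
    docker cp grafana:/var/lib/grafana ./data/
    chmod 777 -R data

    # Write the startup script.
    vi run.sh

    # Run the script.
    chmod 755 run.sh && ./run.sh

run.sh script: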

    #!/bin/bash
    DIR="$( cd "$( dirname "$0" )" && pwd )"
    NAME="grafana"
    IMAGE="x.x.x.x:5000/grafana/grafana"

    docker pull $IMAGE
    docker rm -f $NAME
    docker run -d -i -p 3000:3000 \
      --name $NAME \
      -v "$DIR/data:/var/lib/grafana" \
      -v "/etc/localtime:/etc/localtime" \
      -e "GF_SERVER_ROOT_URL=http://grafana.server.name" \
      -e "GF_SECURITY_ADMIN_PASSWORD=admin" \
      $IMAGE

3 node-exporter deployment

    # Host port 9200 matches the node-exporter targets in the architecture table and the target files.
    docker run -d -p 9200:9100 \
      -v "/proc:/host/proc" \
      -v "/sys:/host/sys" \
      -v "/:/rootfs" \
      -v "/etc/localtime:/etc/localtime" \
      --restart=always \
      x.x.x.x:5000/prom/node-exporter \
      --path.procfs /host/proc \
      --path.sysfs /host/sys \
      --collector.filesystem.ignored-mount-points "^/(sys|proc|dev|host|etc)($|/)"

4 blackbox-exporter deployment

    docker run -id \
      --name blackbox-exporter \
      --restart=always \
      -p 9115:9115 \
      x.x.x.x:5000/prom/blackbox-exporter

5 alertmanager deployment

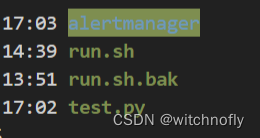

alertmanager目录与run.sh在同一个目录

DIR="$( cd "$( dirname "$0" )" && pwd )"

docker rm -f alertmanager

docker run -d \

-p 9093:9093 \

--name alertmanager \

--restart=always \

-v $DIR/alertmanager:/etc/alertmanager \

x.x.x.x:5000/prom/alertmanageralertmanager目录中是alertmanager.yml文件及template目录;template目录下存email.tmpl文件

alertmanager.yml

    # Global settings
    global:
      resolve_timeout: 5m # Timeout, default 5m
      # SMTP server used for email
      smtp_smarthost: 'mail.xxx.com.cn:25'
      smtp_from: 'xxx@xxx.com.cn'
      #smtp_auth_username: 'xxx@xxx.com.cn'
      #smtp_auth_password: 'xxx'
      #smtp_auth_password: ''
      #smtp_hello: ''
      smtp_require_tls: false

    # Notification templates
    templates:
      - 'template/*.tmpl' # Path

    # Routing
    route:
      group_by: ['alertname'] # Labels used to group alerts
      group_wait: 10s # How long to wait before sending the first notification for a new group
      group_interval: 10s # How long to wait before notifying about new alerts in an existing group
      repeat_interval: 4h # How long to wait before repeating a notification
      receiver: 'email' # Default receiver

    # Receivers
    receivers:
      - name: 'email' # Receiver name
        email_configs:
          - to: 'xxx@qq.com,xxx@xxx.com.cn' # Addresses that receive the alerts
            # html: '{{ template "email.to.html" . }}' # Template
            send_resolved: true

    # Alert inhibition
    #inhibit_rules:
    #  - source_match:
    #      severity: 'critical'
    #    target_match:
    #      severity: 'warning'
    #    equal: ['alertname', 'dev', 'instance']
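To confirm that email delivery works without waiting for a real incident, a hand-made alert can be posted to Alertmanager's v2 API (`POST /api/v2/alerts`). The sketch below is only an example under stated assumptions: the function names `test_alert_json` and `send_test_alert` and the label values are invented for the test, and the address is the placeholder used in this article.

```shell
# Print a JSON array with one test alert in the format /api/v2/alerts accepts.
# usage: test_alert_json <alertname> <instance>
test_alert_json() {
  printf '[{"labels":{"alertname":"%s","instance":"%s","severity":"high"},' "$1" "$2"
  printf '"annotations":{"summary":"test alert for %s"}}]\n' "$2"
}

# Post the test alert to Alertmanager (needs curl and network access).
# usage: send_test_alert <alertmanager host:port> <alertname> <instance>
send_test_alert() {
  test_alert_json "$2" "$3" |
    curl -fsS -X POST -H 'Content-Type: application/json' \
      --data-binary @- "http://$1/api/v2/alerts"
}
```

Running `send_test_alert x.x.x.192:9093 TestAlert x.x.x.104` should produce an email to the configured receiver shortly after `group_wait` elapses, provided the SMTP settings are correct.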

email.tmpl

    {{ define "email.to.html" }}
    {{ range .Alerts }}
    =========start==========<br>
    Alerting program: prometheus_alert <br>
    Severity: {{ .Labels.severity }} <br>
    Alert type: {{ .Labels.alertname }} <br>
    Affected host: {{ .Labels.instance }} <br>
    Summary: {{ .Annotations.summary }} <br>
    Details: {{ .Annotations.description }} <br>
    Triggered at: {{ .StartsAt.Format "2006-01-02 15:04:05" }} <br>
    =========end==========<br>
    {{ end }}
    {{ end }}

Grafana dashboard templates can be found at https://grafana.com/grafana/dashboards and imported directly.

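This article describes how to deploy a Prometheus monitoring system with Docker on intranet servers, covering Prometheus server, Grafana, node-exporter, blackbox-exporter, and alertmanager, to provide server resource monitoring, service status probing, and alerting on anomalies. It also covers configuration files such as prometheus.yml and alertmanager.yml, data persistence, and the startup scripts.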
