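Audio2Face part

1. Install Audio2Face and the local server.

2. Open the streaming player demo scene.

3. Under localhost/NVIDIA/Assets/Audio2face/blendshape_solve, find male_bs_arkit.usd and drag it into the scene.

4. Select male_bs_arkit.usd in the scene and switch to the A2F Data Convert tab.

5. Set the parameters.

6. After setting the parameters, click Set Up Blendshape Solve.

7. In the Omniverse management interface, use the settings entry on the Audio2Face item to open the Audio2Face install location.

8. In the Audio2Face install directory, edit the extension.toml file under exts\omni.audio2face.exporter\config and add the following at the very bottom: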

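    [python.pipapi]
    requirements=['python-osc']
    use_online_index=true

9. In the install directory, edit the facsSolver.py script under exts\omni.audio2face.exporter\omni\audio2face\exporter\scripts.

Import the OSC module:

    from pythonosc import udp_client

After the outWeight line, add the code that sends the data over UDP. Once it is in place, whenever audio drives the animation, the weights are sent out over UDP automatically.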

    outWeight = outWeight * (outWeight > 1.0e-9)
    # print(outWeight)

    # ARKit blendshape names, paired by index with the entries of outWeight
    blend = ["eyeBlinkLeft", "eyeLookDownLeft", "eyeLookInLeft", "eyeLookOutLeft", "eyeLookUpLeft", "eyeSquintLeft", "eyeWideLeft", "eyeBlinkRight", "eyeLookDownRight", "eyeLookInRight", "eyeLookOutRight", "eyeLookUpRight", "eyeSquintRight", "eyeWideRight", "jawForward", "jawLeft", "jawRight", "jawOpen", "mouthClose", "mouthFunnel", "mouthPucker", "mouthLeft", "mouthRight", "mouthSmileLeft", "mouthSmileRight", "mouthFrownLeft", "mouthFrownRight", "mouthDimpleLeft", "mouthDimpleRight", "mouthStretchLeft", "mouthStretchRight", "mouthRollLower", "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper", "mouthPressLeft", "mouthPressRight", "mouthLowerDownLeft", "mouthLowerDownRight", "mouthUpperUpLeft", "mouthUpperUpRight", "browDownLeft", "browDownRight", "browInnerUp", "browOuterUpLeft", "browOuterUpRight", "cheekPuff", "cheekSquintLeft", "cheekSquintRight", "noseSneerLeft", "noseSneerRight", "tongueOut"]

    # Send each weight as its own OSC message, addressed as /<blendshape name>
    client = udp_client.SimpleUDPClient('127.0.0.1', 5008)
    osc_array = outWeight.tolist()
    for name, weight in zip(blend, osc_array):
        client.send_message('/' + name, weight)

    return outWeight

10. Run test_client.py from the exts\omni.audio2face.player\omni\audio2face\player\scripts\streaming_server directory to stream audio to the Audio2Face application.

    python test_client.py
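Before wiring up UE5, it can help to confirm that the weights really leave Audio2Face on port 5008. The following is a minimal standard-library sketch, not part of Audio2Face or python-osc. It assumes each UDP packet holds a single OSC message with one float32 argument (type tag `,f`), which is what the sender above is expected to produce for Python floats. The function names `build_osc_float_message`, `parse_osc_float_message`, and `listen` are hypothetical.

```python
import socket
import struct
from typing import Tuple


def _pad(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def _read_padded_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read an OSC string at a 4-byte-aligned offset; return it and the next aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    return text, (end + 4) & ~3


def build_osc_float_message(address: str, value: float) -> bytes:
    """Build a one-float OSC message (only used here to exercise the parser)."""
    return _pad(address) + _pad(",f") + struct.pack(">f", value)


def parse_osc_float_message(data: bytes) -> Tuple[str, float]:
    """Decode a one-float OSC message into (address, value)."""
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    if type_tags != ",f":  # assumption: exactly one float32 argument per message
        raise ValueError("unsupported type tags: %r" % type_tags)
    (value,) = struct.unpack_from(">f", data, offset)
    return address, value


def listen(host: str = "127.0.0.1", port: int = 5008) -> None:
    """Print every blendshape weight received on the UDP port until interrupted."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            data, _ = sock.recvfrom(4096)
            try:
                print(parse_osc_float_message(data))
            except ValueError as exc:
                print("skipped packet:", exc)
```

Calling `listen()` in a separate terminal and then running test_client.py should print pairs such as the address `/jawOpen` with its weight. Stop the listener before starting UE5, since both would otherwise try to bind the same port.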

UE5 part

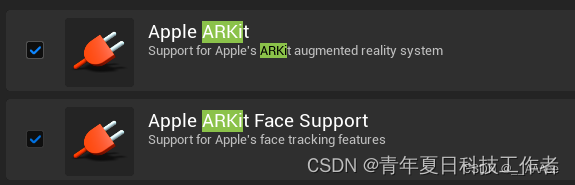

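1. Enable the required plugins.

2. Modify the digital human blueprint.

3. Modify face_AnimBP.

Swap the positions of the Modify Curve node and arkit_mapping so that the graph matches the picture.

Running

Start Audio2Face and the UE5 digital human application.

Run test_client.py to drive Audio2Face. Audio2Face generates the blendshape animation and sends it over the UDP port to UE5, where it drives the lip-sync animation of the UE5 digital human character.

For a complete pipeline, an external program can listen for live-stream comments (danmaku), turn them into an audio file with Baidu's text-to-speech tool, and then run test_client on that file.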
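The comment-to-speech pipeline described above could be glued together roughly as follows. This is a sketch under stated assumptions, not the author's implementation: `synthesize` is a hypothetical hook standing in for whatever text-to-speech call is used and is expected to return the path of the generated audio file, and passing that path to test_client.py as its first argument is also an assumption, so check the script's own usage before relying on it.

```python
import subprocess
import sys
from typing import Callable, Iterable, List


def build_client_command(wav_path: str, script: str = "test_client.py") -> List[str]:
    """Build the command line for one streaming run.

    Assumption: the script accepts the audio file path as its first argument.
    """
    return [sys.executable, script, wav_path]


def _run_checked(cmd: List[str]) -> None:
    """Run the command and raise if it exits with a non-zero status."""
    subprocess.run(cmd, check=True)


def drive_from_comments(
    comments: Iterable[str],
    synthesize: Callable[[str], str],
    run: Callable[[List[str]], object] = _run_checked,
) -> int:
    """Turn each non-empty comment into audio and stream it; return how many were processed."""
    processed = 0
    for text in comments:
        text = text.strip()
        if not text:
            continue  # ignore blank comments
        wav_path = synthesize(text)  # hypothetical TTS hook, returns an audio file path
        run(build_client_command(wav_path))
        processed += 1
    return processed
```

Comments are handled one at a time, so each clip finishes streaming before the next one starts. The `run` parameter is injectable so the loop can be tried out without Audio2Face running.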

Packages to install with pip

1. pip install protobuf==3.20.* (Note: only versions up to 3.20.* are currently supported; newer versions will fail.)

2. pip install grpcio

3. pip install soundfile
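A quick way to check the environment before running test_client.py is to read the installed versions with the standard library. This is a minimal sketch. The helper names `is_supported_protobuf` and `installed_versions` are hypothetical, and the version rule simply encodes the note above (3.20.* or lower).

```python
from importlib import metadata
from typing import Dict, Optional, Sequence


def is_supported_protobuf(version: str) -> bool:
    """Return True if the version is 3.20.* or lower, per the note above."""
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) <= (3, 20)


def installed_versions(
    packages: Sequence[str] = ("protobuf", "grpcio", "soundfile"),
) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    report: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None
    return report
```

For example, `installed_versions()["protobuf"]` can be passed to `is_supported_protobuf` once it is known not to be `None`.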

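This article describes how to install and configure Audio2Face: setting up the local server, importing the model, setting the parameters, modifying the Python script so that the animation data is sent over UDP, and integrating with a digital human character in UE5. It also covers the required Python packages and how the audio is streamed.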
