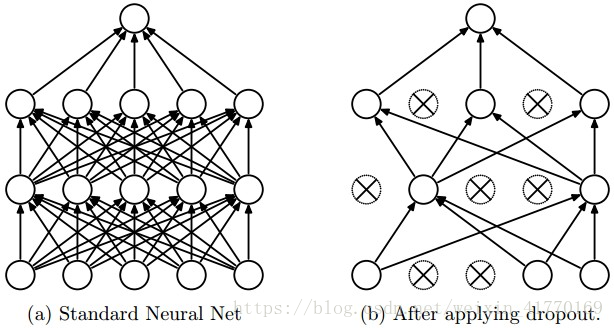

1. How dropout works

Paper: Dropout: A Simple Way to Prevent Neural Networks from Overfitting

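Dropout is a regularization technique for preventing overfitting. During training, units are randomly dropped (their outputs temporarily set to zero, along with their connections), which prevents units from co-adapting too much.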
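To make the idea concrete, here is a minimal NumPy sketch of dropout as formulated in the paper, assuming a keep probability `p`: during training each unit is kept with probability `p` (a Bernoulli mask), and at test time every unit is used but scaled by `p`. NumPy, the function names `dropout_train`/`dropout_test`, and the sample activations are illustrative assumptions, not from the original post. The paper scales a unit's outgoing weights by `p` at test time; scaling the activation, as done here, feeds the same values into the next layer.

```python
import numpy as np

def dropout_train(h, p, rng):
    """Training pass (hypothetical helper): keep each unit with probability p, zero out the rest."""
    mask = rng.random(h.shape) < p  # Bernoulli(p) mask; True means the unit is kept
    return h * mask

def dropout_test(h, p):
    """Test pass (hypothetical helper): use every unit, scaled by p to match its expected training output."""
    return h * p

rng = np.random.default_rng(0)
h = np.array([[1.0, 2.0, 3.0, 4.0]])  # hypothetical hidden-layer activations
print(dropout_train(h, 0.5, rng))     # a random subset of entries is zeroed
print(dropout_test(h, 0.5))           # every entry halved
```

Averaged over many training passes, a unit's output equals `p` times its value, which is exactly what the test pass produces deterministically.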

2. TensorFlow implementation

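`tf.nn.dropout(hidden_layer, keep_prob)` takes two arguments (this is the TensorFlow 1.x signature; TensorFlow 2.x replaces keep_prob with `rate = 1 - keep_prob`):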

(1) hidden_layer: the tensor to apply dropout to.

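(2) keep_prob: the keep probability, i.e. the probability that each element is retained. It is usually set to 0.5 during training and to 1.0 at test time, so that every unit is used and the model runs at full capacity.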
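Unlike the paper's test-time scaling, `tf.nn.dropout` uses so-called inverted dropout: retained elements are scaled by 1/keep_prob during training, so each element's expected value is unchanged and feeding keep_prob = 1.0 at test time leaves the tensor untouched. The NumPy function below is a hypothetical stand-in that sketches this behavior under those assumptions; it is not TensorFlow's actual implementation.

```python
import numpy as np

def inverted_dropout(x, keep_prob, rng):
    """Hypothetical helper: keep each element with probability keep_prob, scale kept ones by 1/keep_prob."""
    # rng.random draws from [0, 1), so keep_prob = 1.0 keeps every element
    mask = rng.random(x.shape) < keep_prob
    return np.where(mask, x / keep_prob, 0.0)

rng = np.random.default_rng(0)
x = np.full(100_000, 3.0)  # hypothetical activations, all equal to 3.0

train_out = inverted_dropout(x, 0.5, rng)  # entries are either 0.0 or 6.0
test_out = inverted_dropout(x, 1.0, rng)   # identical to x

print(np.unique(train_out))         # the only values present
print(train_out.mean())             # close to 3.0: the expectation is preserved
print(np.array_equal(test_out, x))  # True
```

Because the rescaling happens during training, the inference path needs no extra work, which is why switching keep_prob to 1.0 is all that changes between training and testing.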

Task: build a model from a ReLU layer and a dropout layer, with keep_prob set to 0.5, and print the model's logits.

import tensorflow as tf

hidden_layer_weights = [

[0.1, 0.2, 0.4],

[0.4, 0.6, 0.6],

[0.5, 0.9, 0.1],

[0.8, 0.2, 0.8]]

out_weights = [

[0.1, 0.6],

[0.2, 0.1],

[0.7, 0.9]]

# Weights and biases

weights = [

tf.Variable(hidden_layer_weights),

tf.Variable(out_weights)]

biases = [

tf.Variable(tf.zeros(3)),

tf.Variable(tf.zeros(2))]

# Input

features = tf.Variable([[0.0, 2.0, 3.0, 4.0], [0.1, 0.2, 0.3, 0.4], [11.0, 12.0, 13.0, 14.0]])

# TODO: Create Model with Dropout

keep_prob = tf.placeholder(tf.float32)

hidden_layer = tf.add(tf.matmul(features, weights[0]), biases[0])

hidden_layer = tf.nn.relu(hidden_layer)

hidden_layer = tf.nn.dropout(hidden_layer, keep_prob)

logits = tf.add(tf.matmul(hidden_layer, weights[1]), biases[1])

# TODO: Print logits from a session

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

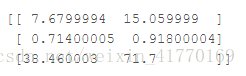

print(sess.run(logits, feed_dict={keep_prob:0.5}))

The input has 3 samples, and the model produces 2 logits for each of them.
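As a sanity check on the shapes (3×4 input, 4×3 hidden weights, 3×2 output weights), the same forward pass can be reproduced in NumPy. With keep_prob = 1.0 dropout is a no-op, so the result is deterministic. This is a sketch for checking the arithmetic, not part of the original exercise; the `forward` helper is hypothetical and uses inverted dropout as an assumption about how `tf.nn.dropout` scales kept units.

```python
import numpy as np

# Same values as the TensorFlow example above
features = np.array([[0.0, 2.0, 3.0, 4.0],
                     [0.1, 0.2, 0.3, 0.4],
                     [11.0, 12.0, 13.0, 14.0]])     # 3 samples x 4 features
hidden_layer_weights = np.array([[0.1, 0.2, 0.4],
                                 [0.4, 0.6, 0.6],
                                 [0.5, 0.9, 0.1],
                                 [0.8, 0.2, 0.8]])  # 4 x 3
out_weights = np.array([[0.1, 0.6],
                        [0.2, 0.1],
                        [0.7, 0.9]])                # 3 x 2
hidden_bias = np.zeros(3)
out_bias = np.zeros(2)

def forward(x, keep_prob=1.0, rng=None):
    """Hypothetical helper: linear -> ReLU -> inverted dropout -> linear."""
    hidden = np.maximum(x @ hidden_layer_weights + hidden_bias, 0.0)  # ReLU, shape (3, 3)
    if keep_prob < 1.0:
        if rng is None:
            rng = np.random.default_rng()
        mask = rng.random(hidden.shape) < keep_prob
        hidden = np.where(mask, hidden / keep_prob, 0.0)  # drop or rescale each unit
    return hidden @ out_weights + out_bias  # shape (3, 2)

logits = forward(features)  # keep_prob = 1.0: no units dropped
print(logits.shape)  # (3, 2)
print(logits)
```

With keep_prob = 1.0 this gives logits of about [[4.78, 8.0], [0.511, 0.844], [24.01, 38.24]]. Feeding keep_prob = 0.5, as the TensorFlow program does, zeroes or doubles each hidden unit at random, so the printed logits change from run to run.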

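This post introduced dropout, a technique for preventing overfitting in neural networks, and showed how to implement it in TensorFlow: a model was built from a ReLU activation and a dropout layer with keep_prob set to 0.5, then run in a TensorFlow session to obtain its logits.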
