3.1 Explain the feature selection process using the three categories of feature selection methods, step by step.

3.2.1 Data Preprocessing

• Handling Missing Values: Fill in or delete missing data.

• Standardization/Normalization: Since feature scales differ, features need to be standardized (e.g., Z-score standardization) or normalized (e.g., Min-Max normalization).

• Feature Engineering: New features may need to be created, such as technical indicators (moving averages, the Relative Strength Index (RSI), etc.) or lag features (e.g., closing prices from previous days).
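The preprocessing bullets can be sketched with pandas. This is a minimal sketch, assuming a pandas Series of daily closing prices. The helper name `add_technical_features`, the window lengths (5, 20 and 14) and the lag set (1, 2, 3) are illustrative choices, not values from the original. The RSI here is computed from simple rolling means of gains and losses rather than Wilder's exponential smoothing, which is a simplifying assumption.

```python
import numpy as np
import pandas as pd


def add_technical_features(close, ma_windows=(5, 20), rsi_window=14, lags=(1, 2, 3)):
    """Hypothetical helper: moving averages, RSI and lagged closes from a close-price Series."""
    feats = pd.DataFrame(index=close.index)
    # Trailing simple moving averages (use only current and past prices)
    for w in ma_windows:
        feats[f"ma_{w}"] = close.rolling(window=w).mean()
    # RSI from simple rolling means of gains and losses (not Wilder's smoothing)
    delta = close.diff()
    gain = delta.clip(lower=0).rolling(rsi_window).mean()
    loss = (-delta.clip(upper=0)).rolling(rsi_window).mean()
    feats[f"rsi_{rsi_window}"] = 100 - 100 / (1 + gain / loss)
    # Lag features: closing prices from previous days
    for k in lags:
        feats[f"close_lag_{k}"] = close.shift(k)
    return feats


# Usage on a synthetic random-walk price series
rng = np.random.default_rng(0)
close = pd.Series(100 + rng.normal(0, 1, 60).cumsum(), name="close")
feats = add_technical_features(close)
# Missing values: rows whose look-back windows are incomplete are deleted
feats = feats.dropna()
print(feats.shape)
```

Scaling (Z-score or Min-Max) is deliberately left out of the helper. The scaler should be fitted on the training split only, and the simplest way to enforce that is to place `StandardScaler` or `MinMaxScaler` inside a scikit-learn `Pipeline`.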

3.2.2 Filter Method – Rapid Preliminary Screening

The filter method is model-independent and quantifies the correlation between features and the target variable based on statistical metrics.

We use:

• Pearson correlation coefficient: Measures the linear correlation between features and the target (future closing price).

• Variance threshold: Removes constant or near-constant features whose variance is close to zero (e.g., a feature that takes the same value in every sample).

Specific steps:

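1. Calculate the Pearson correlation coefficient $\rho_j$ between each feature $x_j$ and the target $y$ (future closing price):

$$\rho_j = \frac{\sum_{i=1}^{n}(x_{ij}-\bar{x}_j)(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{n}(x_{ij}-\bar{x}_j)^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}}$$

2. Set a threshold $\tau$ (tuned by cross-validation) and retain the features with significant correlation, i.e., $|\rho_j| > \tau$.

3. Calculate the variance $\sigma_j^2$ of each feature, set a variance threshold $\theta$, and remove the features with $\sigma_j^2 < \theta$.

4. Output the initial filtered feature subset $S_{\text{filter}}$.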
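The filter steps can be sketched with pandas. This minimal sketch assumes the features are columns of a DataFrame and the target is an aligned Series. The helper `filter_features` and its default thresholds (τ = 0.1, θ = 1e-8) are hypothetical. The variance check runs first because the Pearson coefficient is undefined for a constant column. In practice, τ would be tuned by cross-validation, and both statistics would be computed on the training data only to avoid look-ahead bias.

```python
import numpy as np
import pandas as pd


def filter_features(X, y, corr_threshold=0.1, var_threshold=1e-8):
    """Hypothetical helper: return S_filter via a variance and an |Pearson r| threshold."""
    # Variance threshold: drop constant / near-constant columns
    variances = X.var(axis=0)
    kept = variances[variances > var_threshold].index
    # Pearson correlation rho_j of each remaining feature with the target
    abs_corr = X[kept].corrwith(y).abs()
    # Keep the features with |rho_j| > tau
    return abs_corr[abs_corr > corr_threshold].index.tolist()


# Usage on synthetic data: one informative, one noise and one constant feature
rng = np.random.default_rng(1)
n = 500
signal = rng.normal(size=n)
X = pd.DataFrame({"signal": signal, "noise": rng.normal(size=n), "const": np.ones(n)})
y = pd.Series(2 * signal + rng.normal(scale=0.5, size=n))
selected = filter_features(X, y, corr_threshold=0.2)
print("S_filter:", selected)
```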

3.2.3 Wrapper Method – Optimization Based on Model Performance

The wrapper method trains a model on candidate feature subsets and evaluates each subset with a performance metric, such as the mean squared error (MSE).

We adopt:

Recursive Feature Elimination (RFE): fits a linear regression model and iteratively removes the least important features.

Specific steps:

Train an initial model (such as linear regression) on $S_{\text{filter}}$ and compute feature importance from its weight coefficients. The features should be standardized first so that the coefficient magnitudes are comparable.

Recursive Feature Elimination:

Iteratively remove the least important feature (with the smallest weight), retrain the model, and evaluate performance using cross-validated Mean Squared Error (MSE).

Sort the features by the absolute value of their weight coefficients $|w_j|$ and remove the feature with the smallest $|w_j|$ (e.g., one feature per iteration).

Determine the optimal number of features:

Calculate the cross-validated MSE of each feature subset and select the subset $S_{\text{wrapper}}$ that minimizes it (for example, by plotting the number of features against the MSE).

Output the feature subset $S_{\text{wrapper}}$ with the lowest cross-validated MSE.
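The RFE loop described above can be written out explicitly. This sketch assumes scikit-learn, and the helper `rfe_by_cv_mse` is hypothetical. Features are standardized inside a pipeline so that the |w_j| values are comparable. Because the target is a future price, `TimeSeriesSplit` (chronological folds) is swapped in for generic k-fold cross-validation.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import TimeSeriesSplit, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def rfe_by_cv_mse(X, y, names, n_splits=5):
    """Hypothetical helper: backward elimination by smallest |w_j|, scored by CV MSE."""
    remaining = list(range(X.shape[1]))
    cv = TimeSeriesSplit(n_splits=n_splits)  # chronological folds (assumption for price data)
    curve, subsets = {}, {}
    while remaining:
        model = make_pipeline(StandardScaler(), LinearRegression())
        # Cross-validated MSE of the current subset (scikit-learn reports it negated)
        scores = cross_val_score(model, X[:, remaining], y, cv=cv,
                                 scoring="neg_mean_squared_error")
        k = len(remaining)
        curve[k] = -scores.mean()
        subsets[k] = [names[i] for i in remaining]
        if k == 1:
            break
        # Refit on all rows passed in, rank by |standardized weight|, drop the weakest feature
        model.fit(X[:, remaining], y)
        weights = np.abs(model[-1].coef_)
        remaining.pop(int(np.argmin(weights)))
    best_k = min(curve, key=curve.get)  # subset size with the lowest CV MSE
    return subsets[best_k], curve


# Usage on synthetic data: f0 and f1 are informative, f2..f4 are noise
rng = np.random.default_rng(2)
X = rng.normal(size=(400, 5))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=400)
best, curve = rfe_by_cv_mse(X, y, ["f0", "f1", "f2", "f3", "f4"])
print("S_wrapper:", best)
print("Number of features -> CV MSE:", curve)
```

The returned `curve` dictionary holds the values you would plot to compare the number of features against the CV MSE. scikit-learn's `RFECV` (with its `estimator`, `step`, `cv` and `scoring` arguments) automates the same search.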

3.2.4 Embedded Method — Feature Selection within the Model

The embedded method incorporates feature selection into the model training process.

We adopt:

LASSO Regression (L1 Regularization): an L1 penalty term shrinks the weights of some features exactly to zero, which removes those features from the model.
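The embedded step can be sketched with scikit-learn's `LassoCV`. Its objective is $\frac{1}{2n}\|y - Xw\|_2^2 + \alpha\|w\|_1$, so its `alpha` plays the role of λ. The helper `lasso_select` and the synthetic data are hypothetical. By default the sketch uses chronological `TimeSeriesSplit` folds as an assumption for price data; passing `cv=5` instead gives plain 5-fold cross-validation.

```python
import numpy as np
from sklearn.linear_model import LassoCV
from sklearn.model_selection import TimeSeriesSplit
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def lasso_select(X, y, names, cv=None):
    """Hypothetical helper: LASSO with CV-chosen alpha (lambda); keeps non-zero weights."""
    # Assumption: chronological folds by default; cv=5 gives plain 5-fold CV
    cv = TimeSeriesSplit(n_splits=5) if cv is None else cv
    # Standardize first so that one penalty treats all features on the same scale
    pipe = make_pipeline(StandardScaler(), LassoCV(cv=cv))
    pipe.fit(X, y)
    lasso = pipe[-1]
    selected = [n for n, c in zip(names, lasso.coef_) if c != 0.0]
    return selected, lasso.alpha_


# Usage on synthetic data: two informative features, four pure-noise features
rng = np.random.default_rng(4)
X = rng.normal(size=(500, 6))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=500)
names = [f"f{i}" for i in range(6)]
selected, alpha = lasso_select(X, y, names)
print("S_final:", selected, "chosen alpha:", alpha)
```

The author's program below uses `ElasticNetCV` with `l1_ratio` values up to 1.0. Setting `l1_ratio=1.0` corresponds to the pure LASSO penalty.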

Specific steps:

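1. Train a LASSO model on $S_{\text{wrapper}}$:

$$\hat{\beta}(\lambda) = \arg\min_{\beta_0,\,\beta} \; \frac{1}{2n}\sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j \in S_{\text{wrapper}}} \beta_j x_{ij}\Big)^2 + \lambda \sum_{j \in S_{\text{wrapper}}} |\beta_j|$$

where $\lambda \ge 0$ is the regularization strength. A larger $\lambda$ drives more coefficients exactly to zero.

2. Select the optimal regularization strength $\lambda$ by 5-fold cross-validation (minimizing the validation-set MSE).

3. Extract the features with non-zero weights as the final set $S_{\text{final}} = \{\, j \in S_{\text{wrapper}} : \hat{\beta}_j \neq 0 \,\}$.

Combine the steps above with the following program:

import os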

import json
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.feature_selection import RFE
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error, mean_absolute_error, r2_score
from sklearn.pipeline import Pipeline
from sklearn.linear_model import ElasticNetCV
from sklearn.preprocessing import StandardScaler


def load_data():
    data_path_csv = 'data.csv'
    data_path_xlsx = 'hk_02013__last_2080d.xlsx'
    if os.path.exists(data_path_csv):
        df = pd.read_csv(data_path_csv)
    elif os.path.exists(data_path_xlsx):
        df = pd.read_excel(data_path_xlsx)
    else:
        raise SystemExit(f"Save the data as {data_path_csv} or {data_path_xlsx} and place it in the same directory as the script.")
    return df

def prepare_features(df):
    # Convert the date column ('data') to datetime
    if 'data' in df.columns:
        # If the dates are stored as Excel serial numbers (integer/float), convert them to actual dates
        if df['data'].dtype.kind in 'iuf':
            df['data'] = pd.to_datetime(df['data'], unit='D', origin='1899-12-30')
        else:
            df['data'] = pd.to_datetime(df['data'], errors='coerce')
        # Sort by date to ensure chronological order
        df = df.sort_values('data').reset_index(drop=True)

    # Column names of the provided data (OHLCV, etc.)
    feature_cols = ['open price', 'Close price', 'daily highest price', 'daily Lowest price', ' trading volume', ' trading turnover', 'Stock Amplitude', 'Stock price limit', 'Stock price change', 'Stock turnover rate']

    # Force-convert the feature columns to numeric; non-numeric values become NaN
    X_all = df[feature_cols].apply(pd.to_numeric, errors='coerce')

    # Target variable: the closing price one time step ahead (the next day's close)
    y_all = X_all['Close price'].shift(-1)

    # Keep only rows where every feature and the target are present (missing values are deleted)
    mask = X_all.notnull().all(axis=1) & y_all.notnull()
    X = X_all[mask].astype(float)
    y = y_all[mask].astype(float)
    return X.values, y.values, feature_cols

def main():
    df = load_data()
    X, y, feature_names = prepare_features(df)
    n_features = X.shape[1]  # should be 10

    # Data splitting: chronological (no shuffling), so the test period follows the training period
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, shuffle=False)

    # Step 1: Filter (variance threshold + Pearson correlation), computed on the training set only.
    # Near-constant features get a score of 0; the ~70% of features with the largest |Pearson r| are kept.
    all_indices = np.arange(n_features)
    variances = X_train.var(axis=0)
    abs_corr = np.array([
        abs(np.corrcoef(X_train[:, j], y_train)[0, 1]) if variances[j] > 1e-8 else 0.0
        for j in all_indices
    ])
    step1_size = max(1, int(n_features * 0.7))
    step1_indices = np.sort(np.argsort(abs_corr)[::-1][:step1_size])
    step1_candidates = [feature_names[i] for i in step1_indices]
    step1_removed = [feature_names[i] for i in all_indices if i not in step1_indices]
    print("Step 1 - Filter: removed features (full set -> Step 1 candidates):", step1_removed)
    print(" Count removed:", len(step1_removed))
    print(" Example removed:", step1_removed[:5])
    print("")

    X_train_step1 = X_train[:, step1_indices]
    X_test_step1 = X_test[:, step1_indices]

    # Step 2: Wrapper (RFE, ranking features by random-forest importance)
    rf_est = RandomForestRegressor(n_estimators=300, random_state=42, n_jobs=-1)
    n_features_wrapper = max(1, int(len(step1_candidates) * 0.7))
    rfe = RFE(rf_est, n_features_to_select=n_features_wrapper, step=1)
    rfe.fit(X_train_step1, y_train)
    step2_mask = rfe.support_
    selected_step2 = [step1_candidates[i] for i, m in enumerate(step2_mask) if m]
    step2_removed_list = [step1_candidates[i] for i, m in enumerate(step2_mask) if not m]
    print("Step 2 - Wrapper (RFE) selected features:", selected_step2)
    print("Step 2 - Wrapper (RFE) result analysis:")
    print(f" Total number of features (Step 1 candidates): {len(step1_candidates)}")
    print(f" Number of selected features (Step 2): {len(selected_step2)}")
    print(f" Number of deleted features (Step 2): {len(step2_removed_list)}")
    print(" Reason for deletion: Recursive Feature Elimination (RFE) with a random forest estimator ranks features by importance and removes those that contribute least to predicting the target.")
    if step2_removed_list:
        print(" Example of deleted features:", step2_removed_list[:5])
    else:
        print(" No features were deleted.")
    print("")

    # Step 3: Embedded screening (ElasticNetCV) on the features retained in Step 2
    selected_step2_indices = [step1_indices[i] for i, m in enumerate(step2_mask) if m]
    X_train_step2 = X_train[:, selected_step2_indices]
    X_test_step2 = X_test[:, selected_step2_indices]
    selected_step2_names = [feature_names[i] for i in selected_step2_indices]
    # l1_ratio=1.0 is the pure LASSO penalty; values below 1 mix in an L2 penalty (elastic net)
    enet_cv = ElasticNetCV(l1_ratio=[0.5, 0.7, 0.9, 1.0], cv=5, random_state=42)
    pipe_enet = Pipeline([('scaler', StandardScaler()), ('enet', enet_cv)])
    pipe_enet.fit(X_train_step2, y_train)
    coefs = pipe_enet.named_steps['enet'].coef_
    selected_step3 = [name for name, c in zip(selected_step2_names, coefs) if abs(c) > 1e-6]
    if len(selected_step3) == 0:
        # Fallback: keep at least one feature so that the final model can be trained
        selected_step3 = selected_step2_names[:1]
    step3_removed = [n for n in selected_step2_names if n not in selected_step3]
    print("Step 3 - Embedded (ElasticNetCV) finally selected features:", selected_step3)
    print("Step 3 - Embedded (ElasticNetCV) result analysis:")
    print(f" Number of features after ElasticNetCV screening: {len(selected_step3)}")
    print(f" Number of candidate features from Step 2: {len(selected_step2_names)}")
    print(f" Number of deleted features (Step 3): {len(step3_removed)}")
    print(" Reason for deletion: under regularization, ElasticNetCV shrinks some coefficients to 0, indicating a minor contribution to the prediction, so those features were excluded.")
    if step3_removed:
        print(" Example of deleted features:", step3_removed[:5])
    else:
        print(" No features were deleted.")
    print("")

    final_features = selected_step3
    final_indices = [step1_indices[i] for i, f in enumerate(step1_candidates) if f in final_features]
    X_train_final = X_train[:, final_indices]
    X_test_final = X_test[:, final_indices]

    # Final model training and evaluation on the held-out test set
    final_model = RandomForestRegressor(n_estimators=500, random_state=42, n_jobs=-1)
    final_model.fit(X_train_final, y_train)
    y_pred = final_model.predict(X_test_final)
    mse = mean_squared_error(y_test, y_pred)
    rmse = np.sqrt(mse)
    mae = mean_absolute_error(y_test, y_pred)
    r2 = r2_score(y_test, y_pred)
    print("\nFinal Feature Subset:", final_features)
    print("Test set evaluation:")
    print(" RMSE:", rmse)
    print(" MAE :", mae)
    print(" R^2 :", r2)

    # Save the results as structured JSON
    results = {
        "step1_removed": {
            "features": step1_removed,
            "count": len(step1_removed),
            "example": step1_removed[:5],
        },
        "step2_removed": {
            "features": step2_removed_list,
            "count": len(step2_removed_list),
            "example": step2_removed_list[:5],
            "reason": "Random-forest-based Recursive Feature Elimination removes the features with the lowest importance, i.e., those that contribute least to predicting the target.",
        },
        "step3_removed": {
            "features": step3_removed,
            "count": len(step3_removed),
            "example": step3_removed[:5],
            "reason": "ElasticNetCV shrinks some coefficients to zero under regularization, indicating that they contribute little to the prediction; these features are therefore eliminated.",
        },
        "final_features": final_features,
        # Cast the metrics to plain Python floats before serialization
        "metrics": {"rmse": float(rmse), "mae": float(mae), "r2": float(r2)},
    }
    with open('feature_selection_results.json', 'w', encoding='utf-8') as f_out:
        json.dump(results, f_out, ensure_ascii=False, indent=4)
    print("\nThe results have been saved to feature_selection_results.json")


if __name__ == "__main__":
    main()

Feature Selection Using the Funnelling Approach [20 marks]

3. Perform feature selection for a machine learning model using a multi-step process by combining techniques from filter, wrapper, and embedded methods.

(a) Explain the feature selection process using the three categories of feature selection methods, step by step.

(b) Justify the selection of features retained at each step.

(c) Provide the final list of selected features.

You are a CQF expert. Combining the three parts above, give the complete solution steps, working and answer, and design a complete Python program that implements the requirements of the question.
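For parts (b) and (c), it helps to record why each feature leaves the funnel. The following is a compact sketch, assuming scikit-learn and a DataFrame of features. The helper `funnel`, its thresholds and the synthetic data are hypothetical. It uses the library's `RFECV` (with linear regression) and `LassoCV` rather than the random-forest RFE and `ElasticNetCV` of the program above, and it assumes chronological `TimeSeriesSplit` folds.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import RFECV
from sklearn.linear_model import LassoCV, LinearRegression
from sklearn.model_selection import TimeSeriesSplit
from sklearn.preprocessing import StandardScaler


def funnel(X, y, corr_threshold=0.1, var_threshold=1e-8):
    """Hypothetical filter -> wrapper -> embedded funnel that logs a reason for every feature."""
    reasons = {}
    cv = TimeSeriesSplit(n_splits=5)  # chronological folds (assumption for price data)

    # Filter: variance threshold, then |Pearson r| threshold
    var = X.var()
    for f in var[var <= var_threshold].index:
        reasons[f] = f"filter: variance {var[f]:.2e} <= {var_threshold}"
    kept = var[var > var_threshold].index
    corr = X[kept].corrwith(y).abs()
    for f in corr[corr <= corr_threshold].index:
        reasons[f] = f"filter: |r| = {corr[f]:.3f} <= {corr_threshold}"
    s_filter = corr[corr > corr_threshold].index.tolist()

    # Wrapper: RFECV with linear regression on standardized features, scored by CV MSE
    Z = StandardScaler().fit_transform(X[s_filter])
    rfecv = RFECV(LinearRegression(), step=1, cv=cv,
                  scoring="neg_mean_squared_error").fit(Z, y)
    s_wrapper = [f for f, keep in zip(s_filter, rfecv.support_) if keep]
    for f, rank in zip(s_filter, rfecv.ranking_):
        if rank > 1:
            reasons[f] = f"wrapper: RFE rank {rank}, outside the CV-MSE-optimal subset"

    # Embedded: LASSO with a CV-chosen penalty; zero coefficients are dropped
    Z = StandardScaler().fit_transform(X[s_wrapper])
    lasso = LassoCV(cv=cv).fit(Z, y)
    for f, c in zip(s_wrapper, lasso.coef_):
        stage = "kept" if c != 0.0 else "embedded"
        reasons[f] = f"{stage}: LASSO coef {c:.3f} at alpha {lasso.alpha_:.4f}"
    s_final = [f for f, c in zip(s_wrapper, lasso.coef_) if c != 0.0]
    return s_final, reasons


# Usage on synthetic data: a and b informative, c noise, d constant
rng = np.random.default_rng(3)
n = 600
X = pd.DataFrame({"a": rng.normal(size=n), "b": rng.normal(size=n),
                  "c": rng.normal(size=n), "d": np.full(n, 5.0)})
y = pd.Series(2 * X["a"] + 1.5 * X["b"] + rng.normal(scale=0.5, size=n))
final, reasons = funnel(X, y)
print("Final features:", final)
for feature, reason in reasons.items():
    print(f"{feature}: {reason}")
```

The `reasons` dictionary maps each feature to the stage and statistic that decided its fate, which is the kind of evidence part (b) asks for. The returned `final` list corresponds to part (c).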

最新发布

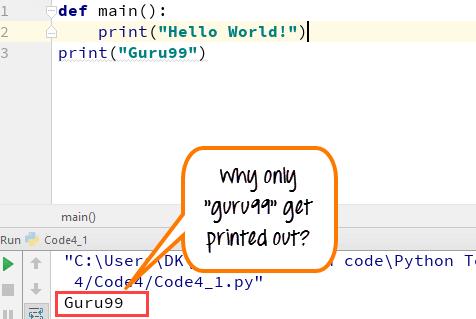

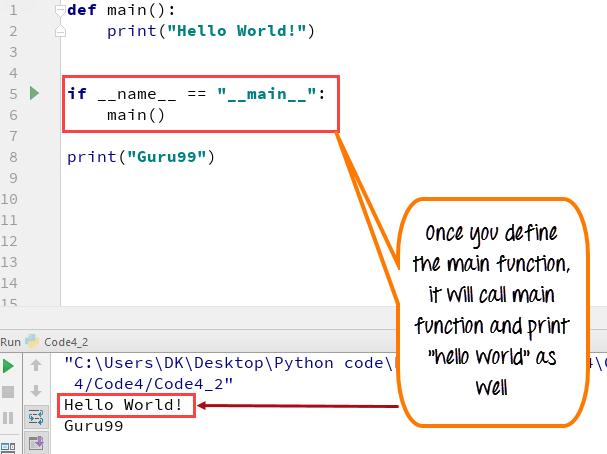

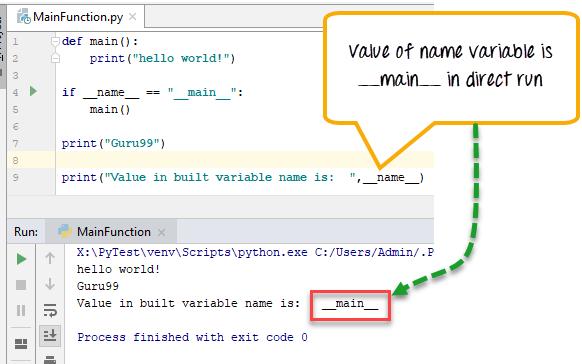

博客主要介绍了Python主函数相关知识。主函数是程序起点,仅作为程序运行时执行,作为模块导入则不运行。通过代码示例说明了声明调用函数'if __name__ == \__main__\'的重要性,还阐述了直接运行和导入的区别,最后提到Python 3中不使用该语句也有类似效果。

博客主要介绍了Python主函数相关知识。主函数是程序起点,仅作为程序运行时执行,作为模块导入则不运行。通过代码示例说明了声明调用函数'if __name__ == \__main__\'的重要性,还阐述了直接运行和导入的区别,最后提到Python 3中不使用该语句也有类似效果。

602

602

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?