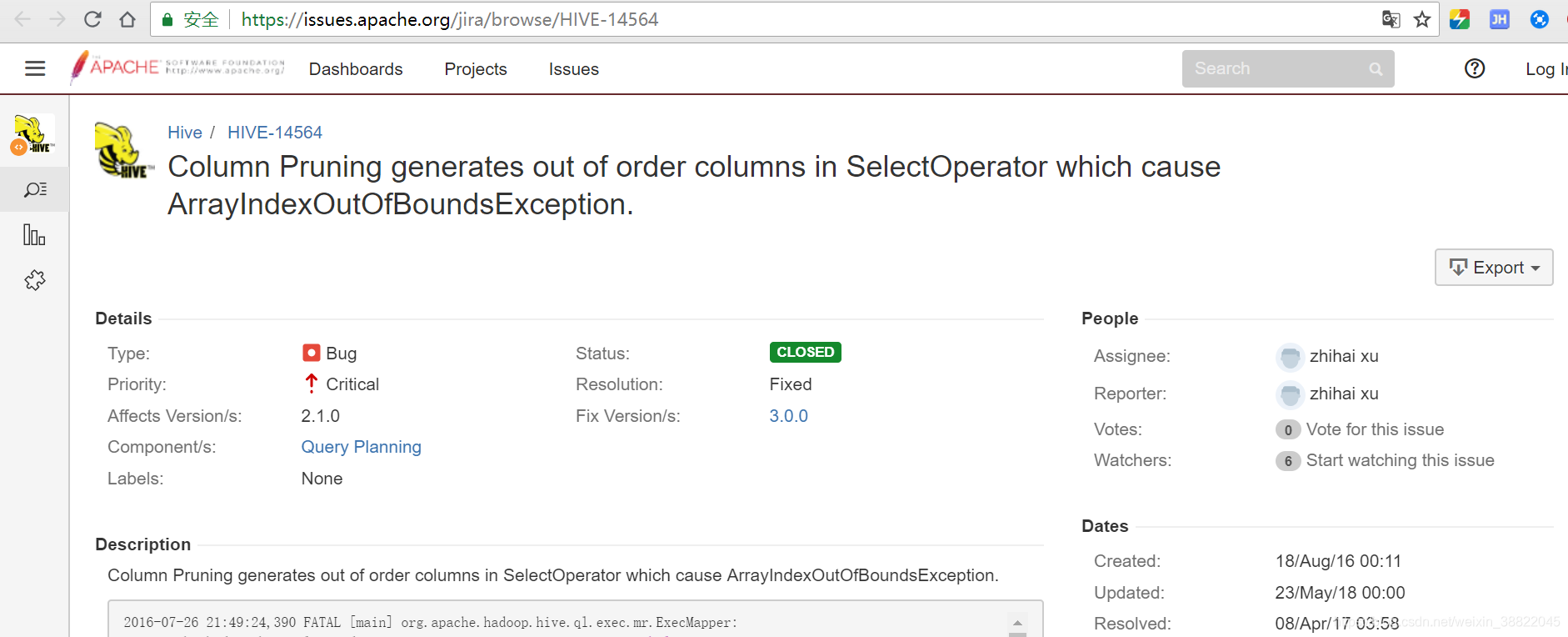

Official follow-up fix: https://issues.apache.org/jira/browse/HIVE-14564

Exception details

2019-02-28 16:33:44,429 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Got allocated containers 1

2019-02-28 16:33:44,429 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigning container Container: [ContainerId: container_e25_1551269222015_0034_01_000005, NodeId: bigdata001:45454, NodeHttpAddress: bigdata001:8042, Resource: <memory:2048, vCores:1>, Priority: 5, Token: Token { kind: ContainerToken, service: 192.168.30.230:45454 }, ] to fast fail map

2019-02-28 16:33:44,431 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned from earlierFailedMaps

2019-02-28 16:33:44,432 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned container container_e25_1551269222015_0034_01_000005 to attempt_1551269222015_0034_m_000000_3

2019-02-28 16:33:44,432 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:43008, vCores:1>

2019-02-28 16:33:44,432 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2019-02-28 16:33:44,432 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:4 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:4 ContRel:0 HostLocal:0 RackLocal:1

2019-02-28 16:33:44,432 INFO [AsyncDispatcher event handler] org.apache.hadoop.yarn.util.RackResolver: Resolved bigdata001 to /default-rack

2019-02-28 16:33:44,433 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1551269222015_0034_m_000000_3 TaskAttempt Transitioned from UNASSIGNED to ASSIGNED

2019-02-28 16:33:44,433 INFO [ContainerLauncher #6] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_LAUNCH for container container_e25_1551269222015_0034_01_000005 taskAttempt attempt_1551269222015_0034_m_000000_3

2019-02-28 16:33:44,433 INFO [ContainerLauncher #6] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Launching attempt_1551269222015_0034_m_000000_3

2019-02-28 16:33:44,434 INFO [ContainerLauncher #6] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : bigdata001:45454

2019-02-28 16:33:44,441 INFO [ContainerLauncher #6] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Shuffle port returned by ContainerManager for attempt_1551269222015_0034_m_000000_3 : 13562

2019-02-28 16:33:44,441 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: TaskAttempt: [attempt_1551269222015_0034_m_000000_3] using containerId: [container_e25_1551269222015_0034_01_000005 on NM: [bigdata001:45454]

2019-02-28 16:33:44,442 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1551269222015_0034_m_000000_3 TaskAttempt Transitioned from ASSIGNED to RUNNING

2019-02-28 16:33:45,434 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1551269222015_0034: ask=1 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:43008, vCores:1> knownNMs=3

2019-02-28 16:33:45,785 INFO [Socket Reader #1 for port 35318] SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for job_1551269222015_0034 (auth:SIMPLE)

2019-02-28 16:33:45,796 INFO [IPC Server handler 4 on 35318] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1551269222015_0034_m_27487790694405 asked for a task

2019-02-28 16:33:45,796 INFO [IPC Server handler 4 on 35318] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1551269222015_0034_m_27487790694405 given task: attempt_1551269222015_0034_m_000000_3

2019-02-28 16:33:47,740 INFO [IPC Server handler 1 on 35318] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1551269222015_0034_m_000000_3 is : 0.0

2019-02-28 16:33:47,743 FATAL [IPC Server handler 2 on 35318] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Task: attempt_1551269222015_0034_m_000000_3 - exited : java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row [Error getting row data with exception java.lang.ArrayIndexOutOfBoundsException: -1746617499

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryUtils.readVInt(LazyBinaryUtils.java:314)

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryUtils.checkObjectByteInfo(LazyBinaryUtils.java:183)

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryStruct.parse(LazyBinaryStruct.java:142)

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryStruct.getField(LazyBinaryStruct.java:202)

at org.apache.hadoop.hive.serde2.lazybinary.objectinspector.LazyBinaryStructObjectInspector.getStructFieldData(LazyBinaryStructObjectInspector.java:64)

at org.apache.hadoop.hive.serde2.SerDeUtils.buildJSONString(SerDeUtils.java:354)

at org.apache.hadoop.hive.serde2.SerDeUtils.getJSONString(SerDeUtils.java:198)

at org.apache.hadoop.hive.serde2.SerDeUtils.getJSONString(SerDeUtils.java:184)

at org.apache.hadoop.hive.ql.exec.MapOperator.toErrorMessage(MapOperator.java:588)

at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:557)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:163)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:168)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

]

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:172)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:168)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row [Error getting row data with exception java.lang.ArrayIndexOutOfBoundsException: -1746617499

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryUtils.readVInt(LazyBinaryUtils.java:314)

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryUtils.checkObjectByteInfo(LazyBinaryUtils.java:183)

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryStruct.parse(LazyBinaryStruct.java:142)

at org.apache.hadoop.hive.serde2.lazybinary.LazyBinaryStruct.getField(LazyBinaryStruct.java:202)

at org.apache.hadoop.hive.serde2.lazybinary.objectinspector.LazyBinaryStructObjectInspector.getStructFieldData(LazyBinaryStructObjectInspector.java:64)

at org.apache.hadoop.hive.serde2.SerDeUtils.buildJSONString(SerDeUtils.java:354)

at org.apache.hadoop.hive.serde2.SerDeUtils.getJSONString(SerDeUtils.java:198)

at org.apache.hadoop.hive.ser

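This post looks at a Hive serialization bug that shows up when a query joins multiple tables and uses LIMIT: column pruning causes data fields to go missing from the row. The official fix is linked above.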
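To make the failure mode more concrete, here is a minimal sketch of how a row written with one column layout and read with another can end in an out-of-bounds error. It is a toy format invented for illustration, not Hive's LazyBinary encoding, and the names `write_row` and `read_row` are hypothetical. The assumption is only that the reader trusts a length prefix found at the position where it expects a string column.

```python
import struct


def write_row(values, schema):
    """Serialize a row: ints as 4 bytes, strings as 4-byte length + UTF-8 bytes."""
    out = bytearray()
    for value, kind in zip(values, schema):
        if kind == "int":
            out += struct.pack(">i", value)
        elif kind == "string":
            data = value.encode("utf-8")
            out += struct.pack(">i", len(data)) + data
        else:
            raise ValueError(f"unknown column kind: {kind}")
    return bytes(out)


def read_row(buf, schema):
    """Deserialize a row, trusting that `schema` matches what the writer used."""
    pos = 0
    values = []
    for kind in schema:
        if pos + 4 > len(buf):
            raise IndexError(f"no room for a 4-byte field at offset {pos}")
        (n,) = struct.unpack_from(">i", buf, pos)
        pos += 4
        if kind == "int":
            values.append(n)
        else:
            # n is interpreted as a string length; with the wrong schema it is
            # really some other column's data and can be arbitrarily large.
            if n < 0 or pos + n > len(buf):
                raise IndexError(f"string length {n} out of bounds at offset {pos}")
            values.append(buf[pos:pos + n].decode("utf-8"))
            pos += n
    return values


if __name__ == "__main__":
    written_as = ["int", "string"]
    row = write_row([1551269222, "abc"], written_as)
    print(read_row(row, written_as))  # same layout on both sides: row comes back
    try:
        read_row(row, ["string", "int"])  # columns out of order on the read side
    except IndexError as err:
        print("read failed:", err)
```

In this sketch the reader interprets the integer column's bytes as a string length, which points far outside the buffer. The stack trace above fails in a similar place, inside `LazyBinaryUtils.readVInt` while `LazyBinaryStruct.parse` walks the row's fields, but whether the underlying cause matches this toy case is something the linked issue, not this example, establishes.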
