
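1. Introduction to YOLOv9

YOLOv9 is the latest object detection network from the YOLOv7 research team and the newest iteration of the YOLO (You Only Look Once) series. It is designed to address the information bottleneck problem in deep networks and to improve accuracy and parameter efficiency across different tasks.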

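- Programmable Gradient Information (PGI): YOLOv9 introduces programmable gradient information, a new auxiliary supervision framework that generates reliable gradients for updating the network weights during training. PGI uses an auxiliary reversible branch to counter the problems caused by making networks deeper, and it provides complete input information for computing the objective function.

- Generalized Efficient Layer Aggregation Network (GELAN): YOLOv9 introduces GELAN, a new lightweight architecture based on gradient path planning. Using only conventional convolutions, GELAN achieves better parameter utilization than state-of-the-art methods built on depthwise separable convolutions.

- Strong performance: on MS COCO object detection, YOLOv9 outperforms all previous real-time object detectors, with clear advantages in accuracy, parameter utilization, and computational efficiency.

- Works across model sizes: PGI can be applied to models ranging from lightweight to large. Because it preserves complete information, models trained from scratch can match or exceed state-of-the-art models that were pretrained on large datasets.

- Improved architecture: YOLOv9 simplifies the downsampling module and optimizes the anchor-free prediction head, which improves both efficiency and accuracy.

- Training strategy: YOLOv9 follows the YOLOv7 AF training setup, training for 500 epochs with the SGD optimizer and using linear warmup and linear decay.

- Data augmentation: training uses several augmentations (HSV saturation and value, translation, scale, and mosaic) to improve generalization.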
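The linear warmup-and-decay schedule from the training-strategy item can be sketched per epoch as follows. This is a simplified sketch. It assumes 500 epochs and the defaults shown in the training log later in this article (lr0=0.01, lrf=0.01, warmup_epochs=3.0). The repository's training scripts appear to warm up per iteration and to treat bias parameters separately (warmup_bias_lr), and neither behavior is modeled here.

```python
def linear_warmup_decay_lr(epoch, total_epochs=500, lr0=0.01, lrf=0.01, warmup_epochs=3.0):
    """Learning rate at a (possibly fractional) epoch: linear warmup, then linear decay.

    The decay factor (1 - epoch / total_epochs) * (1 - lrf) + lrf falls linearly
    from 1 at epoch 0 to lrf at the final epoch, so the LR ends at lr0 * lrf.
    """
    decay = (1 - epoch / total_epochs) * (1.0 - lrf) + lrf
    target = lr0 * decay
    if epoch < warmup_epochs:
        # Simplified warmup: ramp linearly from 0 to the scheduled value.
        return target * epoch / warmup_epochs
    return target


for e in (0, 1.5, 3, 250, 500):
    print(e, linear_warmup_decay_lr(e))
```

With these defaults the rate peaks just after warmup (about 0.0099), reaches 0.00505 halfway through, and ends at 0.0001.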

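Overall, YOLOv9 combines its new PGI and GELAN designs with improved training strategies to provide an efficient and accurate object detector that suits models of various sizes and many application scenarios.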

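2. Testing

Clone the official repository and install its dependencies with pip in a Python>=3.8 environment that also has PyTorch>=1.7. requirements.txt installs all other required packages.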

git clone https://github.com/WongKinYiu/yolov9.git

cd yolov9

pip install -r requirements.txt

The officially provided COCO-pretrained models perform as follows:

| Model | Test Size | AP (val) | AP50 (val) | AP75 (val) | Param. | FLOPs |
|---|---|---|---|---|---|---|
| YOLOv9-T | 640 | 38.3% | 53.1% | 41.3% | 2.0M | 7.7G |
| YOLOv9-S | 640 | 46.8% | 63.4% | 50.7% | 7.1M | 26.4G |
| YOLOv9-M | 640 | 51.4% | 68.1% | 56.1% | 20.0M | 76.3G |
| YOLOv9-C | 640 | 53.0% | 70.2% | 57.8% | 25.3M | 102.1G |
| YOLOv9-E | 640 | 55.6% | 72.8% | 60.6% | 57.3M | 189.0G |

2.1 Testing with the official Python scripts

python detect.py --weights yolov9-c.pt --data data\coco.yaml --source bus.jpg

Note: this may fail in detect.py with AttributeError: 'list' object has no attribute 'device'. A fix is posted in the official issues: change the NMS part of detect.py to:

```python
        # NMS
        with dt[2]:
            # For dual-head models pred[0] is a list of outputs; take its second entry
            pred = pred[0][1] if isinstance(pred[0], list) else pred[0]
            pred = non_max_suppression(pred, conf_thres, iou_thres, classes, agnostic_nms, max_det=max_det)

        # Second-stage classifier (optional)
```

After this change the script runs normally.

Test with the pretrained yolov9-c.pt model:

CPU 0.8ms pre-process, 1438.7ms inference, 2.0ms NMS per image

GPU 0.7ms pre-process, 41.3ms inference, 1.4ms NMS per image

Test with the pretrained, simplified yolov9-c-converted.pt model:

CPU 0.9ms pre-process, 704.8ms inference, 1.6ms NMS per image

GPU 0.4ms pre-process, 22.9ms inference, 1.5ms NMS per image

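The converted model roughly halves the inference time: 1438.7 ms → 704.8 ms on CPU (about 51% less) and 41.3 ms → 22.9 ms on GPU (about 45% less). The gain comes from model reparameterization, which the last section of this article covers.

2.1.1 The correct scripts

The usage above is in fact incorrect, possibly because the repository was not yet fully polished. At present, inference, training, and validation each use a different script depending on the model type. Original (dual-branch) YOLOv9 models use detect_dual.py. Converted YOLOv9 models and GELAN models use detect.py. Training and validation of YOLOv9 models likewise use train_dual.py and val_dual.py.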

# inference converted yolov9 models

python detect.py --source './data/images/horses.jpg' --img 640 --device 0 --weights './yolov9-c-converted.pt' --name yolov9_c_c_640_detect

# inference yolov9 models

python detect_dual.py --source './data/images/horses.jpg' --img 640 --device 0 --weights './yolov9-c.pt' --name yolov9_c_640_detect

# inference gelan models

python detect.py --source './data/images/horses.jpg' --img 640 --device 0 --weights './gelan-c.pt' --name gelan_c_c_640_detect
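The script-selection rule above can be expressed as a small helper. `build_detect_command` is hypothetical and is not part of the repository. It guesses the script from the weights file name, so a name that carries no hint (such as best.pt) needs the `dual` argument set explicitly.

```python
import sys
from pathlib import Path


def build_detect_command(weights, source, img=640, device="0", name=None, dual=None):
    """Return an argv list for the yolov9 repository's detect scripts (hypothetical helper).

    If dual is None, guess from the file name: unconverted yolov9-*.pt weights need
    detect_dual.py, while *-converted.pt and gelan-*.pt weights use detect.py.
    """
    file_name = Path(weights).name.lower()
    if dual is None:
        dual = file_name.startswith("yolov9") and "converted" not in file_name
    script = "detect_dual.py" if dual else "detect.py"
    cmd = [sys.executable, script, "--source", source, "--img", str(img),
           "--device", device, "--weights", weights]
    if name:
        cmd += ["--name", name]
    return cmd


print(build_detect_command("./yolov9-c.pt", "./data/images/horses.jpg"))
```

From the repository root, pass the returned list to `subprocess.run(cmd, check=True)`.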

2.2 OpenCV DNN testing

2.2.1 Exporting the ONNX model

As usual, convert the .pt model to ONNX:

python export.py --weights yolov9-c.pt --include onnx

The output is as follows:

(yolo_pytorch) E:\DeepLearning\yolov9>python export.py --weights yolov9-c.pt --include onnx

export: data=E:\DeepLearning\yolov9\data\coco.yaml, weights=['yolov9-c.pt'], imgsz=[640, 640], batch_size=1, device=cpu, half=False, inplace=False, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=12, verbose=False, workspace=4, nms=False, agnostic_nms=False, topk_per_class=100, topk_all=100, iou_thres=0.45, conf_thres=0.25, include=['onnx']

YOLOv5 v0.1-30-ga8f43f3 Python-3.9.16 torch-1.13.1+cu117 CPU

Fusing layers...

Model summary: 604 layers, 50880768 parameters, 0 gradients, 237.6 GFLOPs

PyTorch: starting from yolov9-c.pt with output shape (1, 84, 8400) (98.4 MB)

ONNX: starting export with onnx 1.14.0...

ONNX: export success 9.6s, saved as yolov9-c.onnx (194.6 MB)

Export complete (14.7s)

Results saved to E:\DeepLearning\yolov9

Detect: python detect.py --weights yolov9-c.onnx

Validate: python val.py --weights yolov9-c.onnx

PyTorch Hub: model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov9-c.onnx')

Visualize: https://netron.app

2.2.2 C++ test code

The test code is the same as for YOLOv8. See the earlier post [OpenCV dnn module example (23): object detection with YOLOv8].
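The plain ONNX export returns the raw head output with shape (1, 84, 8400), as the export log shows. For each of the 8400 candidate positions there are 4 box values followed by 80 class scores. The pure-Python sketch below decodes one image's output. It assumes the YOLOv8 layout: the box is given as center x, center y, width, height, and there is no separate objectness score. It covers only the decoding step. The candidates still need NMS afterwards (in C++, for example with cv::dnn::NMSBoxes).

```python
def decode_raw_output(output, score_threshold=0.25, scale_x=1.0, scale_y=1.0):
    """Decode one image's raw YOLOv8/YOLOv9-style head output (sketch).

    output: rows with shape [4 + num_classes][num_candidates], i.e. the
            (1, 84, 8400) tensor with the batch dimension removed.
    Returns (class_id, score, x, y, w, h) tuples, with (x, y) the top-left corner.
    """
    num_classes = len(output) - 4
    detections = []
    for j in range(len(output[0])):
        # Class scores follow the 4 box rows; the best class gives the score.
        scores = [output[4 + c][j] for c in range(num_classes)]
        class_id = max(range(num_classes), key=scores.__getitem__)
        score = scores[class_id]
        if score < score_threshold:
            continue
        cx, cy, w, h = (output[r][j] for r in range(4))
        # Center-size -> top-left corner, then scale back to the source image.
        detections.append((class_id, score,
                           (cx - w / 2) * scale_x, (cy - h / 2) * scale_y,
                           w * scale_x, h * scale_y))
    return detections
```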

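2.3 Benchmark summary

Only the results for yolov9-c-converted are listed here.

python (CPU): 704ms

python (GPU): 22ms

opencv dnn (CPU): 760ms

opencv dnn (GPU): 27ms (with OpenCV 4.8 the same code gave wrong results on GPU but correct results on CPU; with OpenCV 4.10 both are correct)

The following timings include pre-processing, inference, and post-processing:

openvino (CPU): 316ms

onnxruntime (GPU): 29ms

TensorRT: 19ms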

2.4 Exporting an end-to-end model

Append NMS to the end of the network, implemented with TensorRT's Efficient NMS plugin (EfficientNMS_TRT).
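This article does not go into the plugin's internals. To show what the appended NMS step computes, here is a plain greedy per-class NMS in pure Python. It is a simplified sketch, not the plugin's implementation. Its defaults are taken from the export log (iou_thres=0.45, conf_thres=0.25, agnostic_nms=False, at most 100 output boxes). Boxes are given as (x1, y1, x2, y2).

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, classes, iou_threshold=0.45, score_threshold=0.25, max_output_boxes=100):
    """Greedy per-class NMS; returns the kept indices, highest score first."""
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if len(keep) == max_output_boxes:
            break
        # Keep i unless it overlaps an already kept box of the same class too much.
        if all(classes[k] != classes[i] or iou(boxes[i], boxes[k]) <= iou_threshold
               for k in keep):
            keep.append(i)
    return keep
```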

2.4.1 Official export

The official Python export script can produce an end-to-end model:

python export.py --weights yolov9-c-converted.pt --include onnx_end2end

The export output is shown below:

(yolo_pytorch) E:\DeepLearning\yolov9> python export.py --weights yolov9-c-converted.pt --include onnx_end2end

export: data=E:\DeepLearning\yolov9\data\coco.yaml, weights=['yolov9-c-converted.pt'], imgsz=[640, 640], batch_size=1, device=cpu, half=False, inplace=True, keras=False, optimize=False, int8=False, dynamic=True, simplify=True, opset=12, verbose=False, workspace=4, nms=False, agnostic_nms=False, topk_per_class=100, topk_all=100, iou_thres=0.45, conf_thres=0.25, include=['onnx_end2end']

YOLO v0.1-61-g3e4f970 Python-3.9.16 torch-1.13.1+cu117 CPU

Fusing layers...

gelan-c summary: 387 layers, 25288768 parameters, 64944 gradients, 102.1 GFLOPs

PyTorch: starting from yolov9-c-converted.pt with output shape (1, 84, 8400) (49.1 MB)

ONNX END2END: starting export with onnx 1.14.0...

E:\DeepLearning\yolov9\models\experimental.py:127: FutureWarning: 'torch.onnx._patch_torch._graph_op' is deprecated in version 1.13 and will be removed in version 1.14. Please note 'g.op()' is to be removed from torch.Graph. Please open a GitHub issue if you need this functionality..

out = g.op("TRT::EfficientNMS_TRT",

Starting to simplify ONNX...

ONNX export success, saved as yolov9-c-converted-end2end.onnx

ONNX END2END: export success 18.1s, saved as yolov9-c-converted-end2end.onnx (96.7 MB)

Export complete (23.0s)

Inspect the model's output structure.

Converting it to a TensorRT engine with TensorRT 8.6.1, using trtexec.exe --onnx=E:\DeepLearning\yolov9\yolov9-c-converted-end2end.onnx --explicitBatch --fp16 --saveEngine=yolov9_end2end.engine --shapes=images:1x3x640x640, gives the following output:

[06/17/2024-16:55:18] [W] --explicitBatch flag has been deprecated and has no effect!

[06/17/2024-16:55:18] [W] Explicit batch dim is automatically enabled if input model is ONNX or if dynamic shapes are provided when the engine is built.

[06/17/2024-16:55:18] [I] === Model Options ===

[06/17/2024-16:55:18] [I] Format: ONNX

[06/17/2024-16:55:18] [I] Model: E:\DeepLearning\yolov9\yolov9-c-converted-end2end.onnx

[06/17/2024-16:55:18] [I] Output:

[06/17/2024-16:55:18] [I] === Build Options ===

[06/17/2024-16:55:18] [I] Max batch: explicit batch

[06/17/2024-16:55:18] [I] Memory Pools: workspace: default, dlaSRAM: default, dlaLocalDRAM: default, dlaGlobalDRAM: default

[06/17/2024-16:55:18] [I] minTiming: 1

[06/17/2024-16:55:18] [I] avgTiming: 8

[06/17/2024-16:55:18] [I] Precision: FP32+FP16

[06/17/2024-16:55:18] [I] LayerPrecisions:

[06/17/2024-16:55:18] [I] Layer Device Types:

[06/17/2024-16:55:18] [I] Calibration:

[06/17/2024-16:55:18] [I] Refit: Disabled

[06/17/2024-16:55:18] [I] Version Compatible: Disabled

[06/17/2024-16:55:18] [I] TensorRT runtime: full

[06/17/2024-16:55:18] [I] Lean DLL Path:

[06/17/2024-16:55:18] [I] Tempfile Controls: { in_memory: allow, temporary: allow }

[06/17/2024-16:55:18] [I] Exclude Lean Runtime: Disabled

[06/17/2024-16:55:18] [I] Sparsity: Disabled

[06/17/2024-16:55:18] [I] Safe mode: Disabled

[06/17/2024-16:55:18] [I] Build DLA standalone loadable: Disabled

[06/17/2024-16:55:18] [I] Allow GPU fallback for DLA: Disabled

[06/17/2024-16:55:18] [I] DirectIO mode: Disabled

[06/17/2024-16:55:18] [I] Restricted mode: Disabled

[06/17/2024-16:55:18] [I] Skip inference: Disabled

[06/17/2024-16:55:18] [I] Save engine: yolov9_end2end.engine

[06/17/2024-16:55:18] [I] Load engine:

[06/17/2024-16:55:18] [I] Profiling verbosity: 0

[06/17/2024-16:55:18] [I] Tactic sources: Using default tactic sources

[06/17/2024-16:55:18] [I] timingCacheMode: local

[06/17/2024-16:55:18] [I] timingCacheFile:

[06/17/2024-16:55:18] [I] Heuristic: Disabled

[06/17/2024-16:55:18] [I] Preview Features: Use default preview flags.

[06/17/2024-16:55:18] [I] MaxAuxStreams: -1

[06/17/2024-16:55:18] [I] BuilderOptimizationLevel: -1

[06/17/2024-16:55:18] [I] Input(s)s format: fp32:CHW

[06/17/2024-16:55:18] [I] Output(s)s format: fp32:CHW

[06/17/2024-16:55:18] [I] Input build shape: images=1x3x640x640+1x3x640x640+1x3x640x640

[06/17/2024-16:55:18] [I] Input calibration shapes: model

[06/17/2024-16:55:18] [I] === System Options ===

....

....

[06/17/2024-17:02:27] [I] === Performance summary ===

[06/17/2024-17:02:27] [I] Throughput: 69.7171 qps

[06/17/2024-17:02:27] [I] Latency: min = 13.728 ms, max = 16.7085 ms, mean = 14.4367 ms, median = 14.3938 ms, percentile(90%) = 15.1073 ms, percentile(95%) = 15.4926 ms, percentile(99%) = 15.9502 ms

[06/17/2024-17:02:27] [I] Enqueue Time: min = 1.29956 ms, max = 4.9646 ms, mean = 1.87213 ms, median = 1.59424 ms, percentile(90%) = 2.72937 ms, percentile(95%) = 3.20074 ms, percentile(99%) = 4.37158 ms

[06/17/2024-17:02:27] [I] H2D Latency: min = 0.390503 ms, max = 0.815796 ms, mean = 0.423973 ms, median = 0.416992 ms, percentile(90%) = 0.450592 ms, percentile(95%) = 0.461243 ms, percentile(99%) = 0.502686 ms

[06/17/2024-17:02:27] [I] GPU Compute Time: min = 13.2698 ms, max = 16.1987 ms, mean = 13.9726 ms, median = 13.9363 ms, percentile(90%) = 14.595 ms, percentile(95%) = 14.9934 ms, percentile(99%) = 15.4856 ms

[06/17/2024-17:02:27] [I] D2H Latency: min = 0.0107422 ms, max = 0.0581055 ms, mean = 0.0401282 ms, median = 0.0395508 ms, percentile(90%) = 0.0425415 ms, percentile(95%) = 0.0435791 ms, percentile(99%) = 0.057373 ms

[06/17/2024-17:02:27] [I] Total Host Walltime: 3.02652 s

[06/17/2024-17:02:27] [I] Total GPU Compute Time: 2.94822 s

[06/17/2024-17:02:27] [W] * GPU compute time is unstable, with coefficient of variance = 3.84873%.

[06/17/2024-17:02:27] [W] If not already in use, locking GPU clock frequency or adding --useSpinWait may improve the stability.

[06/17/2024-17:02:27] [I] Explanations of the performance metrics are printed in the verbose logs.

[06/17/2024-17:02:27] [I]

&&&& PASSED TensorRT.trtexec [TensorRT v8601] # trtexec.exe --onnx=E:\DeepLearning\yolov9\yolov9-c-converted-end2end.onnx --explicitBatch --fp16 --saveEngine=yolov9_end2end.engine --shapes=images:1x3x640x640

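2.4.2 Third-party export

A third-party script can also do the conversion. In the exported ONNX model, the last output comes from a Concat layer named /model.22/Concat_5.

Use the third-party export script onnx_add_nms_op.py: edit the parameters below and run it from the current directory.

```python
import onnx
import onnx_graphsurgeon as gs
# get_nms_input and create_and_add_plugin_node are defined earlier in onnx_add_nms_op.py

if __name__ == "__main__":
    onnx_path = "yolov9-c-converted.onnx"
    graph = gs.import_onnx(onnx.load(onnx_path))

    # Add the ops that produce the inputs of the Efficient NMS plugin.
    # class_num presumably has to match the model's class count (output0 is [1, 84, 8400]);
    # check how get_nms_input uses it before reusing this value.
    graph = get_nms_input(graph, class_num=1, output_name="/model.22/Concat_5")

    # Add the Efficient NMS plugin node (at most 100 detections)
    graph = create_and_add_plugin_node(graph, 100)

    # Save the modified graph
    onnx.save(gs.export_onnx(graph), "yolov9-c-converted_nms.onnx")
```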

The script prints very little:

(yolo_pytorch) E:\DeepLearning\yolov9> python onnx_add_nmx_op.py

[Variable (output0): (shape=[1, 84, 8400], dtype=float32)]

The batch size is: 1

This produces the model file yolov9-c-converted_nms.onnx. Inspect its final outputs.

Converting it again with TensorRT 8.6.1 now works:

[06/12/2024-15:05:17] [I] === Performance summary ===

[06/12/2024-15:05:17] [I] Throughput: 73.1388 qps

[06/12/2024-15:05:17] [I] Latency: min = 13.5259 ms, max = 14.8257 ms, mean = 13.7575 ms, median = 13.681 ms, percentile(90%) = 14.062 ms, percentile(95%) = 14.2742 ms, percentile(99%) = 14.4763 ms

[06/12/2024-15:05:17] [I] Enqueue Time: min = 1.19153 ms, max = 7.70068 ms, mean = 1.77655 ms, median = 1.53348 ms, percentile(90%) = 2.60284 ms, percentile(95%) = 3.04126 ms, percentile(99%) = 4.99609 ms

[06/12/2024-15:05:17] [I] H2D Latency: min = 0.390991 ms, max = 0.495361 ms, mean = 0.42517 ms, median = 0.420258 ms, percentile(90%) = 0.452637 ms, percentile(95%) = 0.459717 ms, percentile(99%) = 0.468262 ms

[06/12/2024-15:05:17] [I] GPU Compute Time: min = 13.0632 ms, max = 14.3699 ms, mean = 13.2923 ms, median = 13.2141 ms, percentile(90%) = 13.612 ms, percentile(95%) = 13.8035 ms, percentile(99%) = 13.9883 ms

[06/12/2024-15:05:17] [I] D2H Latency: min = 0.0187988 ms, max = 0.0535889 ms, mean = 0.0400298 ms, median = 0.0396729 ms, percentile(90%) = 0.0429688 ms, percentile(95%) = 0.0437012 ms, percentile(99%) = 0.0458984 ms

[06/12/2024-15:05:17] [I] Total Host Walltime: 3.03532 s

[06/12/2024-15:05:17] [I] Total GPU Compute Time: 2.9509 s

[06/12/2024-15:05:17] [W] * GPU compute time is unstable, with coefficient of variance = 1.62347%.

[06/12/2024-15:05:17] [W] If not already in use, locking GPU clock frequency or adding --useSpinWait may improve the stability.

[06/12/2024-15:05:17] [I] Explanations of the performance metrics are printed in the verbose logs.

[06/12/2024-15:05:17] [I]

&&&& PASSED TensorRT.trtexec [TensorRT v8601] # trtexec.exe --onnx=E:\DeepLearning\yolov9\yolov9-c-converted_nms.onnx --explicitBatch --fp16 --saveEngine=yolov9_end2end.engine

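2.4.3 Main C++ code

When testing the model from your own code, explicitly register the TensorRT plugins right after initializing the logger, because the engine contains the EfficientNMS_TRT plugin:

```cpp
#include <vector>
#include "NvInferPlugin.h"  // declares initLibNvInferPlugins

// Global function (not in namespace nvinfer1); call it before deserializing the engine.
initLibNvInferPlugins(&logger, "");
```

Next, load the engine and run inference. Note that the model has 1 input and 4 outputs:

```cpp
const auto inpWidth = 640;
const auto inpHeight = 640;
auto scoreThreshold = 0.45f;

// Input buffer on the heap: 3 * 640 * 640 floats (about 4.9 MB) would overflow
// the default thread stack (1 MB on Windows) as a local array.
std::vector<float> h_input(inpWidth * inpHeight * 3);

// Host buffers for the 4 outputs (batch 1, at most 100 detections)
int   h_output_0[1];            // number of valid detections
float h_output_1[1 * 100 * 4];  // boxes, 4 floats per detection
float h_output_2[1 * 100];      // scores
int   h_output_3[1 * 100];      // class ids

.......

// Copy the preprocessed 1x3x640x640 blob to the input binding and run inference
CHECK(cudaMemcpy(bindings[0], static_cast<const void*>(blob.data), 1 * 3 * 640 * 640 * sizeof(float), cudaMemcpyHostToDevice));
context->executeV2(bindings.data());

// Copy the 4 outputs back to the host (bindings 1..4 in the order declared above)
CHECK(cudaMemcpy(h_output_0, bindings[1], 1 * sizeof(int), cudaMemcpyDeviceToHost));
CHECK(cudaMemcpy(h_output_1, bindings[2], 1 * 100 * 4 * sizeof(float), cudaMemcpyDeviceToHost));
CHECK(cudaMemcpy(h_output_2, bindings[3], 1 * 100 * sizeof(float), cudaMemcpyDeviceToHost));
CHECK(cudaMemcpy(h_output_3, bindings[4], 1 * 100 * sizeof(int), cudaMemcpyDeviceToHost));
```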

The post-processing is as follows:

```cpp
// Scale factors from the 640x640 network input back to the model input image
float x_factor = (float)modelInput.cols / inpWidth;
float y_factor = (float)modelInput.rows / inpHeight;

int numbers = *h_output_0;  // number of valid detections after NMS
for (int i = 0; i < numbers; i++) {
    float conf = h_output_2[i];
    if (conf < scoreThreshold)
        continue;

    int clsId = h_output_3[i];
    float x = h_output_1[i * 4];
    float y = h_output_1[i * 4 + 1];
    float w = h_output_1[i * 4 + 2];
    float h = h_output_1[i * 4 + 3];

    drawPred(clsId, conf, x * x_factor, y * y_factor, w * x_factor, h * y_factor, img);
}
```

Note that in the decoded result, x and y are the coordinates of the box's top-left corner, not its center. The test result is as follows:
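The same loop in Python shows how the scale factors work. The sketch assumes that `modelInput` is the source image padded at the right and bottom into a square of side max(width, height), as is common in YOLOv8 OpenCV samples. Under that assumption each factor is side / 640 and no offset has to be removed. Following the author's reading above, each box is (x, y, w, h) with (x, y) the top-left corner. `map_end2end_detections` is a hypothetical name.

```python
def map_end2end_detections(num_dets, boxes, scores, class_ids, src_w, src_h,
                           inp_w=640, inp_h=640, score_threshold=0.45):
    """Filter the end-to-end outputs by score and scale boxes to the source image.

    Assumes the network input was the source image padded (right/bottom) to a
    square and resized to inp_w x inp_h. boxes is the flat 100 * 4 buffer.
    """
    side = max(src_w, src_h)  # side of the padded square canvas
    x_factor = side / inp_w
    y_factor = side / inp_h
    results = []
    for i in range(num_dets):
        if scores[i] < score_threshold:
            continue
        x, y, w, h = boxes[4 * i: 4 * i + 4]
        results.append((class_ids[i], scores[i],
                        x * x_factor, y * y_factor, w * x_factor, h * y_factor))
    return results
```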

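3. Custom data and training

3.1 Preparation

The process is essentially the same as training YOLOv5 and later versions. See the training section of the earlier post [OpenCV dnn module example (23): object detection with YOLOv8].

Prepare the dataset: a label folder, an image folder, and lists of the image files used for training and validation (training actually reads these lists).

The file myvoc.yaml describes the dataset. A minimal version: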

```yaml
train: E:/DeepLearning/yolov9/custom-data/vehicle/train.txt
val: E:/DeepLearning/yolov9/custom-data/vehicle/val.txt

# number of classes
nc: 4

# class names: car, huoche (truck), guache (trailer), keche (bus/coach)
names: ["car", "huoche", "guache", "keche"]
```
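train.txt and val.txt are plain lists of image paths, one per line. A minimal sketch for generating them with a random split follows. The helper name `write_split_lists`, the 10% validation ratio, and the set of image suffixes are illustrative assumptions. The label folder still has to follow the layout that the YOLO data loader expects.

```python
import random
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed image types


def write_split_lists(image_dir, out_dir, val_ratio=0.1, seed=0):
    """Write train.txt / val.txt with absolute image paths (hypothetical helper).

    Returns (number of training images, number of validation images).
    """
    images = sorted(p.resolve() for p in Path(image_dir).iterdir()
                    if p.suffix.lower() in IMAGE_SUFFIXES)
    random.Random(seed).shuffle(images)  # reproducible split
    n_val = int(len(images) * val_ratio)
    val, train = images[:n_val], images[n_val:]
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / "train.txt").write_text("".join(f"{p.as_posix()}\n" for p in train))
    (out / "val.txt").write_text("".join(f"{p.as_posix()}\n" for p in val))
    return len(train), len(val)
```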

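3.2 Training

Now start training. For example, to train the yolov9-c model:

python train_dual.py --device 0 --batch 8 --data custom-data/vehicle/myvoc.yaml --img 640 --cfg models/detect/yolov9-c.yaml --weights '' --name yolov9-c --hyp hyp.scratch-high.yaml --min-items 0 --epochs 50 --close-mosaic 15

During the first three batches the error AttributeError: 'FreeTypeFont' object has no attribute 'getsize' may appear. It is raised in the label-plotting thread, so training continues. FreeTypeFont.getsize was removed in Pillow 10, so upgrading Pillow does not help. Either install an older Pillow (e.g. pip install "Pillow<10") or change utils/plots.py to use getbbox instead.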
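A version-tolerant replacement for the failing line in utils/plots.py (`w, h = self.font.getsize(label)`) could look like the sketch below. `text_size` is a hypothetical helper. `getbbox` (Pillow 8.0 and later) returns (left, top, right, bottom), so the measured size can differ by a few pixels from what the old `getsize` returned.

```python
def text_size(font, text):
    """Return (width, height) of text for a Pillow font, with or without getsize."""
    if hasattr(font, "getbbox"):
        left, top, right, bottom = font.getbbox(text)
        return right - left, bottom - top
    return font.getsize(text)  # Pillow < 8.0 only has getsize
```

In `box_label`, the call then becomes `w, h = text_size(self.font, label)`.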

(yolo_pytorch) E:\DeepLearning\yolov9>python train_dual.py --device 0 --batch 8 --data custom-data/vehicle/myvoc.yaml --img 640 --cfg models/detect/yolov9-c.yaml --weights '' --name yolov9-c --hyp hyp.scratch-high.yaml --min-items 0 --epochs 50 --close-mosaic 15

train_dual: weights='', cfg=models/detect/yolov9-c.yaml, data=custom-data/vehicle/myvoc.yaml, hyp=hyp.scratch-high.yaml, epochs=50, batch_size=8, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=None, image_weights=False, device=0, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs\train, name=yolov9-c, exist_ok=False, quad=False, cos_lr=False, flat_cos_lr=False, fixed_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, min_items=0, close_mosaic=15, entity=None, upload_dataset=False, bbox_interval=-1, artifact_alias=latest

YOLO v0.1-61-g3e4f970 Python-3.9.16 torch-1.13.1+cu117 CUDA:0 (NVIDIA GeForce GTX 1080 Ti, 11264MiB)

hyperparameters: lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, cls_pw=1.0, obj=0.7, obj_pw=1.0, dfl=1.5, iou_t=0.2, anchor_t=5.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.9, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.15, copy_paste=0.3

ClearML: run 'pip install clearml' to automatically track, visualize and remotely train YOLO in ClearML

Comet: run 'pip install comet_ml' to automatically track and visualize YOLO runs in Comet

TensorBoard: Start with 'tensorboard --logdir runs\train', view at http://localhost:6006/

Overriding model.yaml nc=80 with nc=4

from n params module arguments

0 -1 1 0 models.common.Silence []

1 -1 1 1856 models.common.Conv [3, 64, 3, 2]

2 -1 1 73984 models.common.Conv [64, 128, 3, 2]

3 -1 1 212864 models.common.RepNCSPELAN4 [128, 256, 128, 64, 1]

4 -1 1 164352 models.common.ADown [256, 256]

5 -1 1 847616 models.common.RepNCSPELAN4 [256, 512, 256, 128, 1]

6 -1 1 656384 models.common.ADown [512, 512]

7 -1 1 2857472 models.common.RepNCSPELAN4 [512, 512, 512, 256, 1]

8 -1 1 656384 models.common.ADown [512, 512]

9 -1 1 2857472 models.common.RepNCSPELAN4 [512, 512, 512, 256, 1]

10 -1 1 656896 models.common.SPPELAN [512, 512, 256]

11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

12 [-1, 7] 1 0 models.common.Concat [1]

13 -1 1 3119616 models.common.RepNCSPELAN4 [1024, 512, 512, 256, 1]

14 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

15 [-1, 5] 1 0 models.common.Concat [1]

16 -1 1 912640 models.common.RepNCSPELAN4 [1024, 256, 256, 128, 1]

17 -1 1 164352 models.common.ADown [256, 256]

18 [-1, 13] 1 0 models.common.Concat [1]

19 -1 1 2988544 models.common.RepNCSPELAN4 [768, 512, 512, 256, 1]

20 -1 1 656384 models.common.ADown [512, 512]

21 [-1, 10] 1 0 models.common.Concat [1]

22 -1 1 3119616 models.common.RepNCSPELAN4 [1024, 512, 512, 256, 1]

23 5 1 131328 models.common.CBLinear [512, [256]]

24 7 1 393984 models.common.CBLinear [512, [256, 512]]

25 9 1 656640 models.common.CBLinear [512, [256, 512, 512]]

26 0 1 1856 models.common.Conv [3, 64, 3, 2]

27 -1 1 73984 models.common.Conv [64, 128, 3, 2]

28 -1 1 212864 models.common.RepNCSPELAN4 [128, 256, 128, 64, 1]

29 -1 1 164352 models.common.ADown [256, 256]

30 [23, 24, 25, -1] 1 0 models.common.CBFuse [[0, 0, 0]]

31 -1 1 847616 models.common.RepNCSPELAN4 [256, 512, 256, 128, 1]

32 -1 1 656384 models.common.ADown [512, 512]

33 [24, 25, -1] 1 0 models.common.CBFuse [[1, 1]]

34 -1 1 2857472 models.common.RepNCSPELAN4 [512, 512, 512, 256, 1]

35 -1 1 656384 models.common.ADown [512, 512]

36 [25, -1] 1 0 models.common.CBFuse [[2]]

37 -1 1 2857472 models.common.RepNCSPELAN4 [512, 512, 512, 256, 1]

38[31, 34, 37, 16, 19, 22] 1 21549752 models.yolo.DualDDetect [4, [512, 512, 512, 256, 512, 512]]

yolov9-c summary: 962 layers, 51006520 parameters, 51006488 gradients, 238.9 GFLOPs

AMP: checks passed

optimizer: SGD(lr=0.01) with parameter groups 238 weight(decay=0.0), 255 weight(decay=0.0005), 253 bias

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

train: Scanning E:\DeepLearning\yolov9\custom-data\vehicle\train.cache... 998 images, 0 backgrounds, 0 corrupt: 100%|██████████| 998/998 00:00

val: Scanning E:\DeepLearning\yolov9\custom-data\vehicle\val.cache... 998 images, 0 backgrounds, 0 corrupt: 100%|██████████| 998/998 00:00

Plotting labels to runs\train\yolov9-c4\labels.jpg...

Image sizes 640 train, 640 val

Using 8 dataloader workers

Logging results to runs\train\yolov9-c4

Starting training for 50 epochs...

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

0/49 9.46G 5.685 6.561 5.295 37 640: 0%| | 0/125 00:04Exception in thread Thread-5:

Traceback (most recent call last):

File "D:\Python\anaconda3\envs\yolo_pytorch\lib\threading.py", line 980, in _bootstrap_inner

self.run()

File "D:\Python\anaconda3\envs\yolo_pytorch\lib\threading.py", line 917, in run

self._target(*self._args, **self._kwargs)

File "E:\DeepLearning\yolov9\utils\plots.py", line 300, in plot_images

annotator.box_label(box, label, color=color)

File "E:\DeepLearning\yolov9\utils\plots.py", line 86, in box_label

w, h = self.font.getsize(label) # text width, height

AttributeError: 'FreeTypeFont' object has no attribute 'getsize'

WARNING TensorBoard graph visualization failure Only tensors, lists, tuples of tensors, or dictionary of tensors can be output from traced functions

0/49 9.67G 6.055 6.742 5.403 65 640: 2%|▏ | 2/125 00:08Exception in thread Thread-6:

Traceback (most recent call last):

File "D:\Python\anaconda3\envs\yolo_pytorch\lib\threading.py", line 980, in _bootstrap_inner

self.run()

File "D:\Python\anaconda3\envs\yolo_pytorch\lib\threading.py", line 917, in run

self._target(*self._args, **self._kwargs)

File "E:\DeepLearning\yolov9\utils\plots.py", line 300, in plot_images

annotator.box_label(box, label, color=color)

File "E:\DeepLearning\yolov9\utils\plots.py", line 86, in box_label

w, h = self.font.getsize(label) # text width, height

AttributeError: 'FreeTypeFont' object has no attribute 'getsize'

0/49 9.67G 6.201 6.812 5.505 36 640: 2%|▏ | 3/125 00:09Exception in thread Thread-7:

Traceback (most recent call last):

File "D:\Python\anaconda3\envs\yolo_pytorch\lib\threading.py", line 980, in _bootstrap_inner

self.run()

File "D:\Python\anaconda3\envs\yolo_pytorch\lib\threading.py", line 917, in run

self._target(*self._args, **self._kwargs)

File "E:\DeepLearning\yolov9\utils\plots.py", line 300, in plot_images

annotator.box_label(box, label, color=color)

File "E:\DeepLearning\yolov9\utils\plots.py", line 86, in box_label

w, h = self.font.getsize(label) # text width, height

AttributeError: 'FreeTypeFont' object has no attribute 'getsize'

0/49 10.2G 5.603 6.38 5.564 21 640: 100%|██████████| 125/125 02:44

Class Images Instances P R mAP50 mAP50-95: 100%|██████████| 63/63 01:12

all 998 2353 3.88e-05 0.00483 2.16e-05 2.63e-06

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

1/49 12.1G 5.553 6.034 5.431 40 640: 100%|██████████| 125/125 02:53

Class Images Instances P R mAP50 mAP50-95: 100%|██████████| 63/63 01:29

all 998 2353 0.00398 0.0313 0.00276 0.000604

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

2/49 12.1G 5.061 5.749 5.102 49 640: 100%|██████████| 125/125 07:44

Class Images Instances P R mAP50 mAP50-95: 100%|██████████| 63/63 01:26

all 998 2353 0.00643 0.364 0.00681 0.0017

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

3/49 12.1G 4.292 5.013 4.58 35 640: 100%|██████████| 125/125 08:04

Class Images Instances P R mAP50 mAP50-95: 100%|██████████| 63/63 01:28

all 998 2353 0.0425 0.242 0.0273 0.00944

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

4/49 12.1G 3.652 4.46 4.164 30 640: 34%|███▎ | 42/125 02:45

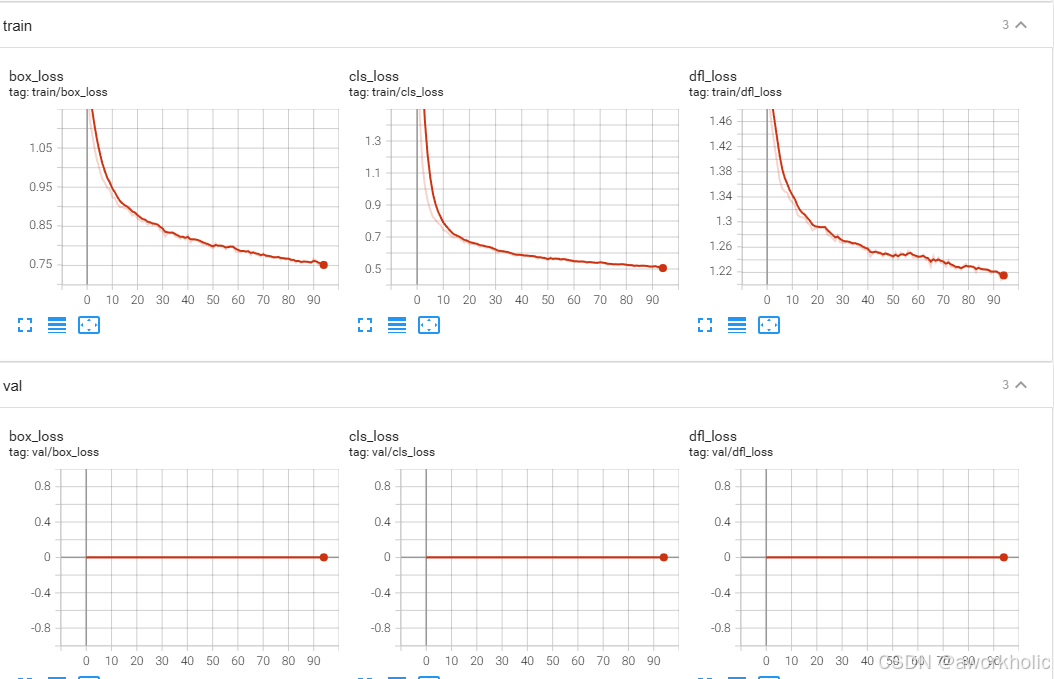

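3.2.1 Fixing val loss that is always 0 in TensorBoard

After running tensorboard --logdir runs/train and opening http://localhost:6006/, you can watch the training loss, AP, and other metrics in real time. However, the val loss curve stays at 0.

Fix: following https://github.com/WongKinYiu/yolov9/issues/132, edit val_dual.py and uncomment line 197 (the loss += compute_loss(...) line). After the change the code reads:

```python
        # Loss
        if compute_loss:
            preds = preds[1]
            #train_out = train_out[1]
            loss += compute_loss(train_out, targets)[1]  # box, obj, cls  (line 197, now uncommented)
        else:
            preds = preds[0][1]
```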

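3.3 Model reparameterization

This section uses the conversion of yolov9-c into yolov9-c-converted as the example.

Suppose a model has already been trained in the previous section.

We use the following reparameterization_yolov9-c.py script. It builds a gelan-c model and fills it with the matching weights from the trained yolov9-c model, dropping the auxiliary branch that is only needed during training. Note that different models may need different reparameterization scripts.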

```python
import torch
from models.yolo import Model  # run from the root of the yolov9 repository

model_file_path = r'runs\train\yolov9-c5\weights\best.pt'          # trained yolov9-c checkpoint
model_converted_path = r'runs\train\yolov9-c5\weights\best-c-c.pt'  # converted output
nc = 4                                # number of classes in the custom dataset
cfg = "./models/detect/gelan-c.yaml"  # target architecture without the auxiliary branch
device = torch.device("cpu")

model = Model(cfg, ch=3, nc=nc, anchors=3)
#model = model.half()
model = model.to(device)
_ = model.eval()
ckpt = torch.load(model_file_path, map_location='cpu')
model.names = ckpt['model'].names
model.nc = ckpt['model'].nc

# Copy weights key by key. "x -= x; x += src" is an in-place copy, because the
# state_dict() tensors share storage with the model's parameters and buffers.
# - gelan-c layers 0..21 come from yolov9-c layers 1..22 (yolov9-c layer 0 is Silence)
# - the gelan-c head (layer 22) takes cv4/cv5/dfl2 of the yolov9-c DualDDetect head (layer 38)
idx = 0
for k, v in model.state_dict().items():
    if "model.{}.".format(idx) in k:
        if idx < 22:
            kr = k.replace("model.{}.".format(idx), "model.{}.".format(idx+1))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
        elif "model.{}.cv2.".format(idx) in k:
            kr = k.replace("model.{}.cv2.".format(idx), "model.{}.cv4.".format(idx+16))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
        elif "model.{}.cv3.".format(idx) in k:
            kr = k.replace("model.{}.cv3.".format(idx), "model.{}.cv5.".format(idx+16))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
        elif "model.{}.dfl.".format(idx) in k:
            kr = k.replace("model.{}.dfl.".format(idx), "model.{}.dfl2.".format(idx+16))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
    else:
        # Advance idx to the layer this key belongs to, then copy the same way.
        while True:
            idx += 1
            if "model.{}.".format(idx) in k:
                break
        if idx < 22:
            kr = k.replace("model.{}.".format(idx), "model.{}.".format(idx+1))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
        elif "model.{}.cv2.".format(idx) in k:
            kr = k.replace("model.{}.cv2.".format(idx), "model.{}.cv4.".format(idx+16))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
        elif "model.{}.cv3.".format(idx) in k:
            kr = k.replace("model.{}.cv3.".format(idx), "model.{}.cv5.".format(idx+16))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]
        elif "model.{}.dfl.".format(idx) in k:
            kr = k.replace("model.{}.dfl.".format(idx), "model.{}.dfl2.".format(idx+16))
            model.state_dict()[k] -= model.state_dict()[k]
            model.state_dict()[k] += ckpt['model'].state_dict()[kr]

_ = model.eval()

# Save in the checkpoint format expected by detect.py (FP16 model, no training state)
m_ckpt = {'model': model.half(),
          'optimizer': None,
          'best_fitness': None,
          'ema': None,
          'updates': None,
          'opt': None,
          'git': None,
          'date': None,
          'epoch': -1}
torch.save(m_ckpt, model_converted_path)
```

After the script runs successfully, a new file best-c-c.pt appears. It is about the same size as the official yolov9-c-converted.pt.
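The index arithmetic in the reparameterization script can be isolated into a pure function, which makes the key mapping easier to check. The offsets come from the yolov9-c training summary: layer 0 is Silence, so gelan-c layer i corresponds to yolov9-c layer i + 1, and the DualDDetect head sits at layer 38 = 22 + 16. `map_converted_key` is a hypothetical helper for illustration. Unlike the script, which silently skips unmatched head keys, it raises an error on them.

```python
def map_converted_key(key, head_index=22, layer_offset=1, head_offset=16):
    """Return the yolov9-c checkpoint key whose weights fill `key` of the gelan-c model."""
    parts = key.split(".")
    if parts[0] != "model":
        raise ValueError(f"unexpected key: {key}")
    idx = int(parts[1])
    if idx < head_index:
        # Backbone/neck layer: shift by the extra Silence layer at the start of yolov9-c.
        parts[1] = str(idx + layer_offset)
        return ".".join(parts)
    # Detection head: use the main-branch modules of DualDDetect.
    renames = {"cv2": "cv4", "cv3": "cv5", "dfl": "dfl2"}
    if parts[2] not in renames:
        raise ValueError(f"unexpected head key: {key}")
    parts[1] = str(idx + head_offset)
    parts[2] = renames[parts[2]]
    return ".".join(parts)


print(map_converted_key("model.22.cv2.0.0.conv.weight"))  # model.38.cv4.0.0.conv.weight
```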

Test it as follows:

(yolo_pytorch) E:\DeepLearning\yolov9>python detect.py --weights runs\train\yolov9-c5\weights\best-c-c.pt --source custom-data\vehicle\images\11.jpg --device 0

detect: weights=['runs\\train\\yolov9-c5\\weights\\best-c-c.pt'], source=custom-data\vehicle\images\11.jpg, data=data\coco128.yaml, imgsz=[640, 640], conf_thres=0.25, iou_thres=0.45, max_det=1000, device=0, view_img=False, save_txt=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, visualize=False, update=False, project=runs\detect, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False, dnn=False, vid_stride=1

YOLO v0.1-61-g3e4f970 Python-3.9.16 torch-1.13.1+cu117 CUDA:0 (NVIDIA GeForce GTX 1080 Ti, 11264MiB)

Fusing layers...

gelan-c summary: 387 layers, 25230172 parameters, 6348 gradients, 101.8 GFLOPs

image 1/1 E:\DeepLearning\yolov9\custom-data\vehicle\images\11.jpg: 480x640 8 cars, 52.2ms

Speed: 0.0ms pre-process, 52.2ms inference, 7.0ms NMS per image at shape (1, 3, 640, 640)

Results saved to runs\detect\exp39

The result is shown below.

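This article introduced the YOLOv9 object detection network. Its PGI and GELAN designs address the information bottleneck problem and improve accuracy and parameter efficiency. The article also covered testing with the official Python scripts, OpenCV DNN, and other backends, reporting inference times in each environment, and then walked through custom data preparation, training, and model reparameterization.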
