import numpy as np
import tensorflow as tf
from keras.layers import *
import keras.backend as K
from tensorflow.python.ops import control_flow_ops
from tensorflow.python.ops import tensor_array_ops

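def cosine2(input_t, states):
    '''
    Cosine similarity between the current input and each state vector.

    :param input_t: tensor of shape (batch, dim), the input at one time step
    :param states: tensor of shape (batch, n_states, dim)
    :return: tensor of shape (batch, 1, n_states)
    '''
    state_shape = K.int_shape(states)
    shape_s = state_shape[-1]
    batch_t = state_shape[0]
    # L2 norm of each input vector, tiled to shape (batch, n_states)
    pooled_len_1 = tf.sqrt(tf.reduce_sum(tf.multiply(input_t, input_t), 1))
    pooled_len_1 = tf.reshape(pooled_len_1, (batch_t, 1))
    pooled_len_1 = tf.tile(pooled_len_1, [1, 3])  # 3 = number of state vectors in this example
    # L2 norm of each state vector: (batch, n_states)
    pooled_len_2 = tf.sqrt(tf.reduce_sum(tf.multiply(states, states), 2))
    # Dot products: (batch, n_states, dim) x (batch, dim, 1) -> (batch, n_states, 1)
    pooled_mul_12 = tf.matmul(states, tf.reshape(input_t, (-1, shape_s, 1)))
    pooled_mul_12 = tf.transpose(pooled_mul_12, [2, 0, 1])
    score = tf.divide(pooled_mul_12, pooled_len_1 * pooled_len_2)
    score = tf.transpose(score, [1, 0, 2])
    return score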

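def cosine22(input_t, states):
    '''
    Cosine similarity, NumPy version.

    :param input_t: array of shape (batch, dim), the input at one time step
    :param states: array of shape (batch, n_states, dim)
    :return: array of shape (batch, 1, n_states)
    '''
    state_shape = states.shape
    shape_s = state_shape[-1]
    # timestep = state_shape[1]
    # L2 norm of each input vector, tiled to shape (batch, 3)
    pooled_len_1 = np.sqrt(np.sum(np.multiply(input_t, input_t), 1))
    pooled_len_1 = np.tile(np.reshape(pooled_len_1, (-1, 1)), 3)
    # L2 norm of each state vector: (batch, n_states)
    pooled_len_2 = np.sqrt(np.sum(np.multiply(states, states), 2))
    # Dot products: (batch, n_states, dim) x (batch, dim, 1) -> (batch, n_states, 1)
    pooled_mul_12 = np.matmul(states, np.reshape(input_t, (-1, shape_s, 1)))
    pooled_mul_12 = np.transpose(pooled_mul_12, [2, 0, 1])
    score = np.divide(pooled_mul_12, pooled_len_1 * pooled_len_2)
    # score = np.matmul(states, np.reshape(input_t, (-1, shape_s, 1)))
    print(score)
    score = np.transpose(score, [1, 0, 2])
    print(score)
    return score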
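Both functions above hard-code the number of state vectors (3) when tiling the input norms. As a minimal sketch of the same per-step computation without that constant, the version below relies on NumPy broadcasting and `np.einsum`. The name `cosine_step` is hypothetical and not part of the original code, and it returns shape `(batch, n_states)` rather than the `(batch, 1, n_states)` that `cosine22` produces.

```python
import numpy as np

def cosine_step(input_t, states):
    """Cosine similarity of each input row against its own state vectors.

    input_t: (batch, dim); states: (batch, n_states, dim)
    returns: (batch, n_states)
    """
    input_t = np.asarray(input_t, dtype=float)
    states = np.asarray(states, dtype=float)
    # Dot product of input b with every state vector j of the same batch item
    dots = np.einsum('bd,bjd->bj', input_t, states)
    in_norm = np.linalg.norm(input_t, axis=1, keepdims=True)  # (batch, 1)
    st_norm = np.linalg.norm(states, axis=2)                  # (batch, n_states)
    # Broadcasting expands in_norm across the n_states axis, so no tiling is needed
    return dots / (in_norm * st_norm)

# First time step of the sample data used later in this post
step_input = [[1, 2, 3, 4], [1, 8, 1, 0]]
step_state = [[[1, 0, 1, 1], [0, 1, 1, 1], [0, 2, 1, 1]],
              [[1, 0, 1, 1], [2, 1, 1, 1], [2, 0, 1, 1]]]
step_scores = cosine_step(step_input, step_state)
print(step_scores.shape)
```

Note that, like the original functions, this sketch divides by the norms directly, so an all-zero input or state vector would produce a division by zero.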

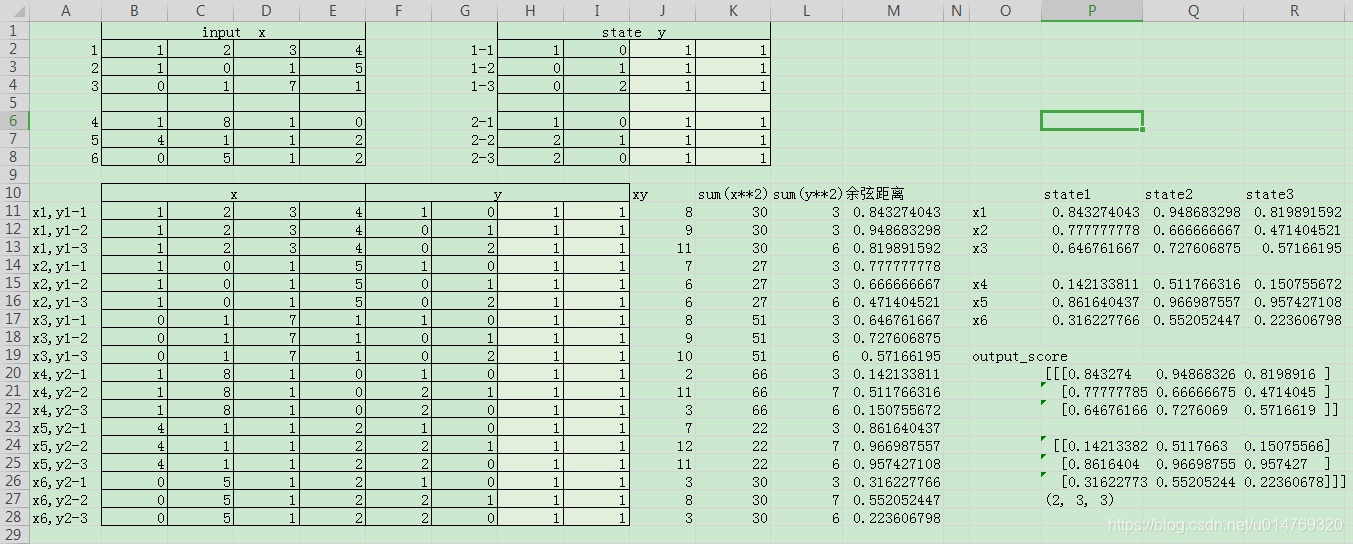

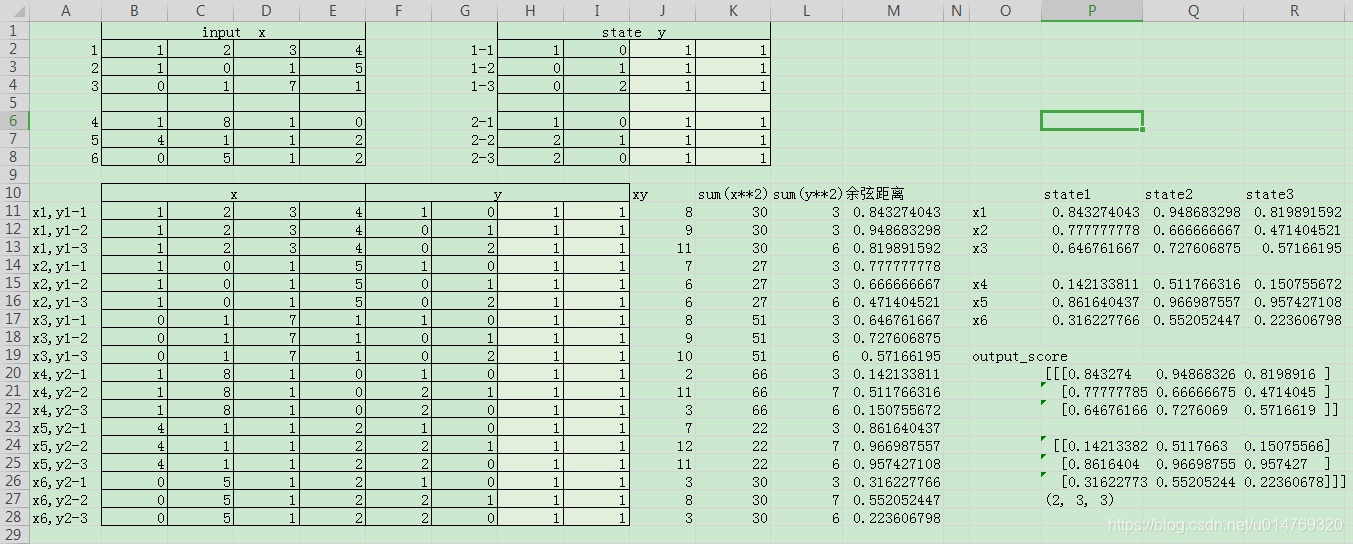

inputs = tf.constant([[[1, 2, 3, 4], [1, 0, 1, 5], [0, 1, 7, 1]],
                      [[1, 8, 1, 0], [4, 1, 1, 2], [0, 5, 1, 2]]], dtype="float32")
state = tf.constant([[[1, 0, 1, 1], [0, 1, 1, 1], [0, 2, 1, 1]],
                     [[1, 0, 1, 1], [2, 1, 1, 1], [2, 0, 1, 1]]], dtype="float32")

def lala(inputs, state):
    input_shape = K.int_shape(inputs)
    input_length = input_shape[1]
    # Switch to time-major layout: (batch, time, dim) -> (time, batch, dim)
    ndim = len(inputs.get_shape())
    axes = [1, 0] + list(range(2, ndim))
    inputs = tf.transpose(inputs, axes)
    time_steps = tf.shape(inputs)[0]
    print(inputs.shape)
    print(state.shape)
    output_ta = tensor_array_ops.TensorArray(
        dtype=inputs.dtype,
        size=time_steps,
        tensor_array_name='output_ta')
    input_ta = tensor_array_ops.TensorArray(
        dtype=inputs.dtype,
        size=time_steps,
        tensor_array_name='input_ta')
    cosin_score = tensor_array_ops.TensorArray(
        dtype=inputs.dtype,
        size=time_steps,
        tensor_array_name='cosin_score')
    input_ta = input_ta.unstack(inputs)
    time = tf.constant(0, dtype='int32', name='time')

    def _step(time, output_ta_t, cosin_score_t):
        current_input = input_ta.read(time)
        # Similarity of this time step's input against every state vector
        score = cosine2(current_input, state)
        cosin_score_t = cosin_score_t.write(time, score)
        output = current_input
        # output, new_states = self.step_function(current_input,
        #                                         tuple(states))
        # # internal logic
        # for state, new_state in zip(states, new_states):
        #     new_state.set_shape(state.get_shape())
        output_ta_t = output_ta_t.write(time, output)
        return time + 1, output_ta_t, cosin_score_t

    last_time, output, cos_score = control_flow_ops.while_loop(
        cond=lambda time, *_: time < time_steps,
        body=_step,
        loop_vars=(time, output_ta, cosin_score),
        parallel_iterations=32,
        swap_memory=True,
        maximum_iterations=input_length)
    outputs = output.stack()
    scores = cos_score.stack()
    # Read from the array returned by the loop, not the empty one created above
    last_output = output.read(last_time - 1)
    axes = [1, 0] + list(range(2, len(outputs.get_shape())))
    outputs = tf.transpose(outputs, axes)
    # (time, batch, 1, n_states) -> (1, batch, time, n_states)
    scores = tf.transpose(scores, [2, 1, 0, 3])
    return scores[0]

aaa=K.eval(lala(inputs,state))

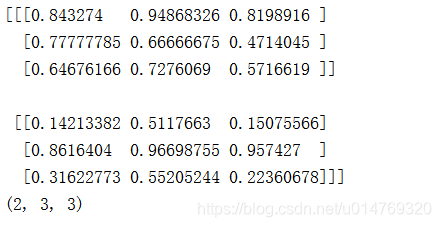

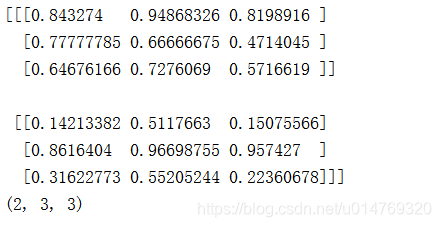

print(aaa)

print(aaa.shape)
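To sanity-check the printed result without running a TensorFlow graph, the same scores can be computed for all time steps at once in NumPy. This is only a sketch under the assumption that `aaa` is indexed as `(batch, time, state)`, which is what the transposes in `lala` imply; the names `inputs_np`, `state_np` and `cosine_all_steps` are hypothetical and do not appear in the original code.

```python
import numpy as np

# Same sample values as the tf.constant tensors above
inputs_np = np.array([[[1, 2, 3, 4], [1, 0, 1, 5], [0, 1, 7, 1]],
                      [[1, 8, 1, 0], [4, 1, 1, 2], [0, 5, 1, 2]]], dtype="float32")
state_np = np.array([[[1, 0, 1, 1], [0, 1, 1, 1], [0, 2, 1, 1]],
                     [[1, 0, 1, 1], [2, 1, 1, 1], [2, 0, 1, 1]]], dtype="float32")

def cosine_all_steps(inputs, states):
    """Cosine similarity of every time step against every state vector.

    inputs: (batch, time, dim); states: (batch, n_states, dim)
    returns: (batch, time, n_states)
    """
    # Dot product of input (b, t) with state (b, j)
    dots = np.einsum('btd,bjd->btj', inputs, states)
    in_norm = np.linalg.norm(inputs, axis=2)[:, :, None]  # (batch, time, 1)
    st_norm = np.linalg.norm(states, axis=2)[:, None, :]  # (batch, 1, n_states)
    return dots / (in_norm * st_norm)

expected = cosine_all_steps(inputs_np, state_np)
print(expected.shape)  # (2, 3, 3)
```

If the loop-based version behaves as intended, `np.allclose(aaa, expected, atol=1e-5)` should hold when both are available in the same session.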

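This post walks through a cosine similarity computation implemented with TensorFlow and Keras: defining the input and state tensors, then computing the similarity step by step with matrix operations. The approach works on batched data and can serve as a building block for applications such as text similarity and recommender systems.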
