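1. Application Scenarios

- HBase: distributed database

- MapReduce: distributed computing

  - performs the distributed computation

  - reads from and writes to distributed storage

- How the storage layers differ:

  - HDFS: a file system that manages data as files; write once, read many times.

  - Hive: a data warehouse that manages data as tables, but the underlying data is still files; write once, read many times.

  - HBase: a database that manages data as tables, but it serves data from memory first, which gives random, real-time read and write access.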

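2. Integration Principle

MapReduce reaches different storage systems through pluggable InputFormat and OutputFormat classes; integrating with HBase means using the Table variants.

- Reading: InputFormat

  - Text/File (e.g. TextInputFormat): reads files

  - DB (DBInputFormat): reads a database

  - Table (TableInputFormat): reads HBase

- Writing: OutputFormat

  - Text/File (e.g. TextOutputFormat): writes files

  - DB (DBOutputFormat): writes to a database

  - Table (TableOutputFormat): writes to HBase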
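As a concrete case of an InputFormat: TextInputFormat turns a file into one record per line, keyed by the byte offset at which that line starts, which is why a mapper reading text files takes a `LongWritable` key and a `Text` value. The plain-Java sketch below imitates that on an in-memory string. It is a simplified model with a hypothetical class name (`TextRecordSketch`), not Hadoop's own record reader: it ignores input splits, compression and `"\r\n"` line endings.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of what TextInputFormat produces:
// one record per line, keyed by the byte offset where the line starts.
// It ignores input splits, compression and "\r\n" line endings.
final class TextRecordSketch {

    /** One (key, value) record: the line's starting byte offset and its text. */
    static final class Record {
        final long offset;
        final String line;

        Record(long offset, String line) {
            this.offset = offset;
            this.line = line;
        }
    }

    static List<Record> read(String content) {
        List<Record> records = new ArrayList<>();
        long offset = 0;
        for (String line : content.split("\n")) {
            records.add(new Record(offset, line));
            // Advance by the line's UTF-8 byte length plus the '\n' separator.
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return records;
    }
}
```

For the input `"r1\ta\nr2\tbb\n"` this yields the records (0, `r1\ta`) and (5, `r2\tbb`): the second line starts after the 4 bytes of the first line plus its newline.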

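3. Reading HBase Data

- Requirement: read the data in the `stu` table (namespace `student`) and write it to files

- Analysis

  - Input: TableInputFormat

  - Output: TextOutputFormat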

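- Development rules for reading HBase. Normally the input and the Map side of a job are configured like this:

```java
job.setInputFormatClass(XxxInputFormat.class);
XxxInputFormat.setInputPaths(job, inputPath);
job.setMapperClass(XxxMapper.class);
job.setMapOutputKeyClass(XxxKey.class);
job.setMapOutputValueClass(XxxValue.class);
```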

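- When reading HBase, HBase already wraps this configuration for MapReduce in a helper method.

- Calling it with the right arguments configures both the input (TableInputFormat, table, Scan) and the Map side:

```java
TableMapReduceUtil.initTableMapperJob(
        tableName,            // table to read, e.g. "namespace:table"
        scan,                 // Scan that selects the rows/columns to read
        mapperClass,          // a subclass of TableMapper
        mapOutputKeyClass,
        mapOutputValueClass,
        job
);
```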

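- The Mapper class must extend TableMapper.

  - Reading HBase uses TableInputFormat, which produces:

    - Key: ImmutableBytesWritable, a special byte-array type holding the rowkey

    - Value: Result, which holds all the data of one rowkey

    - Each rowkey becomes one key/value pair, and map is called once per pair.

  - Generic types:

    - KeyIn: fixed (ImmutableBytesWritable), cannot be specified

    - ValueIn: fixed (Result), cannot be specified

    - KeyOut: chosen by you through TableMapper<KEYOUT, VALUEOUT>

    - ValueOut: chosen by you through TableMapper<KEYOUT, VALUEOUT>

- Everything else (Shuffle and Reduce) is written the same way as before.

- Implementation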

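```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableMapper;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class ReadHbaseTable extends Configured implements Tool {

    @Override
    public int run(String[] args) throws Exception {
        Job job = Job.getInstance(this.getConf(), "read");
        job.setJarByClass(ReadHbaseTable.class);

        // Full-table scan; fetch rows in larger batches and skip the block cache,
        // the settings usually recommended for MapReduce scans
        Scan scan = new Scan();
        scan.setCaching(500);
        scan.setCacheBlocks(false);

        // Configures TableInputFormat, the table to read, the Mapper and the map output types
        TableMapReduceUtil.initTableMapperJob(
                "student:stu",      // namespace:table
                scan,
                ReadHbaseMap.class,
                Text.class,         // map output key
                Text.class,         // map output value
                job
        );

        // Map-only job: map output is written directly by TextOutputFormat (the default)
        job.setNumReduceTasks(0);
        TextOutputFormat.setOutputPath(job, new Path("datas/output/hbase"));

        return job.waitForCompletion(true) ? 0 : -1;
    }

    public static void main(String[] args) throws Exception {
        // HBaseConfiguration.create() also loads hbase-site.xml (ZooKeeper quorum, etc.)
        Configuration conf = HBaseConfiguration.create();
        int status = ToolRunner.run(conf, new ReadHbaseTable(), args);
        System.exit(status);
    }

    // KEYIN/VALUEIN are fixed to ImmutableBytesWritable/Result; only the output types are chosen here
    public static class ReadHbaseMap extends TableMapper<Text, Text> {
        private final Text outputKey = new Text();
        private final Text outputValue = new Text();

        @Override
        protected void map(ImmutableBytesWritable key, Result value, Context context)
                throws IOException, InterruptedException {
            // Decode only the valid slice (offset/length) of the backing array
            String rowkey = Bytes.toString(key.get(), key.getOffset(), key.getLength());
            this.outputKey.set(rowkey);
            // One output record per cell: family, qualifier, value, timestamp
            for (Cell cell : value.rawCells()) {
                String family = Bytes.toString(CellUtil.cloneFamily(cell));
                String column = Bytes.toString(CellUtil.cloneQualifier(cell));
                String val = Bytes.toString(CellUtil.cloneValue(cell));
                long ts = cell.getTimestamp();
                this.outputValue.set(family + "\t" + column + "\t" + val + "\t" + ts);
                context.write(this.outputKey, this.outputValue);
            }
        }
    }
}
```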
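The data flow of this job can be modeled in plain Java to see what ends up in the output files. The sketch below is a simplified model, not HBase code: strings stand in for byte arrays, a list of cells sorted by rowkey stands in for the Scan, and the names `ReadJobSketch` and `Cell` are hypothetical. It illustrates the two rules from the analysis: cells are bundled per rowkey and map runs once per rowkey; and, since there is no reduce phase, each cell becomes one output line, which TextOutputFormat writes as key, a tab (its default separator), then value.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of the ReadHbaseTable job (not HBase code):
// strings replace byte arrays, and a List<Cell> sorted by rowkey replaces the Scan.
final class ReadJobSketch {

    static final class Cell {
        final String row, family, qualifier, value;
        final long ts;

        Cell(String row, String family, String qualifier, String value, long ts) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.value = value;
            this.ts = ts;
        }
    }

    /** Bundles cells by rowkey in scan order; each entry corresponds to one map() call. */
    static Map<String, List<Cell>> groupByRow(List<Cell> scannedCells) {
        Map<String, List<Cell>> rows = new LinkedHashMap<>();
        for (Cell c : scannedCells) {
            rows.computeIfAbsent(c.row, k -> new ArrayList<>()).add(c);
        }
        return rows;
    }

    /** Mirrors ReadHbaseMap plus TextOutputFormat: one "key\tvalue" line per cell. */
    static List<String> outputLines(List<Cell> scannedCells) {
        List<String> lines = new ArrayList<>();
        for (Map.Entry<String, List<Cell>> row : groupByRow(scannedCells).entrySet()) {
            for (Cell c : row.getValue()) {
                String value = c.family + "\t" + c.qualifier + "\t" + c.value + "\t" + c.ts;
                lines.add(row.getKey() + "\t" + value); // "\t" = TextOutputFormat's default separator
            }
        }
        return lines;
    }
}
```

So a row with three columns produces one map call but three lines in the output file, each starting with the rowkey.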

4. Storing Data in HBase

- Requirement: write the data in a file into HBase

- Analysis

  - Input: TextInputFormat

  - Output: TableOutputFormat

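- Rules for writing to HBase

  - In the Driver class, reduce and output are not configured the usual way; instead, call the helper HBase provides to configure both.

  - Usual way:

```java
job.setReducerClass(XxxReducer.class);
job.setOutputKeyClass(XxxKey.class);
job.setOutputValueClass(XxxValue.class);
job.setOutputFormatClass(XxxOutputFormat.class);
XxxOutputFormat.setOutputPath(job, outputPath);
```

  - With HBase:

```java
TableMapReduceUtil.initTableReducerJob(
        tableName,     // target table, e.g. "namespace:table"
        reducerClass,  // a subclass of TableReducer
        job
);
```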

  - The Reducer class must extend TableReducer.

  - Everything else is written the same way as before.

- Implementation

  - Write the code and package it as a jar:

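```java
package cn.hanjiaxiaozhi.hbase.mr;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class WriteHbaseTable extends Configured implements Tool {

    @Override
    public int run(String[] args) throws Exception {
        Job job = Job.getInstance(this.getConf(), "write");
        job.setJarByClass(WriteHbaseTable.class);

        // Input: text files (TextInputFormat is the default input format)
        TextInputFormat.setInputPaths(job, new Path(args[0]));
        job.setMapperClass(WriteToHbaseMap.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Put.class);

        // Configures TableOutputFormat, the target table and the Reducer
        TableMapReduceUtil.initTableReducerJob(
                "student:mrwrite",
                WriteToHbaseReduce.class,
                job
        );

        return job.waitForCompletion(true) ? 0 : -1;
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = HBaseConfiguration.create();
        int status = ToolRunner.run(conf, new WriteHbaseTable(), args);
        System.exit(status);
    }

    // Expected input line: rowkey \t name \t age \t sex
    public static class WriteToHbaseMap extends Mapper<LongWritable, Text, Text, Put> {
        private static final byte[] FAMILY = Bytes.toBytes("info");
        private final Text rowkey = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String[] split = value.toString().split("\t");
            if (split.length < 4) {
                return; // skip malformed lines instead of failing with ArrayIndexOutOfBoundsException
            }
            String row = split[0];
            String name = split[1];
            String age = split[2];
            String sex = split[3];
            this.rowkey.set(row);

            // One Put per column, all for the same rowkey
            Put putname = new Put(Bytes.toBytes(row));
            putname.addColumn(FAMILY, Bytes.toBytes("name"), Bytes.toBytes(name));
            context.write(rowkey, putname);

            Put putage = new Put(Bytes.toBytes(row));
            putage.addColumn(FAMILY, Bytes.toBytes("age"), Bytes.toBytes(age));
            context.write(rowkey, putage);

            Put putsex = new Put(Bytes.toBytes(row));
            putsex.addColumn(FAMILY, Bytes.toBytes("sex"), Bytes.toBytes(sex));
            context.write(rowkey, putsex);
        }
    }

    // TableReducer<KEYIN, VALUEIN, KEYOUT>: the output value type is fixed to Mutation (e.g. Put)
    public static class WriteToHbaseReduce extends TableReducer<Text, Put, Text> {
        @Override
        protected void reduce(Text key, Iterable<Put> values, Context context)
                throws IOException, InterruptedException {
            // TableOutputFormat ignores the key and applies each Put to the table
            for (Put value : values) {
                context.write(key, value);
            }
        }
    }
}
```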
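The parsing step of WriteToHbaseMap can be isolated and checked without a cluster. The sketch below assumes the input layout the mapper expects (rowkey, name, age, sex, separated by tabs); the class name `LineParserSketch` is hypothetical. It returns the rowkey plus the qualifier-to-value pairs that become Puts in the `info` family, or `null` for a line with too few fields, which a mapper can then skip.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper isolating the mapper's parsing logic.
// Assumed line layout: rowkey \t name \t age \t sex
final class LineParserSketch {

    static final String[] QUALIFIERS = {"name", "age", "sex"};

    /** Parsed line: the rowkey plus qualifier -> value pairs for the "info" family. */
    static final class ParsedRow {
        final String rowkey;
        final Map<String, String> columns;

        ParsedRow(String rowkey, Map<String, String> columns) {
            this.rowkey = rowkey;
            this.columns = columns;
        }
    }

    /** Returns null when the line has fewer fields than rowkey + qualifiers. */
    static ParsedRow parse(String line) {
        String[] split = line.split("\t");
        if (split.length < 1 + QUALIFIERS.length) {
            return null;
        }
        Map<String, String> columns = new LinkedHashMap<>();
        for (int i = 0; i < QUALIFIERS.length; i++) {
            columns.put(QUALIFIERS[i], split[i + 1]);
        }
        return new ParsedRow(split[0], columns);
    }
}
```

For a made-up line such as `"001\ta\t18\tm"`, this gives rowkey `001` with `name=a`, `age=18`, `sex=m`; each pair would become one `addColumn` call in the `info` family.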

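Create the target table in the HBase shell:

```
create 'student:mrwrite','info'
```

Upload the input file to HDFS and submit the job:

```shell
hdfs dfs -mkdir -p /user/hbaseinput/
hdfs dfs -put hbaseinput.txt /user/hbaseinput/
yarn jar hbase.jar cn.hanjiaxiaozhi.hbase.mr.WriteHbaseTable /user/hbaseinput/
```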

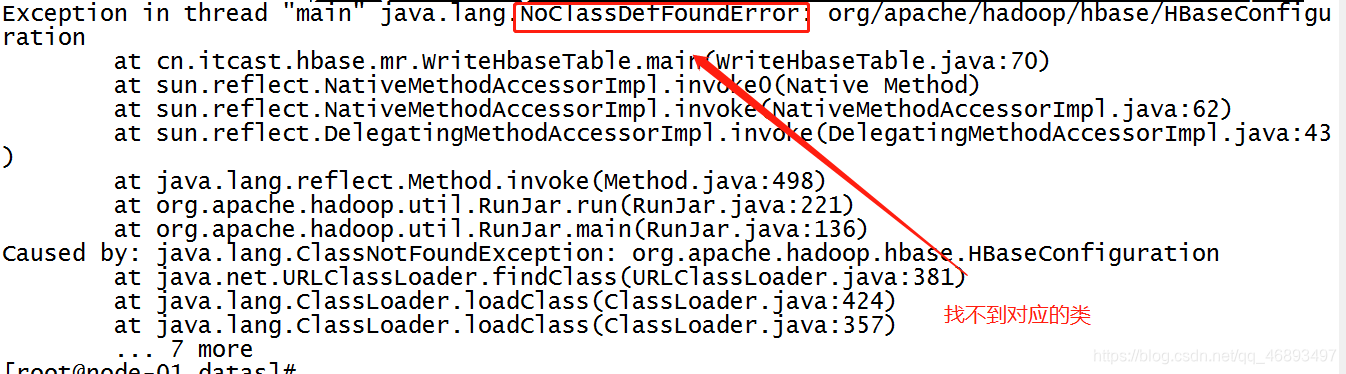

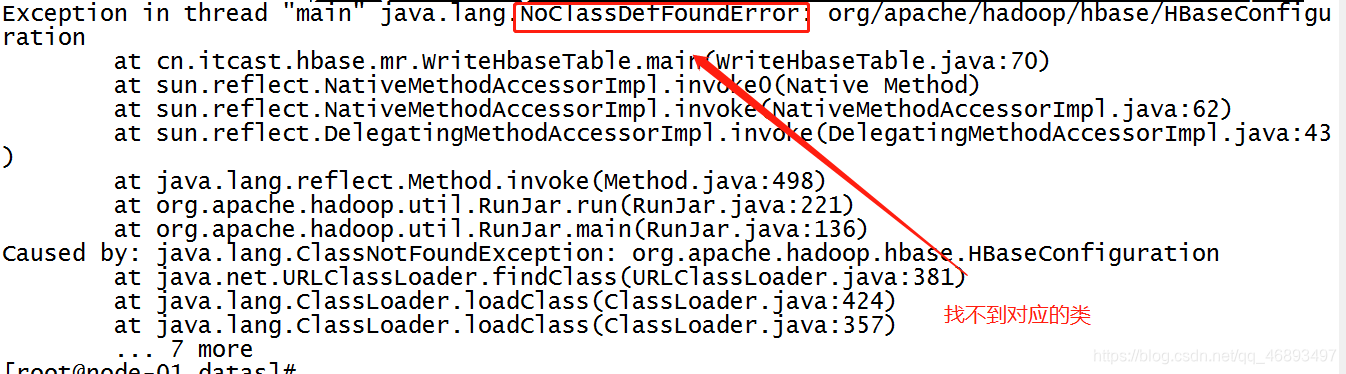

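- Error: the job fails as shown above.

- Analysis: this kind of error usually has one of two causes:

  - a class conflict on the classpath (conflicting jars);

  - the class is not on the classpath at all (a missing jar).

  Here the HBase program is launched through Hadoop, whose classpath does not include HBase's jars, so the HBase jars are bound to be missing.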

- Solution: `hbase mapredcp` prints the jars an HBase MapReduce job needs:

```shell
hbase mapredcp
```

```
/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-client-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-server-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-prefix-tree-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/htrace-core-3.2.0-incubating.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-common-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/zookeeper-3.4.5-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/guava-12.0.1.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-hadoop-compat-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/netty-all-4.0.23.Final.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-protocol-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/protobuf-java-2.5.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/metrics-core-2.2.0.jar
```

- Add these jar paths to Hadoop's runtime classpath by exporting HADOOP_CLASSPATH:

```shell
export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-client-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-server-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-prefix-tree-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/htrace-core-3.2.0-incubating.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-common-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/zookeeper-3.4.5-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/guava-12.0.1.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-hadoop-compat-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/netty-all-4.0.23.Final.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/hbase-protocol-1.2.0-cdh5.14.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/protobuf-java-2.5.0.jar:/export/servers/hbase-1.2.0-cdh5.14.0/lib/metrics-core-2.2.0.jar
```

  The same can be done without copying the list by hand: `export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$(hbase mapredcp)`. Either way, the export only applies to the current shell session.
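To tell the two causes apart, it can help to check whether a given class is visible to a JVM and what a classpath string actually contains. The helper below is a hypothetical sketch (`ClasspathCheck` is not part of Hadoop or HBase): `isPresent` tries to load a class by name without initializing it, and `entries` splits a classpath string, such as the colon-separated output of `hbase mapredcp`, into its individual jar paths.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical diagnostic helpers for "class not found" errors:
// check whether a class can be loaded, and list the entries of a classpath string.
final class ClasspathCheck {

    /** True if the class can be loaded (without running its static initializers). */
    static boolean isPresent(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    /** Splits a classpath on the platform separator (":" on Linux), dropping empty entries. */
    static List<String> entries(String classpath) {
        List<String> result = new ArrayList<>();
        for (String entry : classpath.split(File.pathSeparator)) {
            if (!entry.isEmpty()) {
                result.add(entry);
            }
        }
        return result;
    }
}
```

Run with the same classpath as the failing job, `isPresent("org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil")` returning `false` would point to a missing jar rather than a conflict.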

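This article covered integrating the HBase distributed database with MapReduce distributed computing: the application scenarios, the integration principle, the development rules and implementation for reading HBase data into files and for storing file data in HBase, and an analysis of the jar conflict and missing-jar problems that appear when running the job, together with the fix.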
