Paper: Focaler-IoU: More Focused Intersection over Union Loss

Code: GitHub

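Abstract:

Bounding box regression plays a key role in object detection, and a detector's localization accuracy depends largely on its bounding box regression loss. Existing work improves regression by exploiting the geometric relationships between boxes, but it ignores how the distribution of hard and easy samples affects bounding box regression. In this paper, we analyze how that distribution affects regression results and then propose Focaler-IoU, which improves detector performance across detection tasks by focusing on different regression samples. Finally, we run comparative experiments against existing state-of-the-art detectors and regression methods on different detection tasks, and detection performance improves further with the proposed method.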

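Analysis:

Sample imbalance occurs in all kinds of object detection tasks. Samples can be divided into hard samples and easy samples according to how difficult the targets are to detect. In terms of object scale, ordinary detection targets can be regarded as easy samples, while extremely small targets, which are difficult to localize precisely, can be regarded as hard samples. For detection tasks dominated by easy samples, focusing on easy samples during bounding box regression helps improve detection performance. For tasks with a high proportion of hard samples the opposite holds, and the bounding box regression should focus on hard samples.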

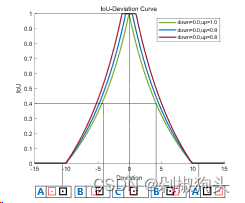

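In the figure, the left and right panels show the linear interval mapping curves for focusing on hard samples and on easy samples, respectively.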

Focaler-IoU:

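To focus on different regression samples in different detection tasks, we use linear interval mapping to reconstruct the IoU loss, which improves bounding box regression. The formula is as follows:

$$
IoU^{focaler} =
\begin{cases}
0, & IoU < d \\
\dfrac{IoU - d}{u - d}, & d \le IoU \le u \\
1, & IoU > u
\end{cases}
$$

where $IoU^{focaler}$ is the reconstructed Focaler-IoU, $IoU$ is the original IoU value, and $[d, u] \in [0, 1]$. By adjusting the values of $d$ and $u$, $IoU^{focaler}$ can be made to focus on different regression samples.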
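The mapping can be sketched in plain Python to see how the interval $[d, u]$ selects samples. This is a minimal sketch, not the authors' code: the names `focaler_iou` and `focaler_slope` are hypothetical, it assumes $d < u$ so the division is defined, and the two example intervals are illustrative values, not settings from the paper. Inside $[d, u]$ the mapped value rises with slope $1/(u - d)$; outside it the value is constant, so those samples get no gradient through this term.

```python
def focaler_iou(iou: float, d: float, u: float) -> float:
    """Piecewise linear interval mapping of an IoU value onto [0, 1]."""
    # Assumption of this sketch: d < u, otherwise (u - d) would be zero.
    if not 0.0 <= d < u <= 1.0:
        raise ValueError("expected 0 <= d < u <= 1")
    if iou < d:
        return 0.0  # below the interval: mapped to 0 (full loss, zero slope)
    if iou > u:
        return 1.0  # above the interval: mapped to 1 (zero loss, zero slope)
    return (iou - d) / (u - d)  # inside the interval: stretched linearly


def focaler_slope(iou: float, d: float, u: float) -> float:
    """Derivative of the mapping w.r.t. IoU (interval endpoints counted as inside)."""
    return 1.0 / (u - d) if d <= iou <= u else 0.0


# Illustrative intervals only, not values taken from the paper.
low_interval = (0.0, 0.5)   # only IoU in [0, 0.5] keeps a nonzero slope
high_interval = (0.5, 1.0)  # only IoU in [0.5, 1] keeps a nonzero slope

for iou in (0.2, 0.5, 0.8):
    print(iou, focaler_iou(iou, *low_interval), focaler_iou(iou, *high_interval))
```

With the low interval, every sample whose IoU exceeds 0.5 is already mapped to 1, so the training signal concentrates on low-IoU samples; the high interval does the reverse. Treating low-IoU samples as the "hard" ones is an assumption of this illustration.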

The loss is defined as follows:

$$
L_{Focaler-IoU} = 1 - IoU^{focaler}
$$
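Taking the loss as $L_{Focaler-IoU} = 1 - IoU^{focaler}$, here is a sketch for a single pair of axis-aligned boxes. Assumptions: boxes are `(x1, y1, x2, y2)` float tuples with positive width and height, the helper names `box_iou` and `focaler_iou_loss` are hypothetical, and a real training loss would work on batched tensors so gradients can flow. The three-case mapping is written here as a clamp, which yields the same values.

```python
from typing import Sequence

Box = Sequence[float]  # (x1, y1, x2, y2), assumed x2 > x1 and y2 > y1


def box_iou(a: Box, b: Box) -> float:
    """Plain IoU of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def focaler_iou_loss(pred: Box, target: Box, d: float, u: float) -> float:
    """L = 1 - IoU^focaler for one box pair (hypothetical helper)."""
    iou = box_iou(pred, target)
    # clamp((IoU - d) / (u - d), 0, 1) reproduces the three-case definition
    mapped = min(max((iou - d) / (u - d), 0.0), 1.0)
    return 1.0 - mapped


pred, target = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
print(box_iou(pred, target))                     # IoU = 1/7
print(focaler_iou_loss(pred, target, 0.0, 1.0))  # [0, 1] gives the plain IoU loss
print(focaler_iou_loss(pred, target, 0.5, 1.0))  # IoU below d, so the loss is 1
```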

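Applying Focaler-IoU to existing IoU-based bounding box regression losses, $L_{Focaler-GIoU}$, $L_{Focaler-DIoU}$, $L_{Focaler-CIoU}$, $L_{Focaler-EIoU}$, and $L_{Focaler-SIoU}$ are defined as follows:

$$
\begin{aligned}
L_{Focaler-GIoU} &= L_{GIoU} + IoU - IoU^{focaler} \\
L_{Focaler-DIoU} &= L_{DIoU} + IoU - IoU^{focaler} \\
L_{Focaler-CIoU} &= L_{CIoU} + IoU - IoU^{focaler} \\
L_{Focaler-EIoU} &= L_{EIoU} + IoU - IoU^{focaler} \\
L_{Focaler-SIoU} &= L_{SIoU} + IoU - IoU^{focaler}
\end{aligned}
$$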
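The rule $L_{Focaler-X} = L_X + IoU - IoU^{focaler}$ replaces the $1 - IoU$ term inside an existing loss with $1 - IoU^{focaler}$ while keeping that loss's extra penalty term. The sketch below applies it to GIoU and DIoU only; CIoU, EIoU and SIoU would follow the same pattern with their own penalties. All names are hypothetical, boxes are assumed to be valid `(x1, y1, x2, y2)` float tuples, and the GIoU and DIoU formulas are the standard ones, not code from the Focaler-IoU repository.

```python
from typing import Callable, Sequence, Tuple

Box = Sequence[float]  # (x1, y1, x2, y2), assumed x2 > x1 and y2 > y1


def _area(b: Box) -> float:
    return (b[2] - b[0]) * (b[3] - b[1])


def _iou_union(a: Box, b: Box) -> Tuple[float, float]:
    """Return (IoU, union area) of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = _area(a) + _area(b) - inter
    return (inter / union if union > 0 else 0.0), union


def _enclosing(a: Box, b: Box) -> Box:
    """Smallest axis-aligned box containing both boxes."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def giou_loss(pred: Box, target: Box) -> float:
    """L_GIoU = 1 - IoU + (|C| - |union|) / |C|, C = enclosing box."""
    iou, union = _iou_union(pred, target)
    c_area = _area(_enclosing(pred, target))
    return 1.0 - iou + (c_area - union) / c_area


def diou_loss(pred: Box, target: Box) -> float:
    """L_DIoU = 1 - IoU + rho^2 / c^2 (centre distance over enclosing diagonal)."""
    iou, _ = _iou_union(pred, target)
    c = _enclosing(pred, target)
    c2 = (c[2] - c[0]) ** 2 + (c[3] - c[1]) ** 2
    dx = (pred[0] + pred[2] - target[0] - target[2]) / 2.0
    dy = (pred[1] + pred[3] - target[1] - target[3]) / 2.0
    return 1.0 - iou + (dx * dx + dy * dy) / c2


def focaler_variant(base_loss: Callable[[Box, Box], float],
                    pred: Box, target: Box, d: float, u: float) -> float:
    """L_Focaler-X = L_X + IoU - IoU^focaler, mapping written as a clamp."""
    iou, _ = _iou_union(pred, target)
    iou_focaler = min(max((iou - d) / (u - d), 0.0), 1.0)
    return base_loss(pred, target) + iou - iou_focaler


pred, target = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
for name, loss in (("GIoU", giou_loss), ("DIoU", diou_loss)):
    # d = 0, u = 1 leaves IoU unchanged, so the Focaler variant equals the base loss
    print(name, loss(pred, target),
          focaler_variant(loss, pred, target, 0.0, 1.0),
          focaler_variant(loss, pred, target, 0.5, 1.0))
```

Passing the base loss as a function keeps the Focaler adjustment in one place, so adding another IoU-based loss only requires writing its base formula.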

Experiments:

PASCAL VOC on YOLOv8

AI-TOD on YOLOv5

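Conclusion:

In this paper, we analyzed how the distribution of hard and easy samples affects object detection. When hard samples dominate, focusing on them improves detection performance; when easy samples make up the larger share, the opposite holds. We then proposed Focaler-IoU, which reconstructs the original IoU loss through linear interval mapping in order to focus on hard or easy samples. Finally, comparative experiments showed that the proposed method effectively improves detection performance.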

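This article examines how hard and easy samples affect bounding box regression in object detection and presents Focaler-IoU, which adjusts the IoU loss through linear interval mapping so that it can focus on easy or hard samples as different tasks require. Experiments show that the method effectively improves detection performance.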
