准备工作

- 导入库

import re #正则表达式

import time #程序睡眠防止反爬

import requests #访问url

from wordcloud import WordCloud #词云库

from wordcloud import STOPWORDS, ImageColorGenerator #评论中的一些词语在分割后可能会产生歧义, 需要将其停用

import matplotlib.pyplot as plt #绘图库

import matplotlib #设定绘图风格

from PIL import Image #导入词云背景图所需要的库

import numpy as np #不用我说了吧

from imageio import imread #导入词云背景图所备用的库

import jieba #中文分词库

- 目标

https://www.mafengwo.cn/search/q.php?q=%E4%BC%8A%E7%8A%81&seid=F5A9009A-07AE-4577-A84B-F653CB21522B

主函数体

import re

import time

import requests

from wordcloud import WordCloud

from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator

import matplotlib.pyplot as plt

import matplotlib

from PIL import Image

import numpy as np

from imageio import imread

import jieba

#评论内容所在的url,?后面是get请求需要的参数内容

def getComment(n, http):

Comment = str()

comment_url='http://pagelet.mafengwo.cn/poi/pagelet/poiCommentListApi?'

for i in range(len(http)):

poi = http[i][http[i].rfind("/") + 1 : http[i].rfind(".")]

requests_headers={

'Referer': http[i],

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36'

}#请求头

for num in range(1, n):

requests_data={

'params': '{"poi_id":'+ poi + ',"page":"%d","just_comment":1}' % (num) #经过测试只需要用params参数就能爬取内容

}

response =requests.get(url=comment_url,headers=requests_headers,params=requests_data)

if 200 == response.status_code:

page = response.content.decode('unicode-escape', 'ignore').encode('utf-8', 'ignore').decode('utf-8')#爬取页面并且解码

page = page.replace('\\/', '/')#将\/转换成/

#日期列表

date_pattern = r'<a class="btn-comment _j_comment" title="添加评论">评论</a>.*?\n.*?<span class="time">(.*?)</span>'

date_list = re.compile(date_pattern).findall(page)

#星级列表

star_pattern = r'<span class="s-star s-star(\d)"></span>'

star_list = re.compile(star_pattern).findall(page)

#评论列表

comment_pattern = r'<p class="rev-txt">([\s\S]*?)</p>'

comment_list = re.compile(comment_pattern).findall(page)

for num in range(0, len(date_list)):

#日期

date = date_list[num]

#星级评分

star = star_list[num]

#评论内容,处理一些标签和符号

comment = comment_list[num]

comment = str(comment).replace(' ', '')

comment = comment.replace('<br>', '')

comment = comment.replace('<br />', '')

comment = comment.replace('<br/>', '')

print("日期:{0}, 评分:{1}, 评论:{2}".format(date, star, comment),num)

Comment = Comment + comment

else:

print("爬取失败")

return Comment

def wordcloud_fig(comm, inpath, outpath, color, dpi):

seg =" ".join(jieba.lcut(comm, cut_all = False, HMM=True))

word = ["田", "不","对", "上", "了", "的",

"我", "在", "是", "也", "我们", "都",

"这里", "这", "有", "去", "一个", "就",

"没有", "已经","台", "阿富汗", "才", "知道", "回回", "时候"]

jieba.add_word("喀拉峻",freq = 20000, tag = None)

mask = imread(inpath, pilmode="RGB")

stopwords = set(STOPWORDS)

for num in word:

stopwords.add(num)

wordcloud = WordCloud(scale=8, font_path="D:\\Desktop\\SimSun.ttf",width=2000, height=1860,

background_color=None, stopwords=stopwords, mask=mask, max_font_size=None,

random_state=None, relative_scaling='auto',repeat=False, mode='RGBA',colormap=color) #设置词云属性

wordcloud.generate(seg) #导入词汇产生词云

%pylab inline

plt.imshow(wordcloud, interpolation='bilinear', cmap=plt.cm.gray)

plt.axis("off")

plt.savefig(outpath, dpi=dpi) #dpi越高图片越清晰

plt.show()

以伊犁哈萨克自治州为例

将主函数体封装后, 我们选取了一下几个伊犁哈萨克自治州的热门景点(见url)

http_yili = [

"https://www.mafengwo.cn/poi/6583819.html",

"https://www.mafengwo.cn/poi/2026.html",

"http://www.mafengwo.cn/poi/27788.html",

"http://www.mafengwo.cn/poi/27802.html",

"http://www.mafengwo.cn/poi/7693277.html",

"http://www.mafengwo.cn/poi/28099.html",

"http://www.mafengwo.cn/poi/28069.html"

]

com_yili = getComment(n=10, http=http_yili) #将爬取到的评论信息进行分词

wordcloud_fig(com_yili, "D:\\Desktop\\ciyun\\output_fig\\yili2.png") #绘制词云图并导出

其中伊犁哈萨克自治州的行政区划图可以在这里下载(地图选择器)

如果下载json格式还需要一些列的转换, 因此我们可以下载SVG格式, 然后再去svg to png这个网站将svg格式转为png格式, 这样导入的图片就可以用于制作词云的背景图片. 值得注意的时, 如果直接将转换完成的png图片用作词云背景会导致wordcloud库识别错误, 因为行政区划图没有明确的颜色差, 会导致词云绘制到区域外部而不是内部, 解决办法是在Windows自带的画图中将行政区域填充为黑色:

以这种形式作为词云背景图就不会发生错误.

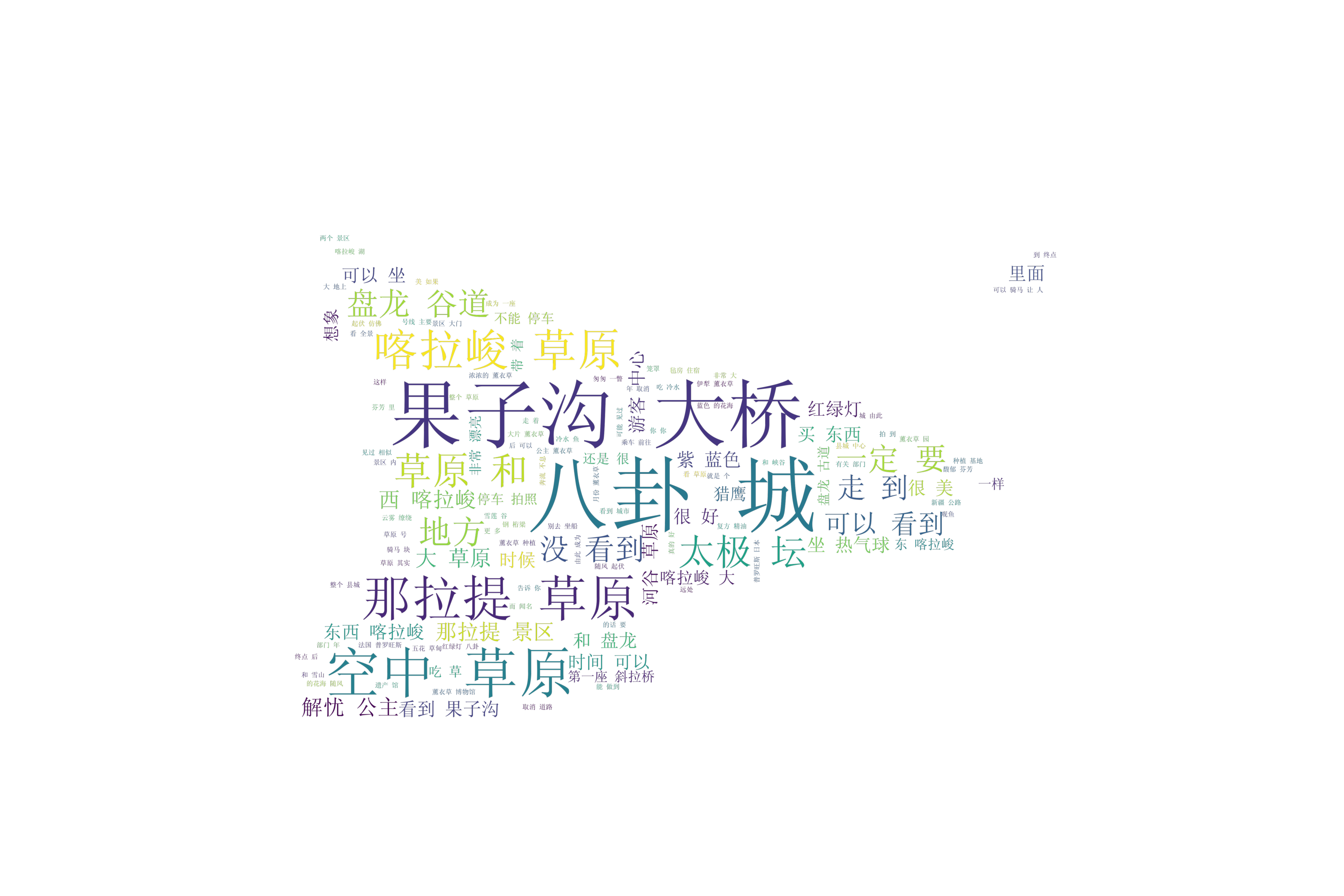

- 词云结果

该博客主要介绍了如何通过Python爬虫获取伊犁哈萨克自治州热门景点的游客评论,并利用jieba分词进行词云图的绘制。通过对评论内容的抓取,展示了游客的评价、评分以及日期等信息,最后生成的词云图揭示了游客关注的热点话题。

该博客主要介绍了如何通过Python爬虫获取伊犁哈萨克自治州热门景点的游客评论,并利用jieba分词进行词云图的绘制。通过对评论内容的抓取,展示了游客的评价、评分以及日期等信息,最后生成的词云图揭示了游客关注的热点话题。

1681

1681

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?